1.PKanalix documentation

Version 2024

This documentation is for PKanalix.

©Lixoft

PKanalix performs analyses on PK data sets, including:

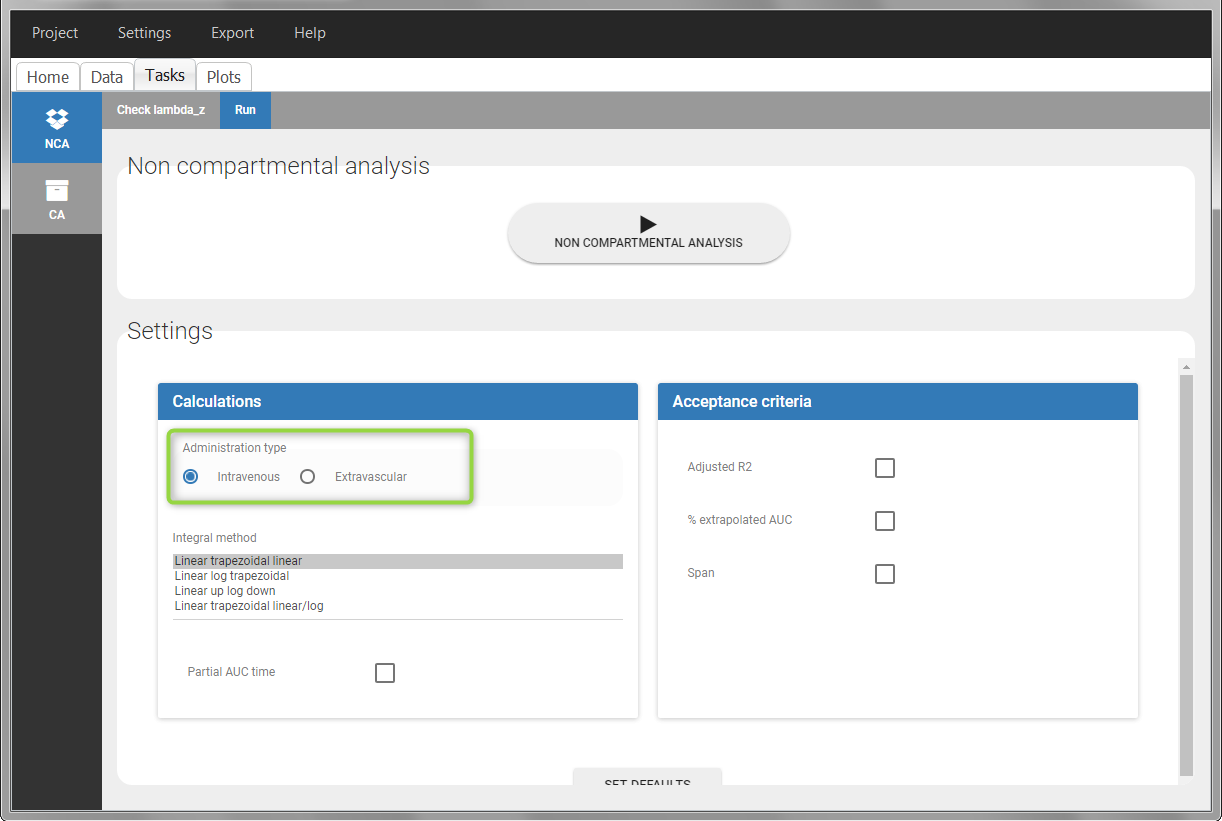

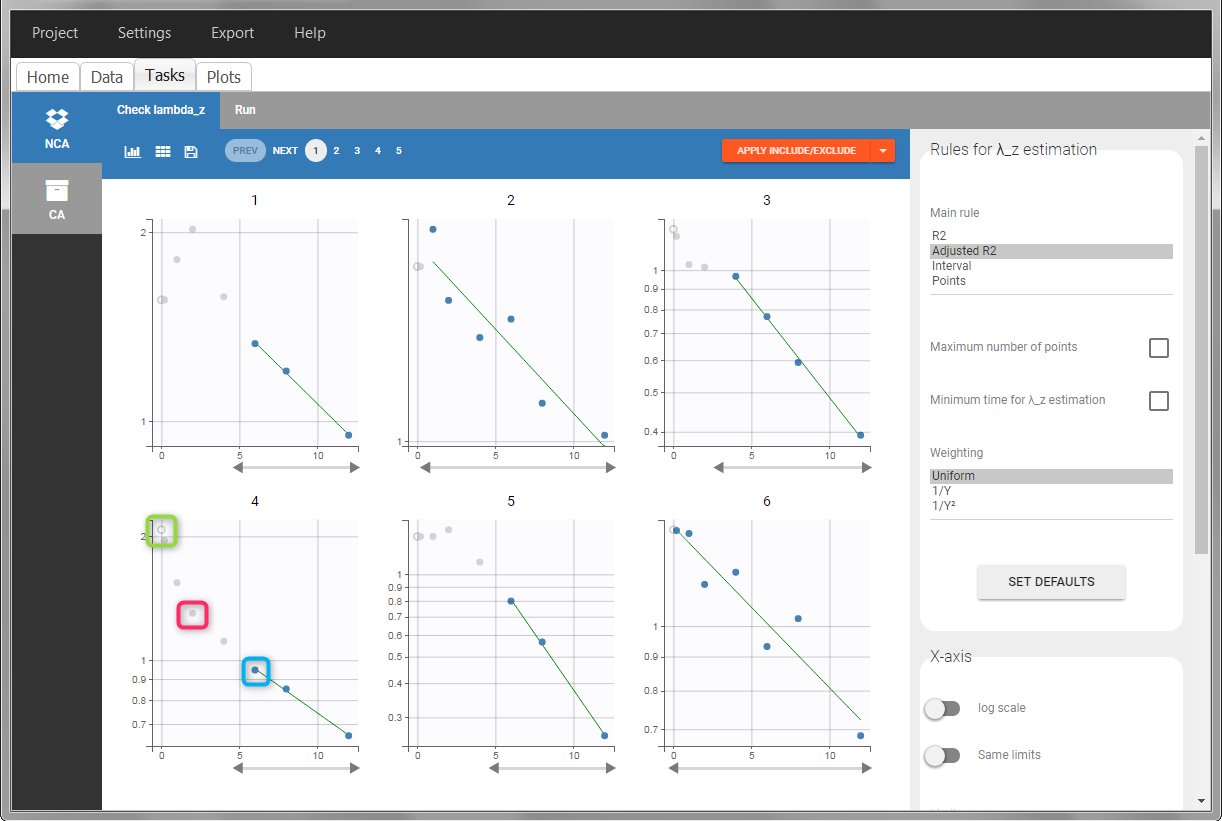

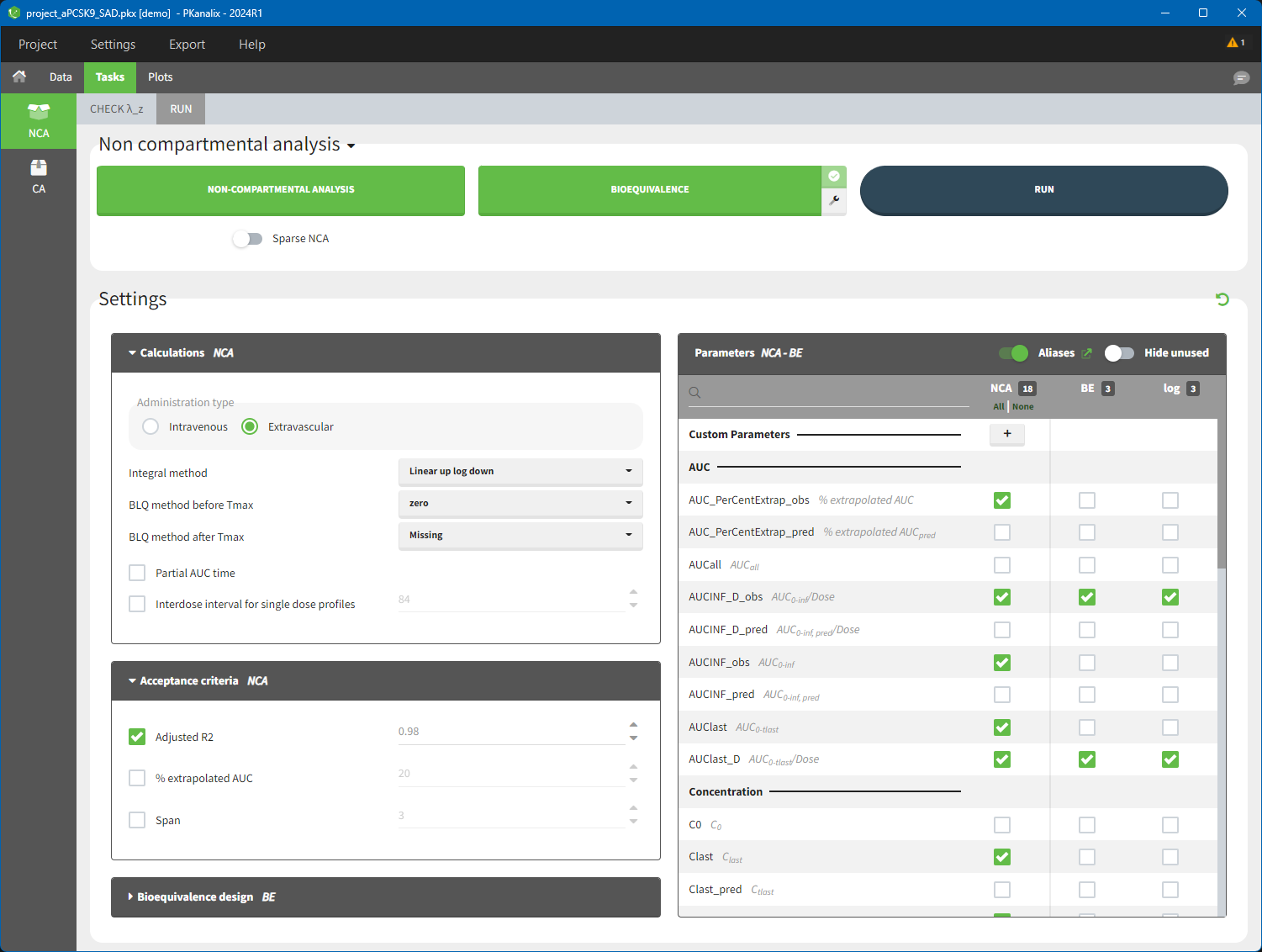

- The non-compartmental analysis (NCA): computation of the NCA parameters based on the calculation of \(\lambda_z\), the slope of the terminal elimination phase.

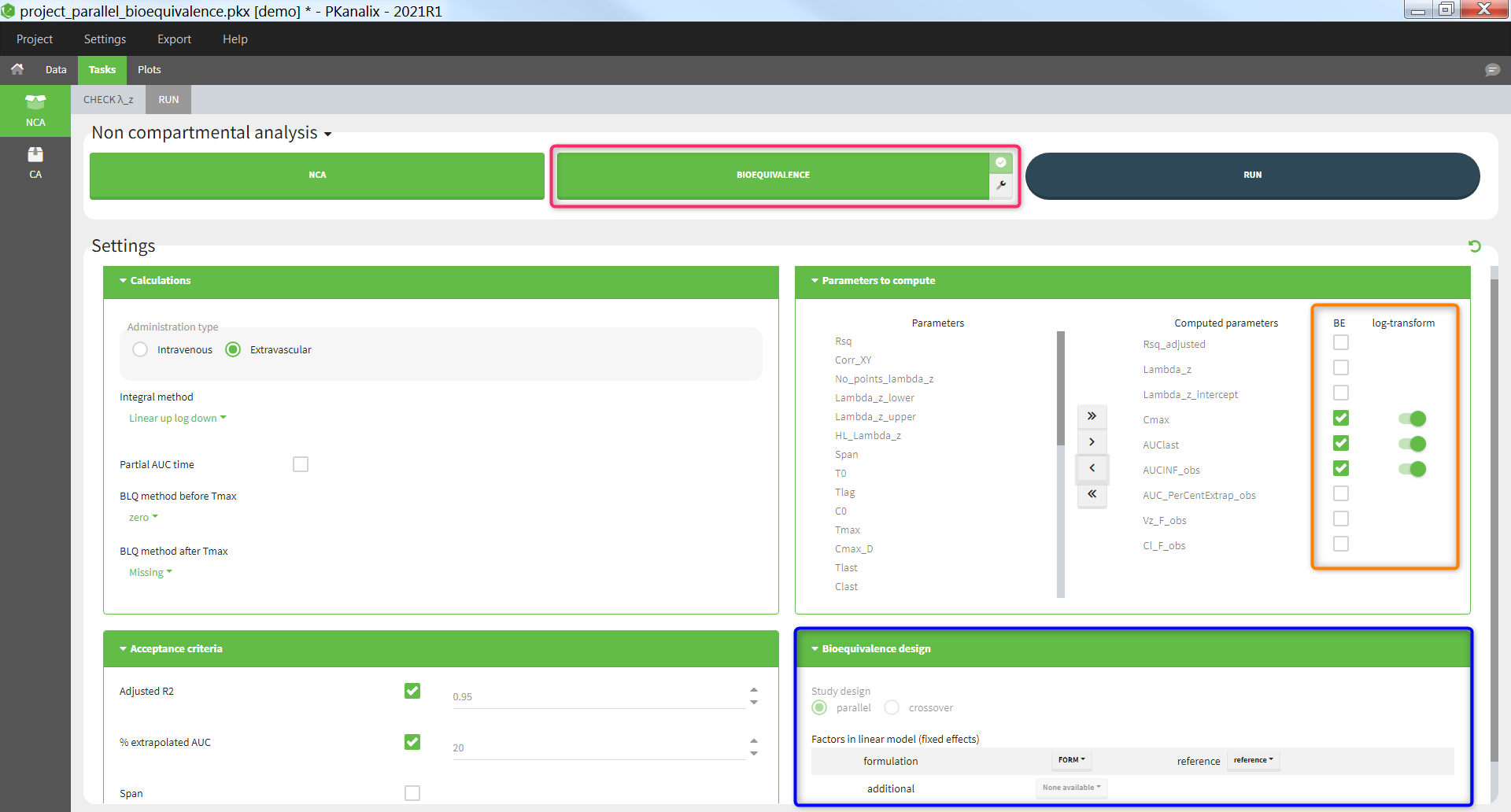

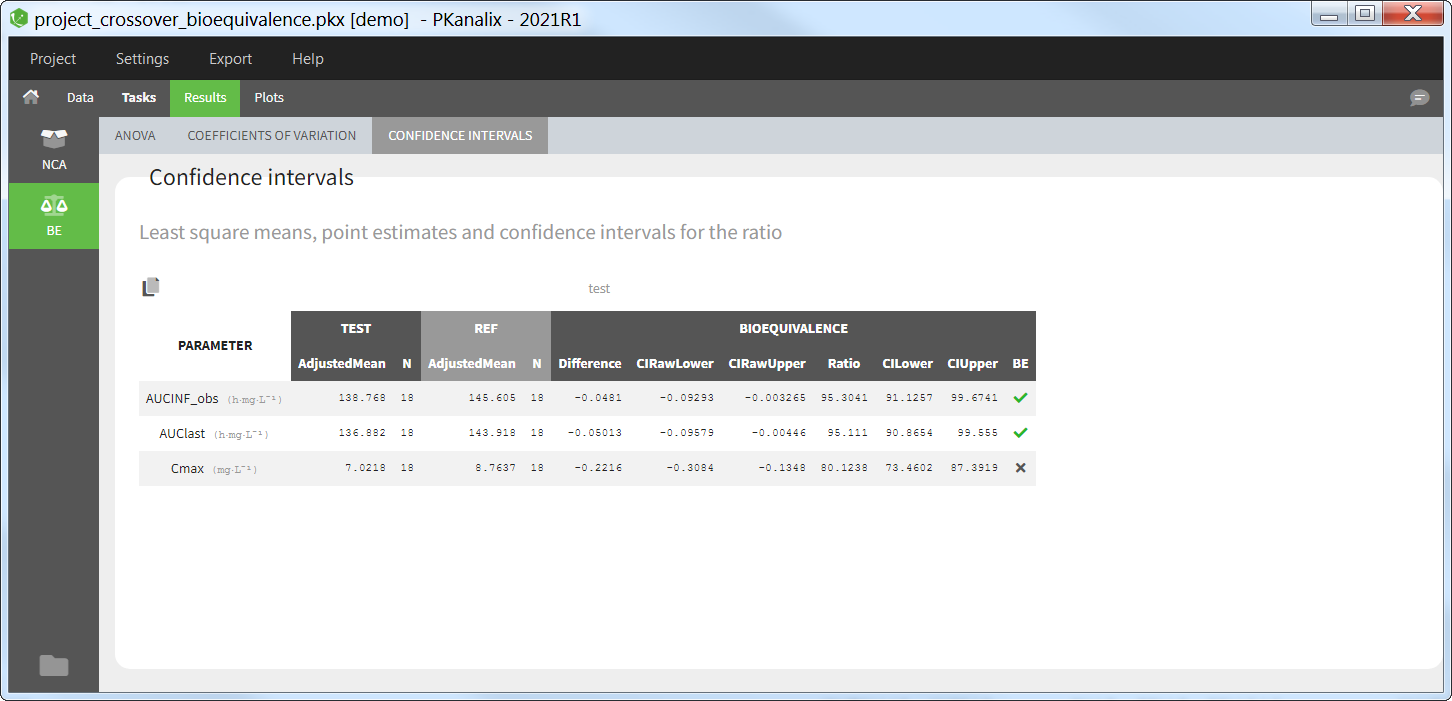

- The bioequivalence analysis (BE) – comparison of the NCA parameters of several drug products (usually two, called ‘test’ and ‘reference’) using the average bioequivalence.

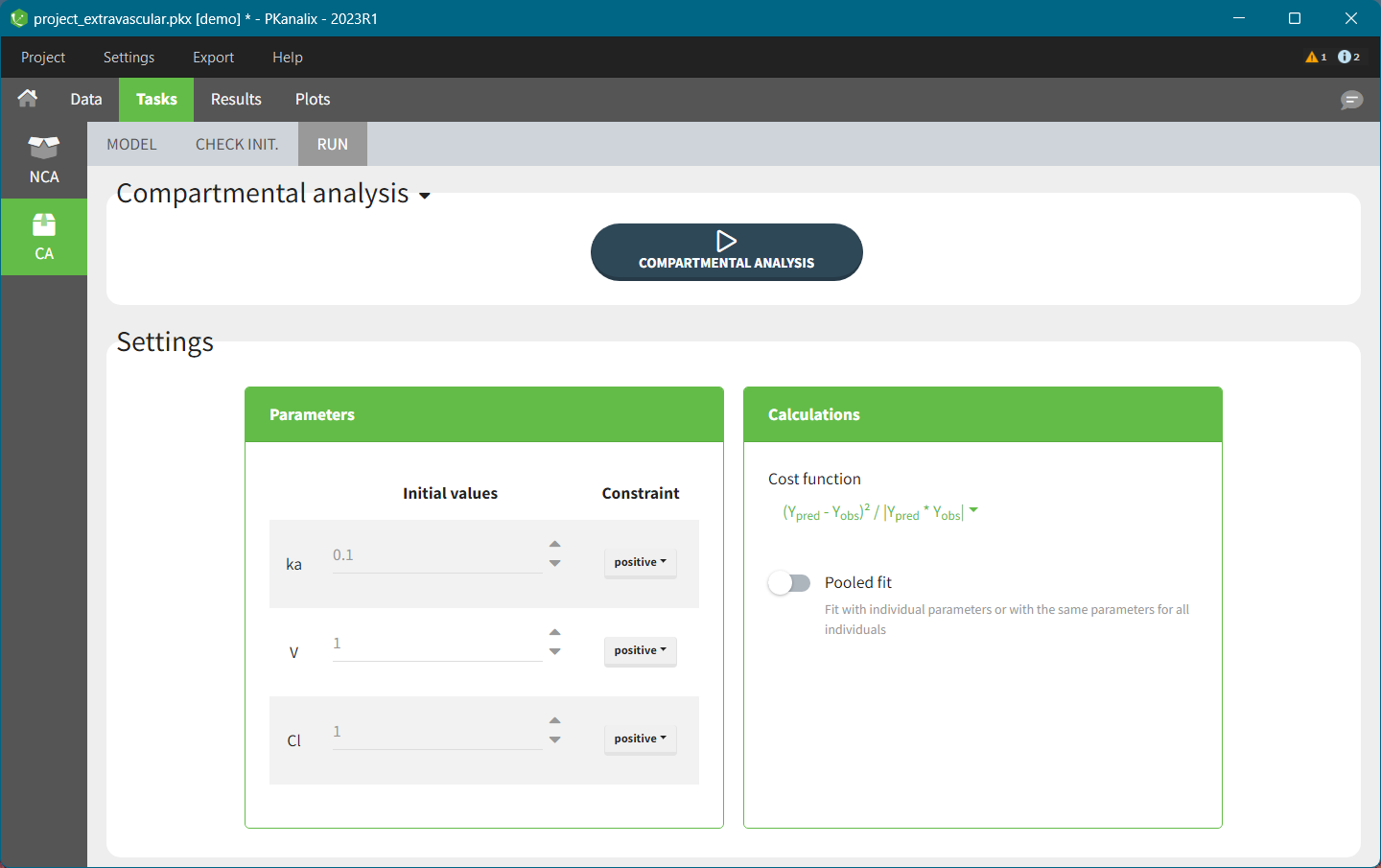

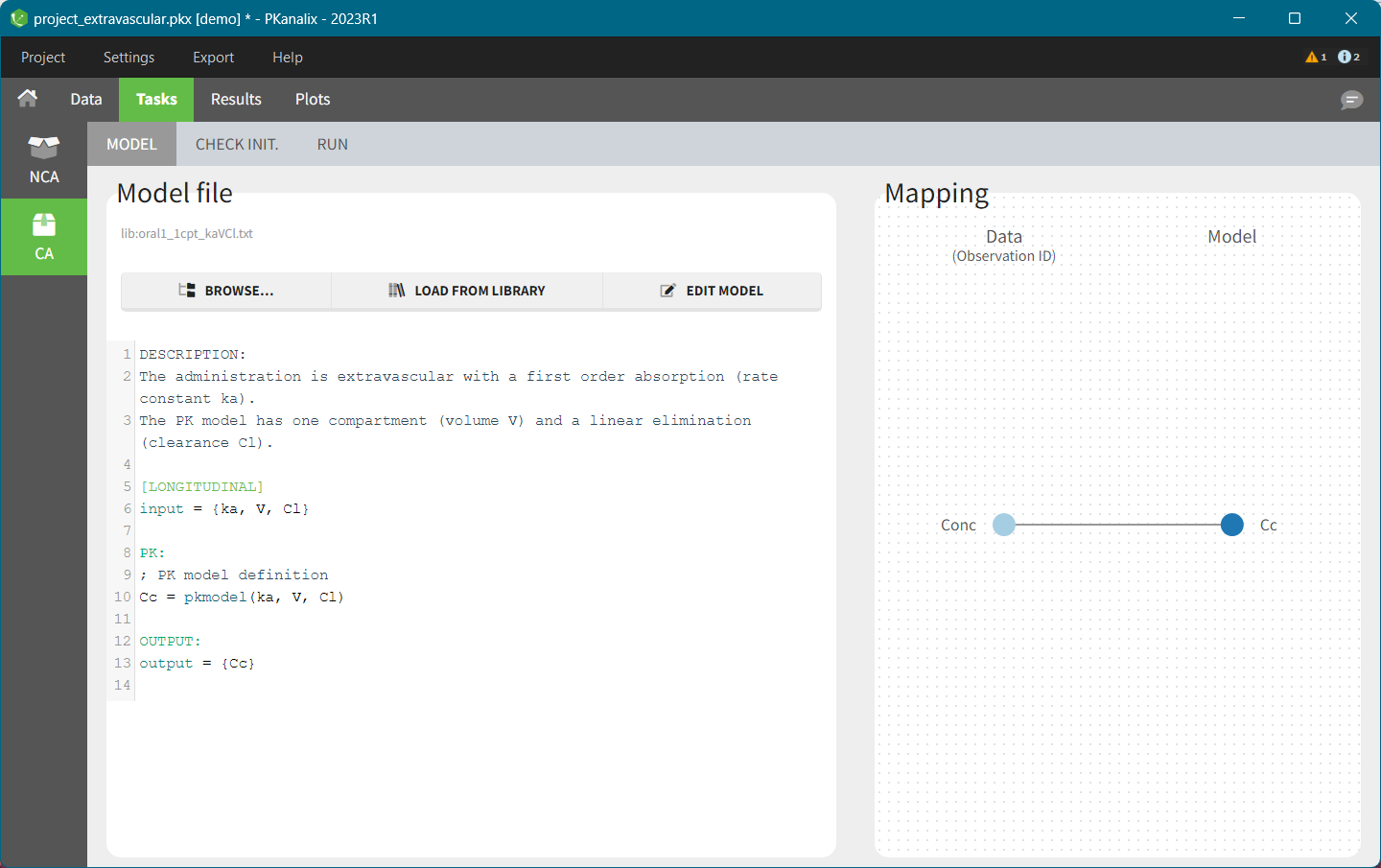

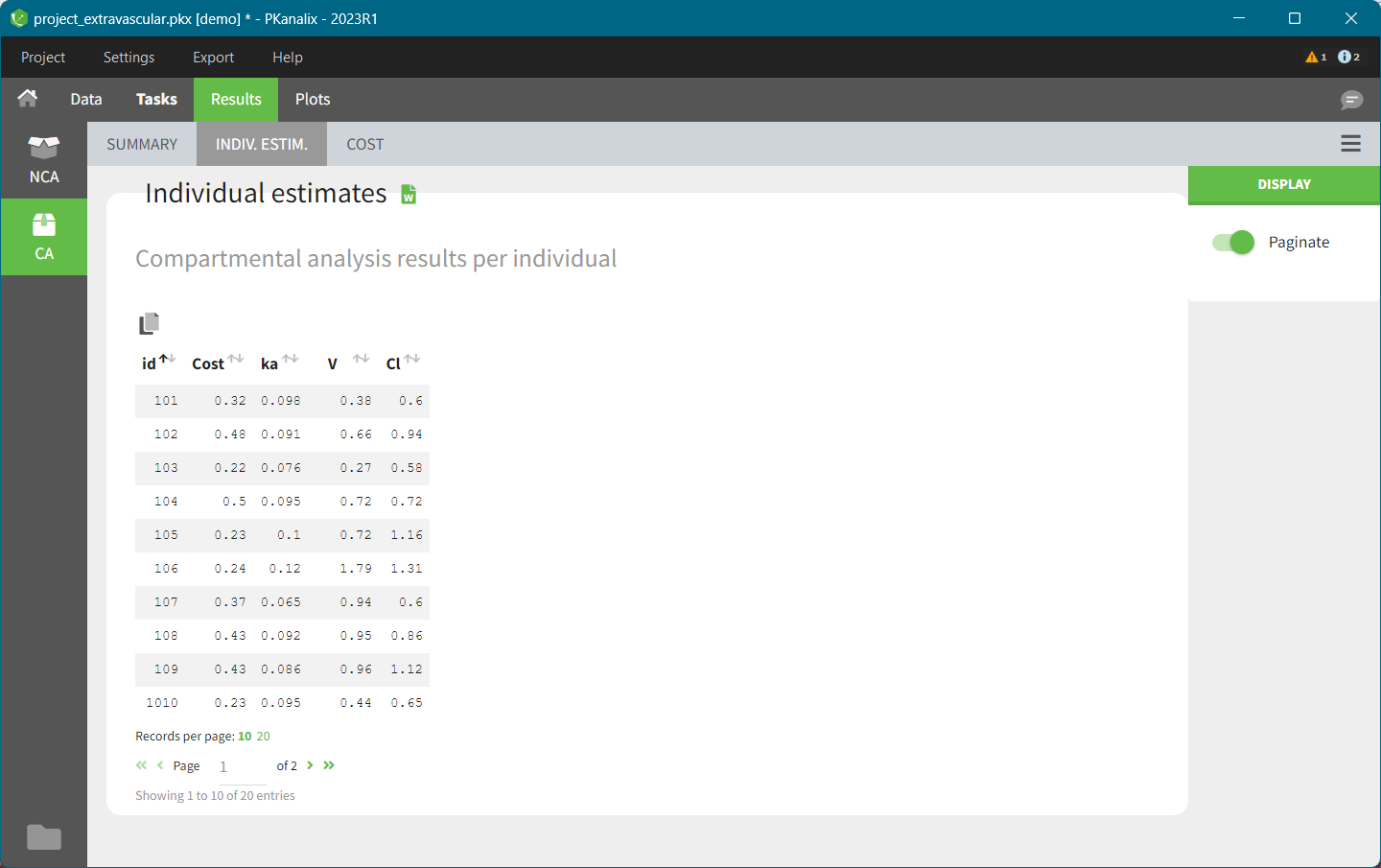

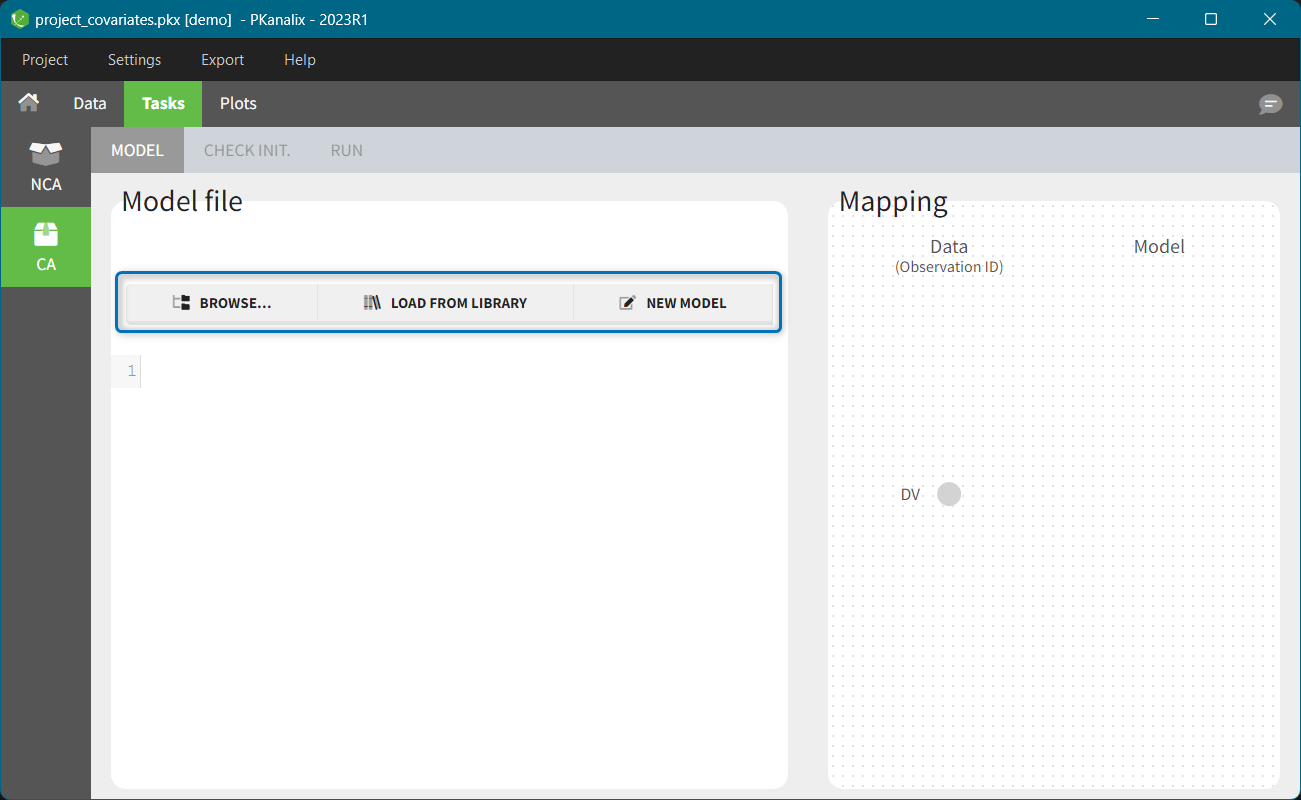

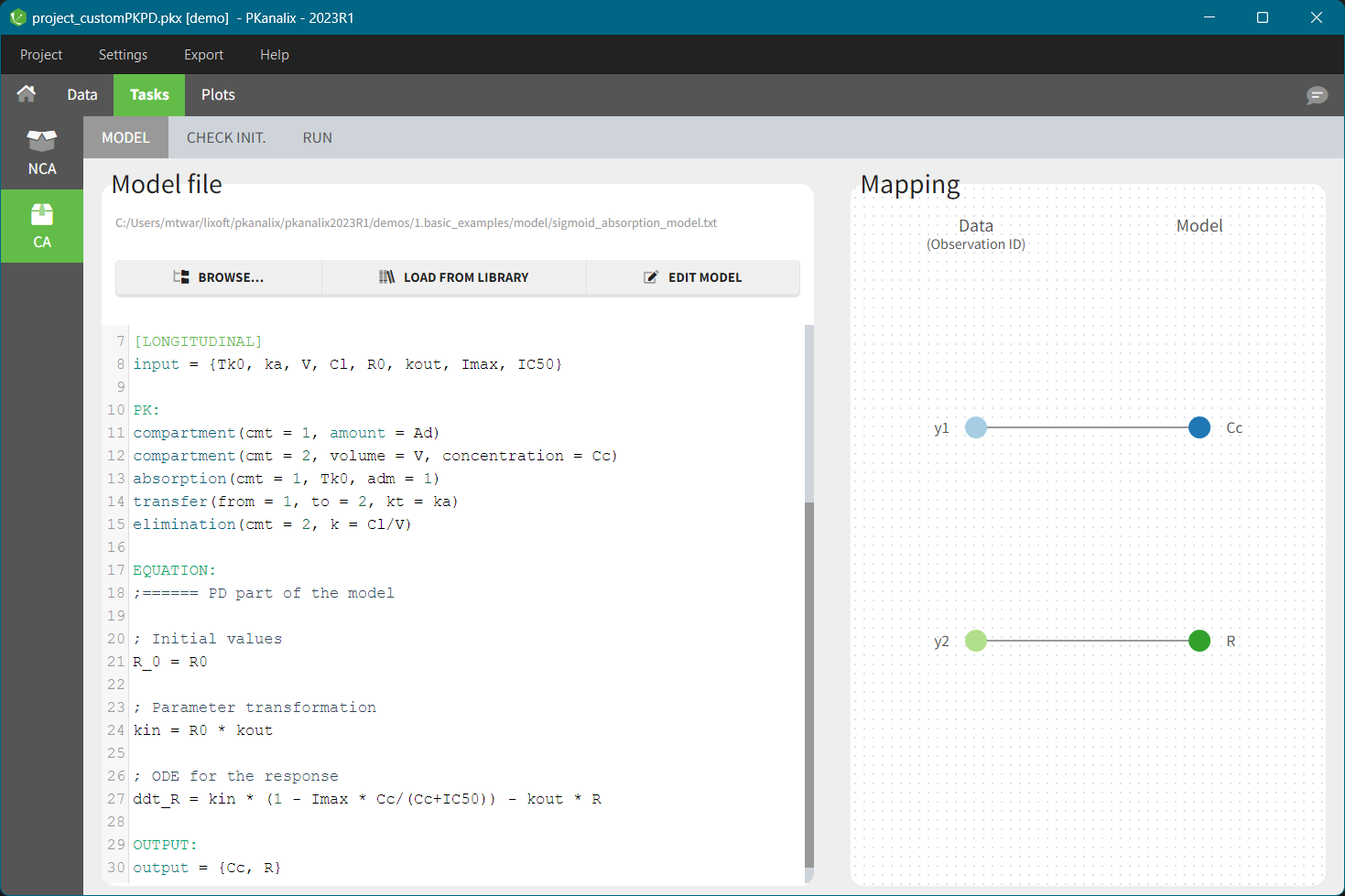

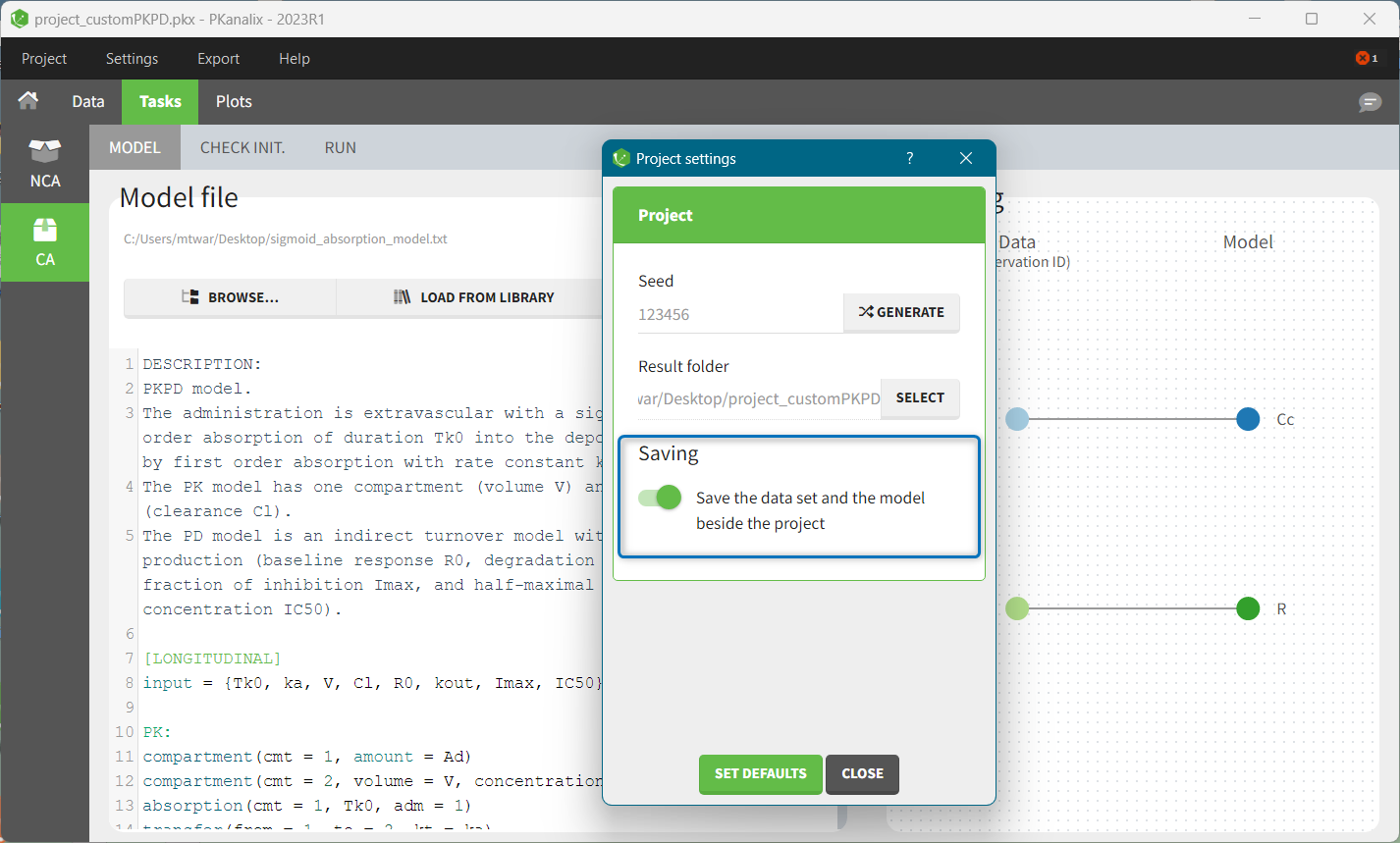

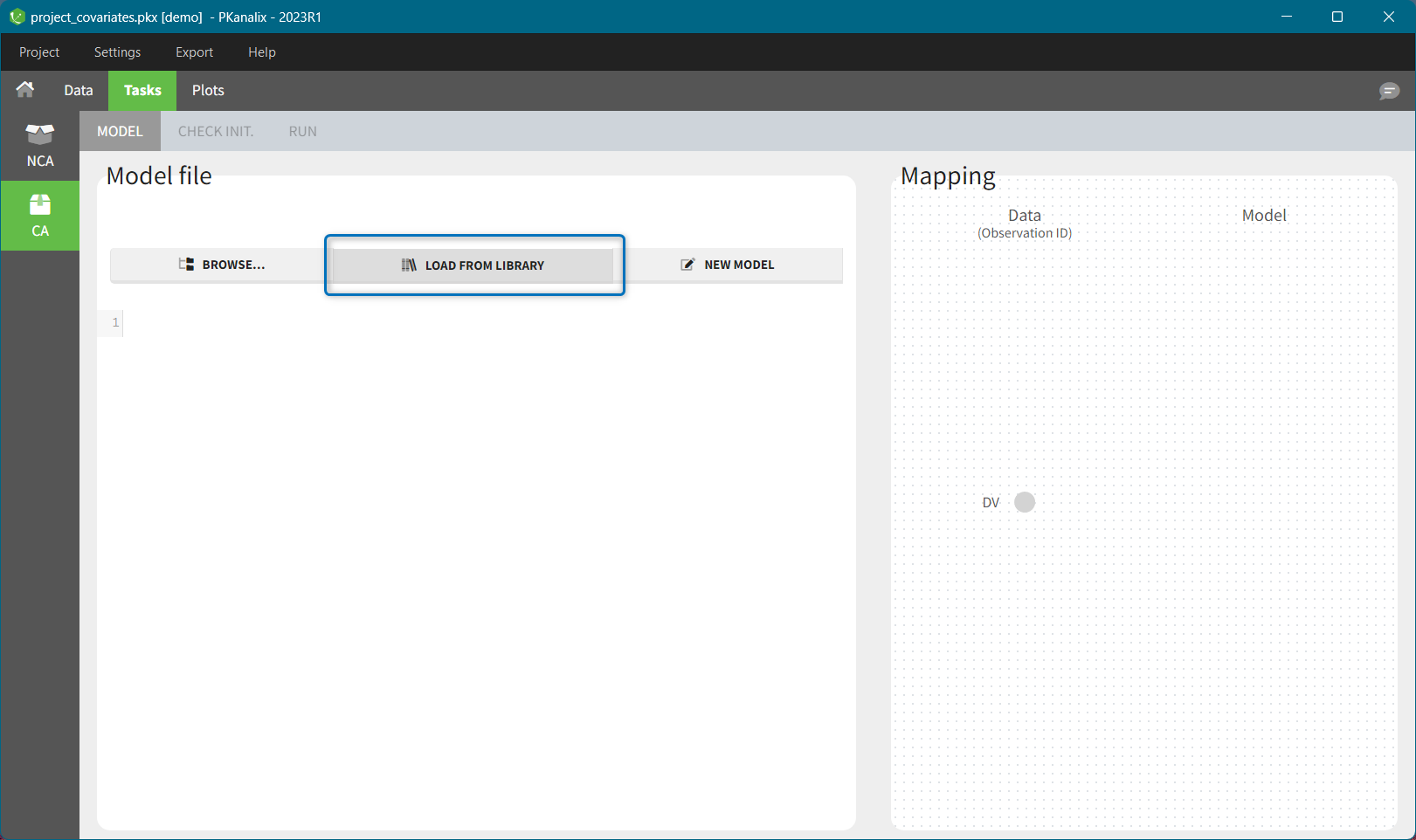

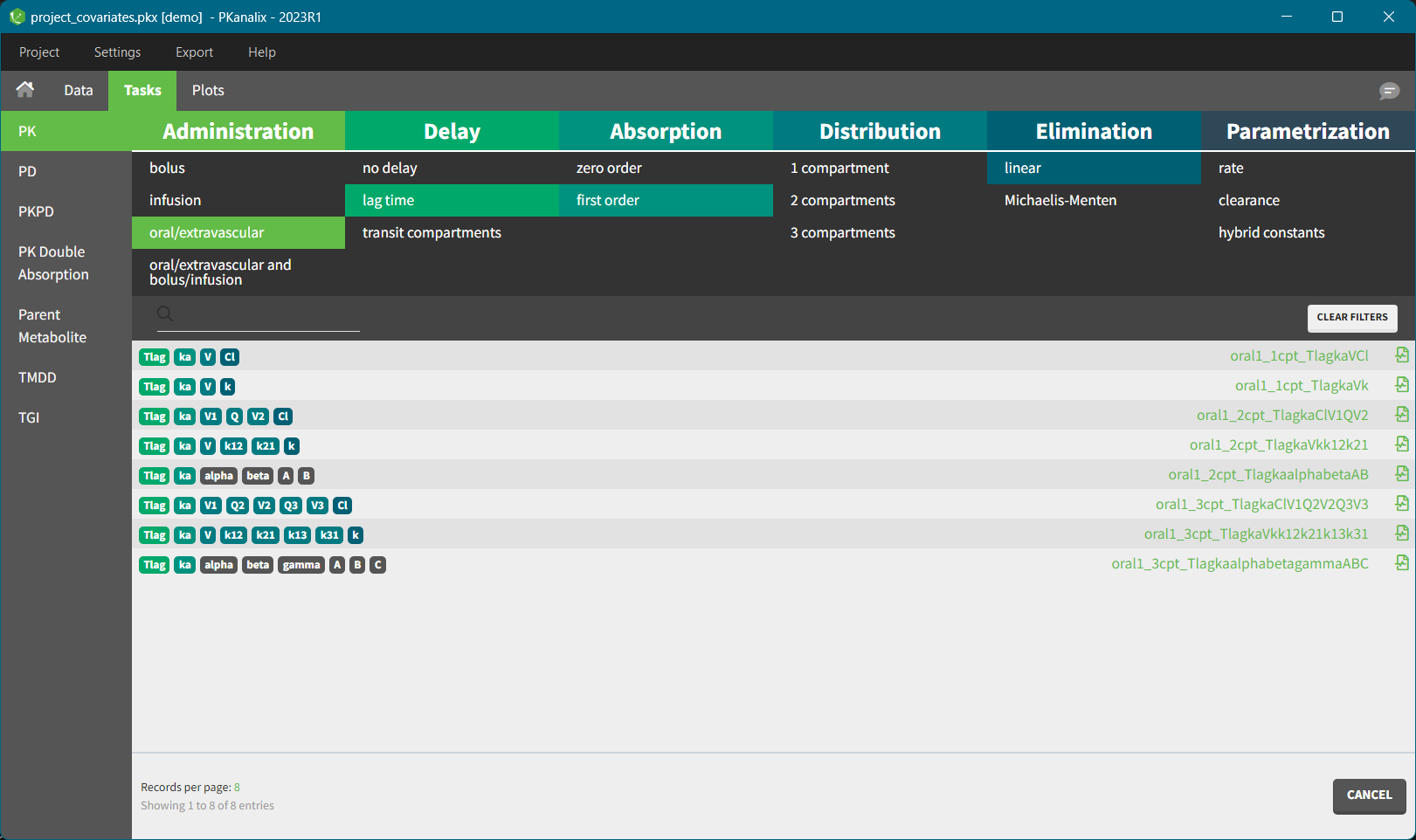

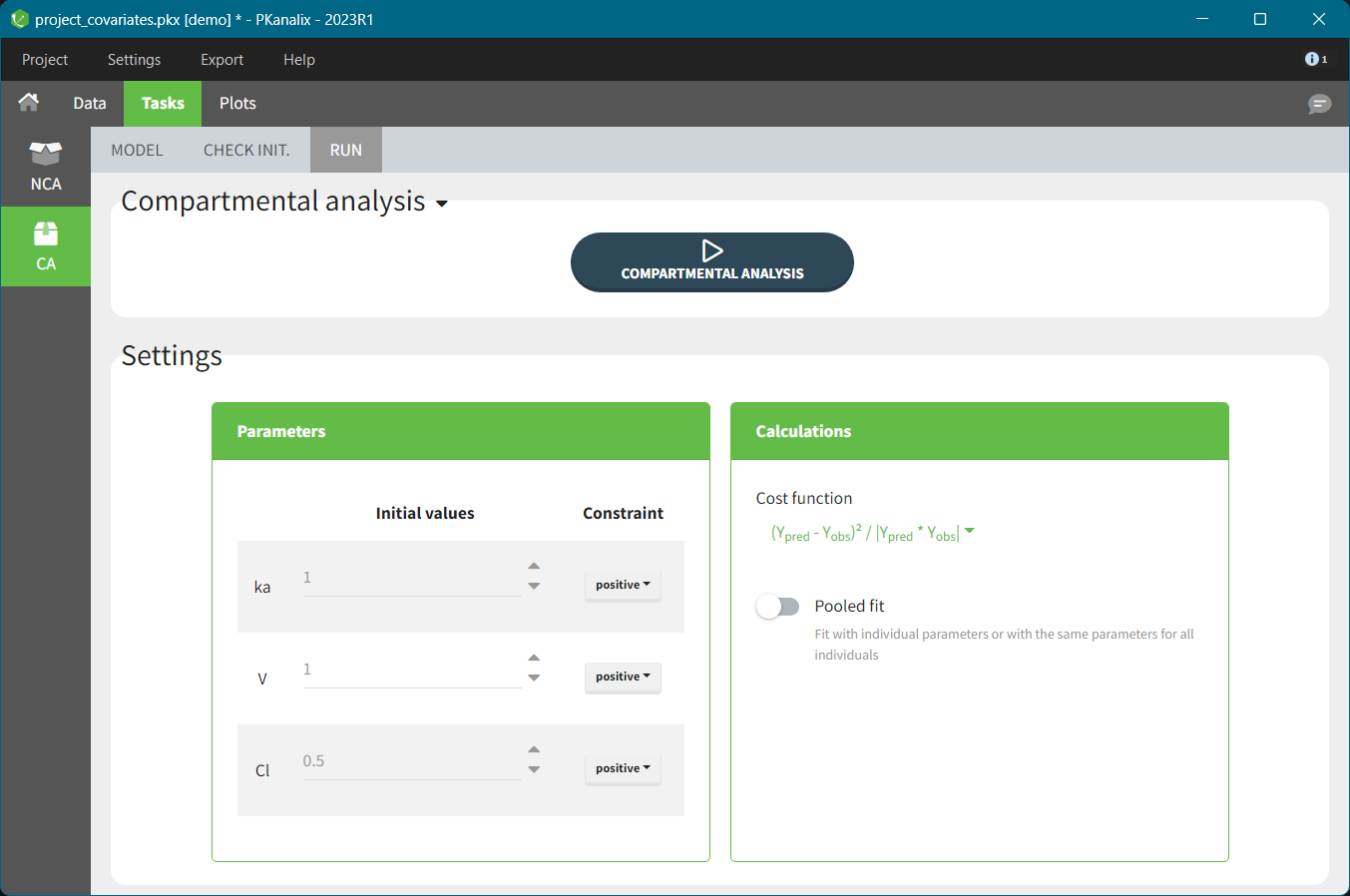

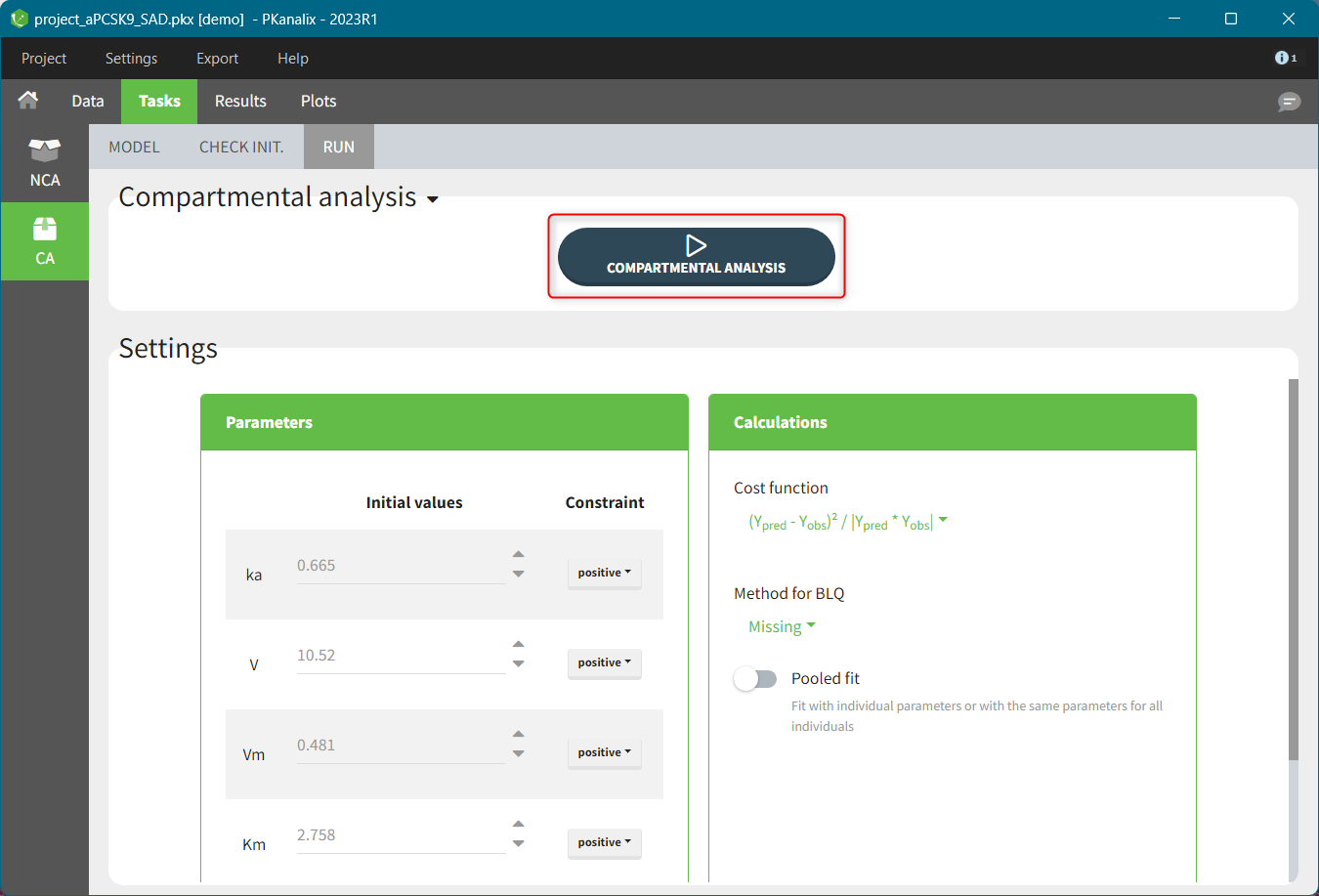

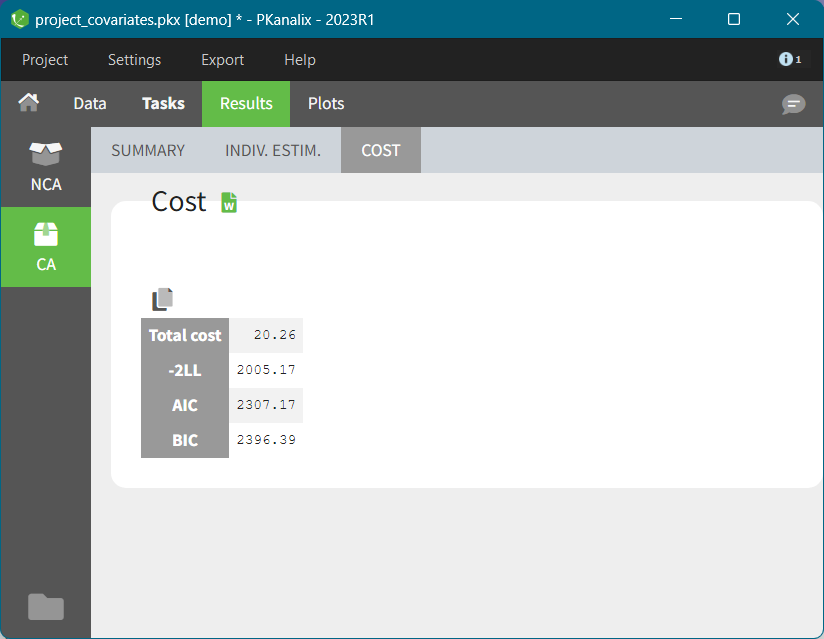

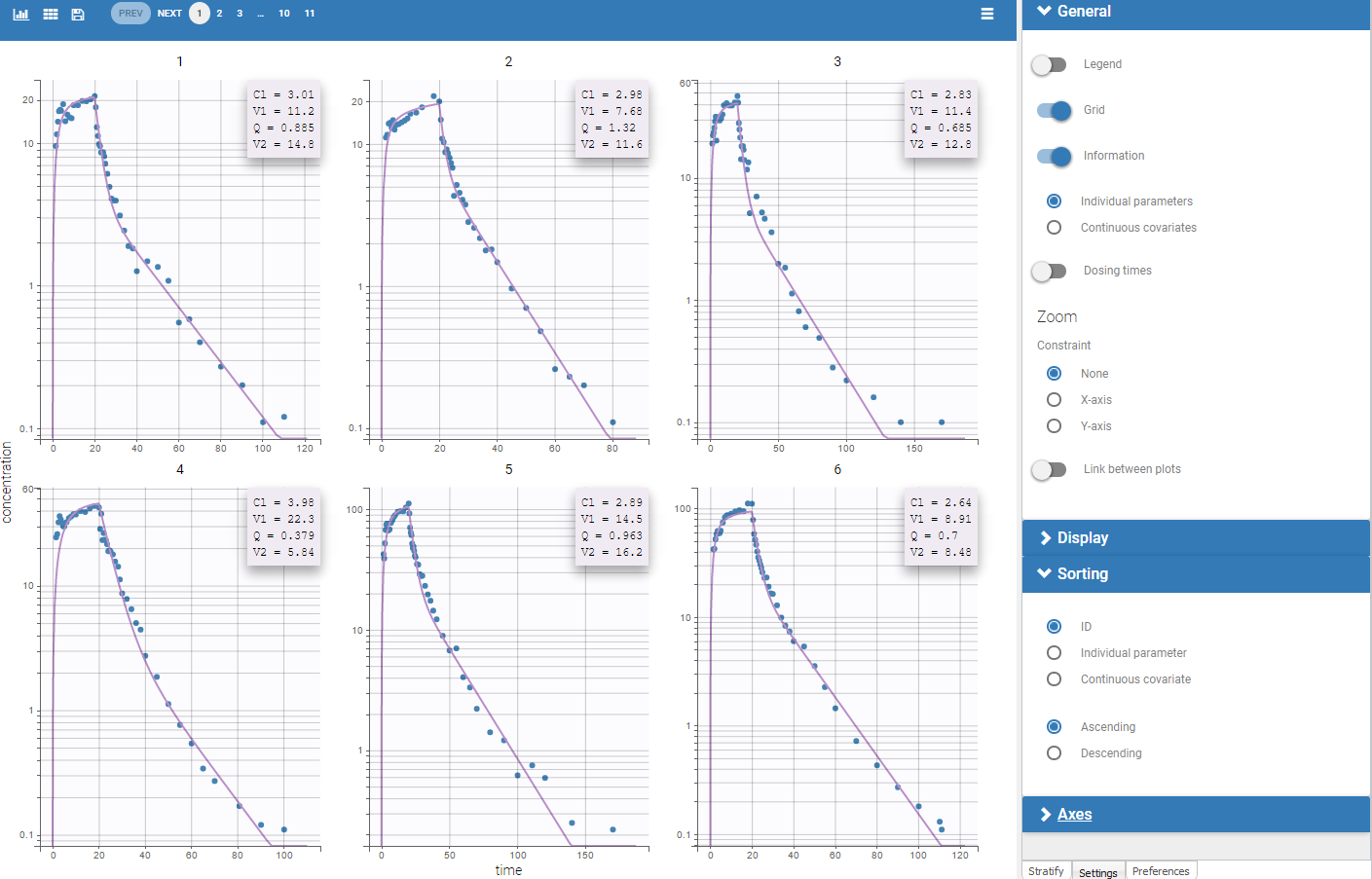

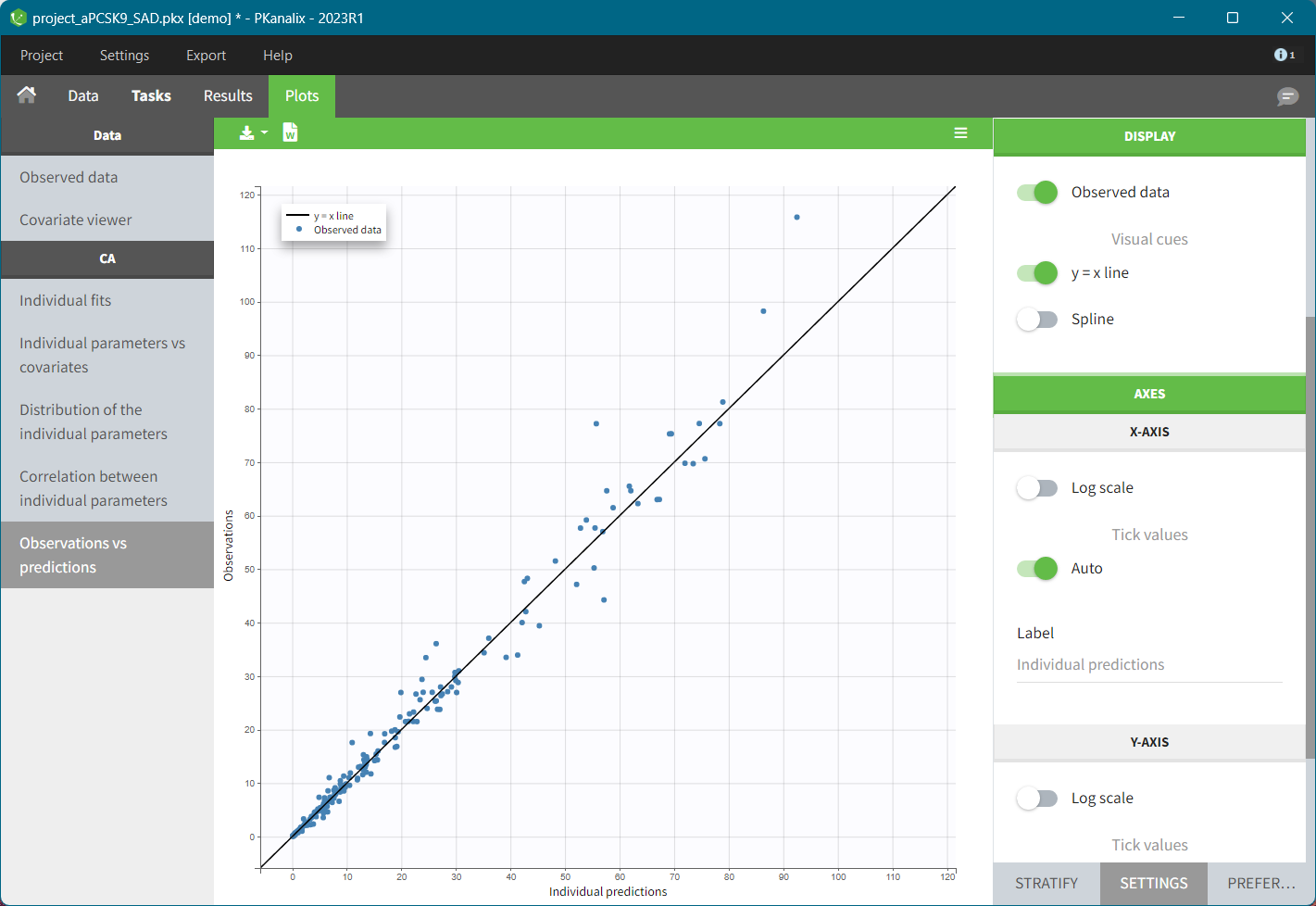

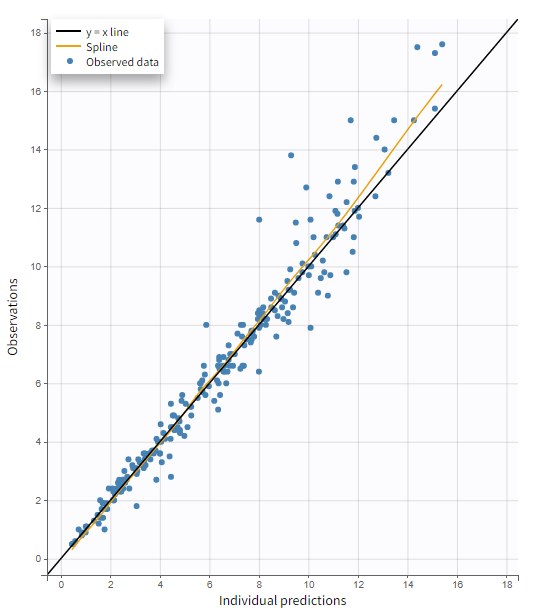

- The compartmental analysis (CA) – estimation of model parameters representing the PK as the dynamics in compartments for each individual. It does not include population analysis performed in Monolix.

What else?

- A clear user interface with an intuitive workflow to efficiently run the NCA, BE and CA analyses.

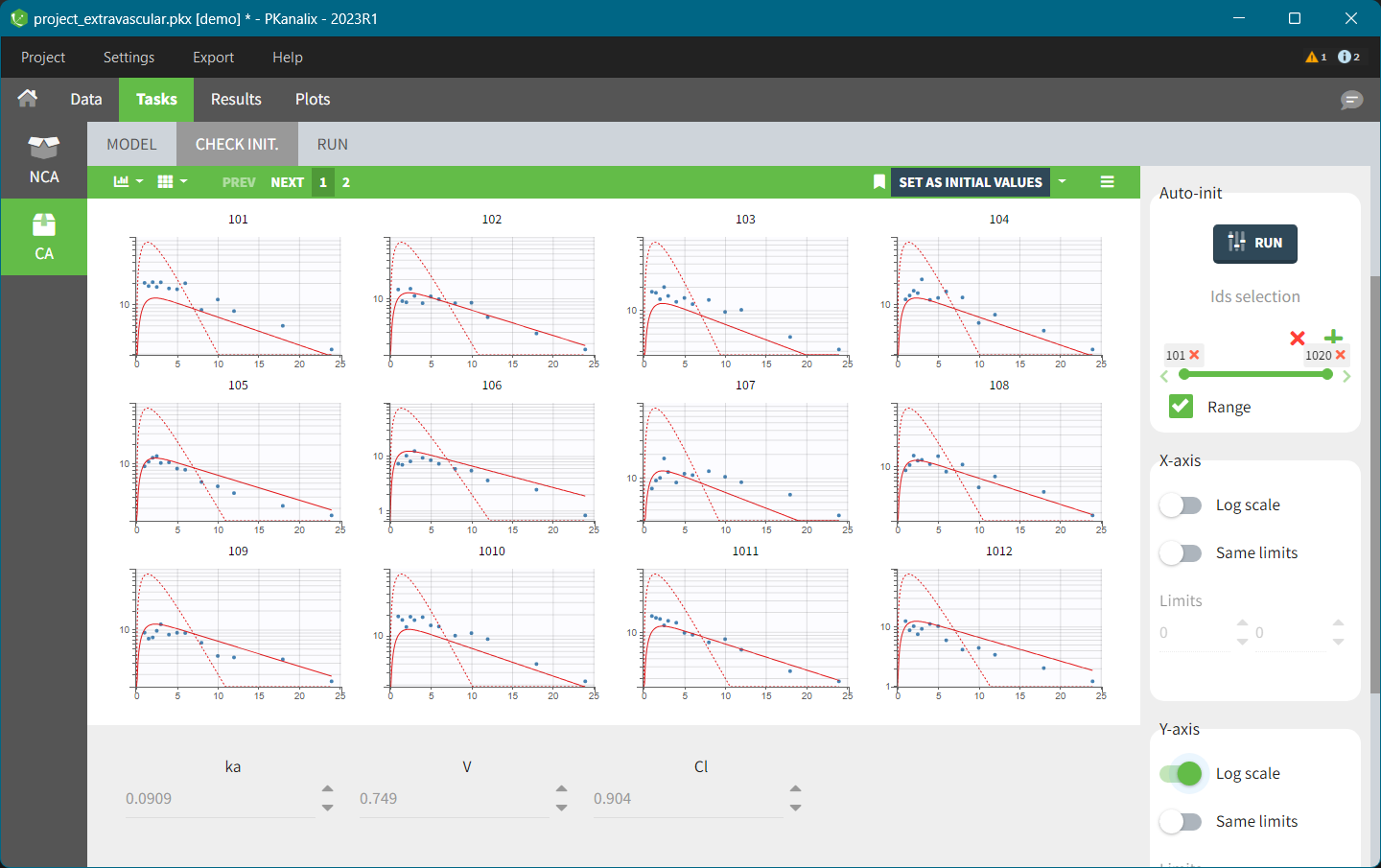

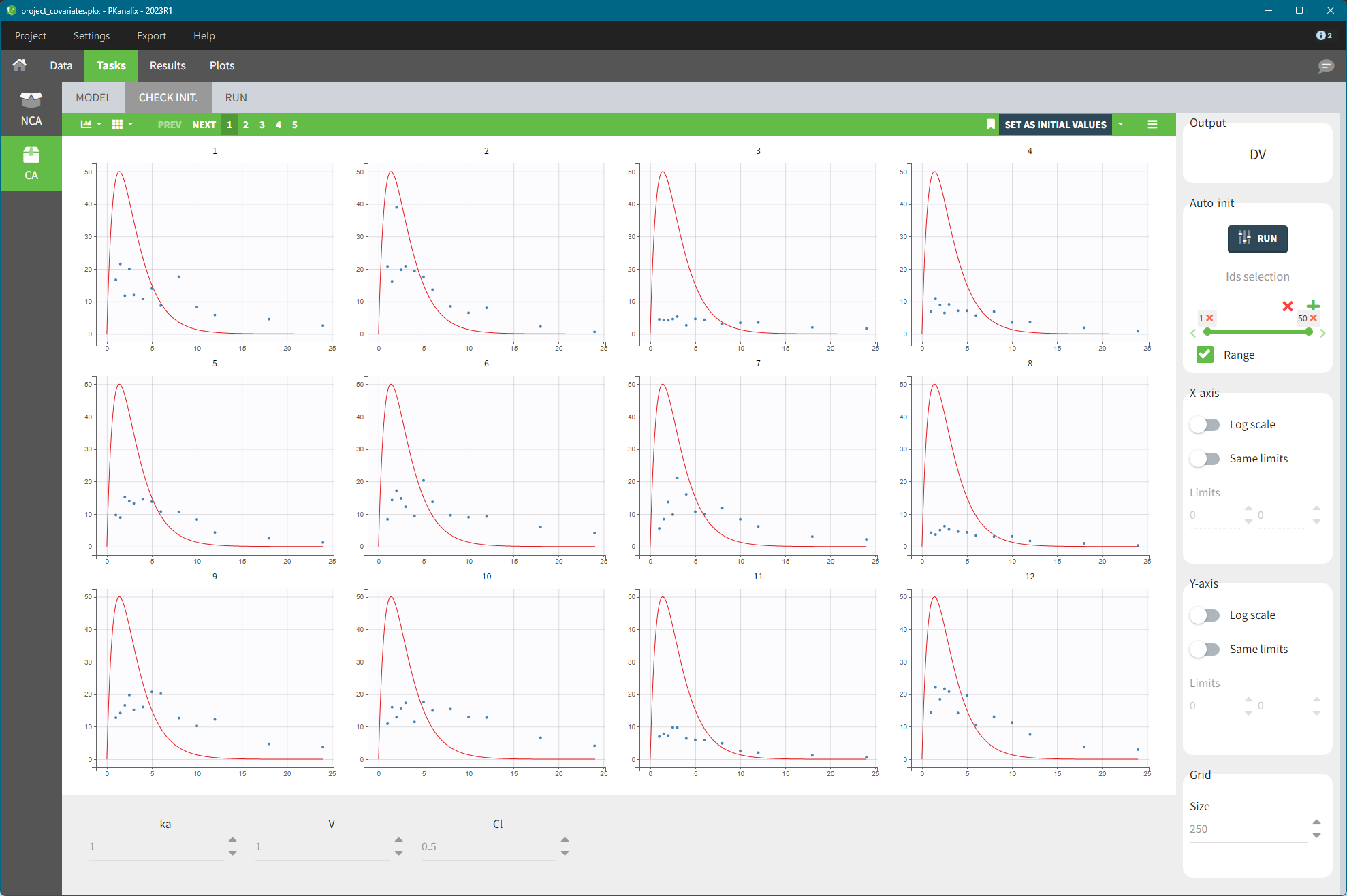

- Easily accessible PK models library and auto-initialization method to improve the convergence of the optimization of CA parameters.

- Integrated bioequivalence module to simplify the analysis.

- Automatically generated results and plots to give immediate feedback.

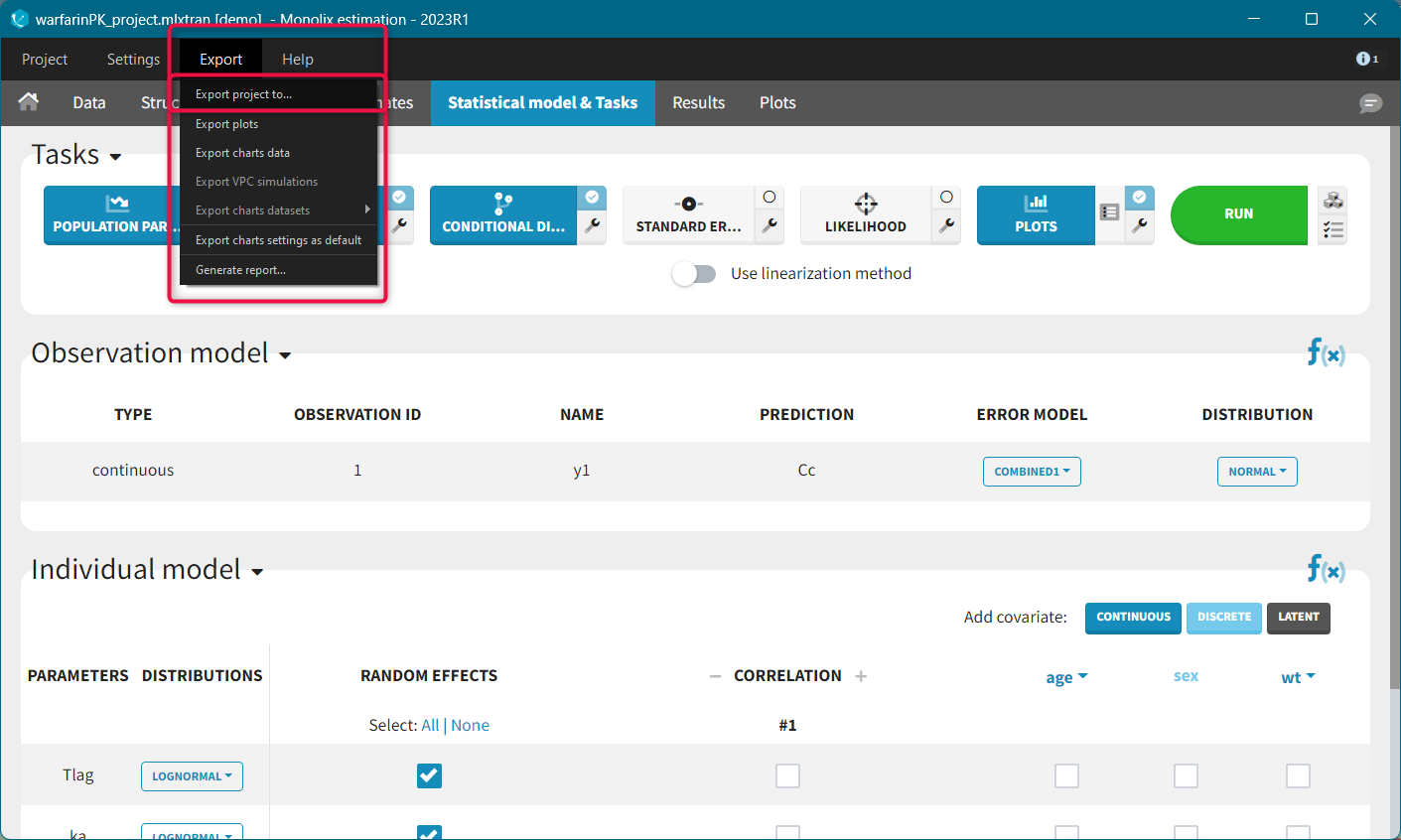

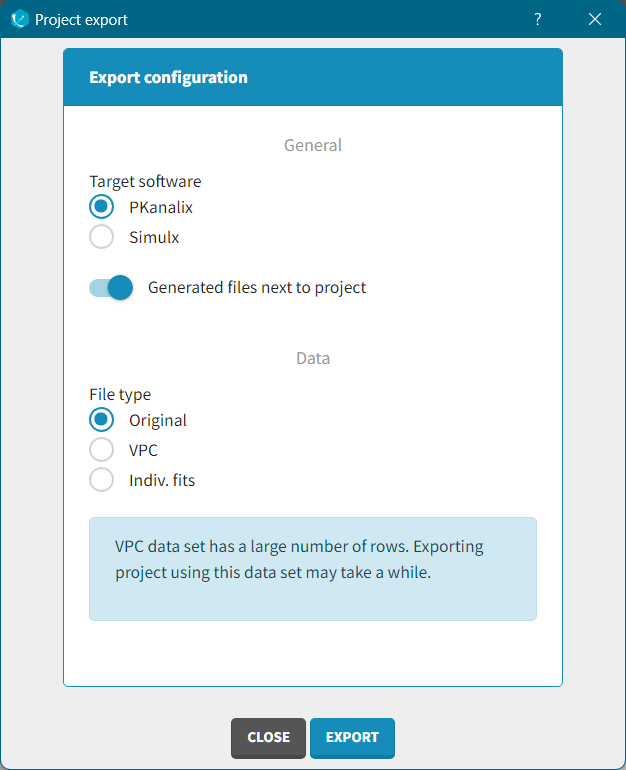

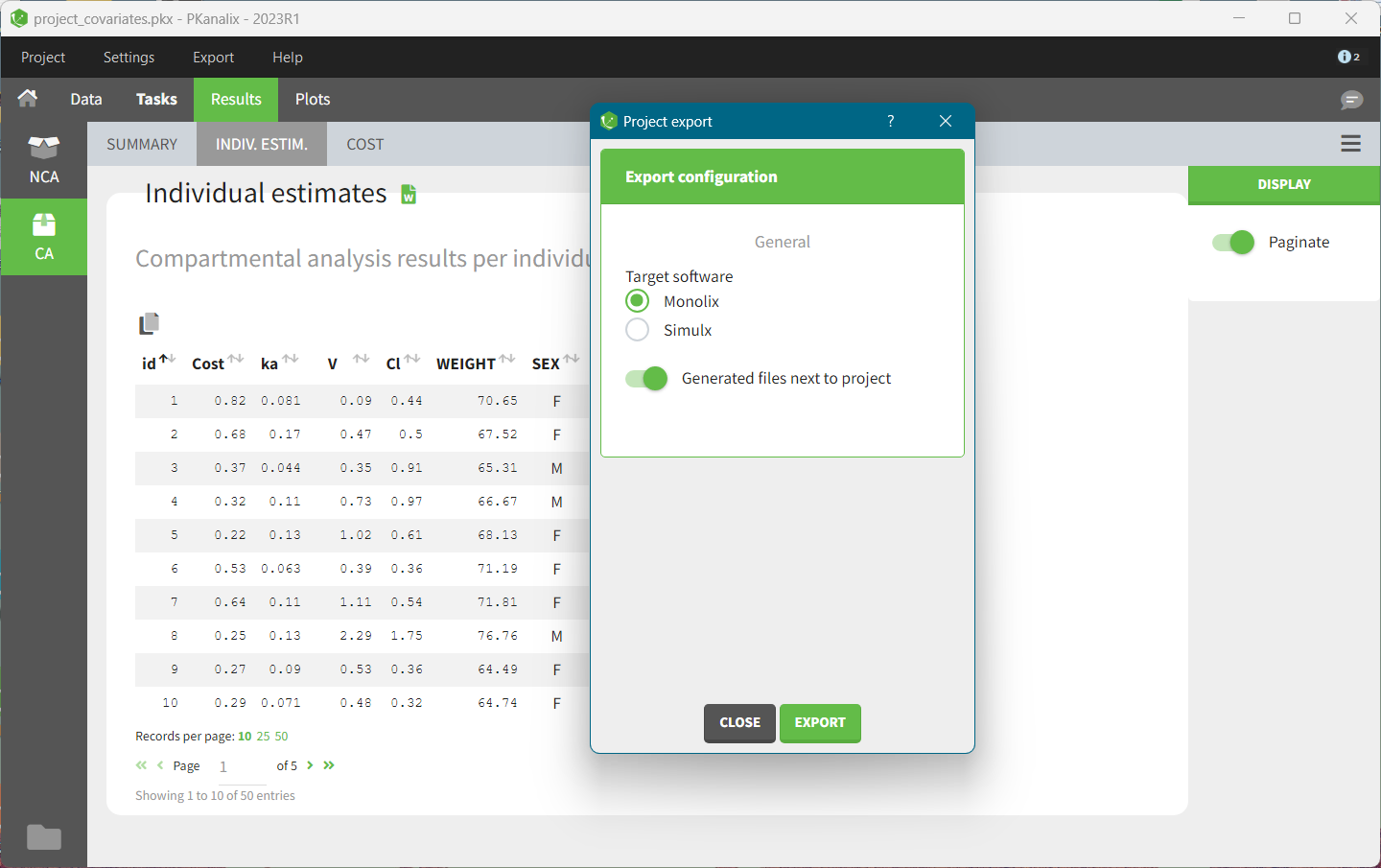

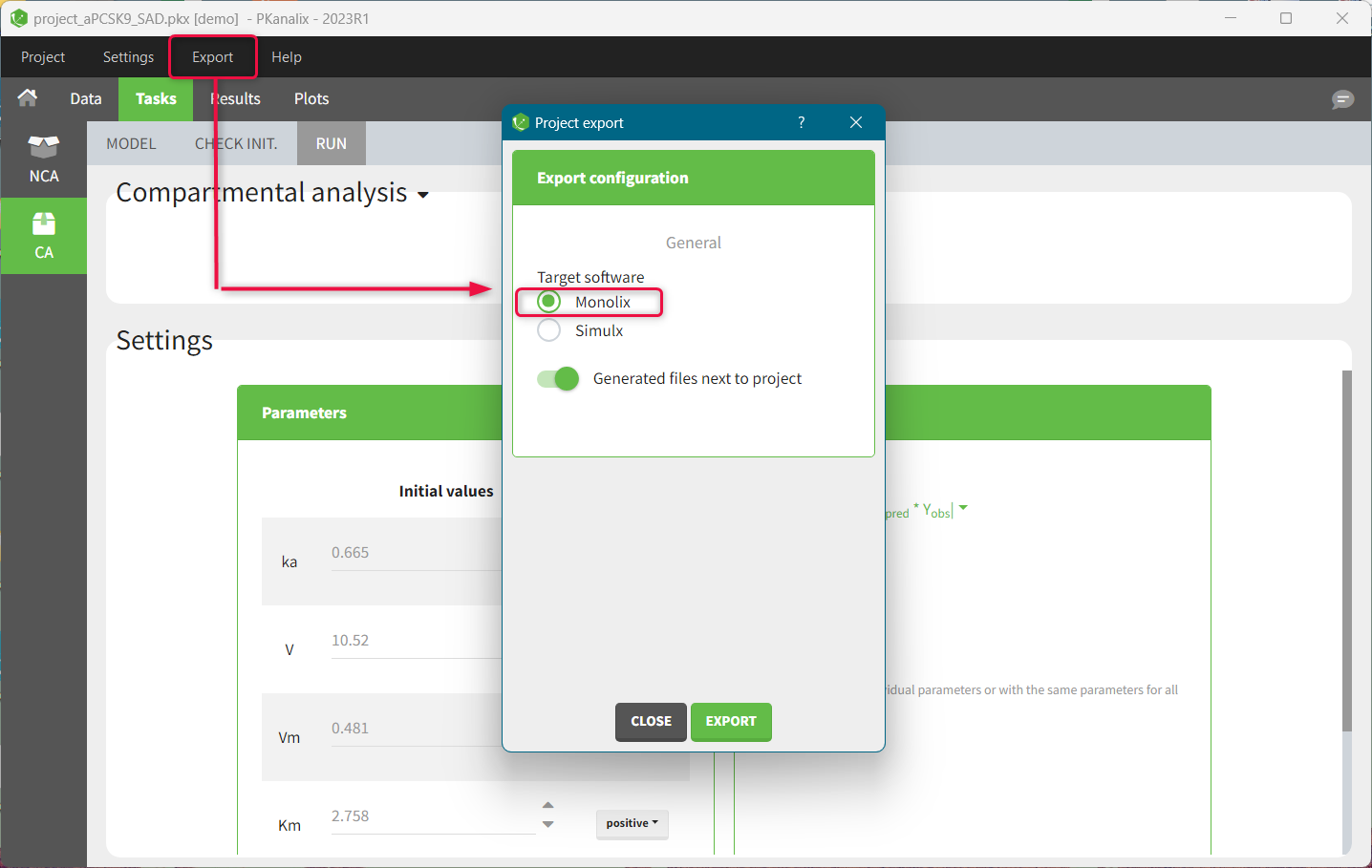

- Interconnection with MonolixSuite applications to export projects to Monolix for the population analysis.

PKanalix tasks

PKanalix uses the dataset format common to all MonolixSuite applications, see here for more details. This lets you move your project between applications, for instance export a CA project to Monolix and perform a population analysis with one click of a button.

- Non Compartmental Analysis

- Bioequivalence

- Compartmental Analysis

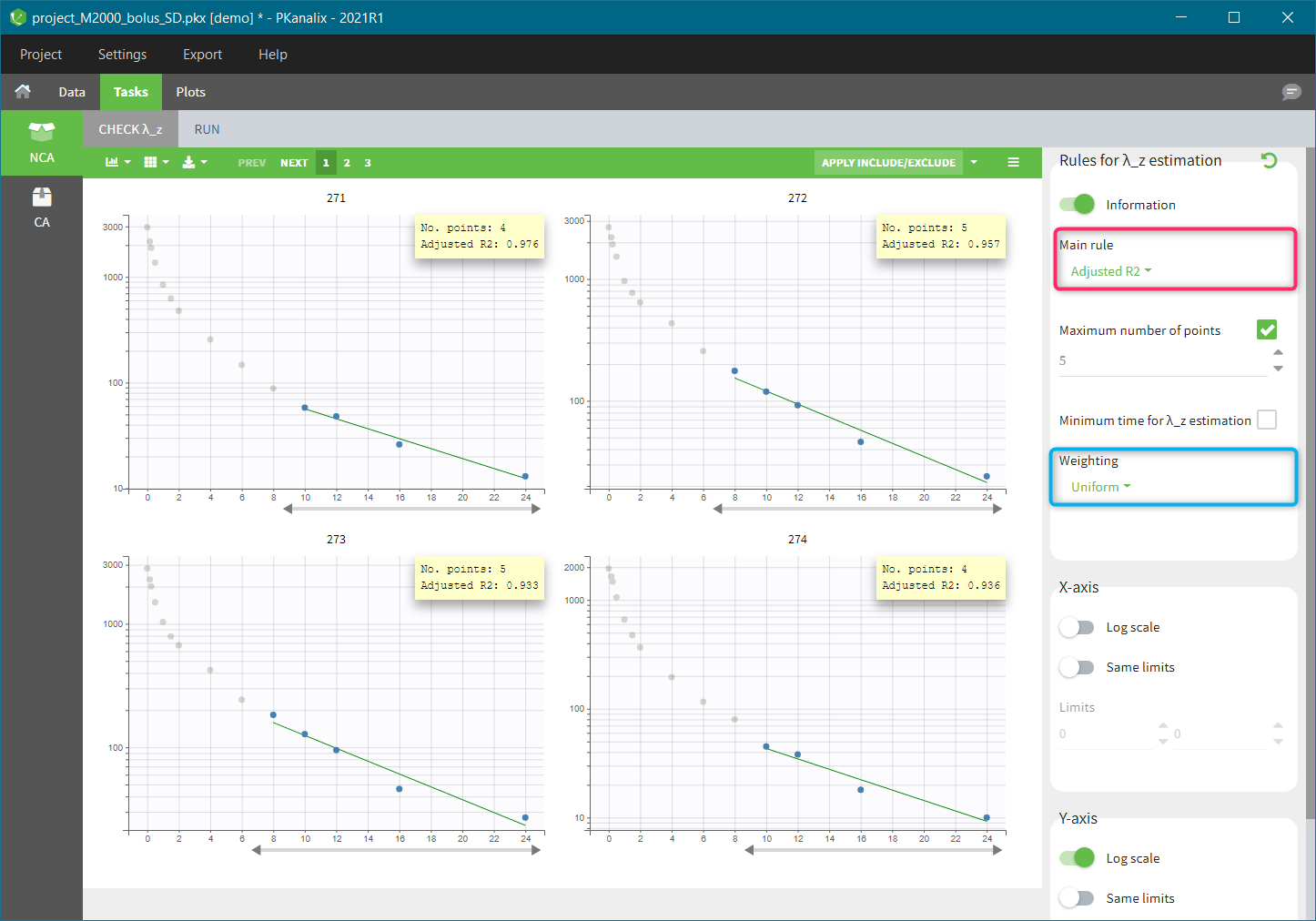

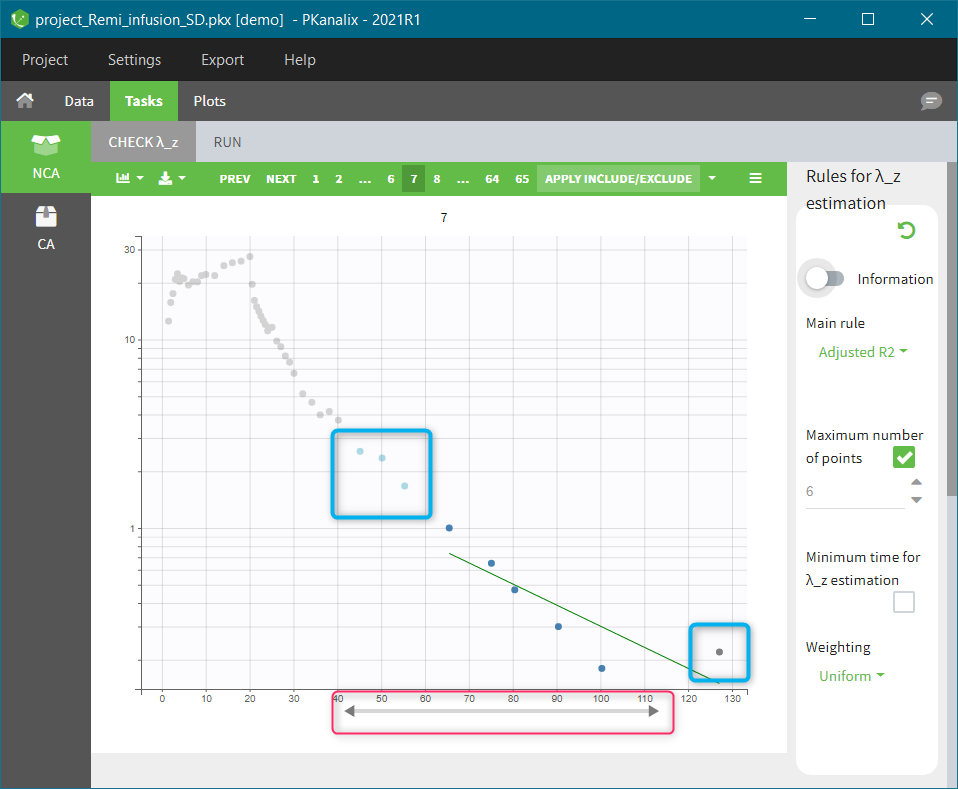

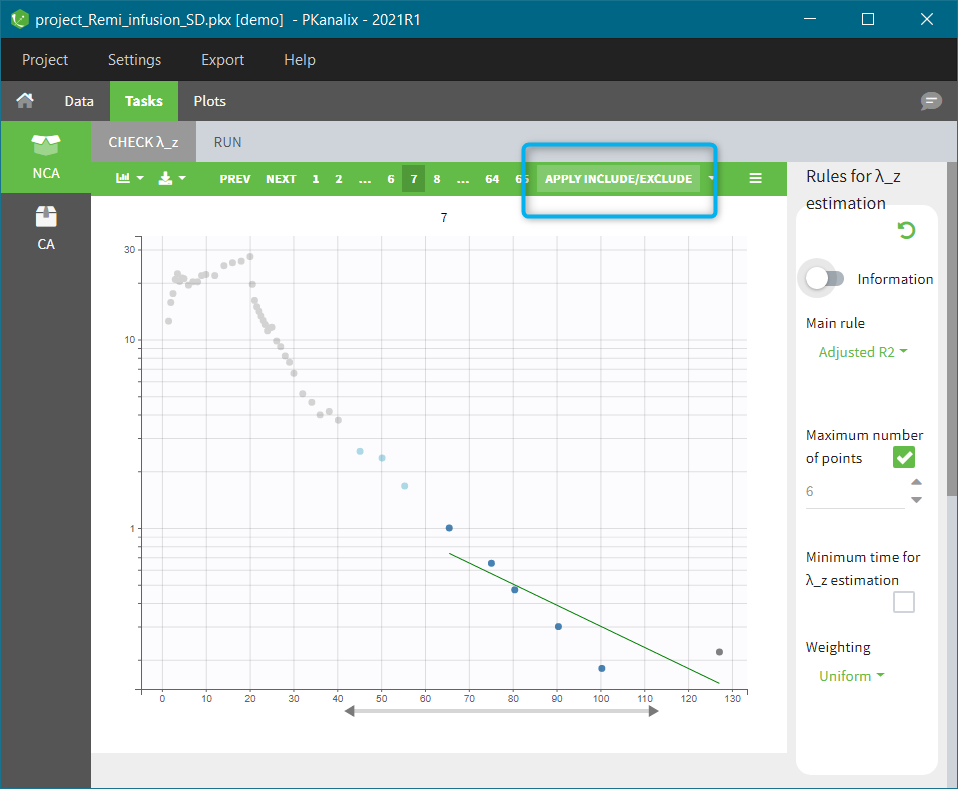

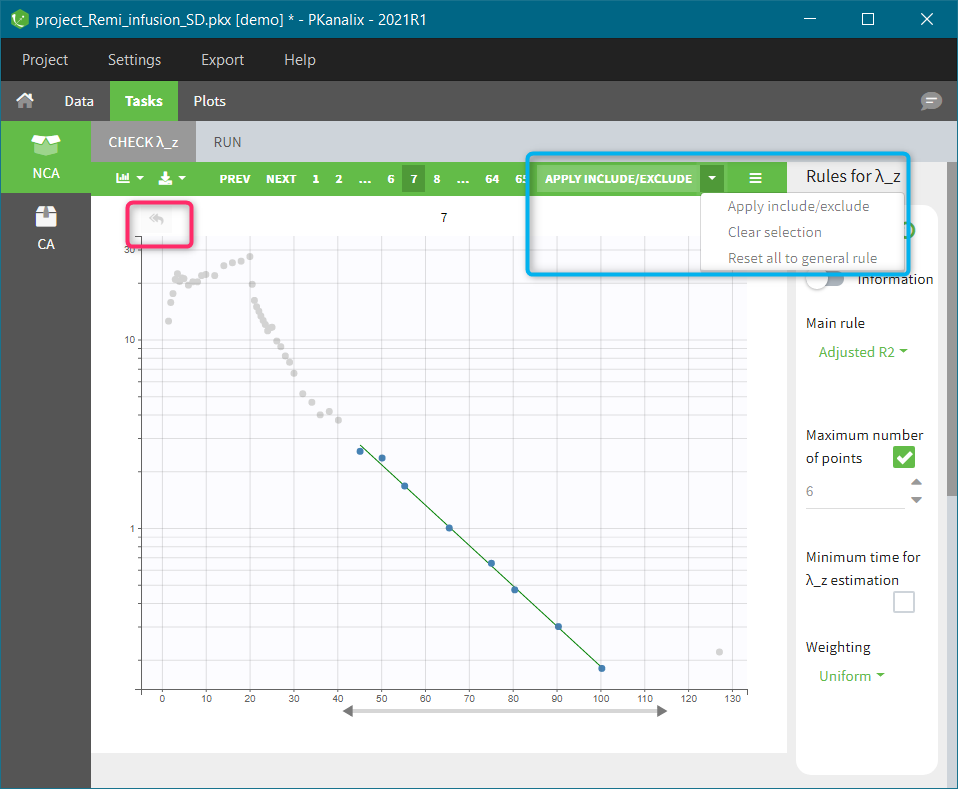

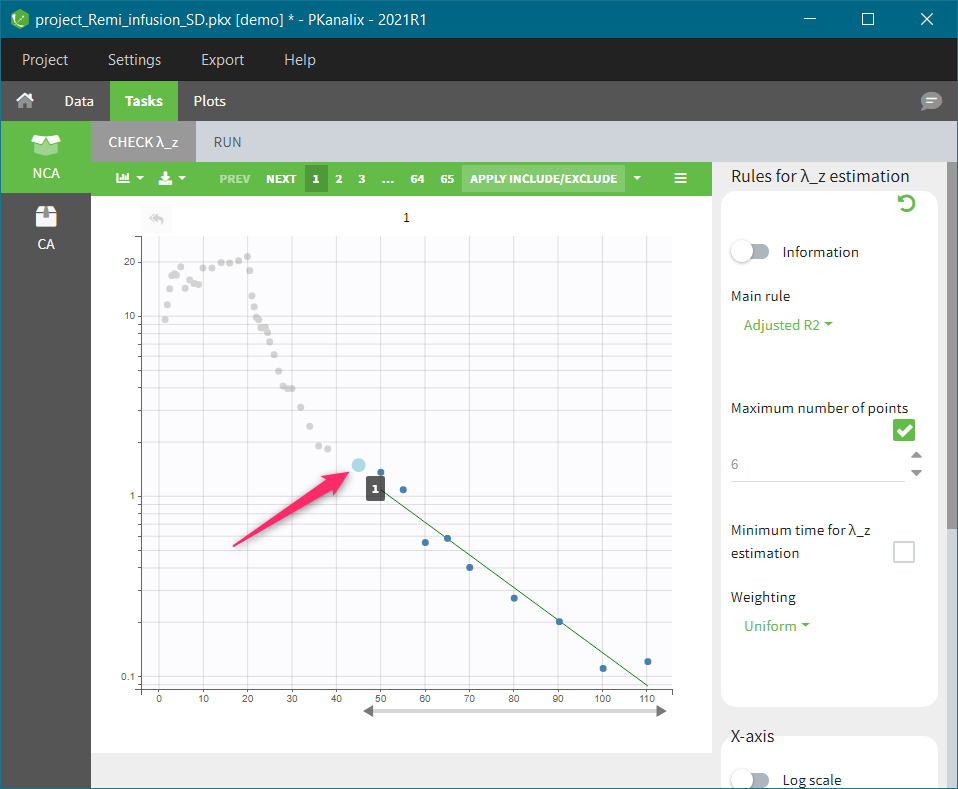

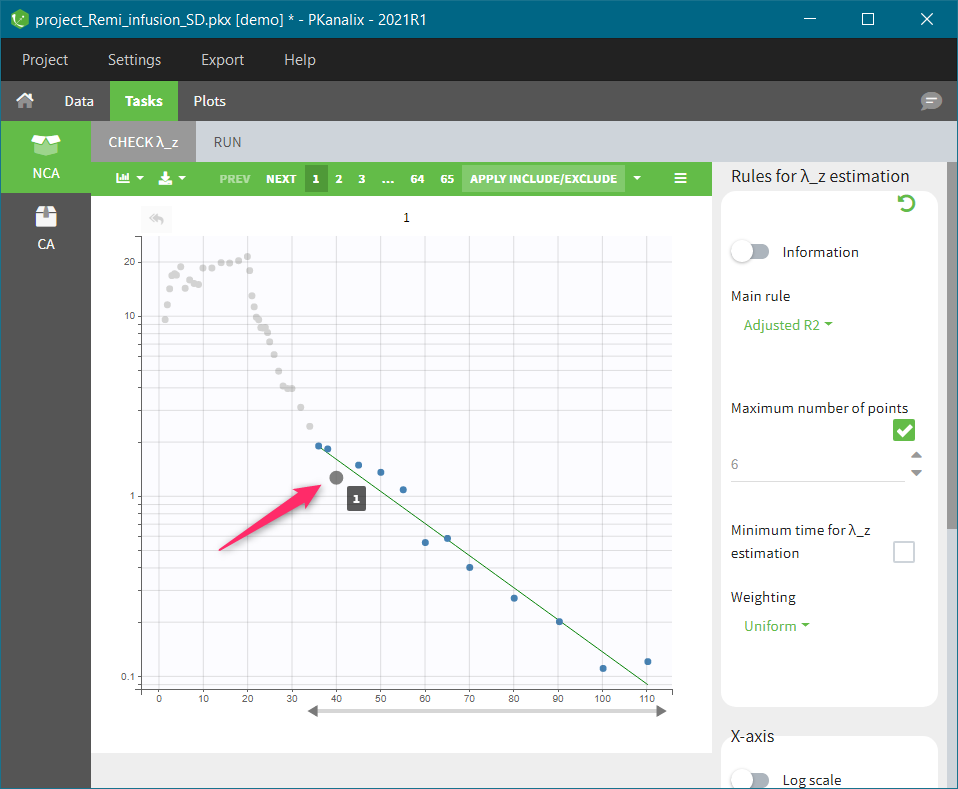

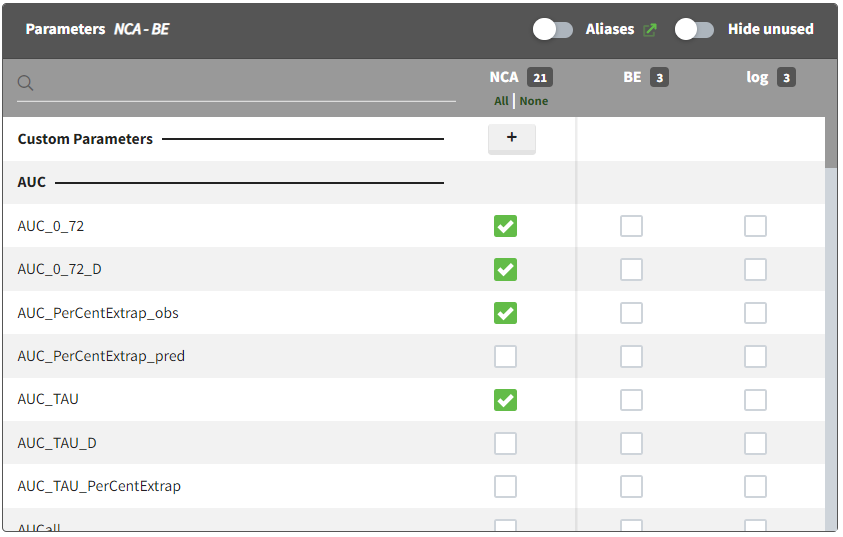

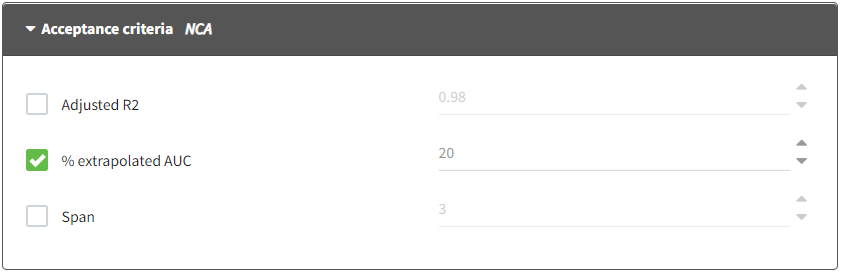

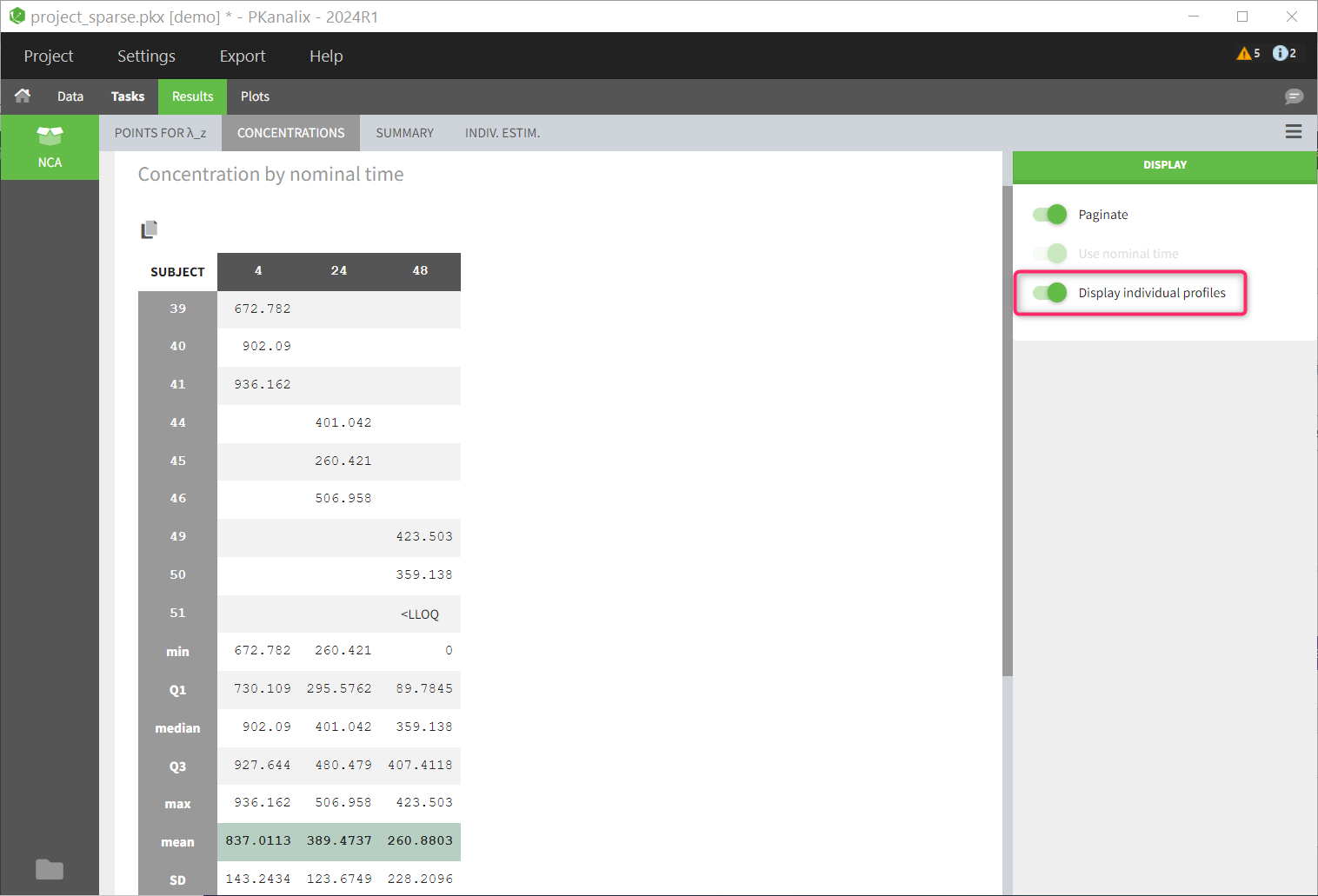

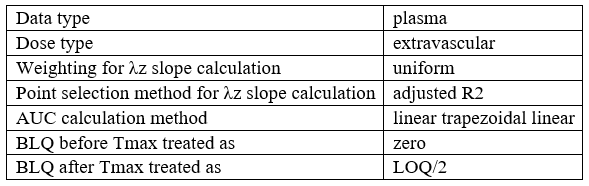

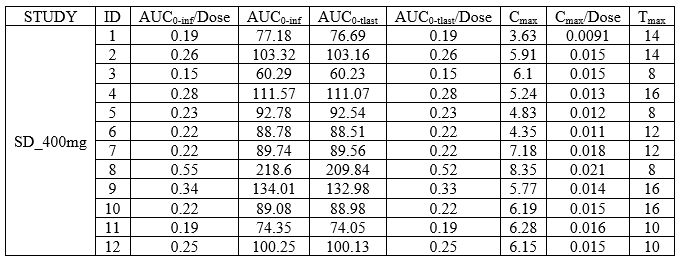

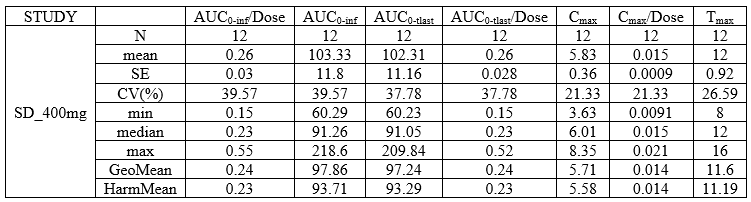

Non-compartmental analysis: This task consists of defining rules for the calculation of \(\lambda_z\) (slope of the terminal elimination phase), which is needed to compute all the NCA parameters. The rules can be defined either globally via general rules or manually for each individual: with the interactive plots, the user selects or removes points in the \(\lambda_z\) calculation.

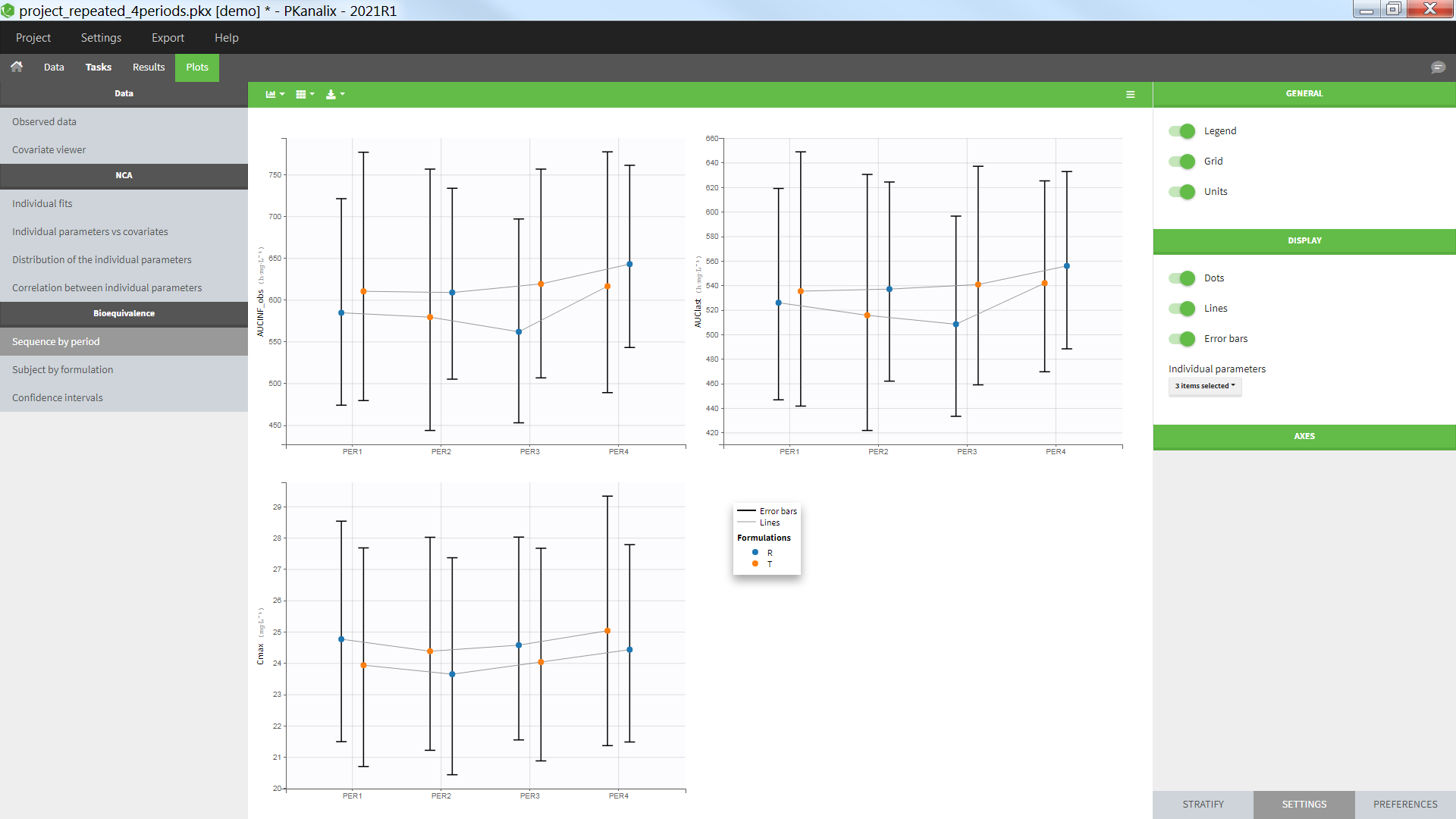

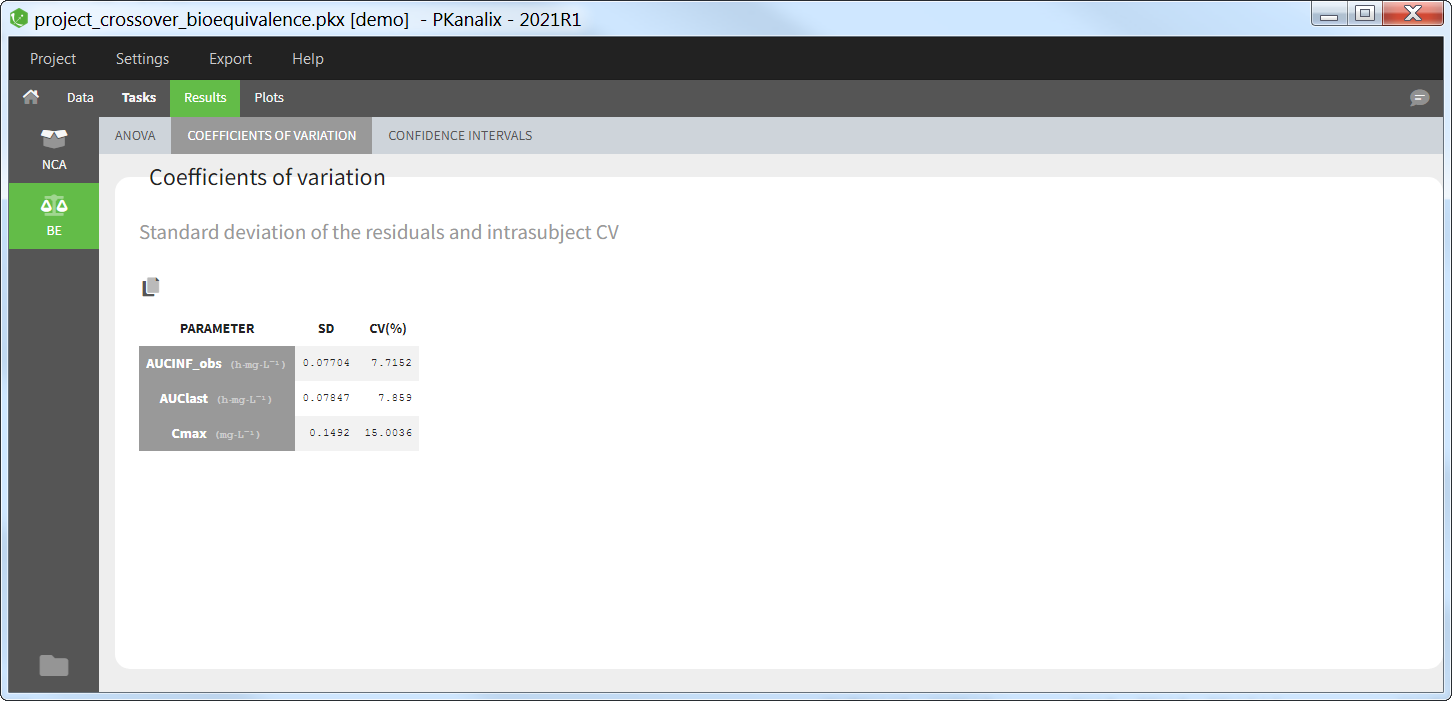

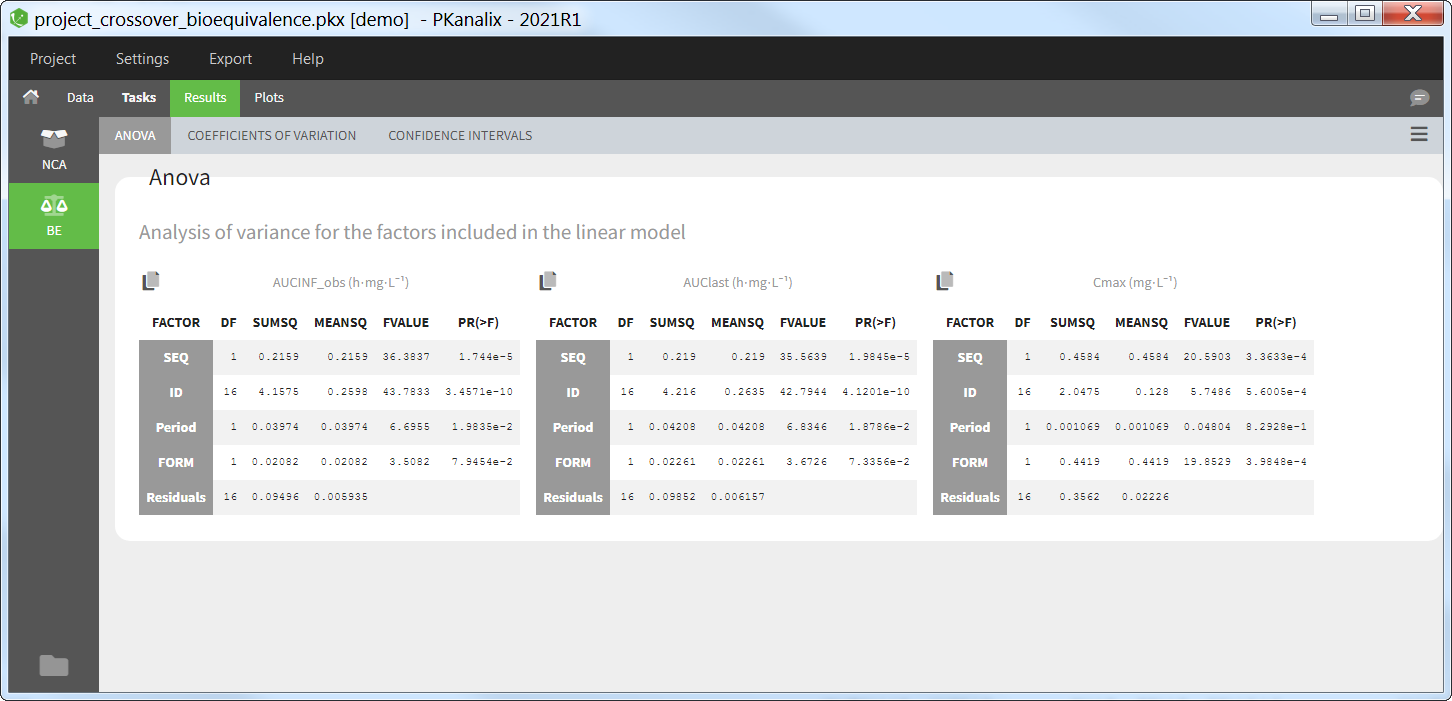

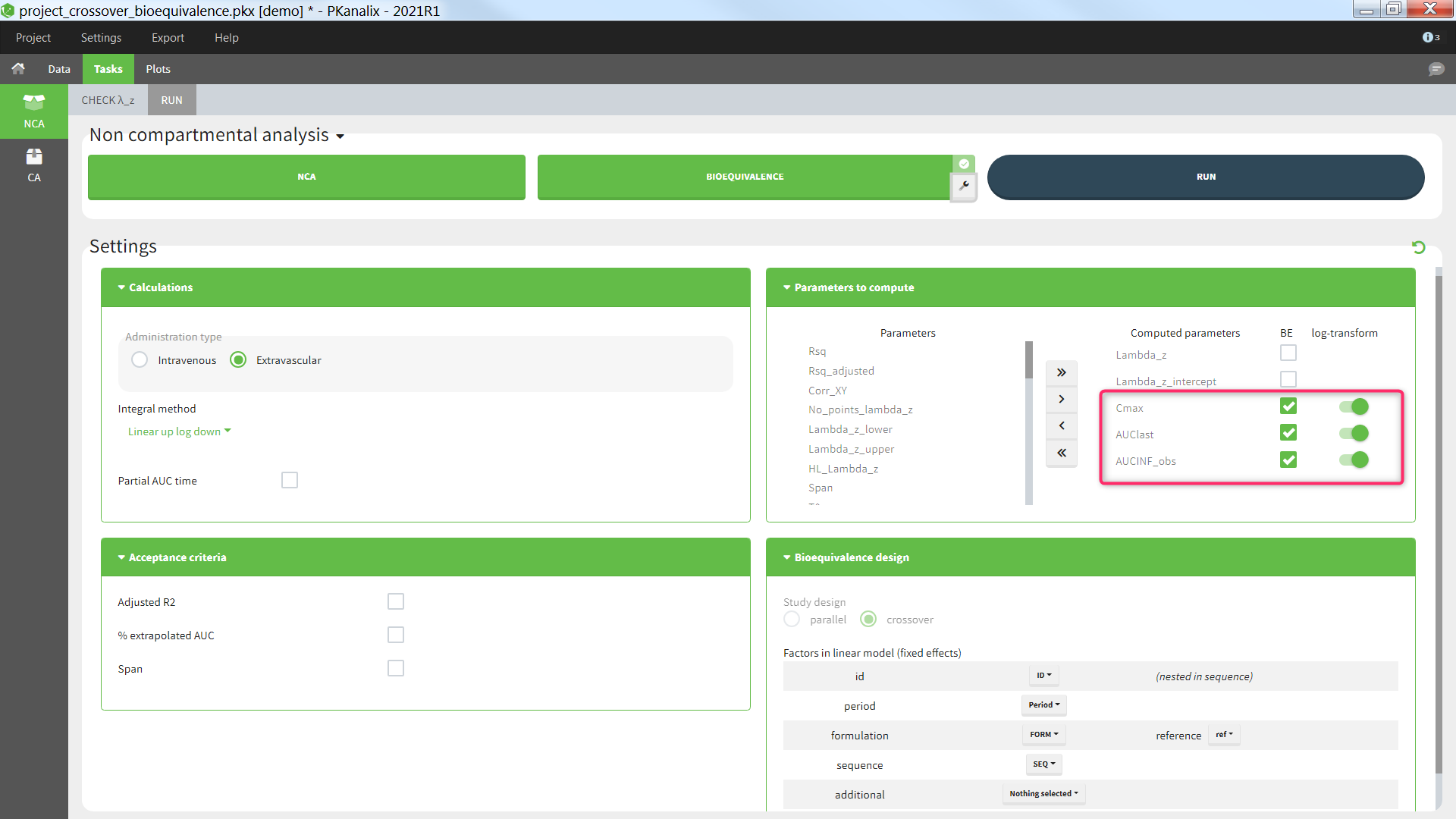

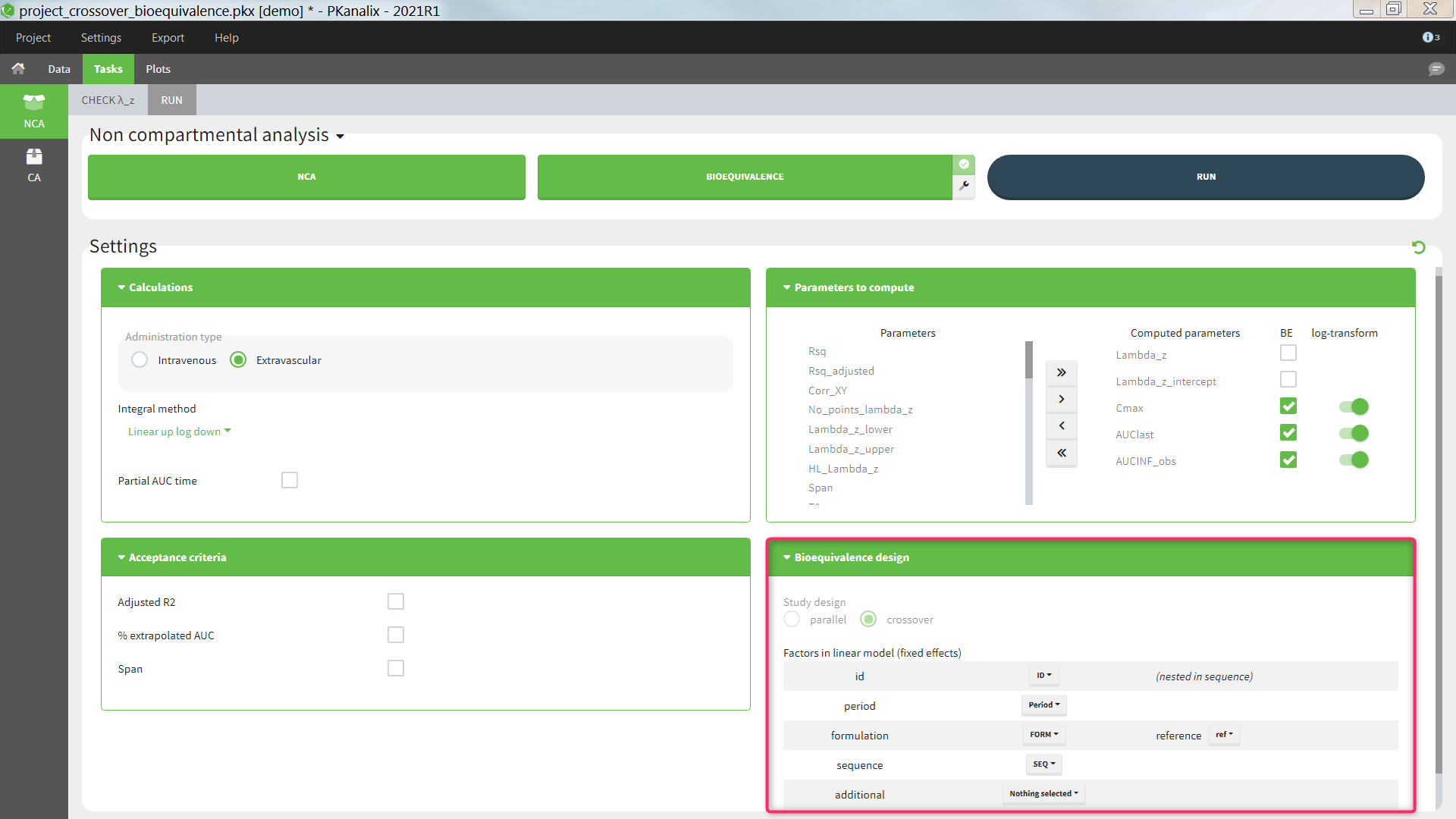

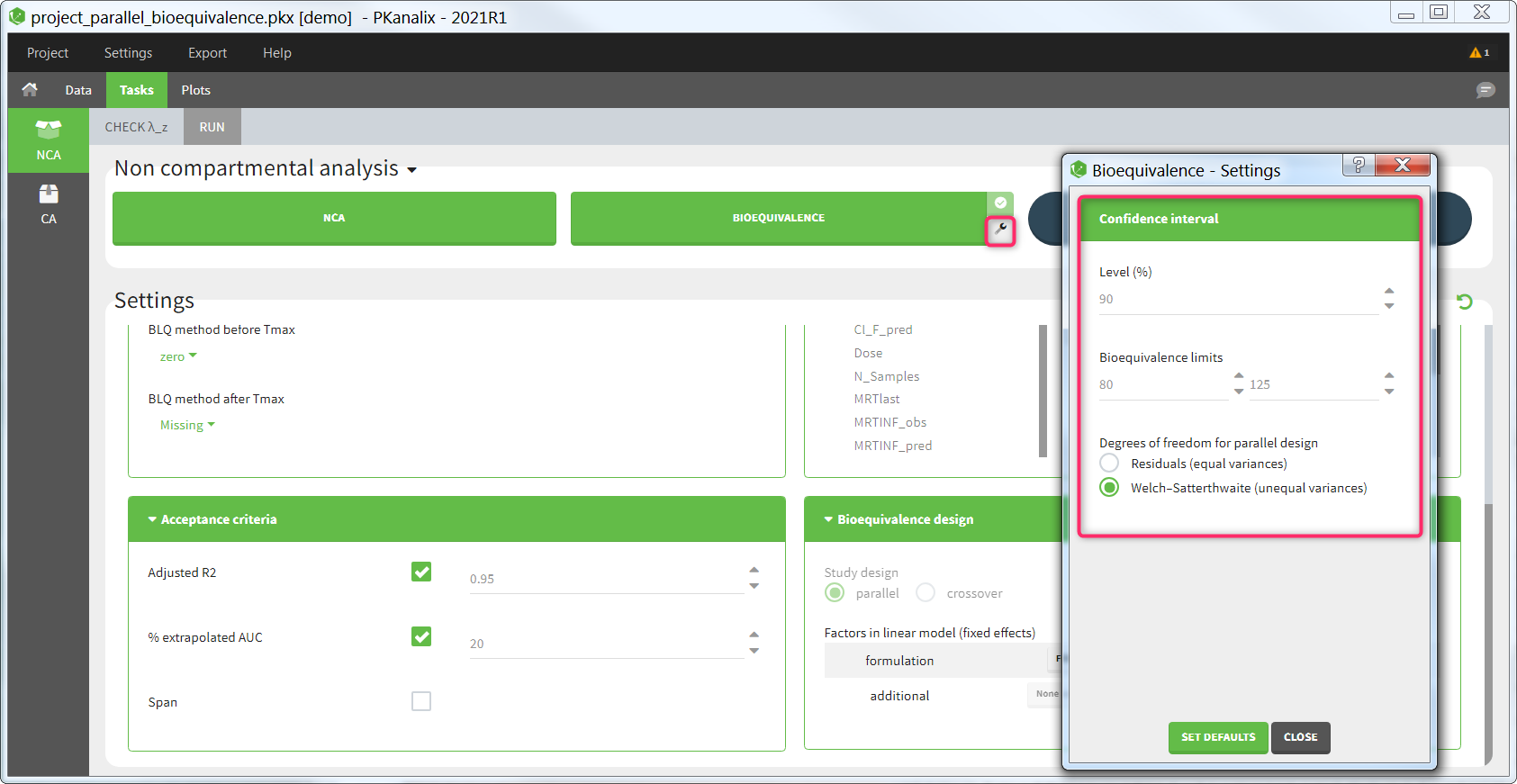

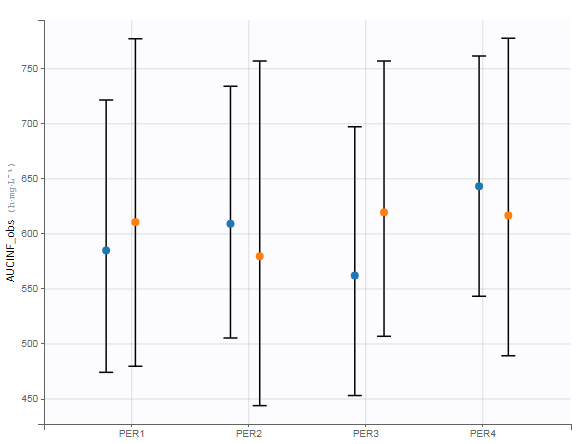

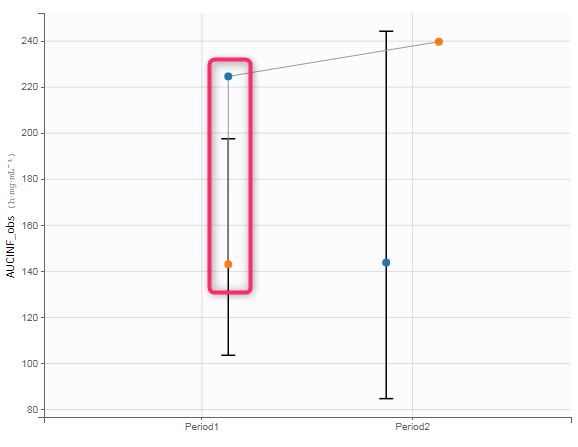

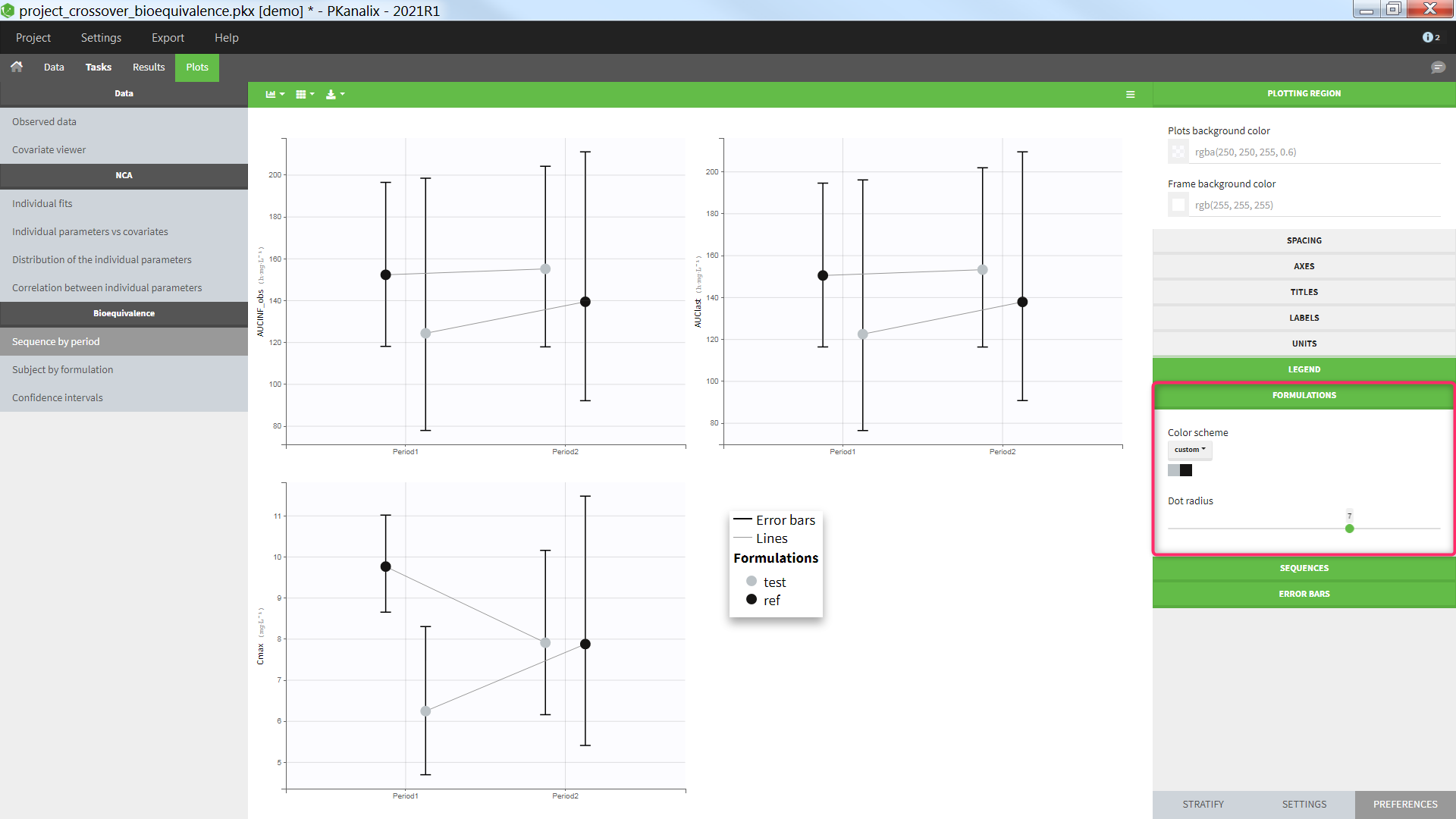

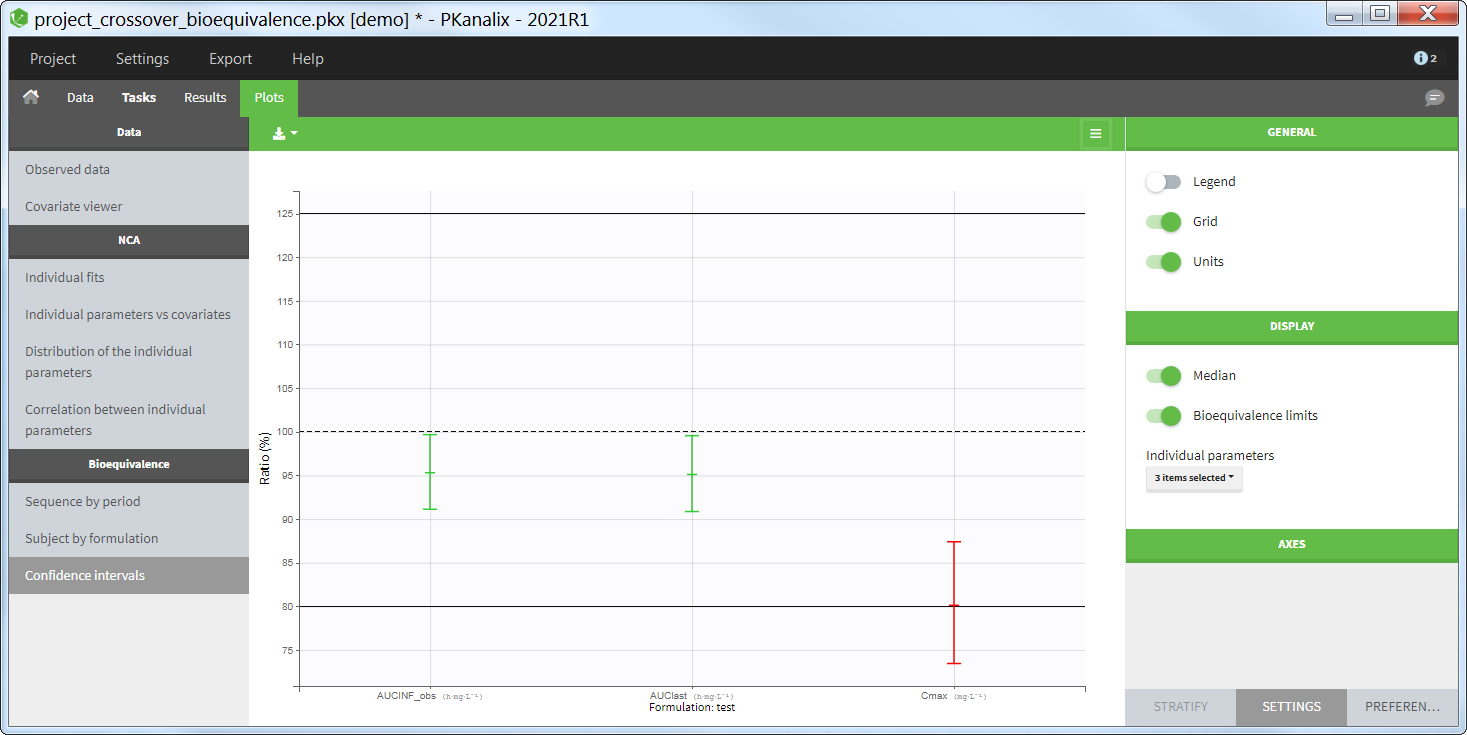

Bioequivalence: The average NCA parameters obtained for different groups (e.g. a test and a reference formulation) can be compared using the Bioequivalence task. The linear model definition contains one or several fixed effects selected in an integrated module. The task produces a confidence interval that is compared to the predefined BE limits and automatically displayed in intuitive tables and plots.

Compartmental analysis: This task estimates the parameters of a pharmacokinetic model for each individual. The model can be custom or based on one of the user-friendly libraries. An automatic initialization method improves the convergence of the parameters for each individual.
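To make the role of \(\lambda_z\) concrete, here is a minimal sketch of a log-linear regression over a set of terminal-phase points. It assumes the usual convention that \(\lambda_z\) is reported as the negative of the slope of ln(concentration) versus time. The function name and the sample points are hypothetical, and the sketch does not reproduce the point-selection rules of PKanalix.

```python
import math


def lambda_z(times, concs):
    """Least-squares slope of ln(conc) versus time, with the sign flipped.

    Illustrative only: the points are assumed to be already selected and
    strictly positive.
    """
    if len(times) != len(concs) or len(times) < 2:
        raise ValueError("need at least two (time, concentration) pairs")
    logs = [math.log(c) for c in concs]
    n = len(times)
    t_mean = sum(times) / n
    y_mean = sum(logs) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, logs))
    return -sxy / sxx


# Hypothetical terminal points generated from C(t) = 10 * exp(-0.2 * t)
terminal_times = [6.0, 8.0, 12.0]
terminal_concs = [10.0 * math.exp(-0.2 * t) for t in terminal_times]
estimate = lambda_z(terminal_times, terminal_concs)
```

With noise-free points such as these, the estimate recovers the rate constant used to generate them.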

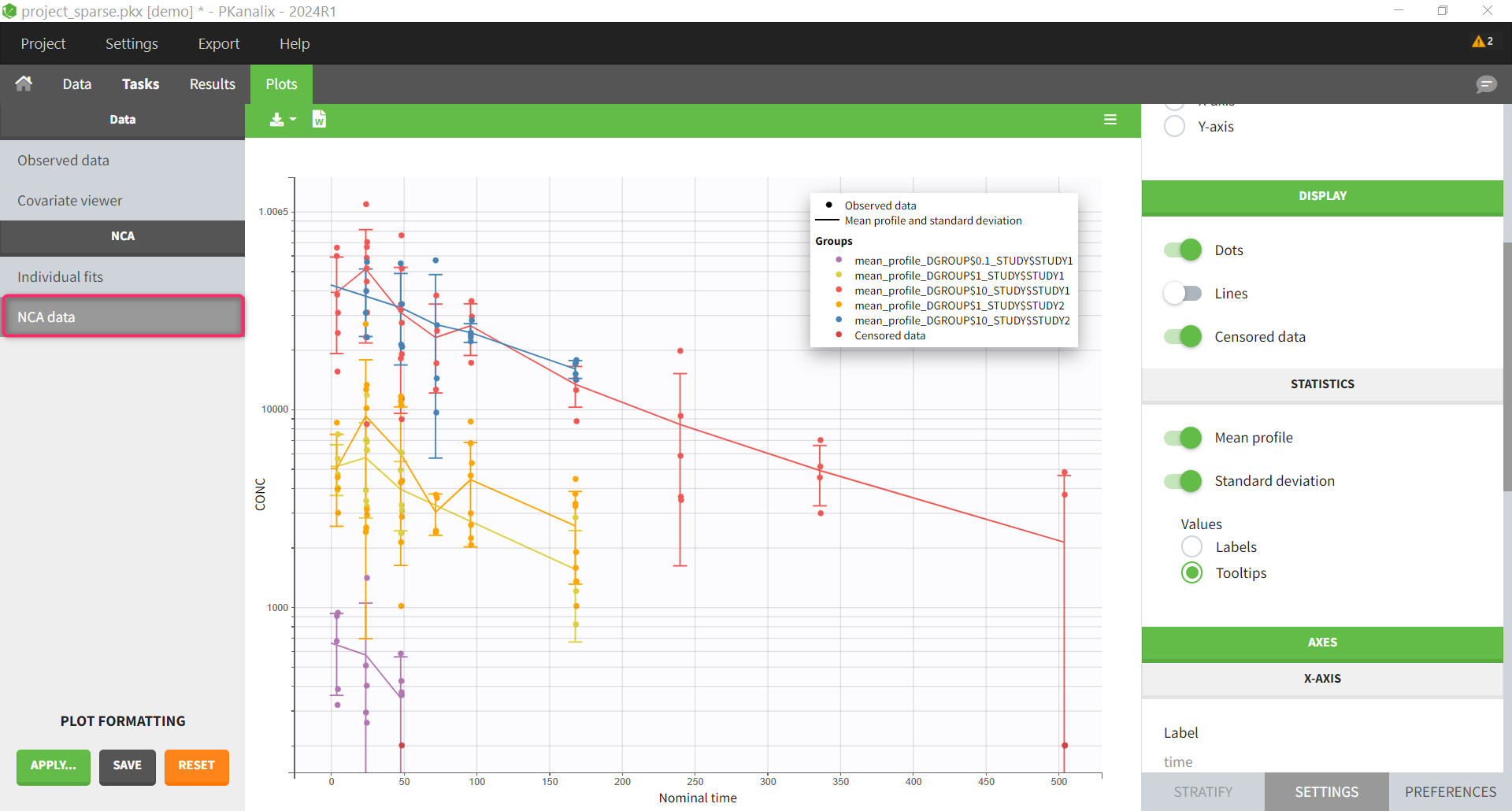

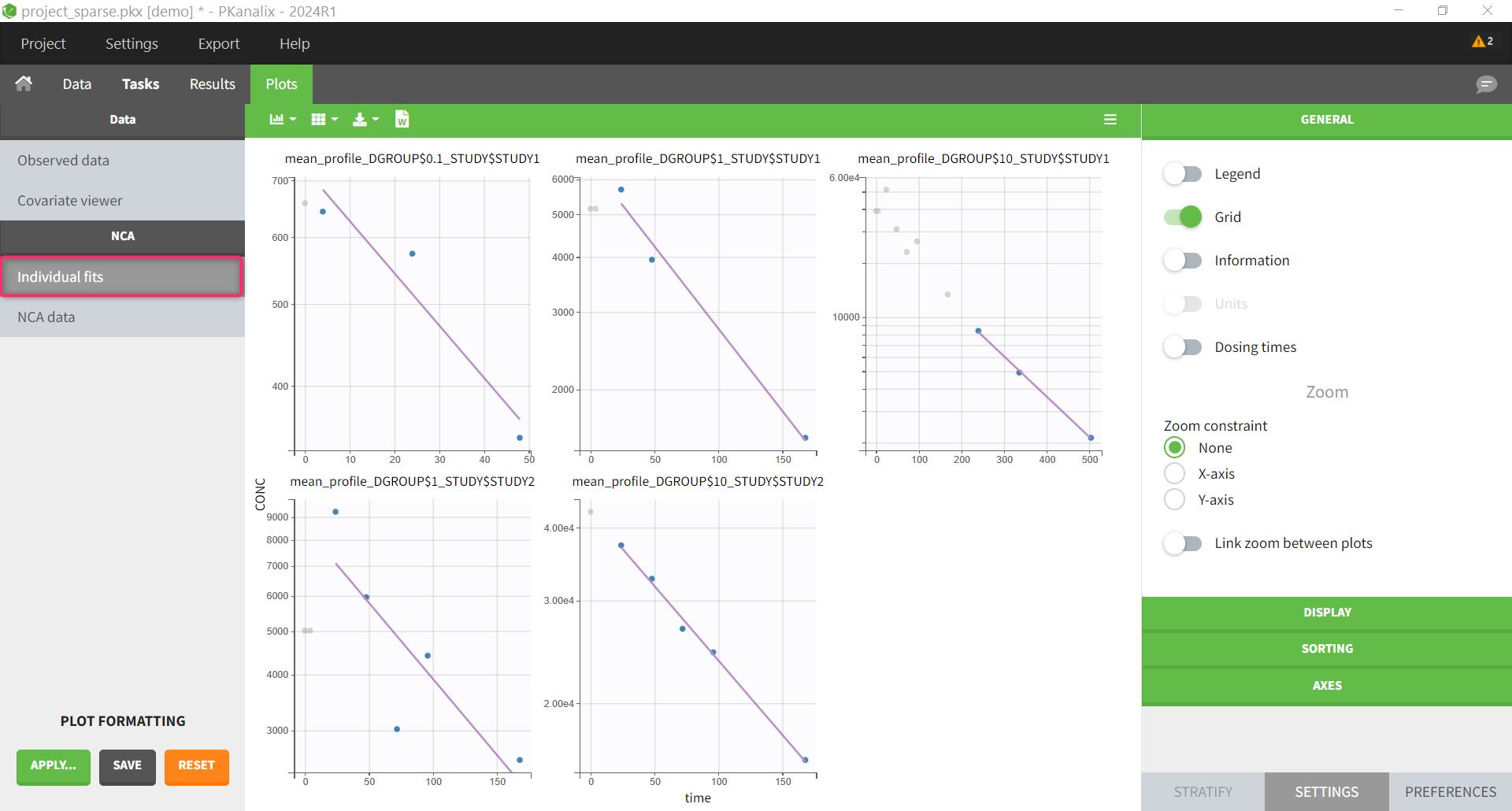

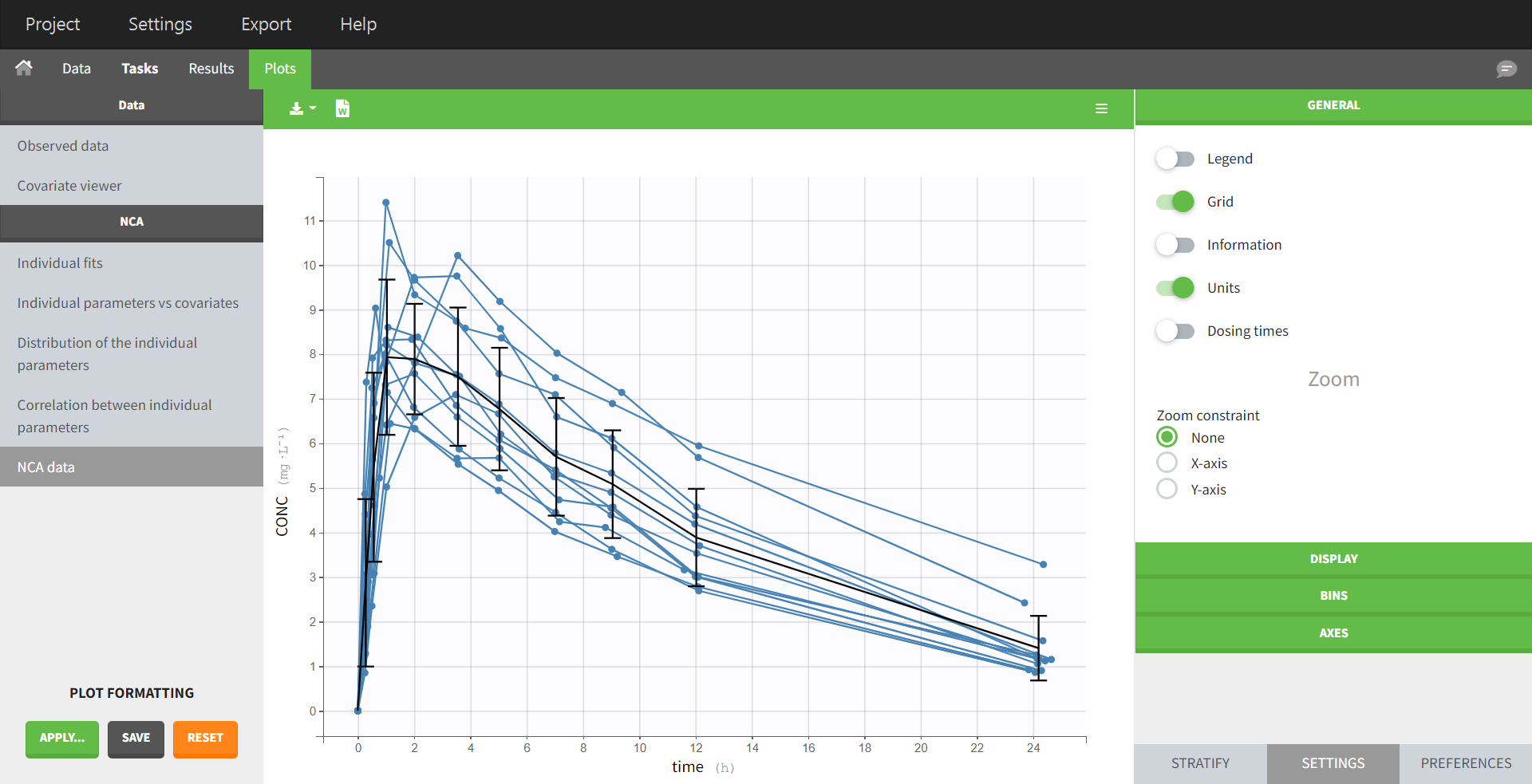

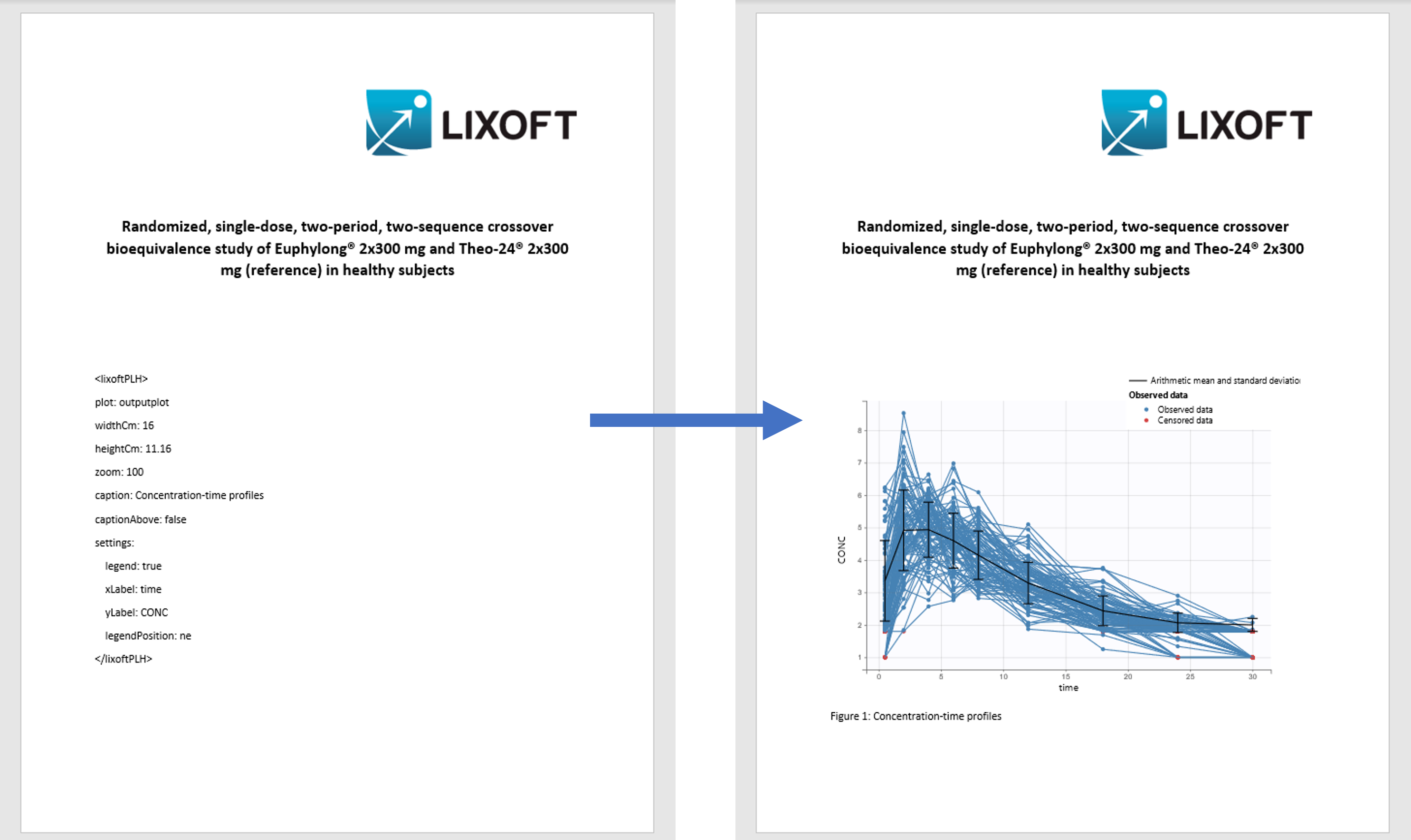

All the NCA, BE and/or CA outputs are automatically displayed in sortable tables in the Results tab. Moreover, they are exported to the result folder in an R-compatible format. Interactive plots give immediate feedback and help to better interpret the results.

PKanalix can be used not only via the user interface, but also from R with a dedicated R package (detailed here). All the actions performed in the interface have equivalent R functions. This is particularly convenient for reproducibility or batch jobs.

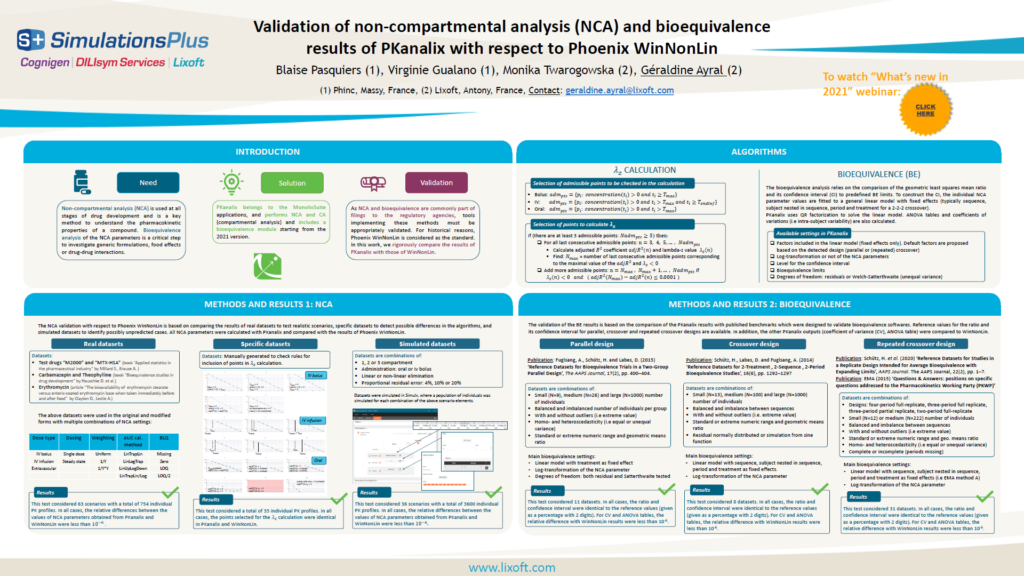

The results of the NCA and bioequivalence calculations have been compared on a large number of datasets to the results of WinNonlin and to published results obtained with SAS. All results were identical. See the poster below for more details.

2.Data

2.1.Defining a dataset

To start a new PKanalix project, you need to define a dataset by loading a file in the Data tab.

Supported file types: .txt, .csv, and .tsv files. Starting with version 2024, Excel and SAS file types are also supported: .xls, .xlsx, .sas7bdat, and .xpt.

The data set format expected in the Data tab is the same as for the entire MonolixSuite, to allow smooth transitions between applications. The columns available in this format and example datasets are detailed on this page. Briefly:

- Each line corresponds to one individual and one time point

- Each line can include a single measurement (also called observation), or a dose amount (or both a measurement and a dose amount)

- Dosing information should be indicated for each individual, even if it is identical for all.

Your dataset may not be originally in this format, and you may want to add information on dose amounts, limits of quantification, units, or filter part of the dataset. To do so, you should proceed in this order:

- 1) Formatting: If needed, format your data first by loading the dataset in the Data Formatting tab. Briefly, it allows you to:

- deal with several header lines

- merge several observation columns into one

- add censoring information based on tags in the observation column

- add treatment information manually or from external sources

- add more columns based on another file

or

- 1) If the data is already in the right format, load it directly in the Data tab (otherwise use the formatted dataset created by data formatting). If the dataset violates a formatting rule, it will not be accepted, but error messages will guide you to what is missing and could be added by data formatting.

- 2) Labeling: label the columns not recognized automatically to indicate their type and click on ACCEPT.

- 3) Units: If you want to work with units, indicate the units in the data and the ones you prefer to use inside the Data tab (if relevant)

- 4) Filters: If needed, filter your dataset to use only part of it in the Filters tab

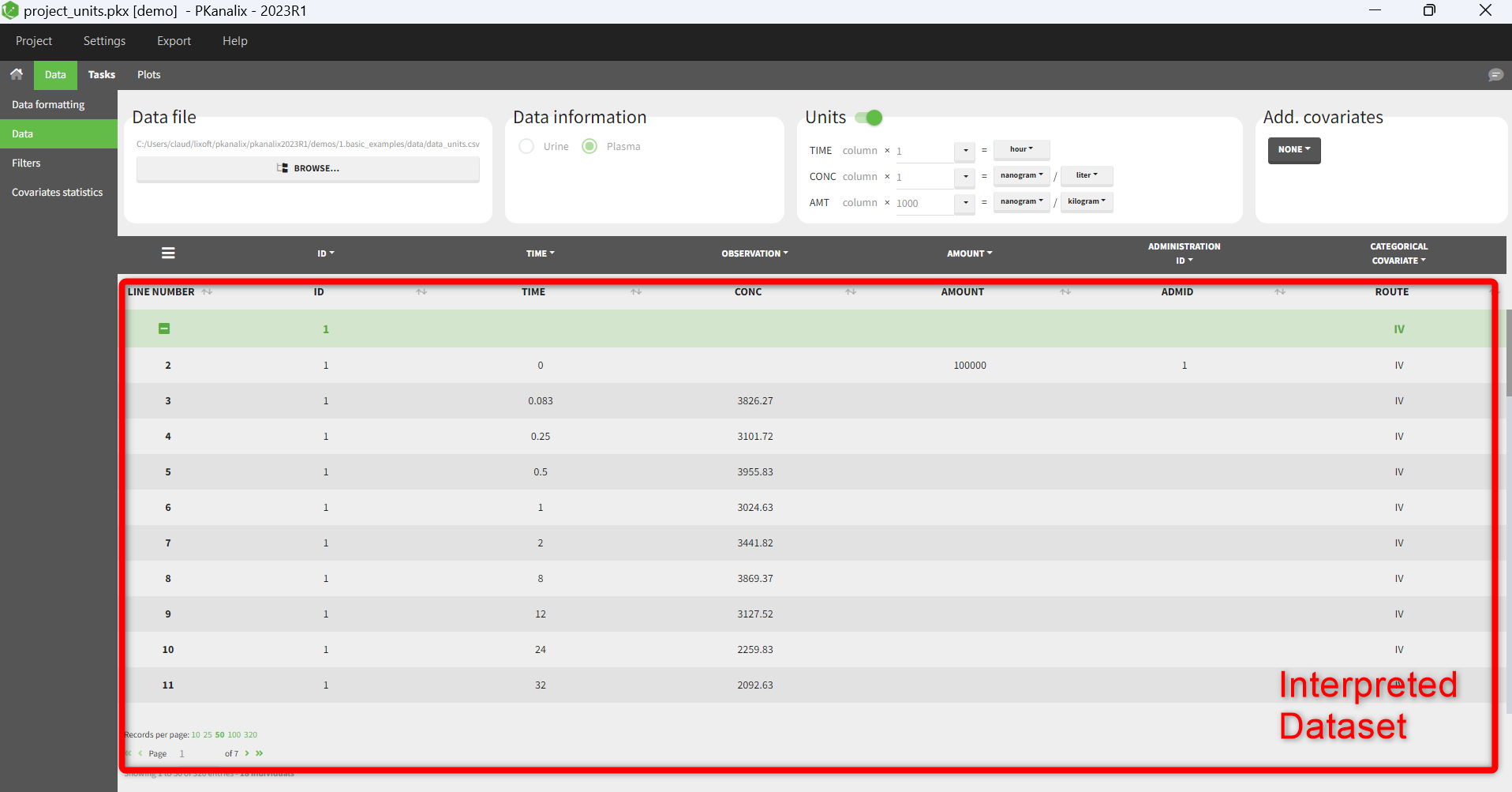

- 5) Explore: The interpreted dataset is displayed in Data, and Plots and covariate statistics are generated.

To use a dataset from a Monolix project, or to use simulations from a Simulx project, you can directly import or export the Monolix/Simulx project which will automatically define the dataset in the data tab.

Labeling

The column type suggested automatically by PKanalix based on the headers in the data can be customized in the preferences. Clicking on Settings>Preferences opens the following window.

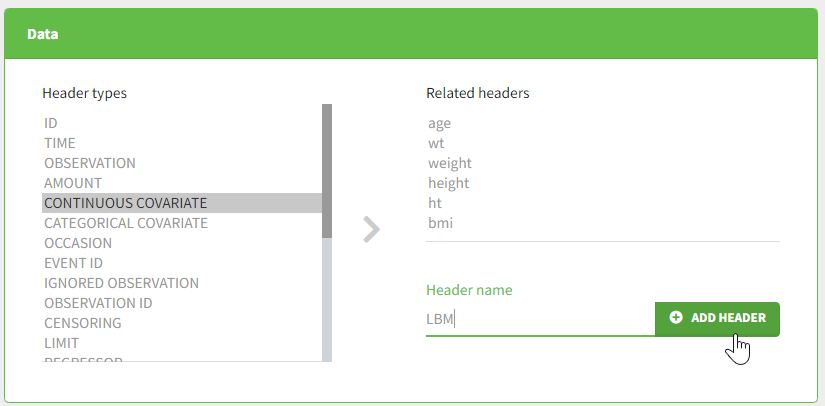

In the DATA frame, you can add or remove preferences for each column.

To remove a preference, double-click on the preference you would like to remove. A confirmation window will appear.

To add a preference, click on the header type you consider, add a name in the header name and click on “ADD HEADER” as on the following figure.

Notice that all the preferences are shared between Monolix, Datxplore, and PKanalix.

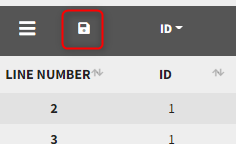

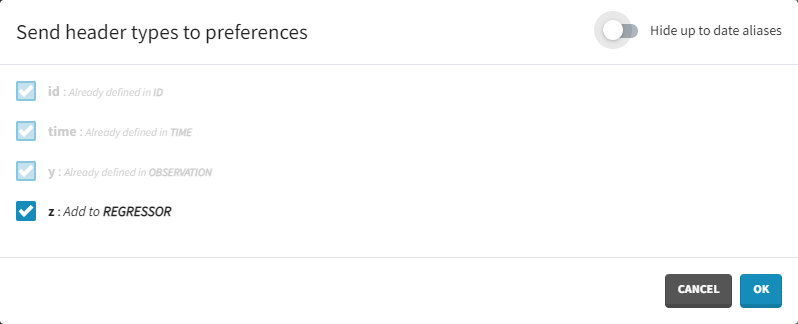

Starting from the version 2024, it is also possible to update the preferences with the columns tagged in the opened project, by clicking on the icon in the top left corner of the table:

Clicking on the icon will open a modal with the option to choose which of the tagged headers a user wants to add to preferences:

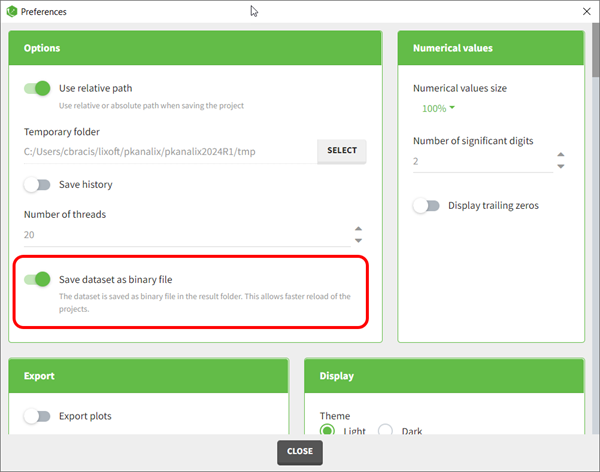

Dataset load times

Starting with the 2024 version, it is possible to improve project load times, especially for projects with large datasets, by saving the data as a binary file. This option is available in Settings>Preferences and saves a copy of the data file in binary format in the results folder. When reloading a project, the dataset is read from the binary file, which is faster. If the original dataset file has been modified (compared to the binary), a warning message appears, the binary dataset is not used and the original dataset file is loaded instead.

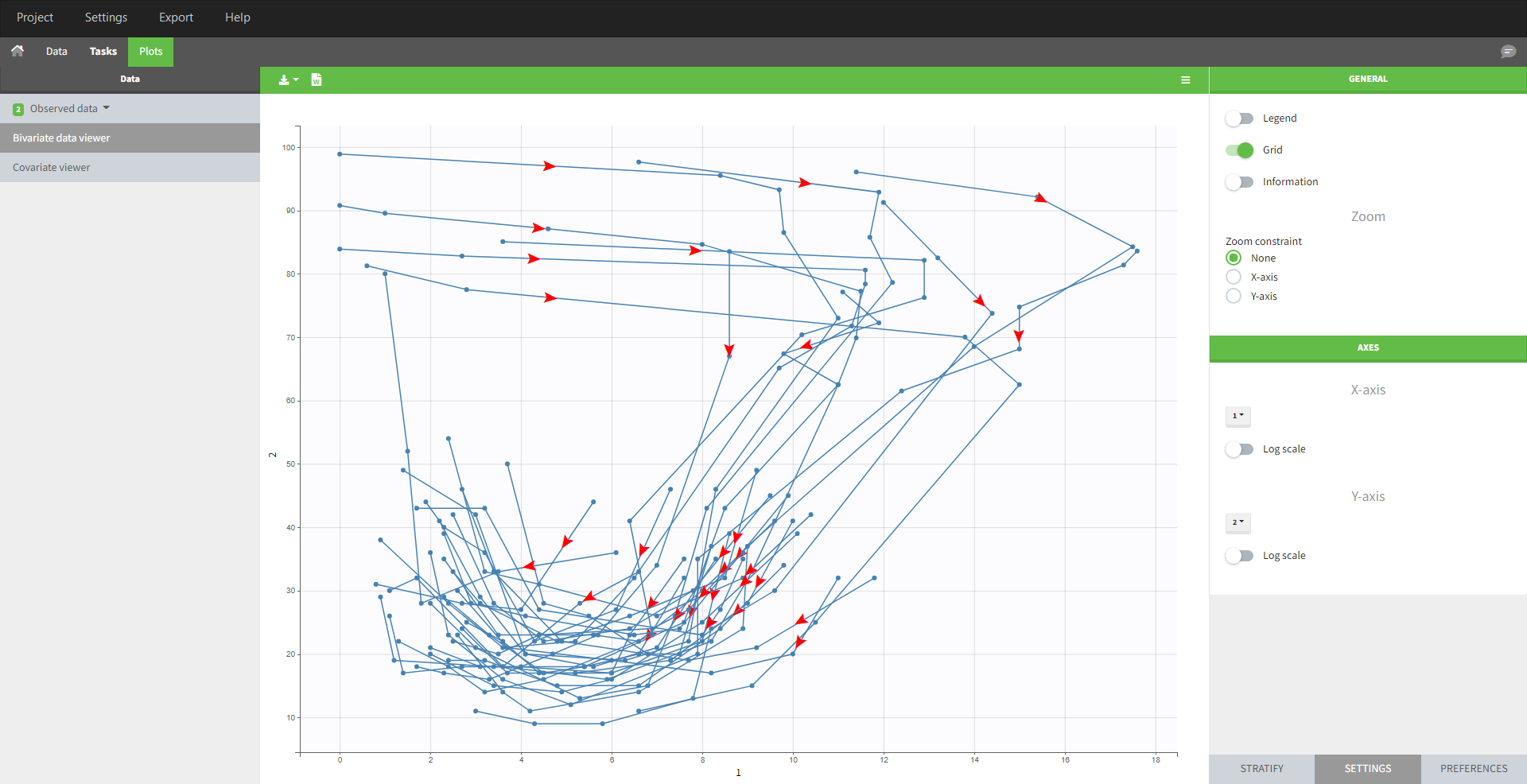

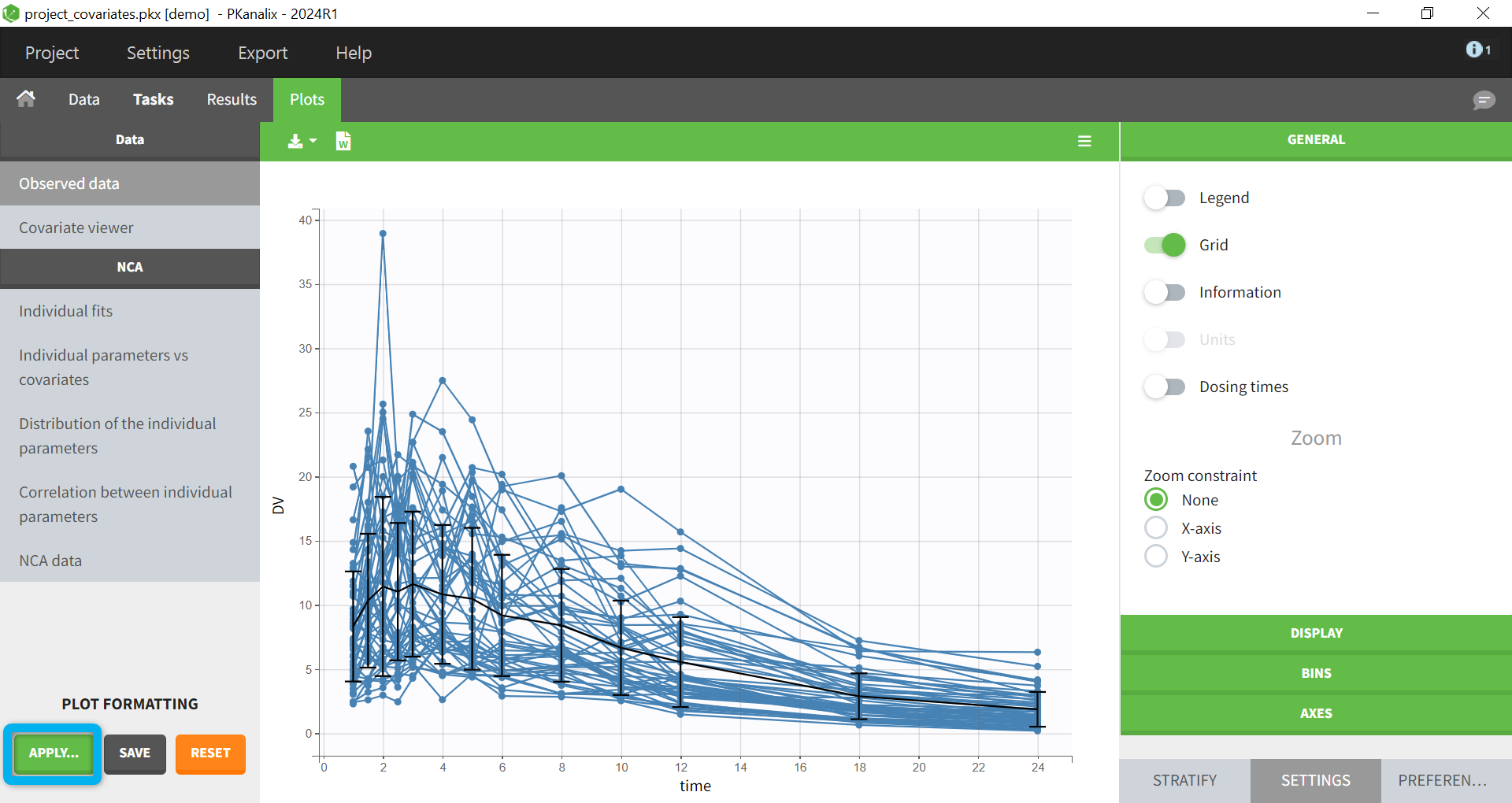

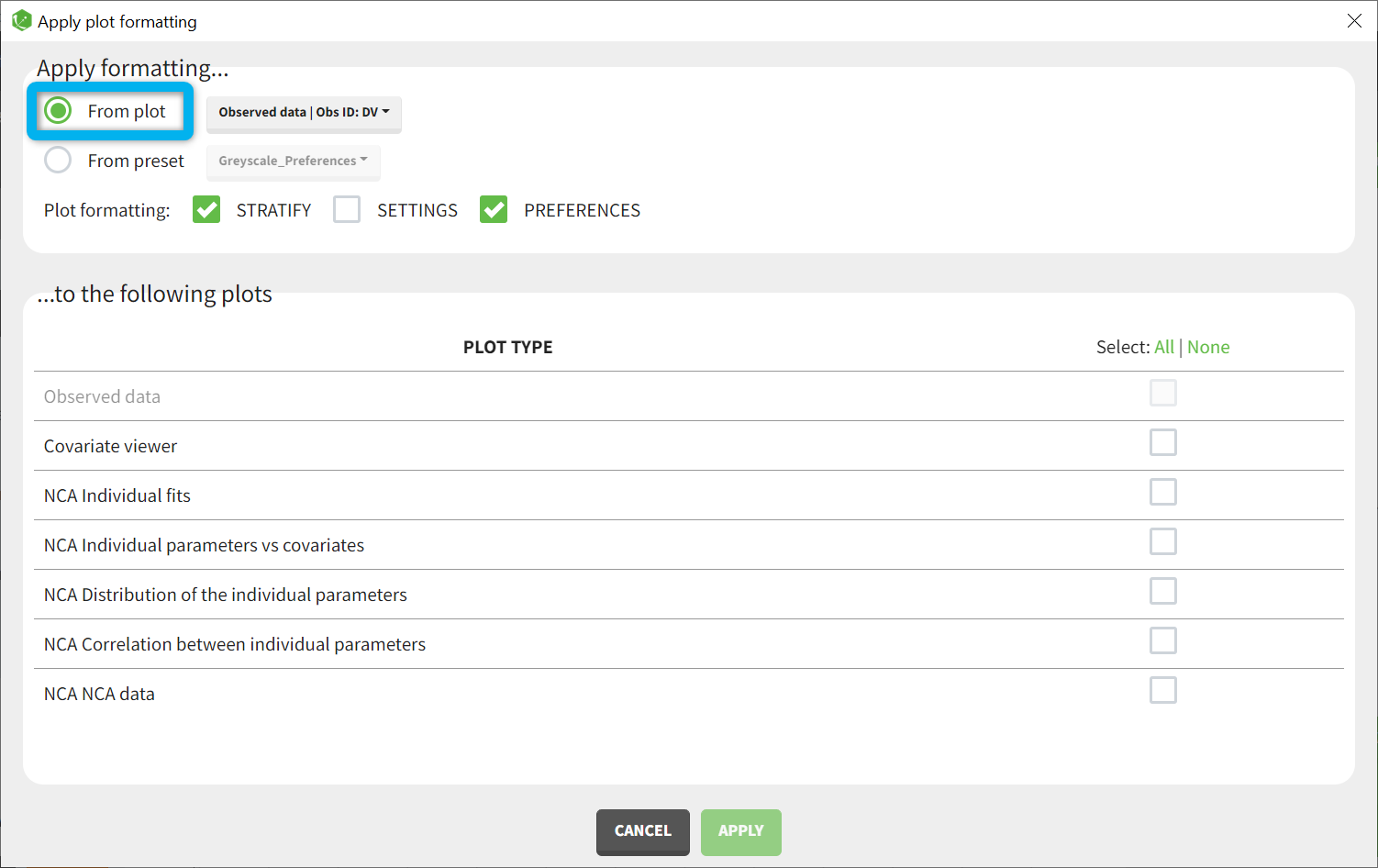

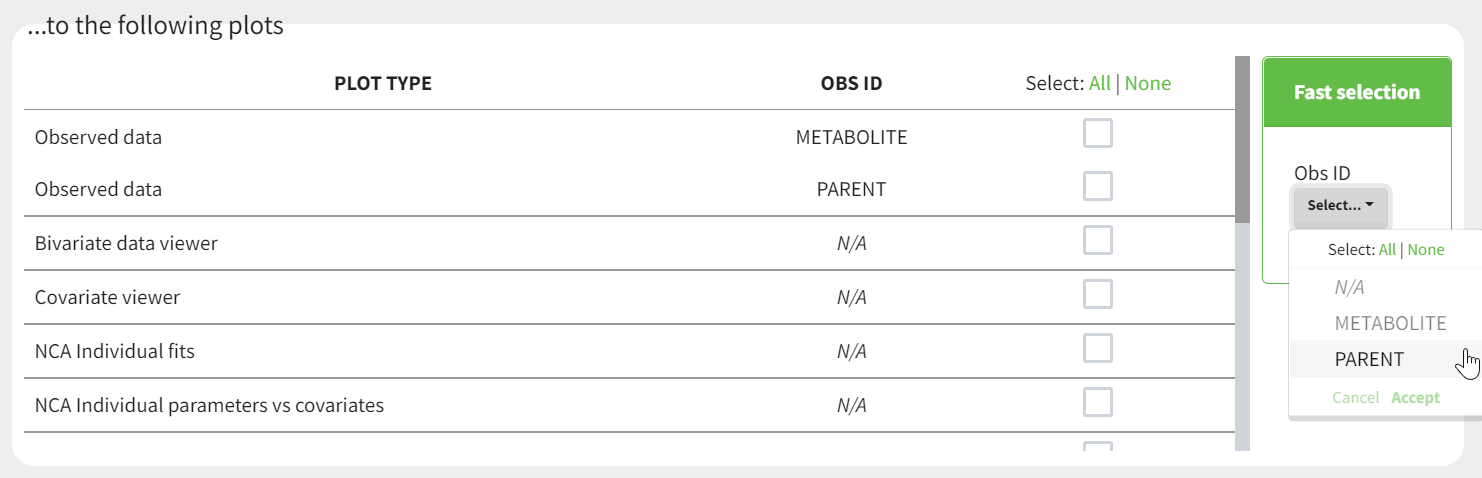

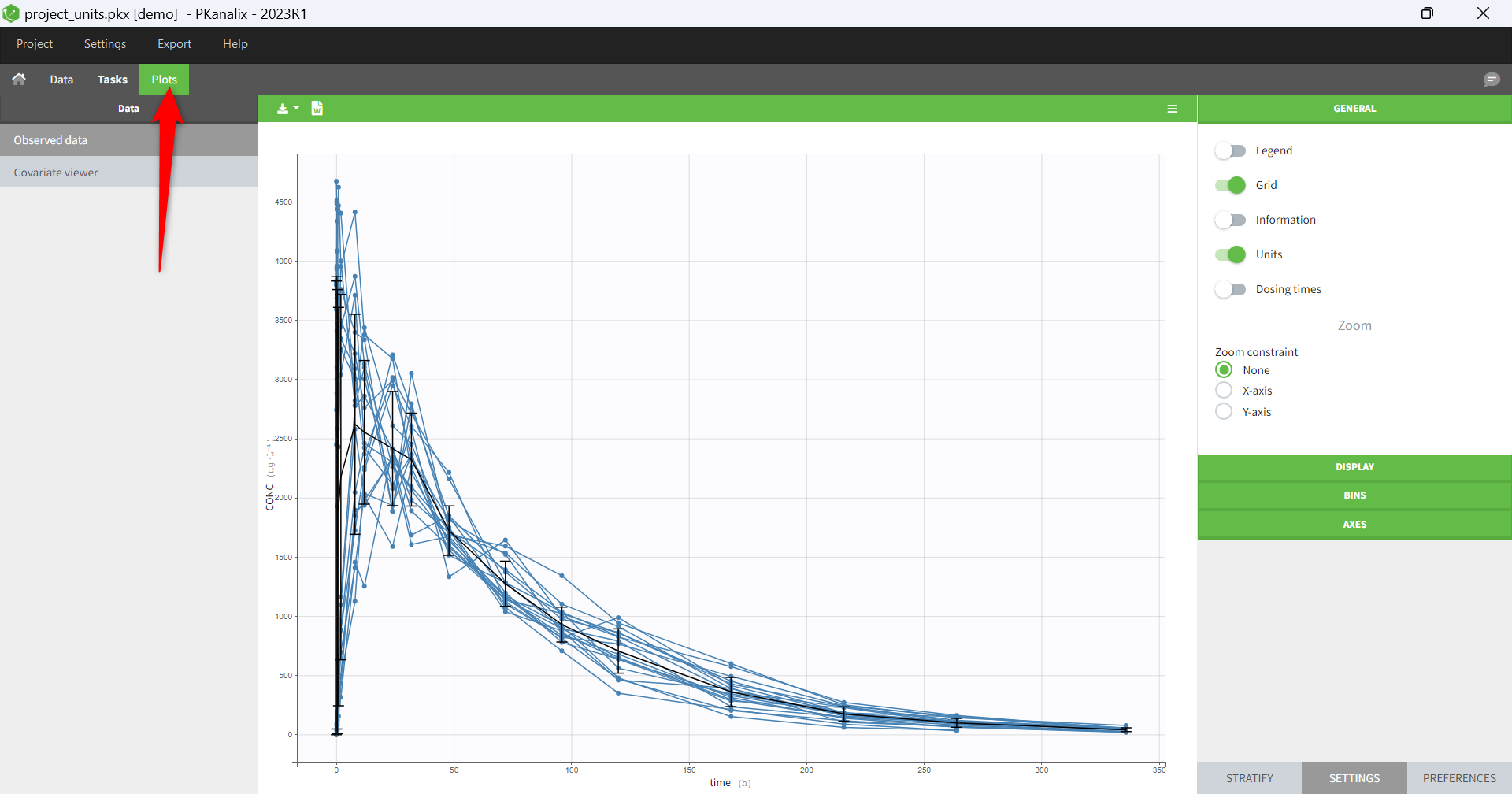

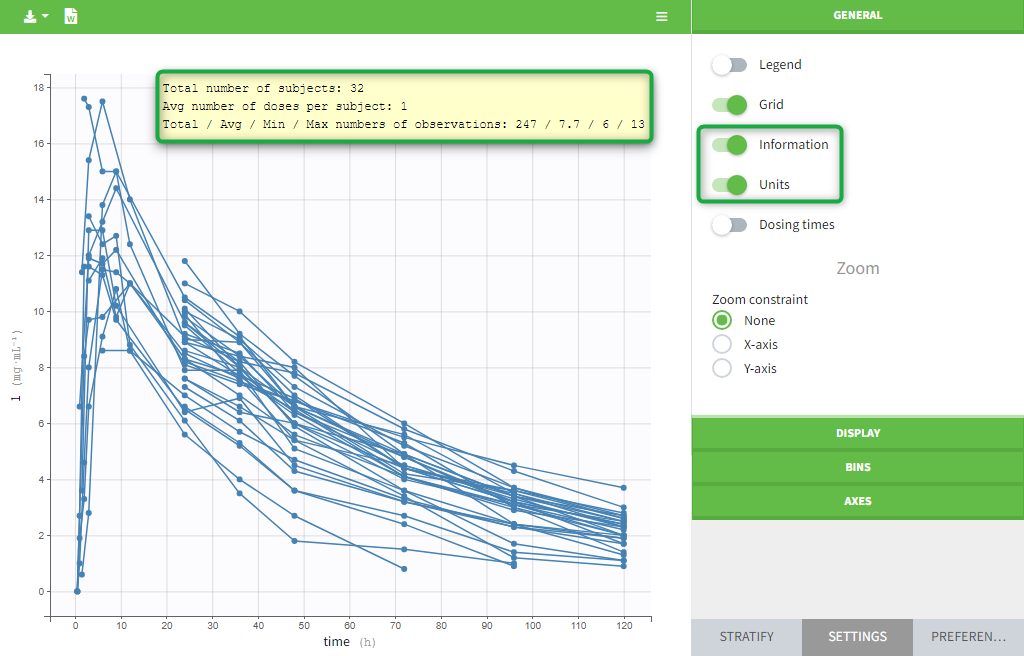

Resulting plots and tables to explore the data

Once the dataset is accepted:

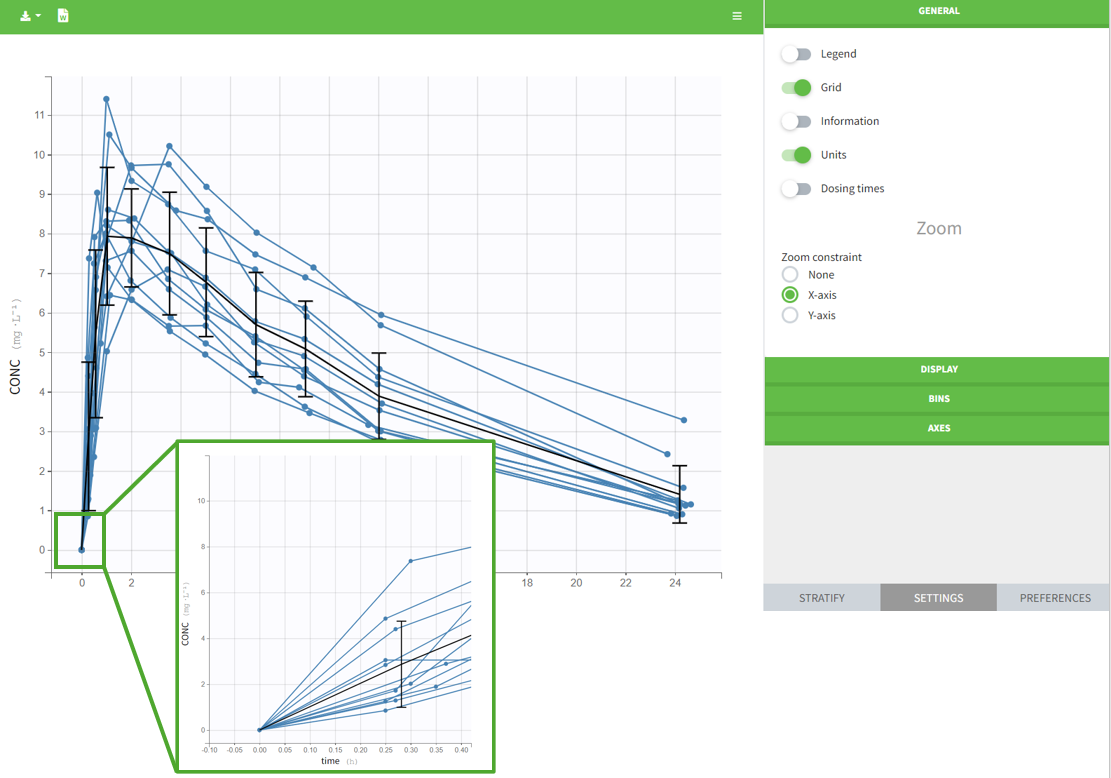

- Plots are automatically generated based on the interpreted dataset to help you proceed with a first data exploration before running any task.

- The interpreted dataset appears in the Data tab, which incorporates all changes after formatting, setting units, and filtering.

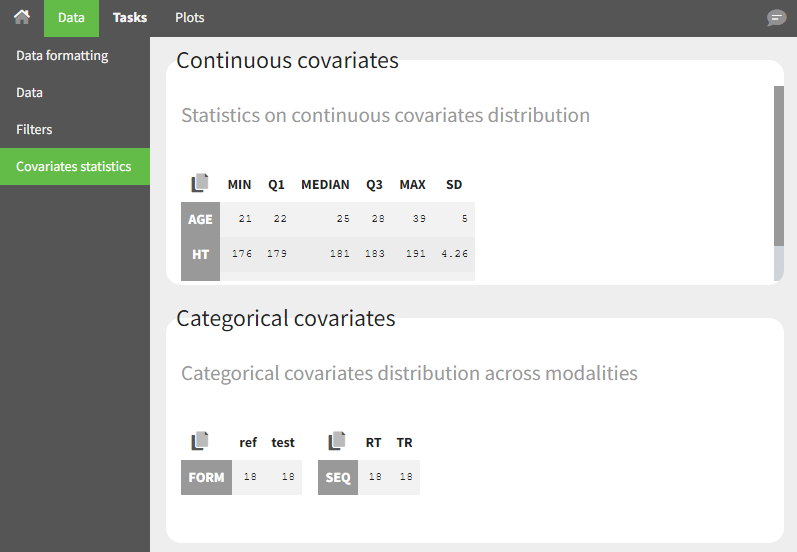

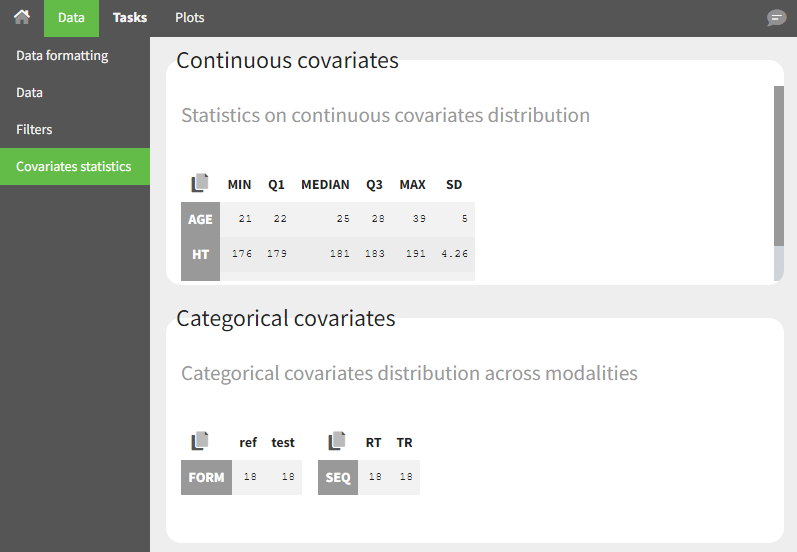

- Covariate Statistics appear in a section of the data tab.

2.2.Data format for NCA and CA analysis

In PKanalix, a dataset should be loaded in the Data tab to create a project. Once the dataset is accepted, it is possible to specify units and filter the dataset, so units and filtering information should not be included in the file loaded in the Data tab.

The data set format used in PKanalix is the same as for the entire MonolixSuite, to allow smooth transitions between applications. In this format:

- Each line corresponds to one individual and one time point.

- Each line can include a single measurement (also called observation), or a dose amount (or both a measurement and a dose amount).

- Dosing information should be indicated for each individual in a specific column, even if it is the same treatment for all individuals.

- Headers are free but there can be only one header line.

- Different types of information (dose, observation, covariate, etc) are recorded in different columns, which must be tagged with a column type (see below).

If your dataset is not in this format, in most cases, it is possible to format it in a few steps in the data formatting tab, to incorporate the missing information.

- Overview of most common column types

- Example datasets

- Plasma concentration data

- Steady-state data

- BLQ data

- Urine data

- Occasions (“Sort” variables)

- Covariates (“Carry” variables)

- Other useful column-types

- Description of all possible column types

Overview of most common column-types

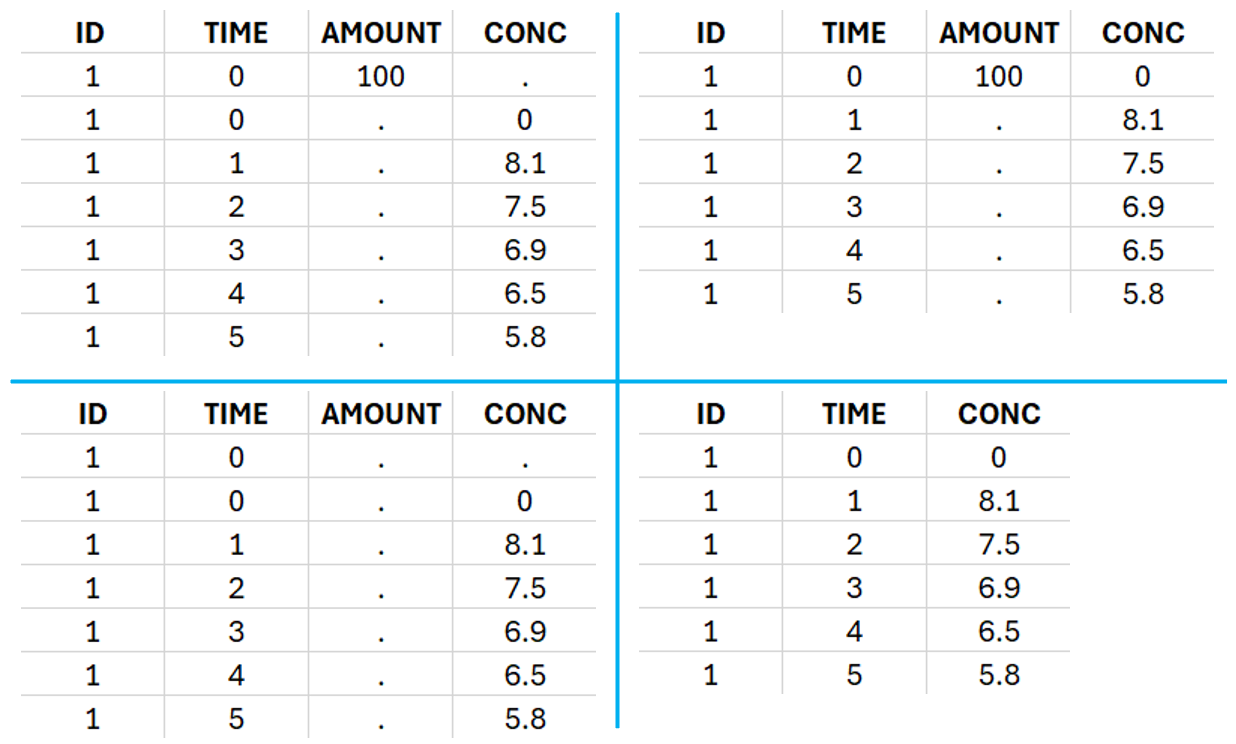

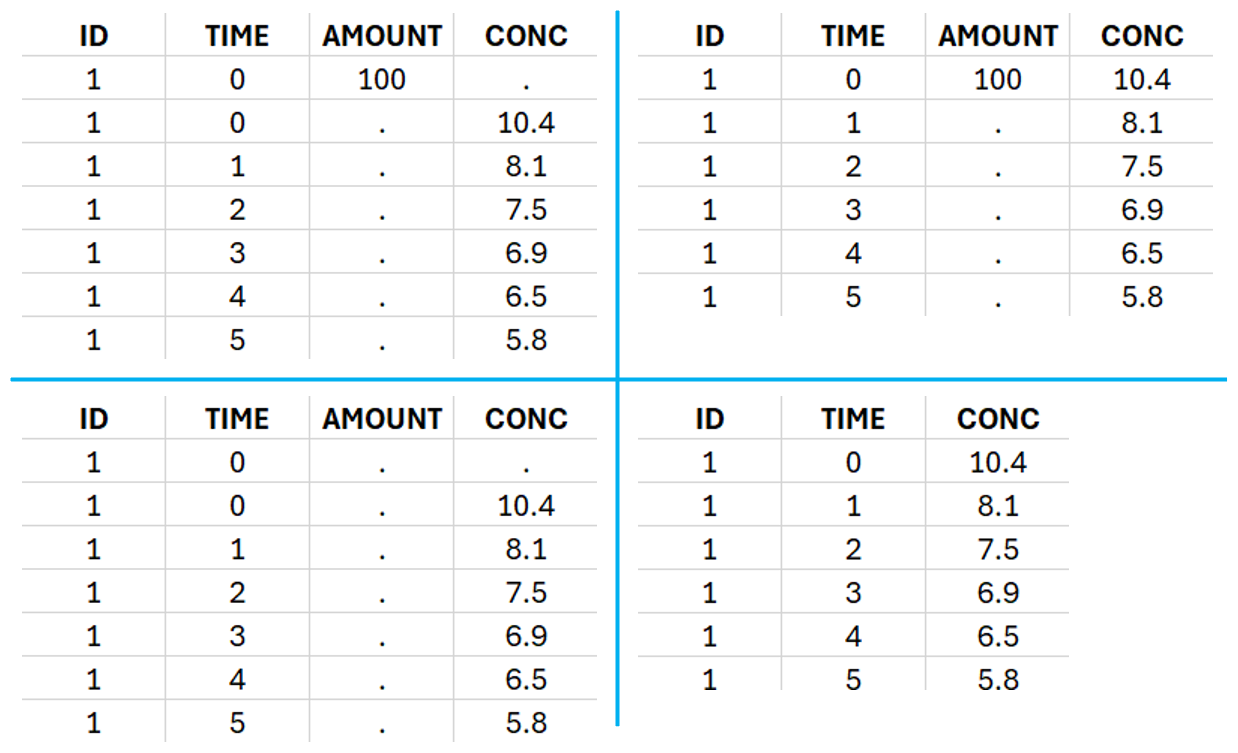

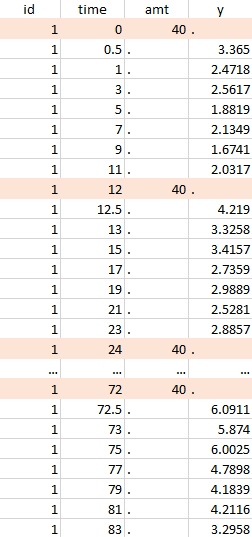

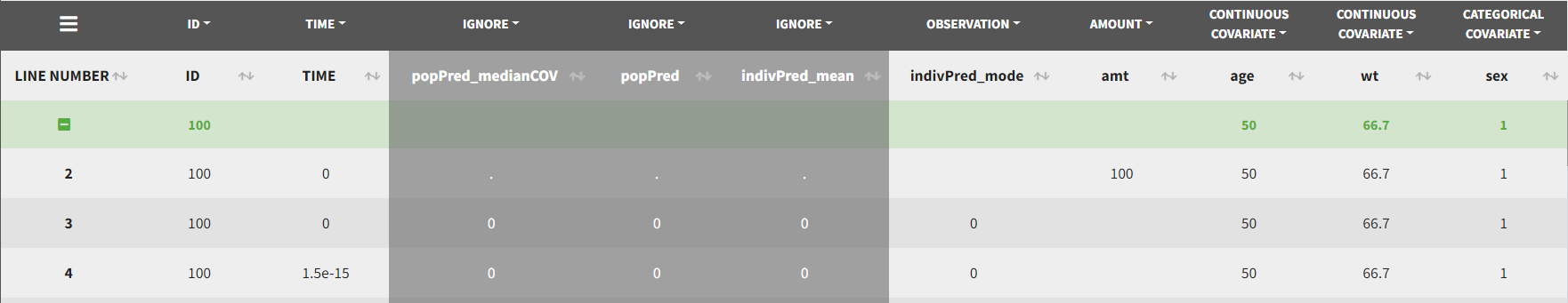

A data set typically contains only the following columns: ID (mandatory), TIME (mandatory), OBSERVATION (mandatory), AMOUNT (optional). The main rules for interpreting the dataset are:

- Cells that do not contain information (e.g. the AMOUNT column on a line recording a measurement) should have a dot.

- Headers are free but there can be only one header line and the columns must be assigned to one of the available column-types. The full list of column-types is available at the end of this page and a detailed description is given on the dataset documentation website.
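As an illustration of these rules, the following sketch writes a small dataset with a single header line, one line per individual and time point, and a dot in every cell that carries no information. The header names (ID, TIME, AMT, CONC) and the values are hypothetical, since headers are free and columns are tagged after loading.

```python
import csv
import io


def to_dataset_text(rows, columns):
    """Serialize rows to CSV text, writing '.' for cells without information."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(columns)  # exactly one header line
    for row in rows:
        writer.writerow(["." if row.get(col) is None else row[col] for col in columns])
    return buffer.getvalue()


# Hypothetical records: one dose line followed by two measurement lines
rows = [
    {"ID": 1, "TIME": 0, "AMT": 150, "CONC": None},
    {"ID": 1, "TIME": 1, "AMT": None, "CONC": 4.2},
    {"ID": 1, "TIME": 2, "AMT": None, "CONC": 3.1},
]
dataset_text = to_dataset_text(rows, ["ID", "TIME", "AMT", "CONC"])
print(dataset_text)
```

In this layout, AMT would be tagged as AMOUNT and CONC as OBSERVATION when the file is loaded in the Data tab.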

The most common column types in addition to ID, TIME, OBSERVATION and AMOUNT are:

- For IV infusion data, an additional INFUSION RATE or INFUSION DURATION is necessary.

- For steady-state data, either an INTERDOSE INTERVAL column is added, or the interdose interval tau is specified in the NCA settings.

- If a dose and a measurement occur at the same time, they can be encoded on the same line or on different lines.

- Sort and carry variables can be defined using the OCCASION, and CATEGORICAL COVARIATE and CONTINUOUS COVARIATE column-types.

- BLQ data are defined using the CENSORING and LIMIT column-types.

In addition to the typical cases presented above, a few additional column-types may also be convenient:

- ignoring a line: with the IGNORED OBSERVATION (ignores the measurement only) or IGNORED LINE (ignores the entire line, including regressor and dose information). However it is more convenient to filter lines of your dataset without modifying it by using filters (available once your dataset is accepted).

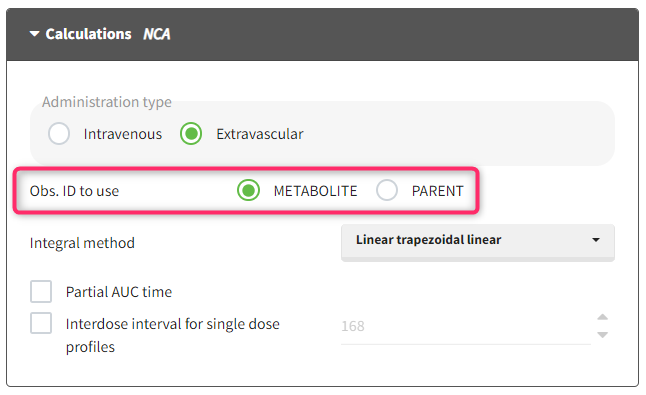

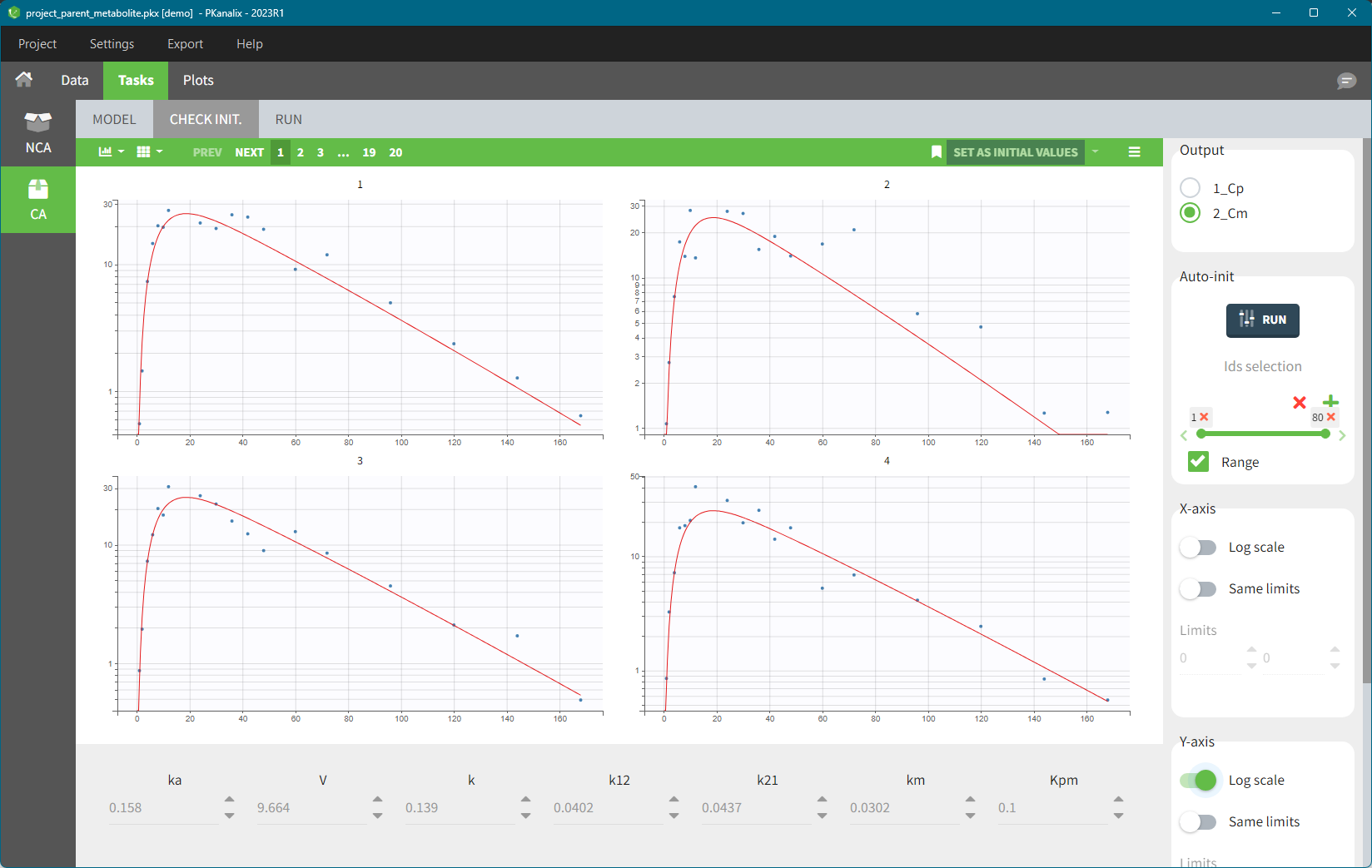

- working with several types of observations: If several observation types are present in the dataset (for example parent and metabolite), all measurements should still appear in the same OBSERVATION column, and another column should be used to distinguish the observation types. If this is not the case in your dataset, data formatting enables you to merge several observation columns. To run NCA for several observations at the same time for the same id, tag the column listing the observation types as OCCASION. To run CA for several observations with a model including several outputs (for example PK and PD), tag the column listing the observation types as OBSERVATION ID. In this case, only one observation type will be available for NCA. It can be selected in the “NCA” section to perform the calculations.

- working with several types of administrations: a single data set can contain different types of administrations, for instance IV bolus and extravascular, distinguished using the ADMINISTRATION ID column-type. The setting “administration type” in “Tasks>Run” can be chosen separately for each administration id, and the appropriate parameter calculations will be performed.

Example datasets

Below we show how to encode the dataset for most typical situations.

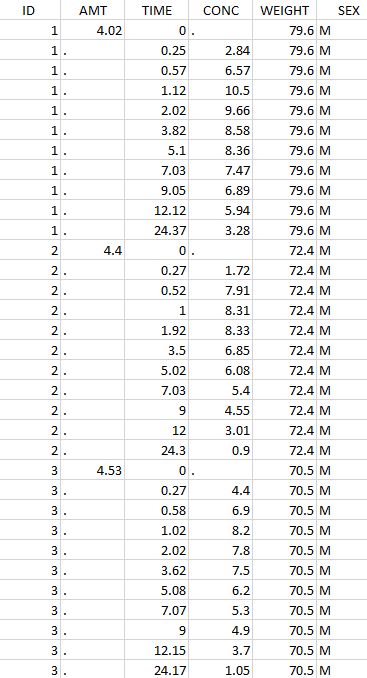

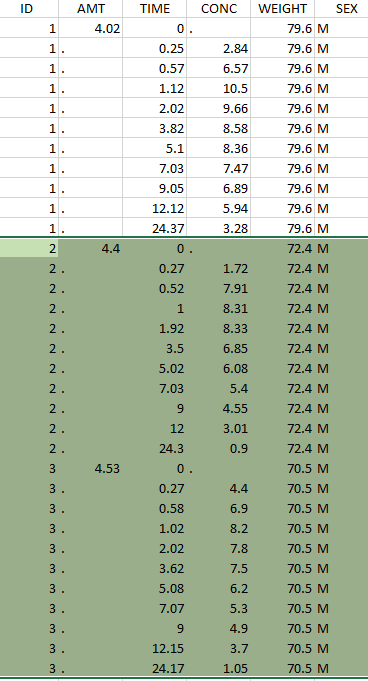

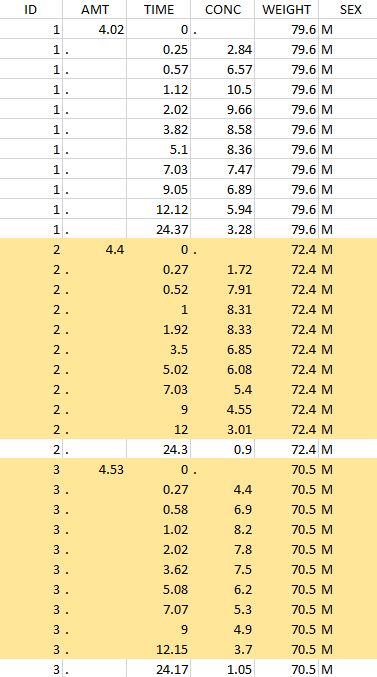

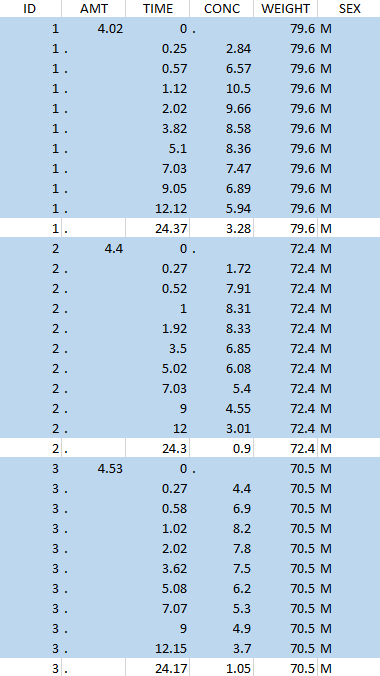

Plasma concentration data

Extravascular

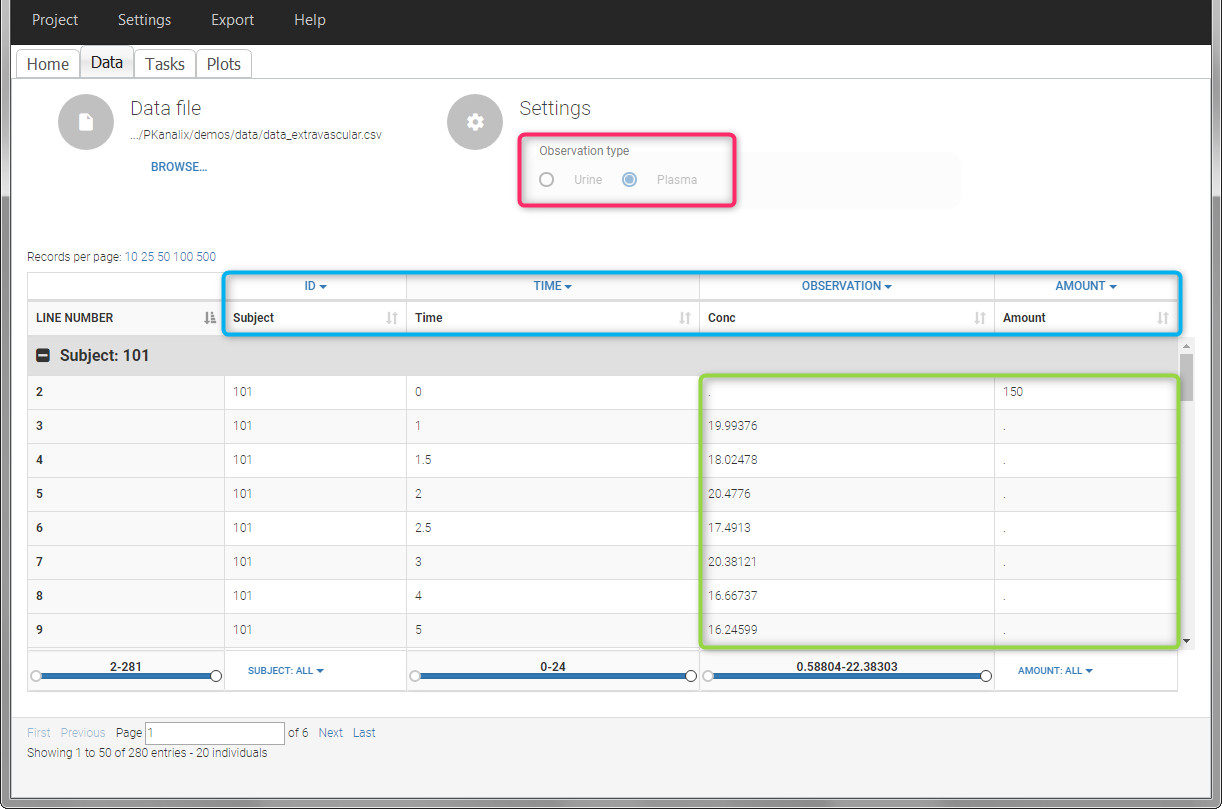

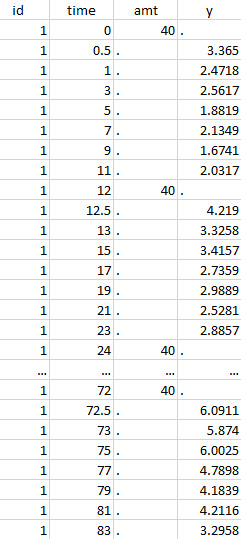

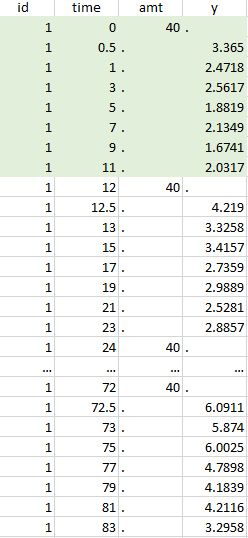

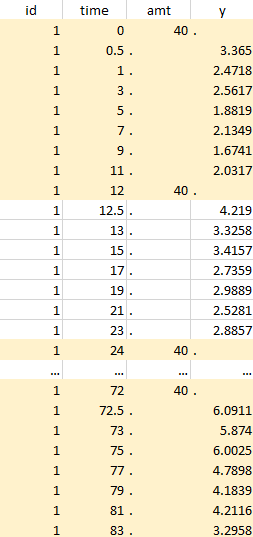

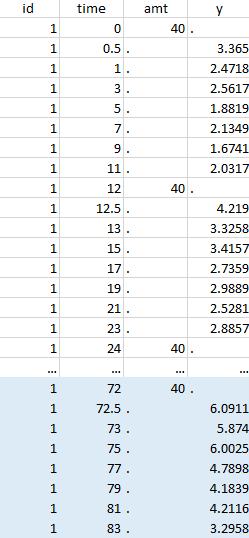

For extravascular administration, the mandatory column-types are ID (individual identifiers as integers or strings), OBSERVATION (measured concentrations), AMOUNT (dose amount administered, mandatory for 2023 and previous versions) and TIME (time of dose or measurement). Since version 2024, datasets that have no AMOUNT column, or no information in the AMOUNT column, are accepted for single dose or multiple dose administrations.

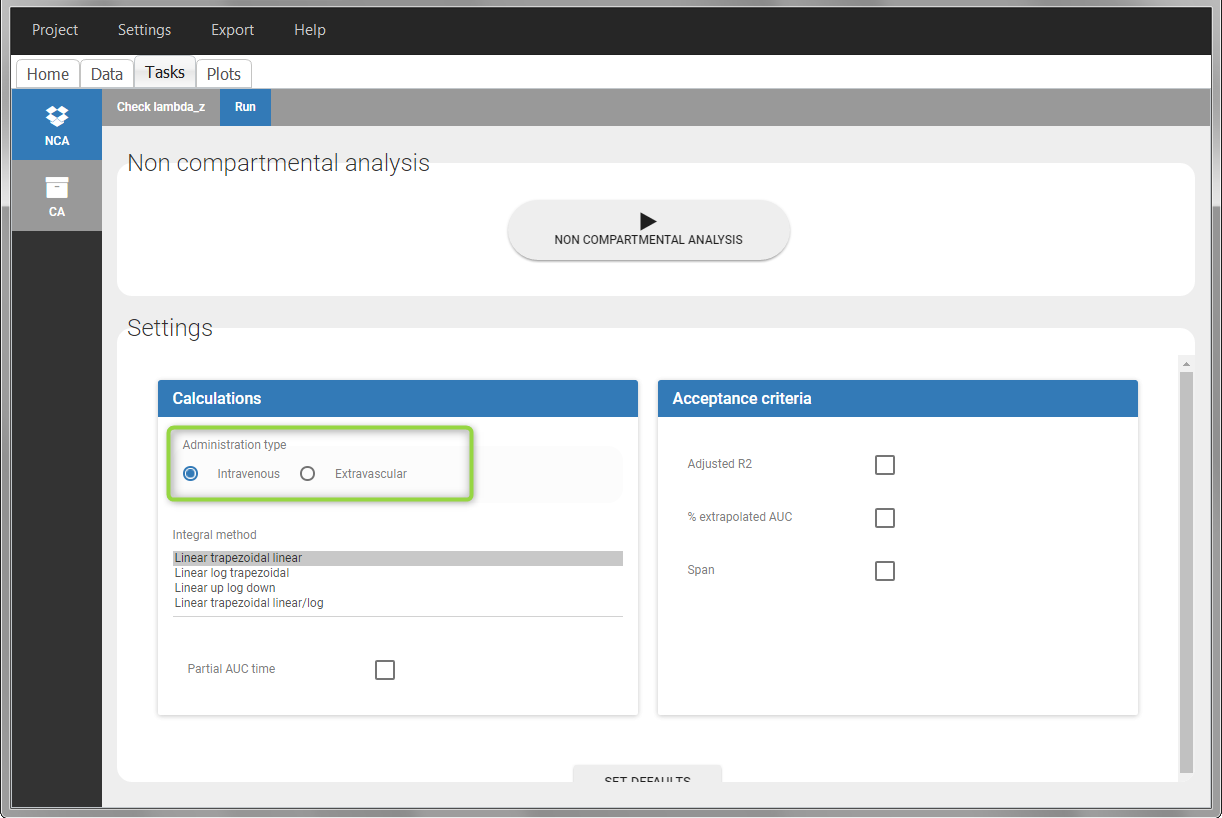

To distinguish the extravascular from the IV bolus case, in “Tasks>Run” the administration type must be set to “extravascular”.

If no measurement is recorded at the time of the dose, a concentration of zero is added for single dose data, and the minimum concentration observed during the dose interval is added for steady-state data.
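The single-dose part of this rule can be sketched as follows. This is only an illustration of the rule stated above, not the PKanalix implementation. The function name and the data layout (a list of time and concentration pairs) are assumptions.

```python
def add_dose_time_point(dose_time, observations):
    """Return the profile sorted by time, with (dose_time, 0.0) added if absent.

    observations is a list of (time, concentration) pairs for one
    single-dose profile (hypothetical layout).
    """
    if any(t == dose_time for t, _ in observations):
        return sorted(observations)
    return sorted(observations + [(dose_time, 0.0)])


# Hypothetical profile without a measurement at the dose time (t = 0)
profile = add_dose_time_point(0.0, [(1.0, 2.5), (2.0, 1.8)])
```

A profile that already contains a measurement at the dose time is returned unchanged, apart from the sorting.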

Example:

- demo project_extravascular.pkx

This data set records the drug concentration measured after a single oral administration of 150 mg of drug in 20 patients. For each individual, the first line records the dose (in the “Amount” column tagged as AMOUNT column-type) while the following lines record the measured concentrations (in the “Conc” column tagged as OBSERVATION). Cells of the “Amount” column on measurement lines contain a dot, and conversely cells of the concentration column on dose lines contain a dot. The column containing the times of measurements or doses is tagged as TIME column-type and the subject identifiers, which we will use as sort variable, are tagged as ID. Check the OCCASION section if more sort variables are needed. After accepting the dataset, the data is automatically assigned as “Plasma”.

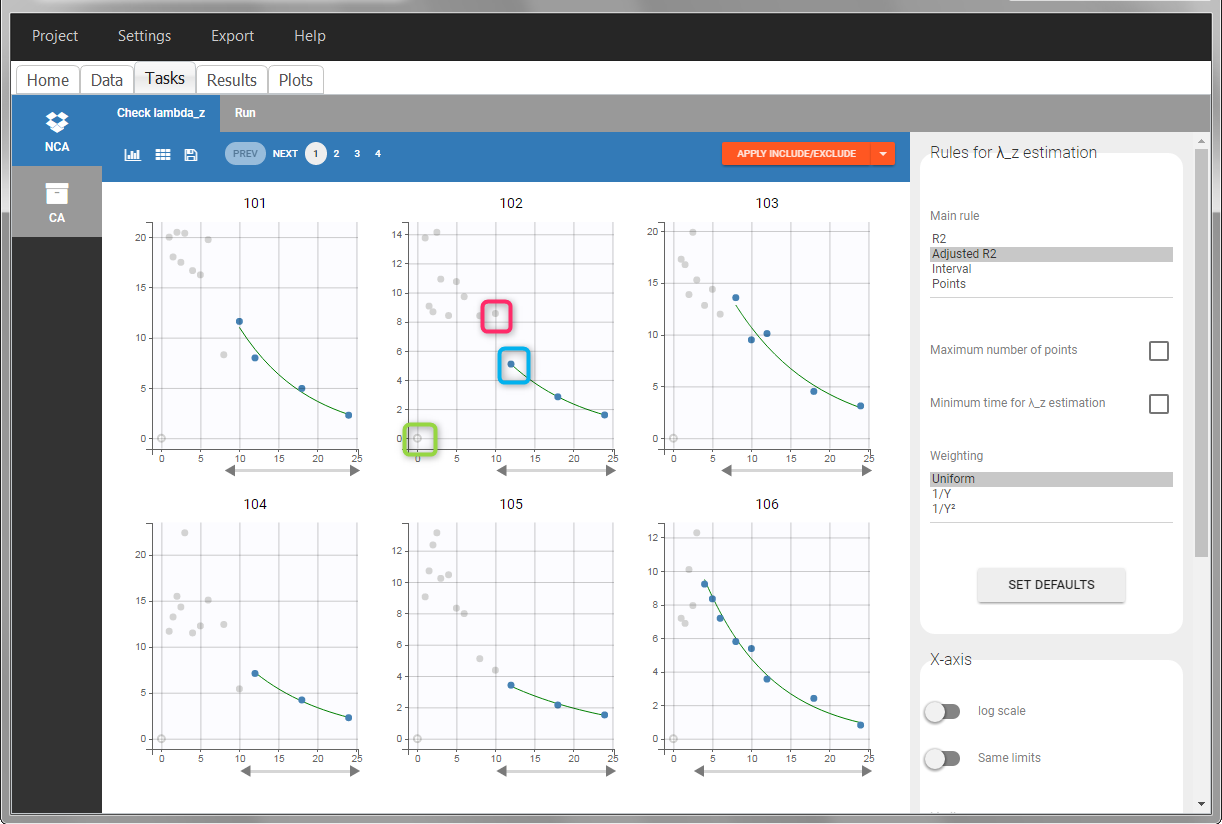

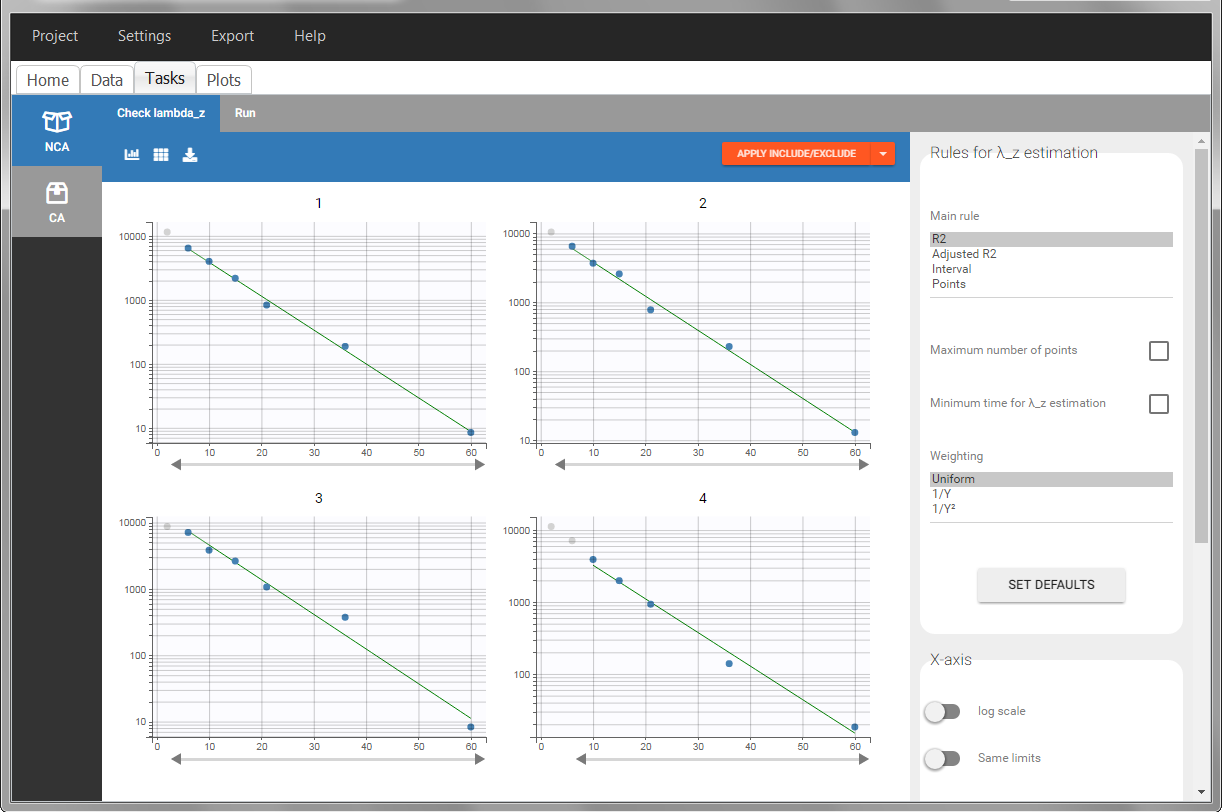

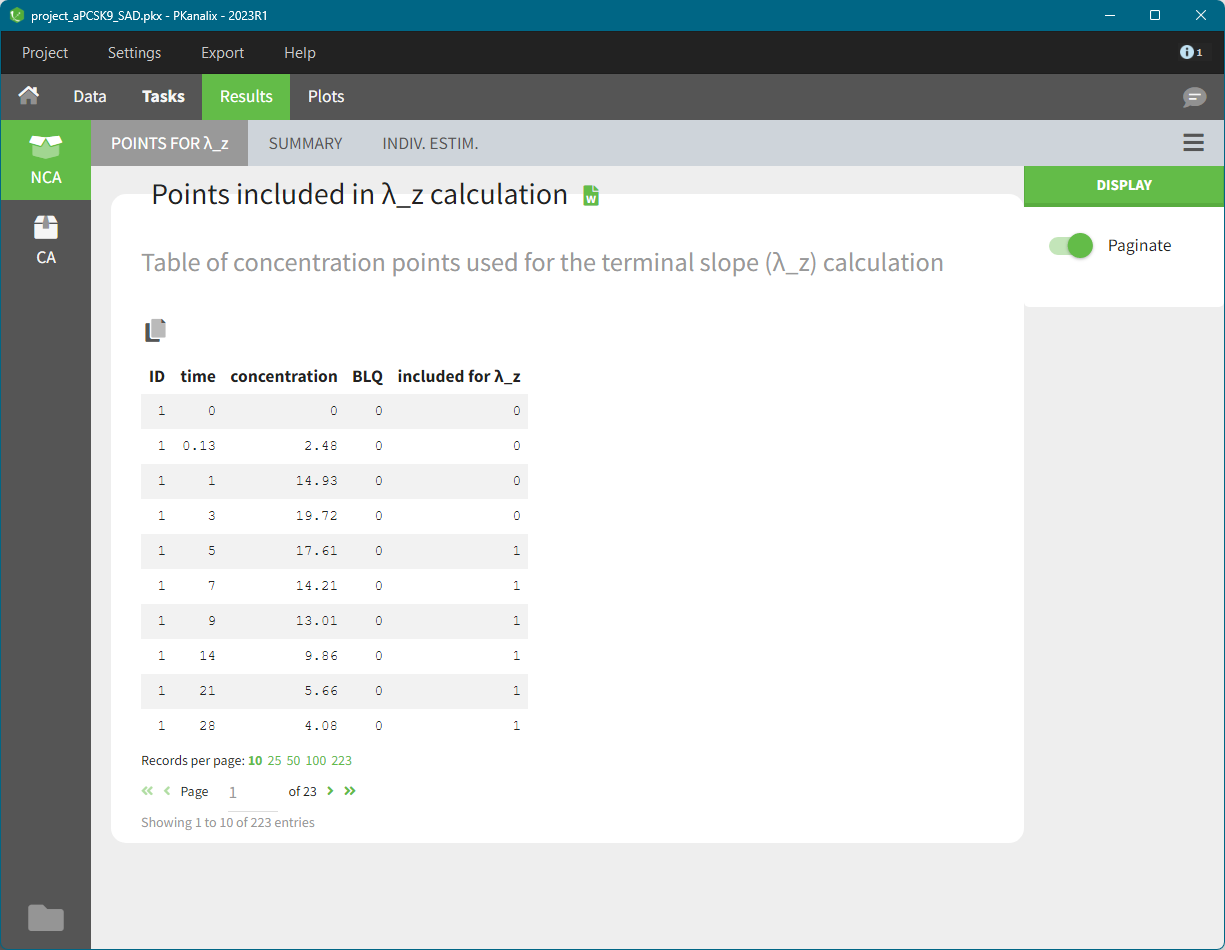

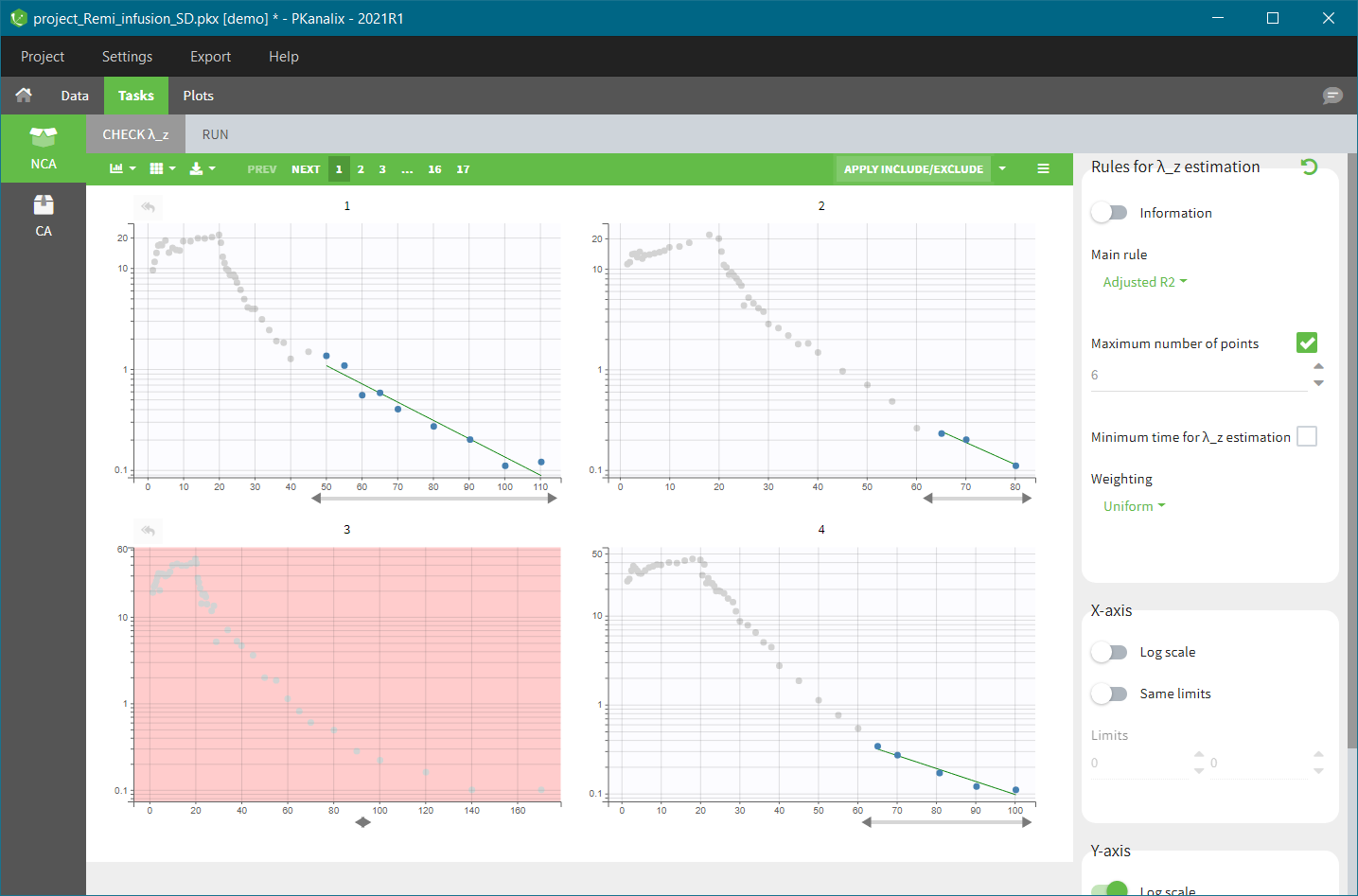

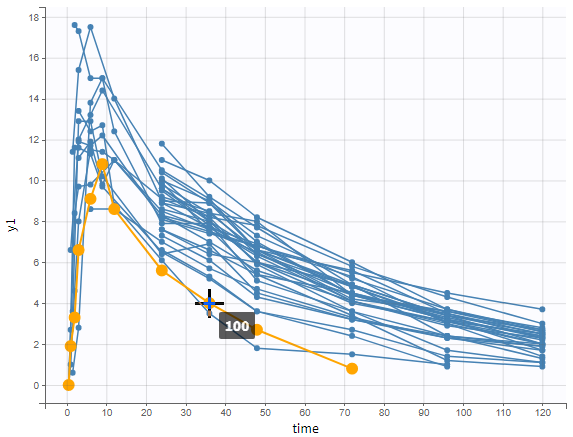

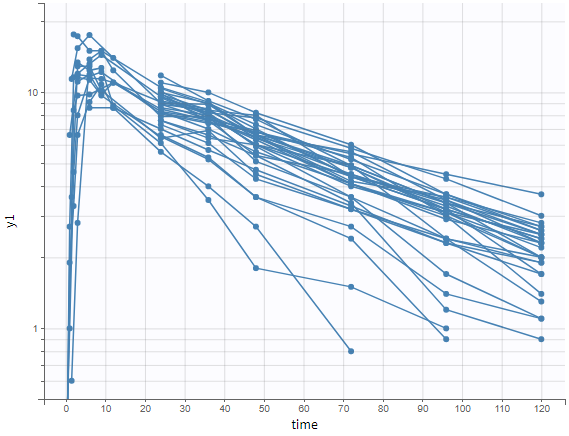

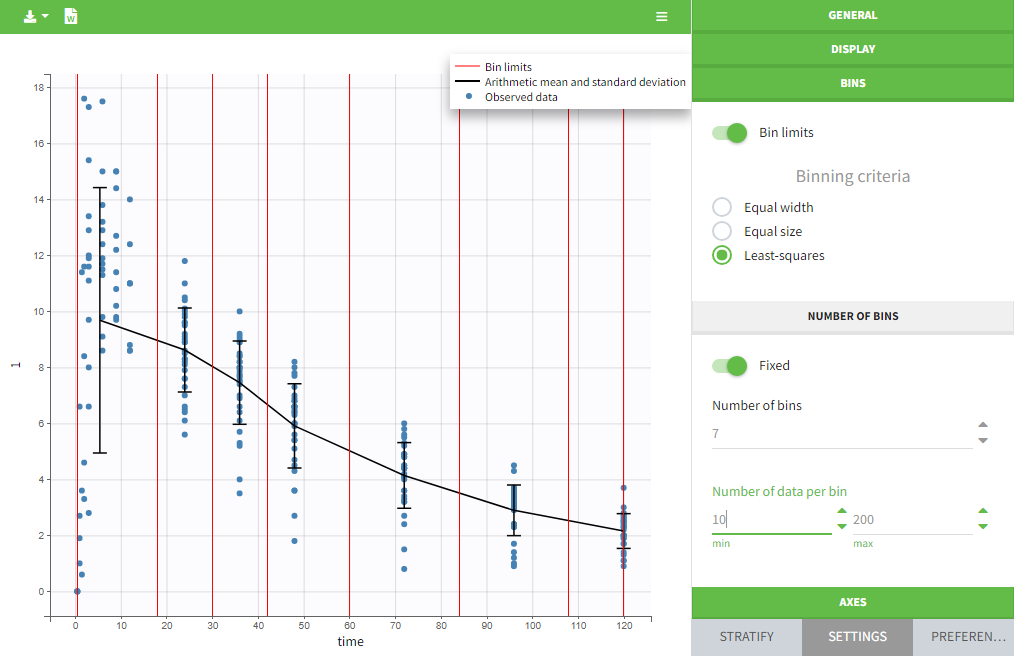

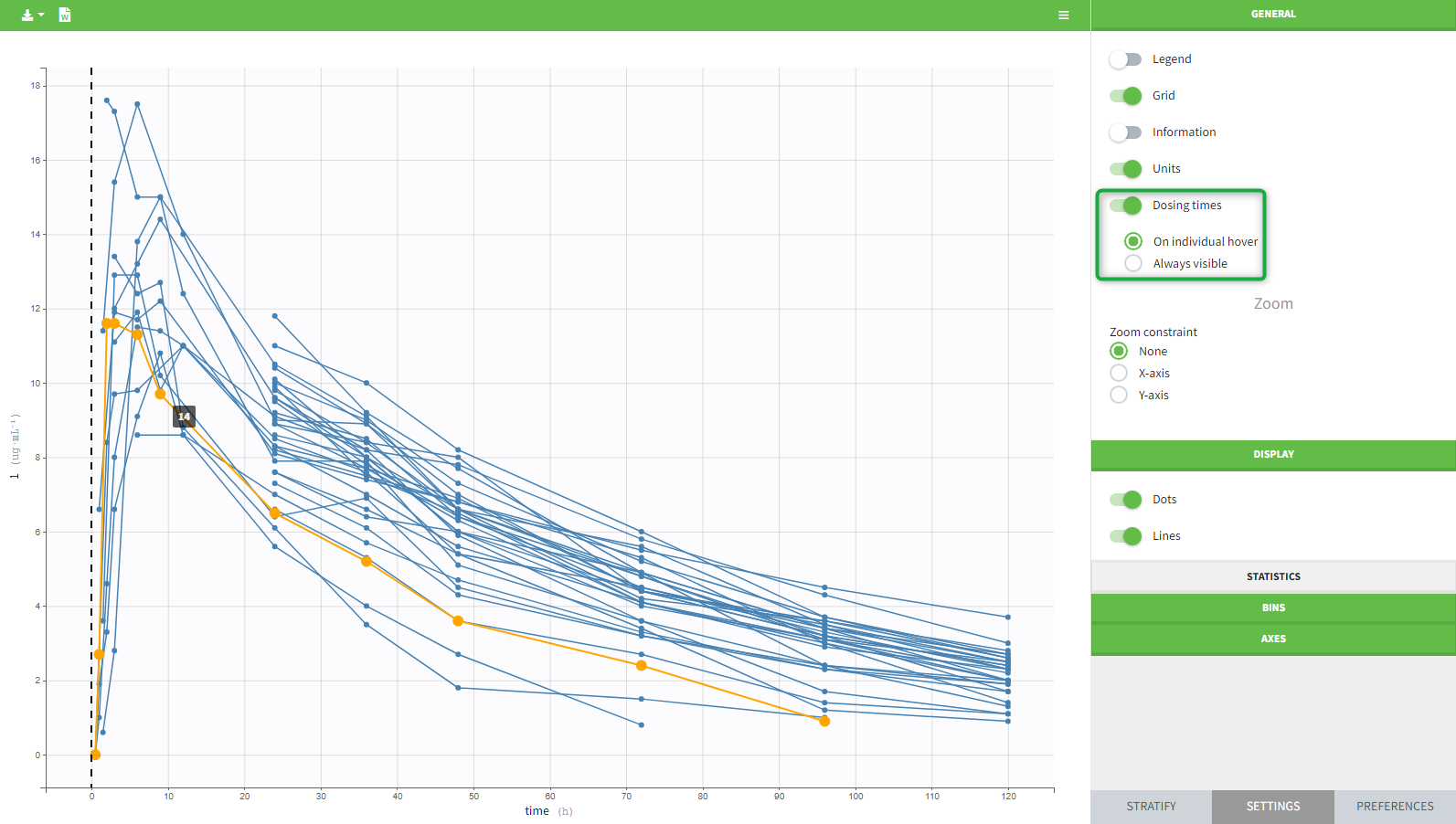

In the “Tasks/Run” tab, the user must indicate that this is extravascular data. In the “Check lambda_z” tab, shown here with a linear scale for the y-axis, measurements originally present in the data are displayed as full circles. Added data points, such as a zero concentration at the dose time, are represented with empty circles. Points included in the \(\lambda_z\) calculation are highlighted in blue.


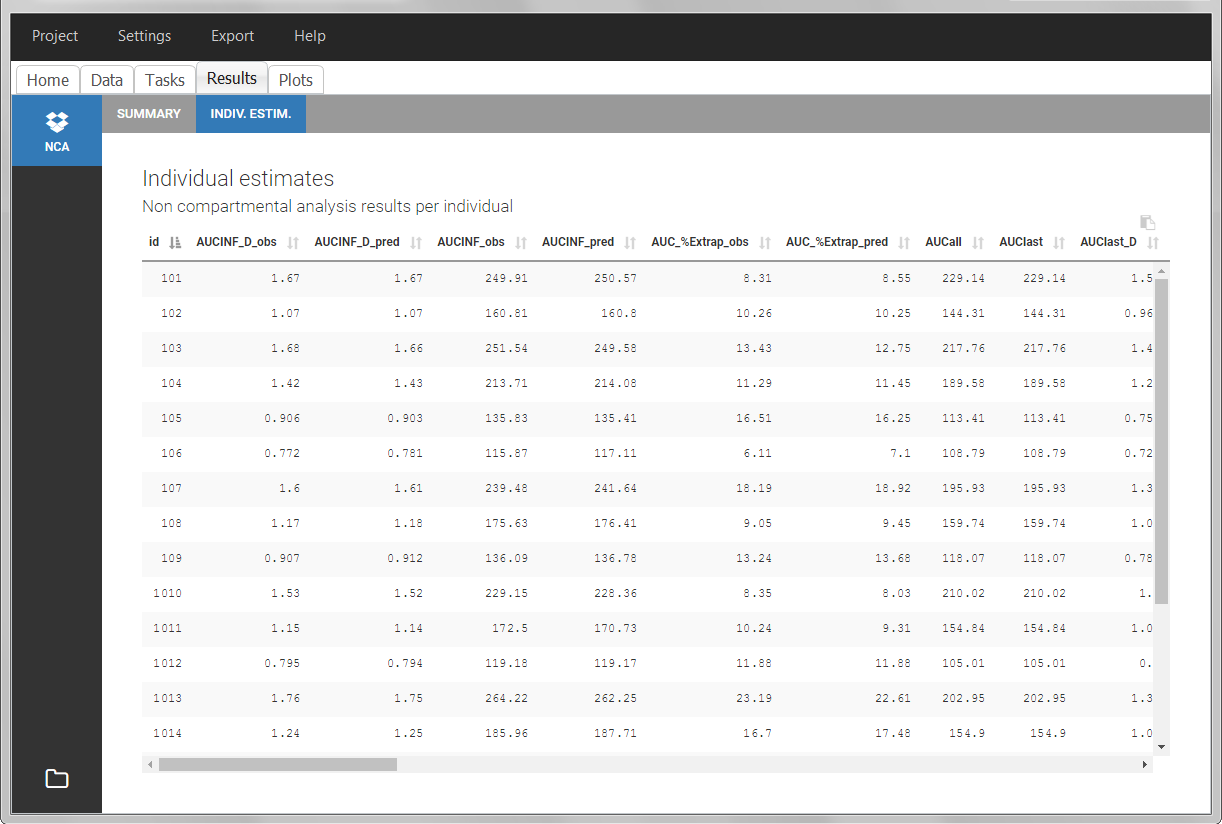

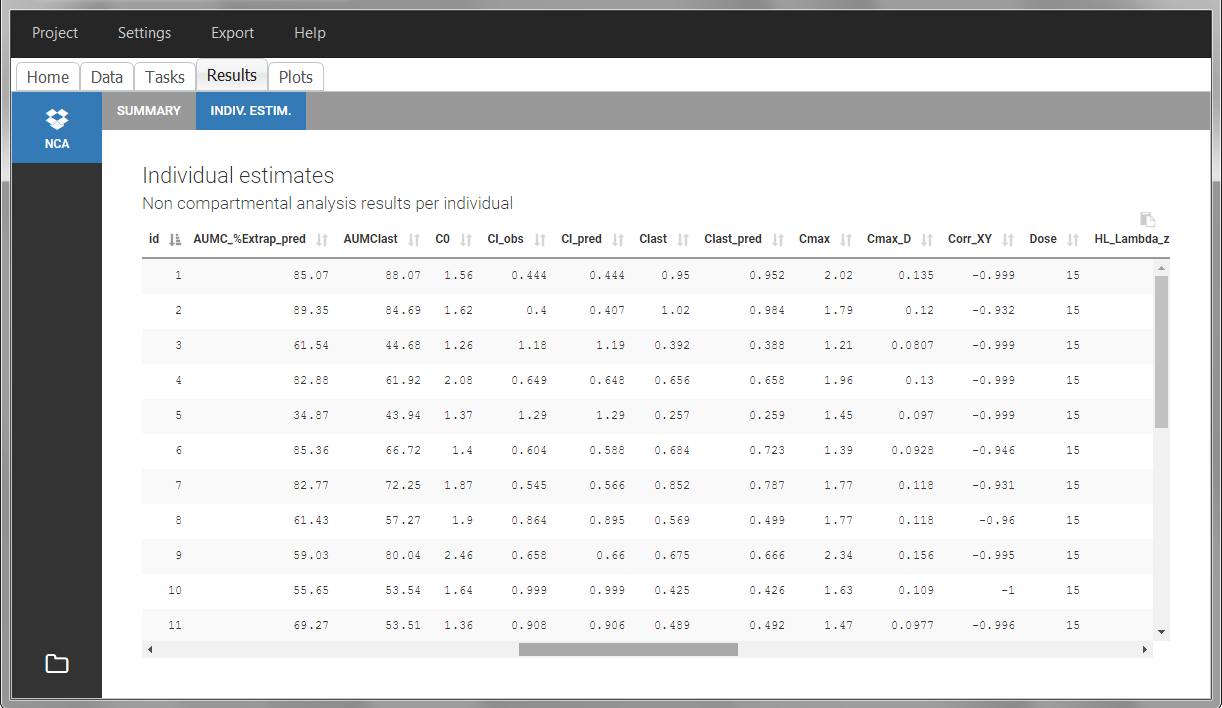

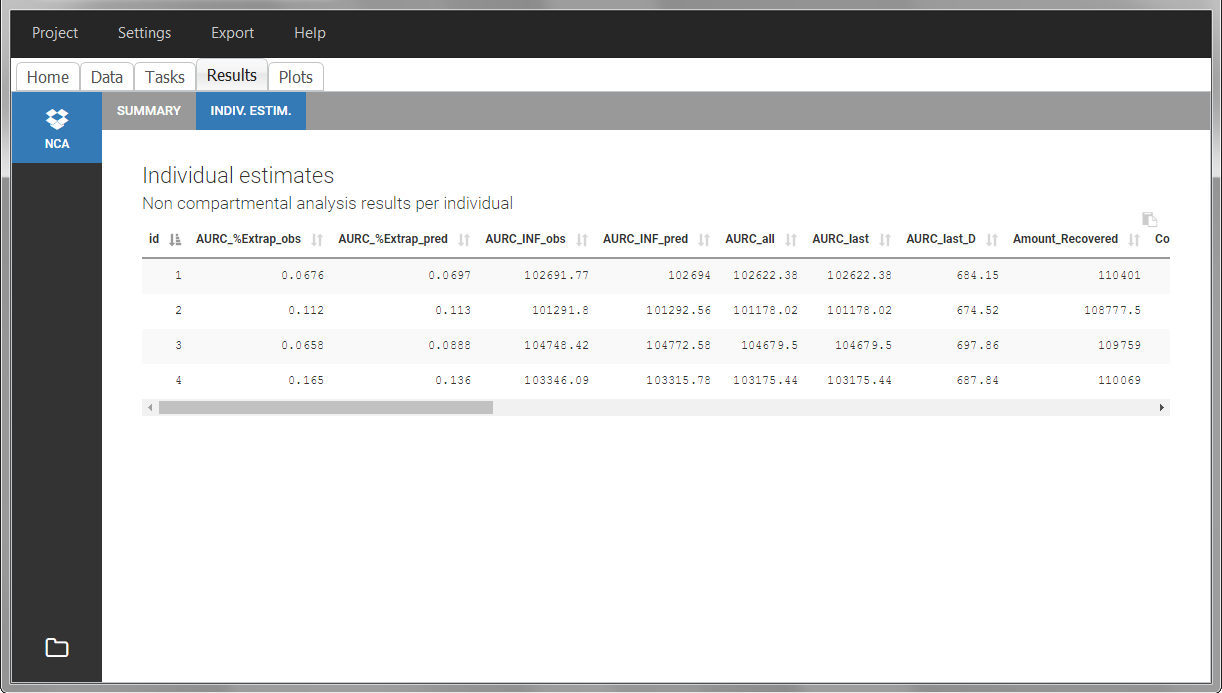

After running the NCA analysis, PK parameters relevant to extravascular administration are displayed in the “Results” tab.

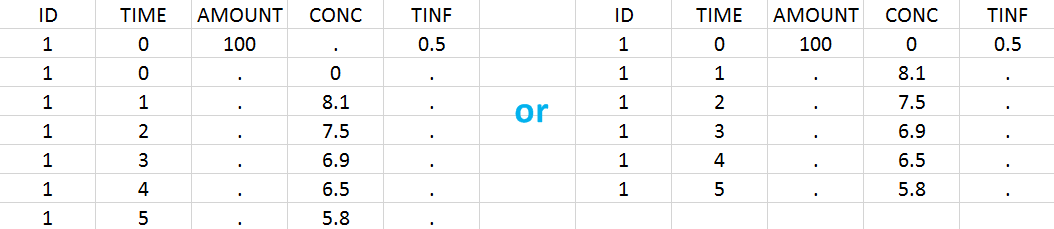

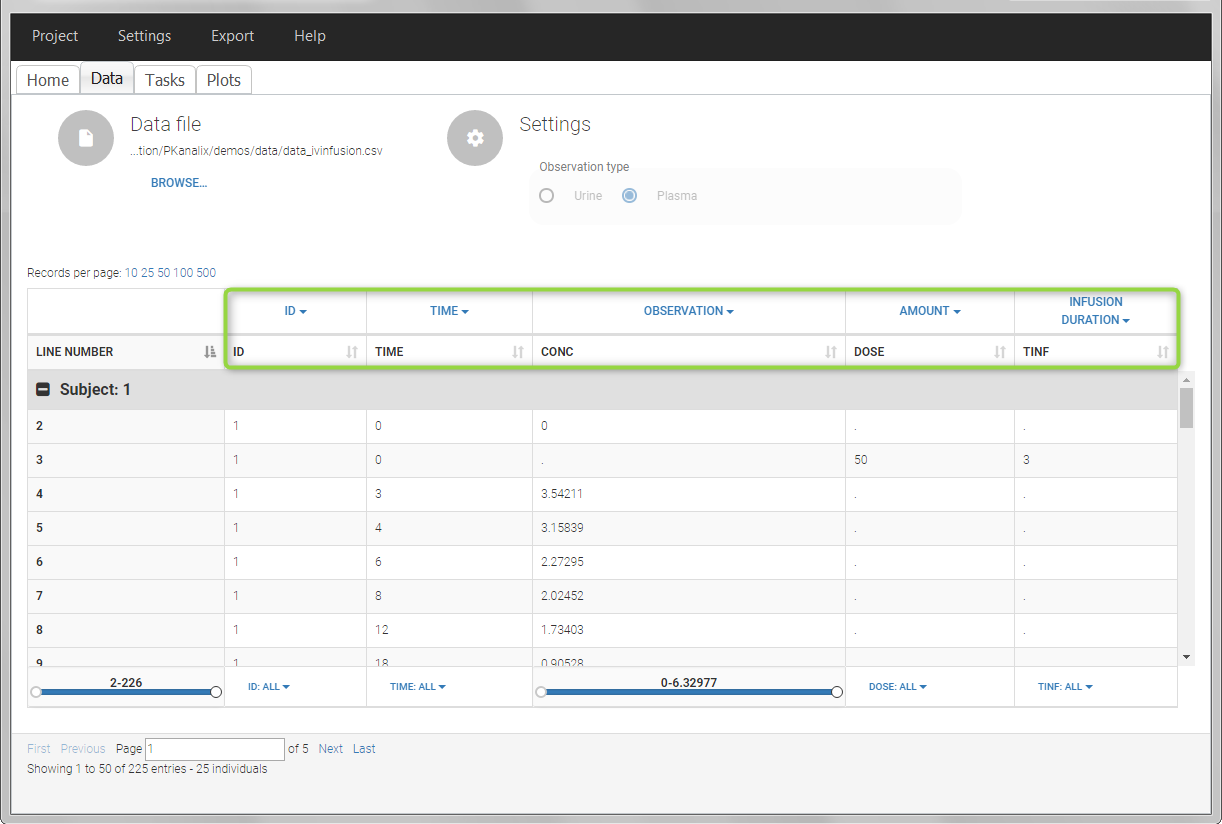

IV infusion

Intravenous infusions are indicated in the data set via the presence of an INFUSION RATE or INFUSION DURATION column-type, in addition to the ID (individual identifiers as integers or strings), OBSERVATION (measured concentrations), AMOUNT (dose amount administered, mandatory for 2023 and previous versions) and TIME (time of dose or measurement). The infusion duration (or rate) can be identical or different between individuals. Since version 2024, datasets that have no AMOUNT column, or no information in the AMOUNT column, are accepted for single dose or multiple dose administrations.

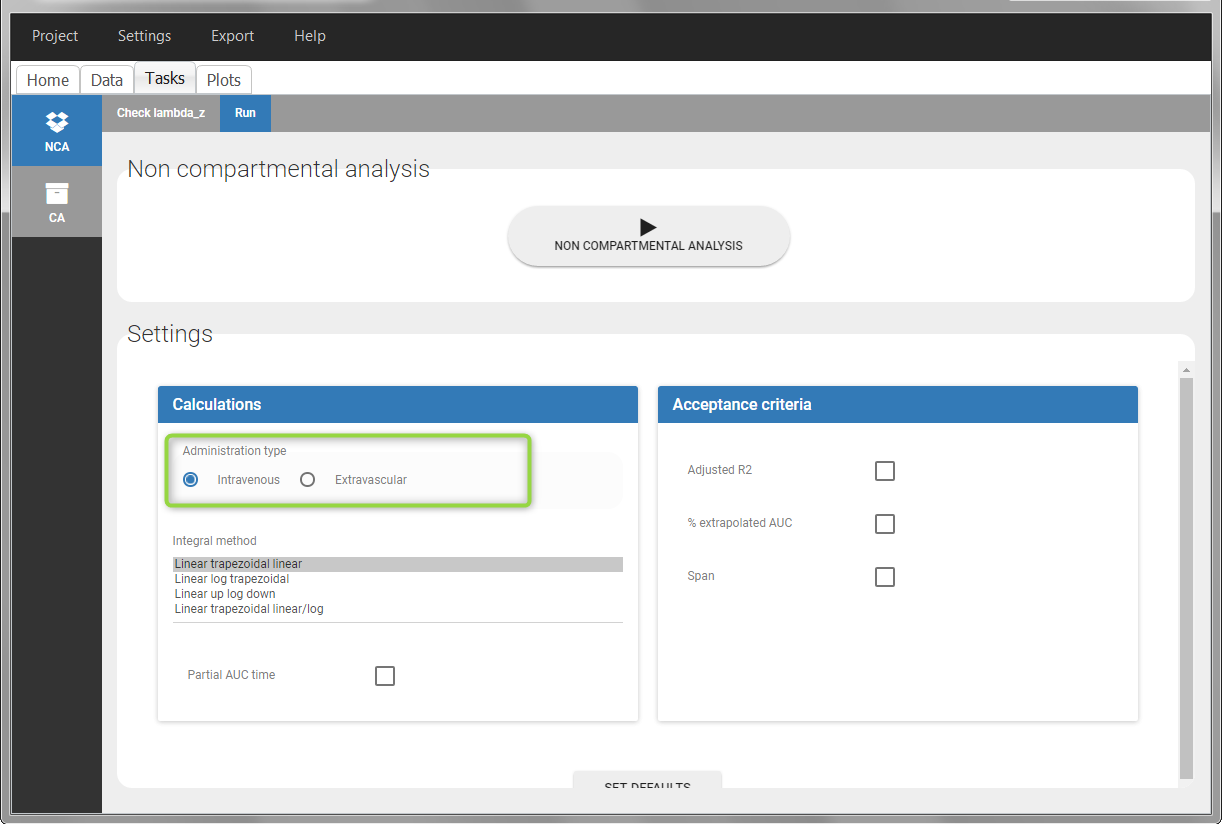

In “Tasks>Run” the administration type must be set to “intravenous”.

If no measurement is recorded at the time of the dose, a concentration of zero is added for single dose data, and the minimum concentration observed during the dose interval is added for steady-state data.

Example:

- demo project_ivinfusion.pkx:

In this example, the patients receive an iv infusion over 3 hours. The infusion duration is recorded in the column called “TINF” in this example, and tagged as INFUSION DURATION.

In the “Tasks/Run” tab, the user must indicate that this is intravenous data.

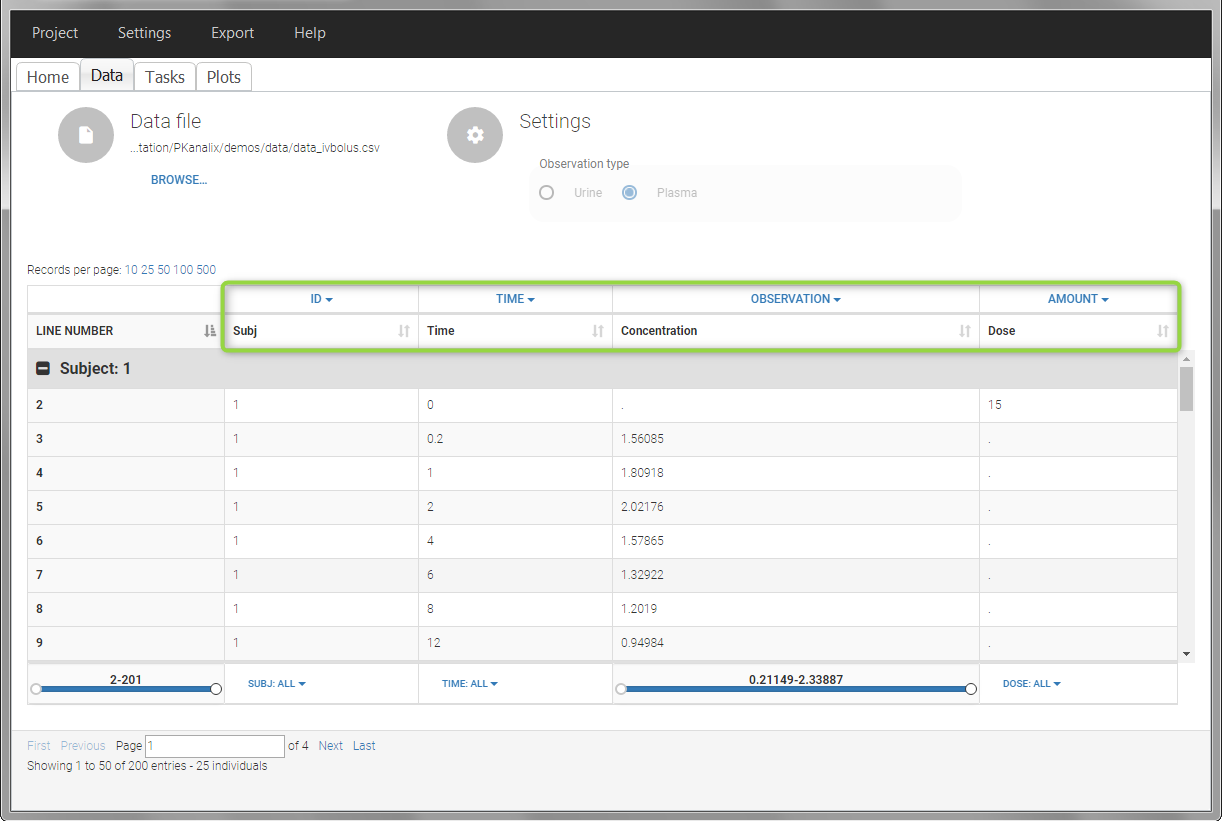

IV bolus

For IV bolus administration, the mandatory column-types are ID (individual identifiers as integers or strings), OBSERVATION (measured concentrations), AMOUNT (dose amount administered, mandatory for 2023 and previous versions) and TIME (time of dose or measurement). Since version 2024, datasets that have no AMOUNT column, or no information in the AMOUNT column, are accepted for single dose or multiple dose administrations.

To distinguish the IV bolus from the extravascular case, in “Tasks>Run” the administration type must be set to “intravenous”.

If no measurement is recorded at the time of the dose, the concentration at time zero is extrapolated using a log-linear regression of the first two data points, or is taken to be the first observed measurement if the regression yields a slope >= 0. See the calculation details for more information.
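The extrapolation rule for IV bolus data can be sketched as below. This is an illustration of the rule as stated here, not the PKanalix code. The function name is hypothetical, and falling back to the first measurement when a logarithm cannot be taken (non-positive concentration) is an assumption that the text does not cover.

```python
import math


def extrapolate_c0(dose_time, observations):
    """Back-extrapolate the concentration at dose_time from the first two points.

    observations is a list of (time, concentration) pairs measured after
    the dose (hypothetical layout).
    """
    points = sorted(observations)
    (t1, c1), (t2, c2) = points[0], points[1]
    if c1 <= 0 or c2 <= 0:
        return c1  # assumption: no log-linear regression is possible
    slope = (math.log(c2) - math.log(c1)) / (t2 - t1)
    if slope >= 0:
        return c1  # rule from the text: use the first observed measurement
    # Follow the regression line in log scale back to the dose time
    return math.exp(math.log(c1) + slope * (dose_time - t1))


# Hypothetical points generated from C(t) = 10 * exp(-0.5 * t)
c0 = extrapolate_c0(0.0, [(1.0, 10.0 * math.exp(-0.5)), (2.0, 10.0 * math.exp(-1.0))])
c0_rising = extrapolate_c0(0.0, [(1.0, 2.0), (2.0, 3.0)])
```

In the first call the two points decline, so the line is followed back to the dose time. In the second call the concentrations rise, so the first measurement is used.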

Example:

- demo project_ivbolus.pkx:

In this data set, 25 individuals have received an iv bolus and their plasma concentrations have been recorded over 12 hours. For each individual (indicated in the column “Subj” tagged as ID column-type), we record the dose amount in a column “Dose”, tagged as AMOUNT column-type. The measured concentrations are tagged as OBSERVATION and the times as TIME. Check the OCCASION section if more sort variables are needed in addition to ID. After accepting the dataset, the data is automatically assigned as “plasma”.

In the “Tasks/Run” tab, the user must indicate that this is intravenous data. In the “Check lambda_z” tab, measurements originally present in the data are shown with full circles. Added data points, such as the C0 at the dose time, are represented with empty circles. Points included in the \(\lambda_z\) calculation are highlighted in blue.


After running the NCA analysis, PK parameters relevant to iv bolus administration are displayed in the “Results” tab.

Steady-state

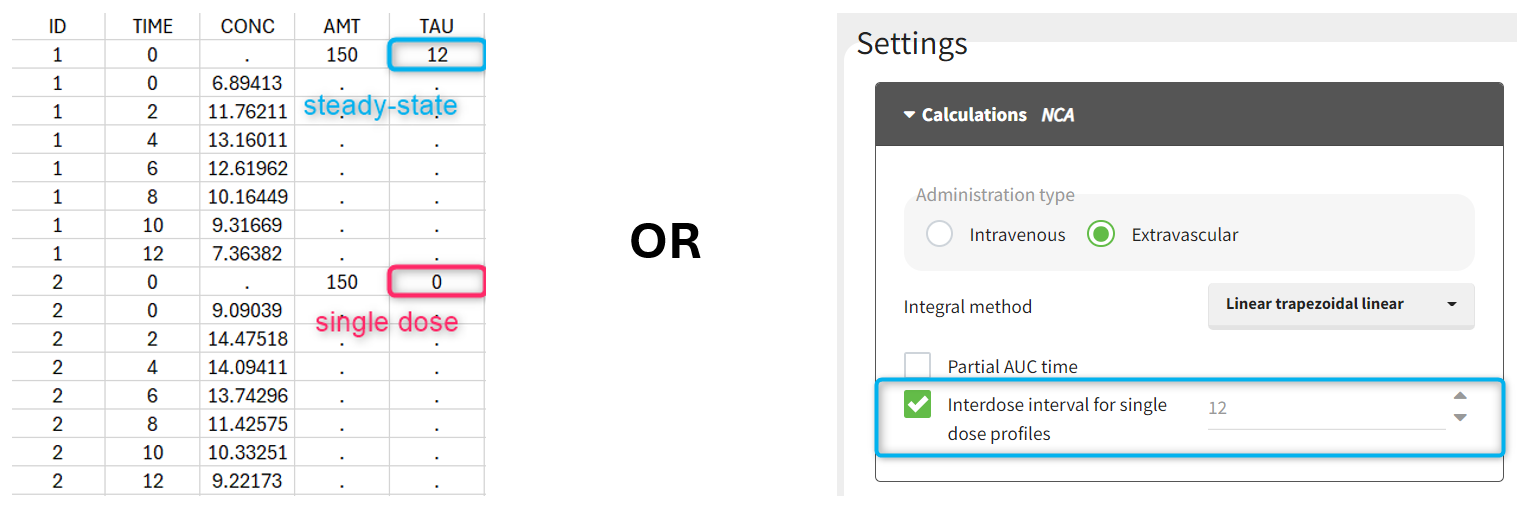

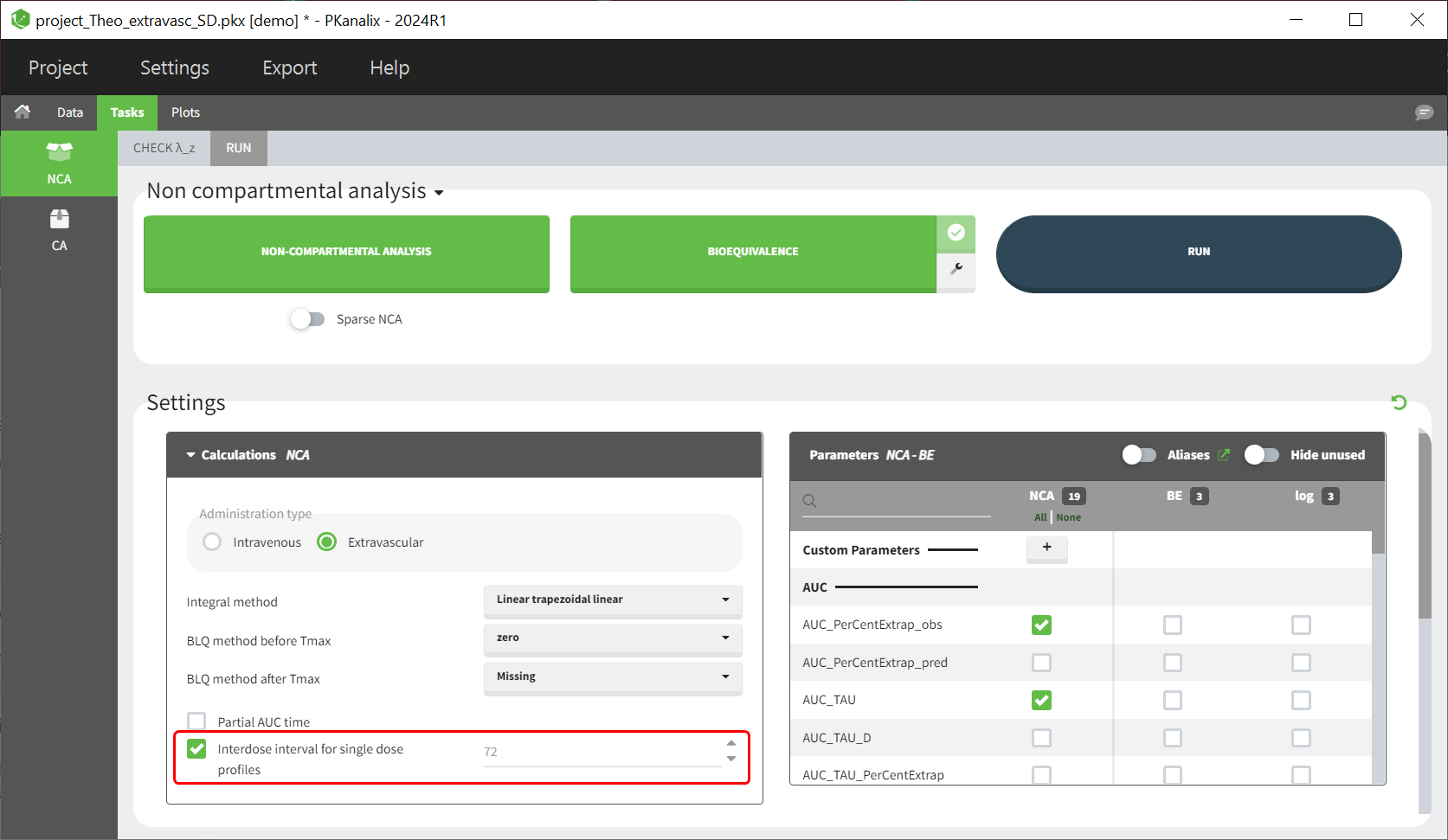

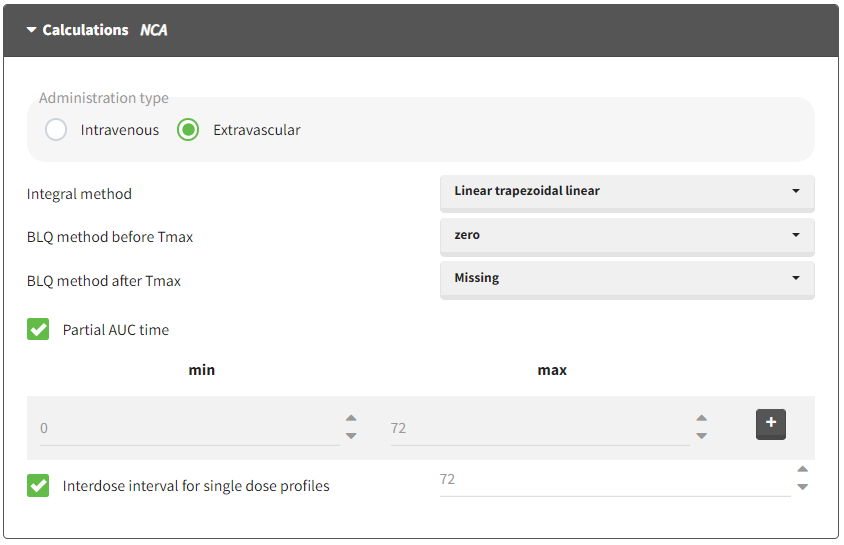

Starting from version 2024, the interdose interval tau used to calculate NCA parameters specific to steady-state can be specified either in the dataset column tagged as INTERDOSE INTERVAL, or directly as part of the NCA settings.

In the 2023 and previous versions, it was necessary to indicate steady-state using the STEADY STATE column-type: SS=1 indicates that the individual is already at steady-state when receiving the dose. This implicitly assumes that the individual has received many doses before this one. SS=0 or ‘.’ indicates a single dose. Starting from version 2024, the SS column is accepted but not mandatory anymore.

The dosing interval (also called tau) is indicated in the INTERDOSE INTERVAL column on the lines defining the doses, or as part of the NCA settings.

Steady-state calculation formulas are applied for individuals whose dose line has a numeric value (a double) in the INTERDOSE INTERVAL column. A data set can contain some individuals who are at steady-state and some who are not. If the NCA setting “Interdose interval for single dose profiles” is selected, steady-state parameters are calculated for all individuals.

If no measurement is recorded at the time of the dose, the minimum concentration observed during the dose interval is added at the time of the dose for extravascular and infusion data. For iv bolus, a regression using the first two data points is performed. Only measurements between the dose time and dose time + interdose interval will be used.
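For extravascular and infusion data, the two rules above (restriction to the dosing interval, then addition of the minimum concentration at the dose time) can be sketched as follows. The function name and data layout are hypothetical, and including both interval bounds is an assumption.

```python
def steady_state_profile(dose_time, tau, observations):
    """Keep measurements within [dose_time, dose_time + tau], adding a dose-time point.

    observations is a list of (time, concentration) pairs (hypothetical layout).
    The added point uses the minimum concentration observed in the interval.
    """
    window = sorted(
        (t, c) for t, c in observations if dose_time <= t <= dose_time + tau
    )
    if window and all(t != dose_time for t, _ in window):
        c_min = min(c for _, c in window)
        window.insert(0, (dose_time, c_min))
    return window


# Hypothetical steady-state profile with tau = 12 and no measurement at t = 0
profile_ss = steady_state_profile(
    0.0, 12.0, [(1.0, 5.0), (4.0, 8.0), (12.0, 3.0), (16.0, 2.0)]
)
```

The measurement at 16 h falls outside the dosing interval and is dropped, and the minimum remaining concentration is copied to the dose time.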

Examples:

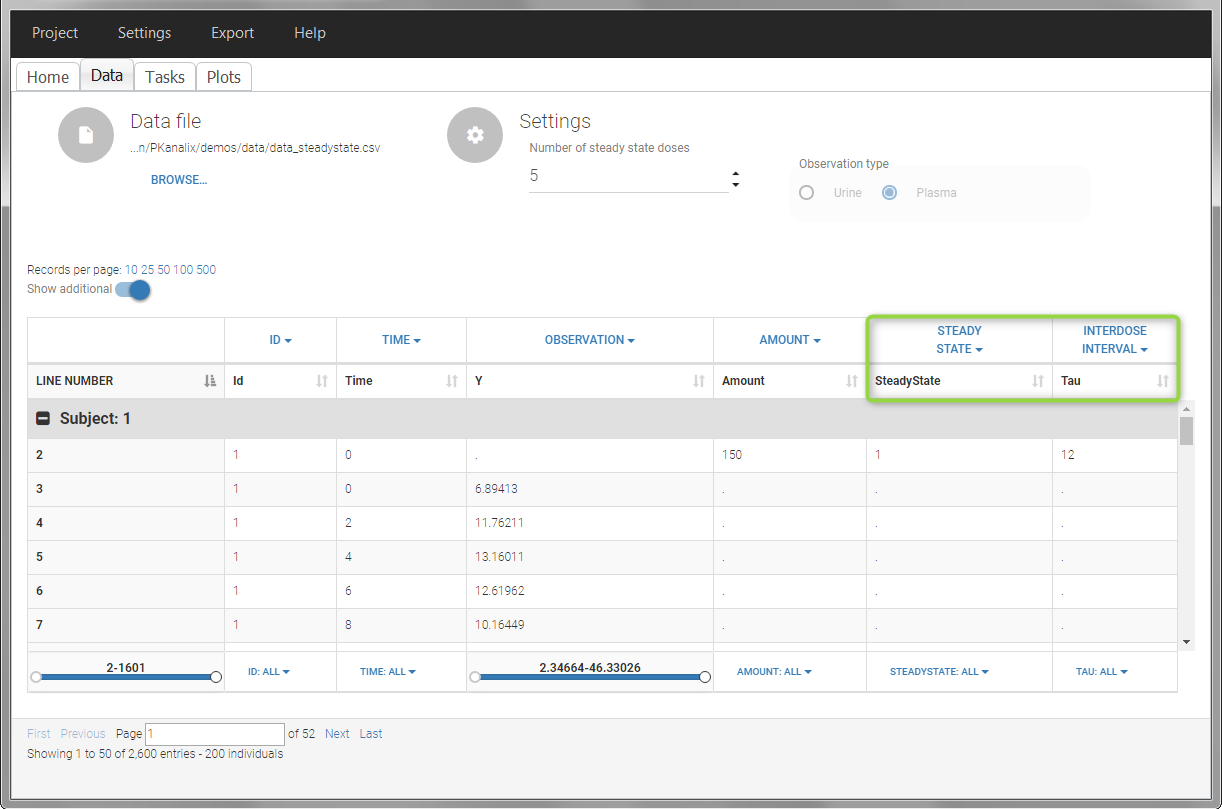

- demo project_steadystate.pkx:

In this example, the individuals are already at steady-state when they receive the dose. This is indicated in the data set via the column “SteadyState” tagged as STEADY STATE column-type, which contains a “1” on lines recording doses. The interdose interval is noted on those same lines in the column “tau” tagged as INTERDOSE INTERVAL. When accepting the data set, a “Settings” section appears, which lets you define the number of steady-state doses. This information is relevant when exporting to Monolix, but not used in PKanalix directly.

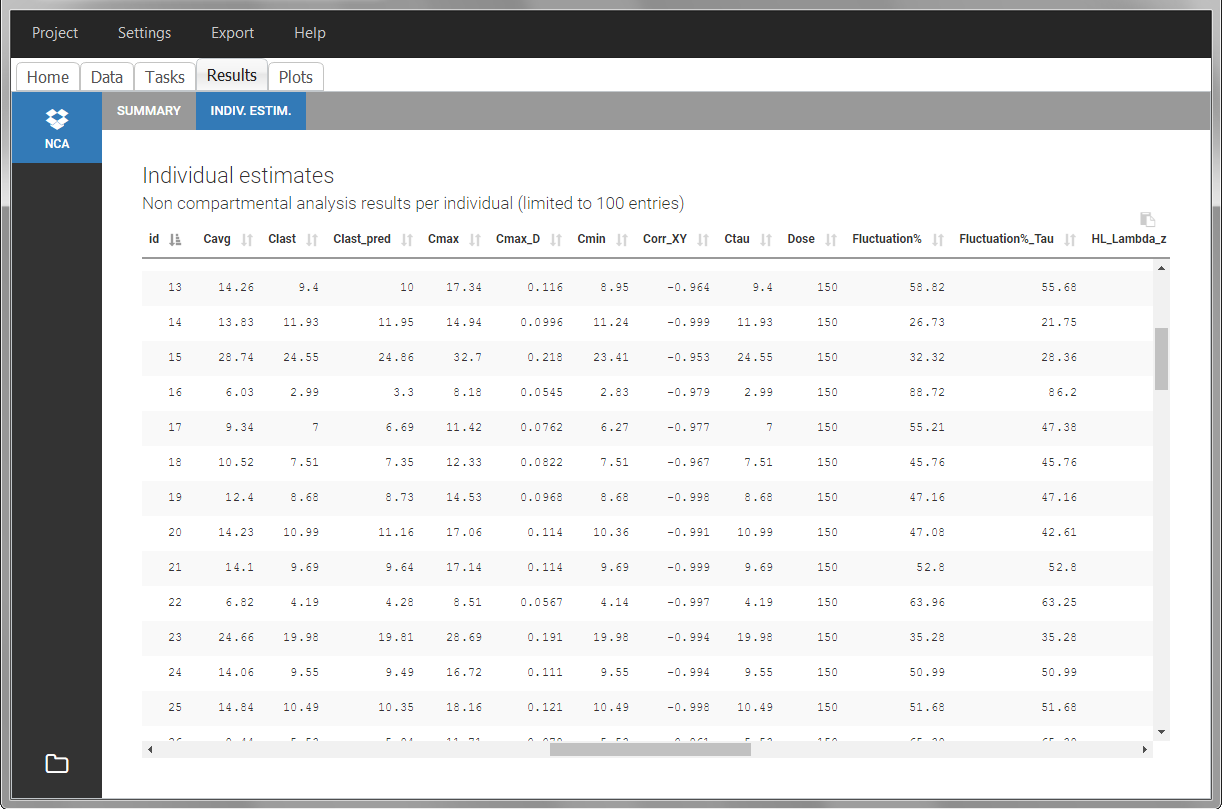

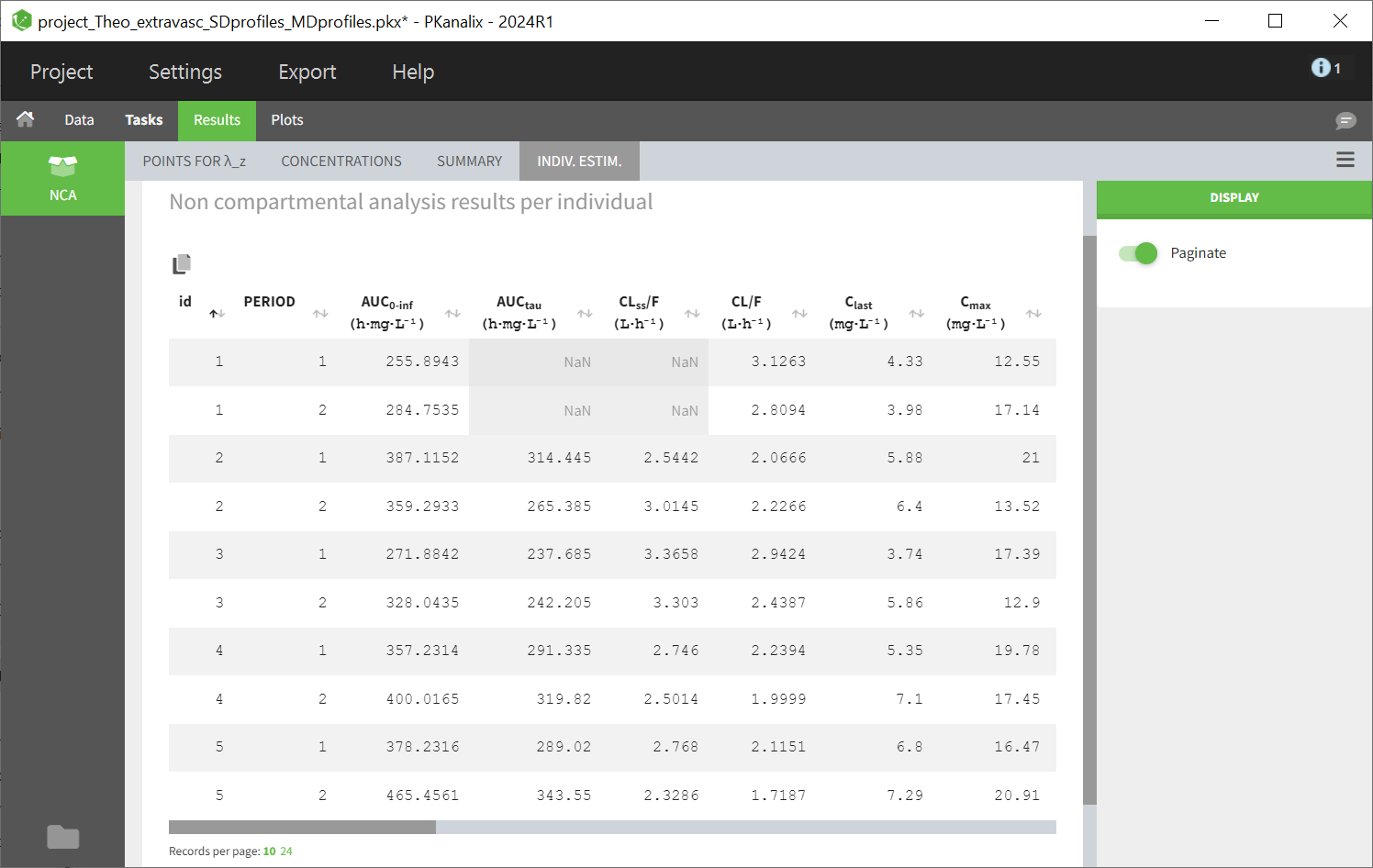

After running the NCA estimation task, steady-state specific parameters are displayed in the “Results” tab.

BLQ data

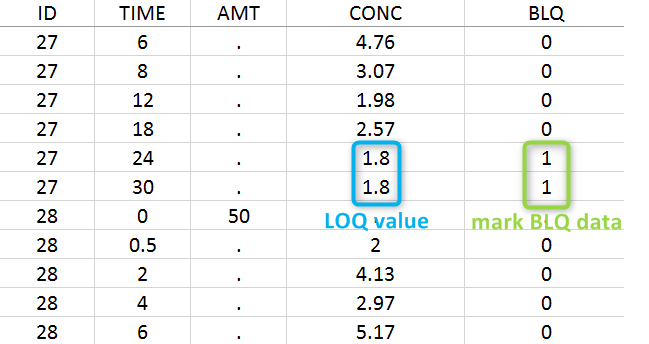

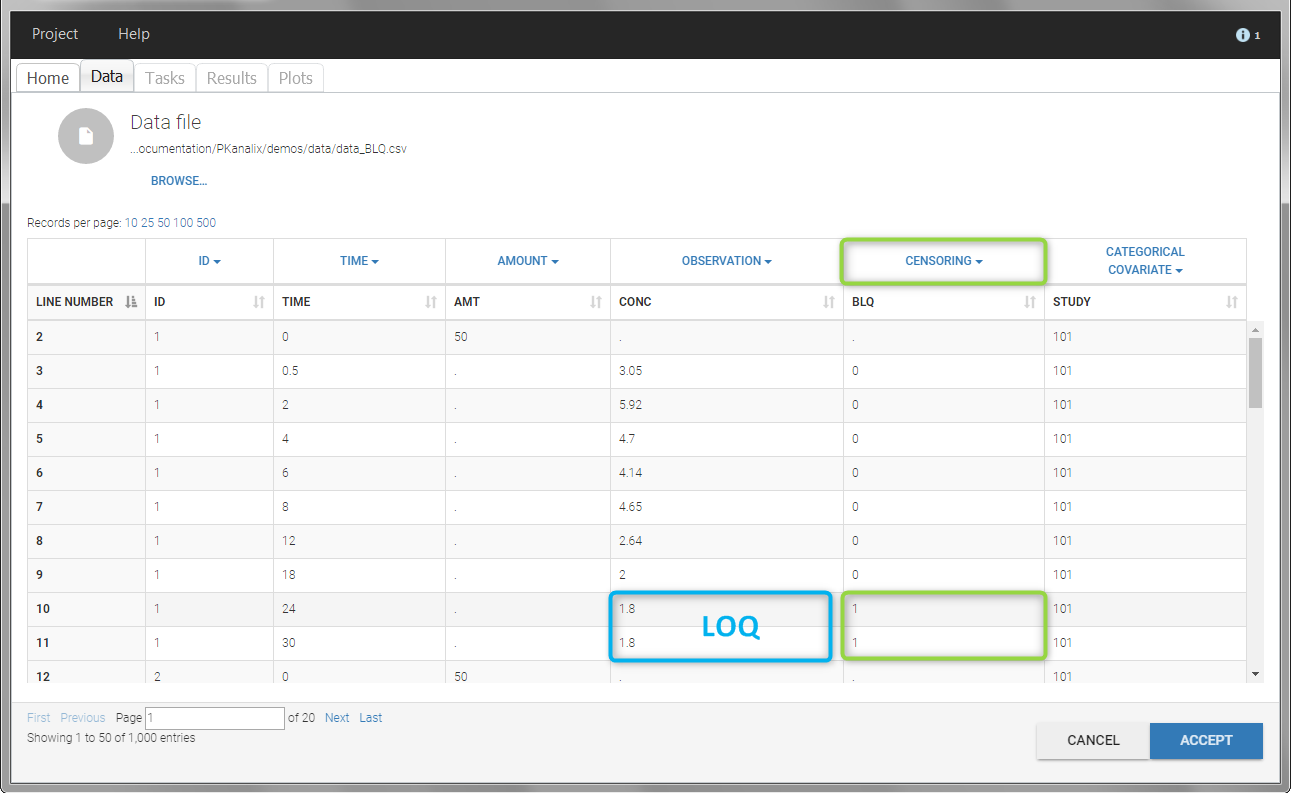

Below the limit of quantification (BLQ) data can be recorded in the data set using the CENSORING column:

- “0” indicates that the value in the OBSERVATION column is the measurement.

- “1” indicates that the observation is BLQ.

The lower limit of quantification (LOQ) must be indicated in the OBSERVATION column when CENSORING = “1”. Note that strings are not allowed in the OBSERVATION column (except dots). A different LOQ value can be used for each BLQ data point.

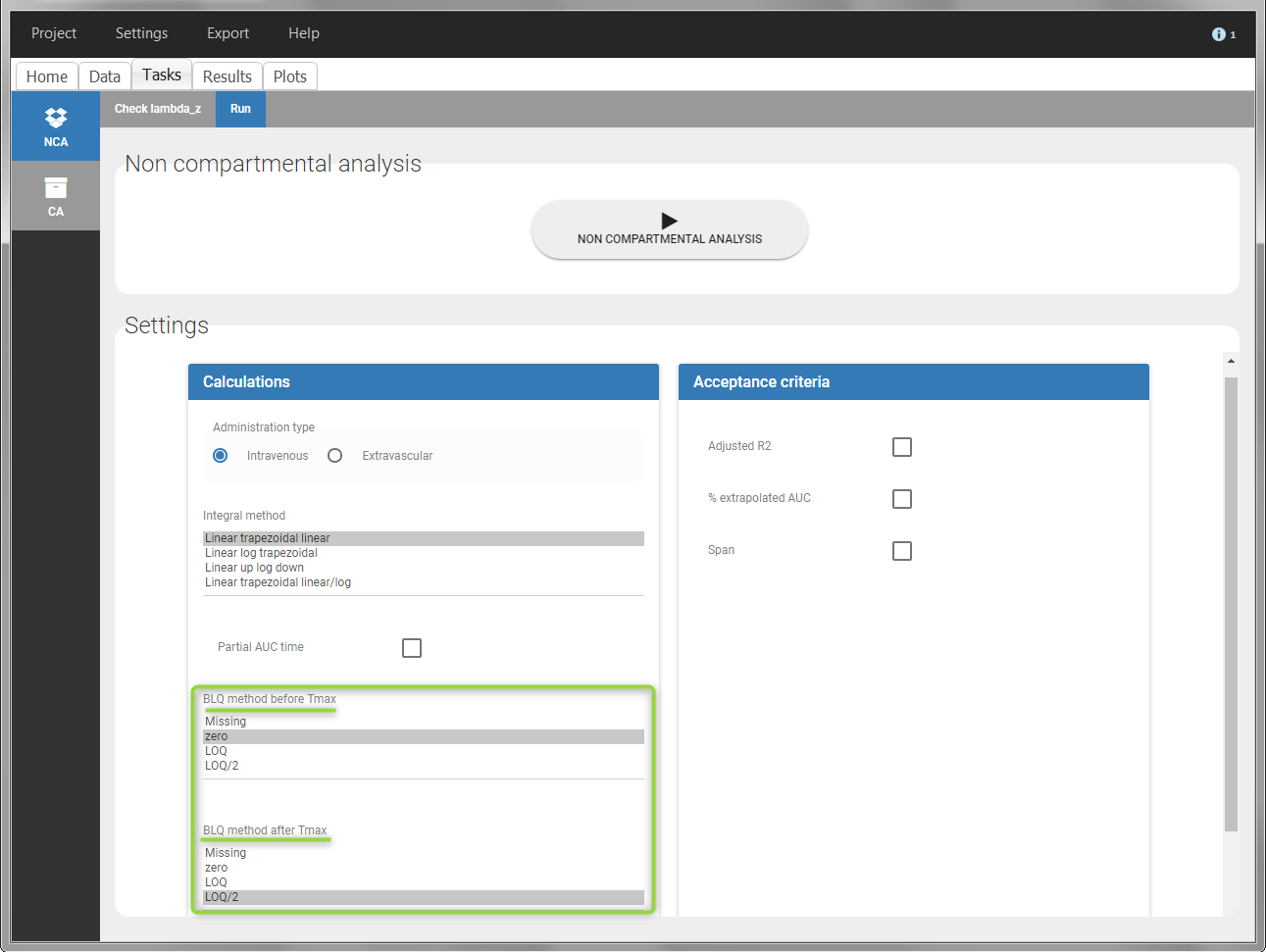

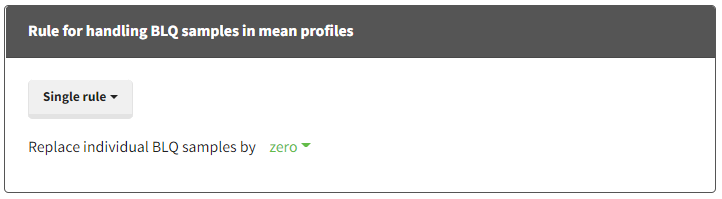

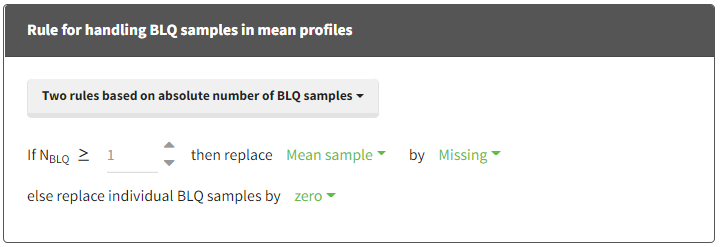

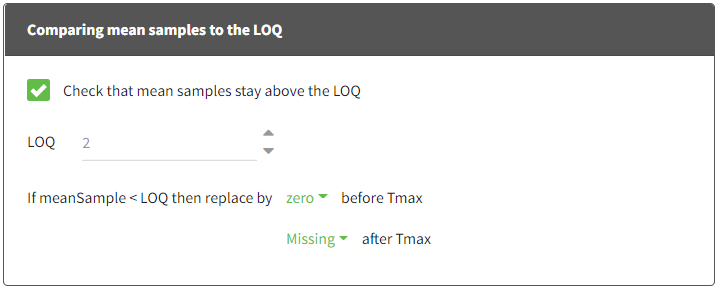

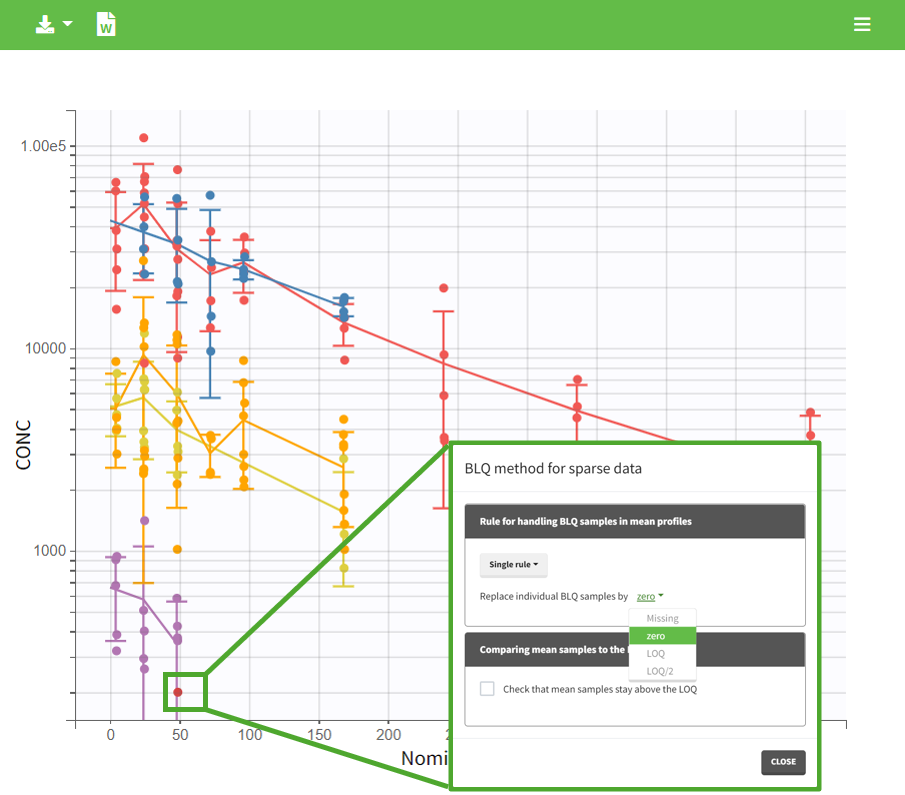

When performing an NCA analysis, the BLQ data before and after the Tmax are distinguished. They can be replaced by:

- zero

- the LOQ value

- the LOQ value divided by 2

- or considered as missing

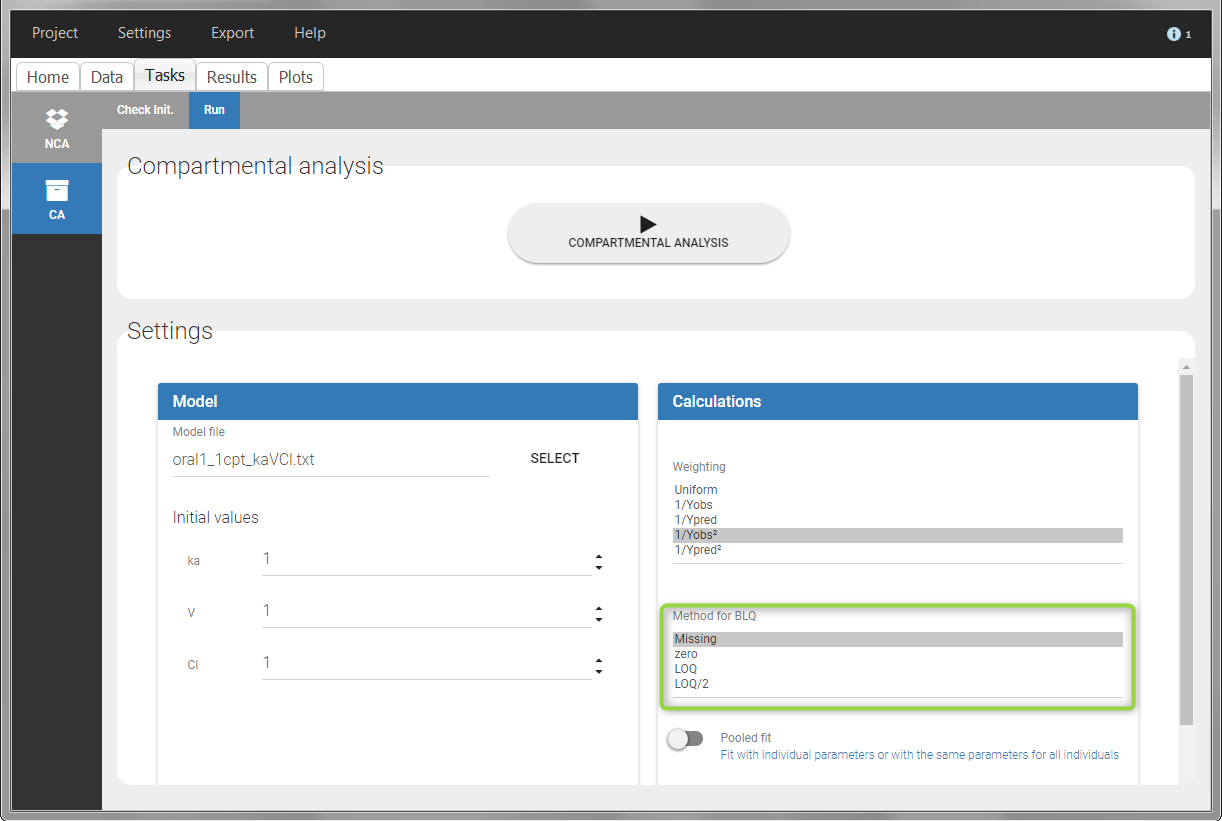

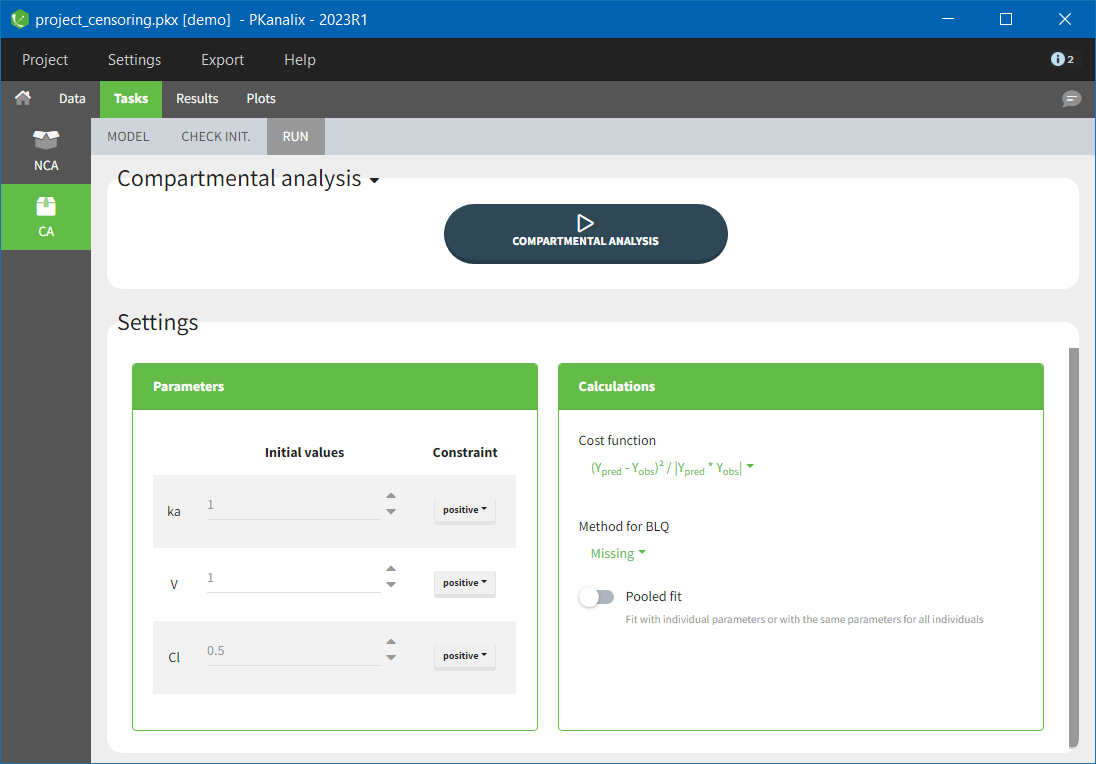

For a CA analysis, the same options are available, but no distinction is made between before and after Tmax. Once replaced, the BLQ data are handled as any other observation.
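The replacement options can be illustrated with the sketch below. It is not the PKanalix implementation. The function names and method labels are hypothetical, and taking Tmax as the time of the highest quantified measurement is an assumption.

```python
def _replacement(loq, method):
    """Map a BLQ point to its replacement value, or None when treated as missing."""
    if method == "zero":
        return 0.0
    if method == "LOQ":
        return loq
    if method == "LOQ/2":
        return loq / 2
    if method == "missing":
        return None
    raise ValueError(f"unknown method: {method}")


def replace_blq(points, before_tmax, after_tmax):
    """points: list of (time, value, censored); value holds the LOQ when censored.

    Requires at least one quantified (non-censored) measurement.
    """
    quantified = [(t, v) for t, v, cens in points if not cens]
    tmax = max(quantified, key=lambda p: p[1])[0]
    result = []
    for t, v, cens in points:
        if not cens:
            result.append((t, v))
            continue
        new_value = _replacement(v, before_tmax if t < tmax else after_tmax)
        if new_value is not None:
            result.append((t, new_value))
    return result


# Hypothetical profile with one BLQ point before Tmax and one after (LOQ = 1.0)
points = [(0.5, 1.0, True), (1.0, 4.0, False), (2.0, 6.0, False),
          (8.0, 1.5, False), (12.0, 1.0, True)]
nca_like = replace_blq(points, "zero", "LOQ/2")
ca_like = replace_blq(points, "missing", "missing")
```

Passing the same method for both arguments mimics the CA case, where no distinction is made between before and after Tmax.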

A LIMIT column can be added to record the other limit of the interval (in general zero). This value will not be used by PKanalix but can facilitate the transition from an NCA/CA analysis in PKanalix to a population model with Monolix.

To easily encode BLQ data in a dataset that only has BLQ tags in the observation column, you can use Data formatting.
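Outside the interface, the same encoding can be prepared with a short script. The sketch below converts a raw observation cell into an OBSERVATION value and a CENSORING flag, following the convention that the LOQ is written in the OBSERVATION column when CENSORING is 1. The tag “BLQ” and the function name are hypothetical.

```python
def encode_blq(raw_value, loq, blq_tag="BLQ"):
    """Return (observation, censoring) for one raw observation cell.

    raw_value is the text found in the original observation column.
    """
    if raw_value.strip() == blq_tag:
        return (loq, 1)  # censored: the LOQ goes into the observation column
    return (float(raw_value), 0)  # quantified measurement


# Hypothetical raw cells for a study with LOQ = 1.8
encoded = [encode_blq(cell, 1.8) for cell in ["BLQ", "3.4", "2.1", "BLQ"]]
```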

Examples:

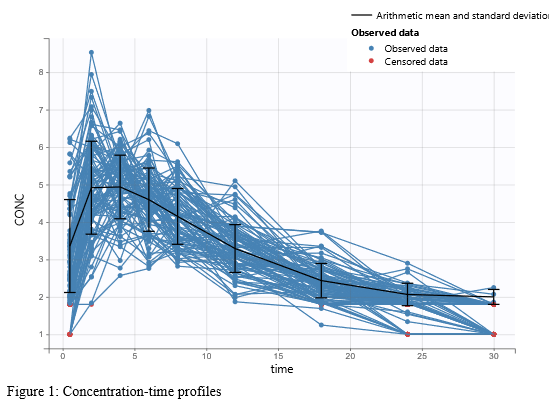

- demo project_censoring.pkx: two studies with BLQ data with two different LOQ

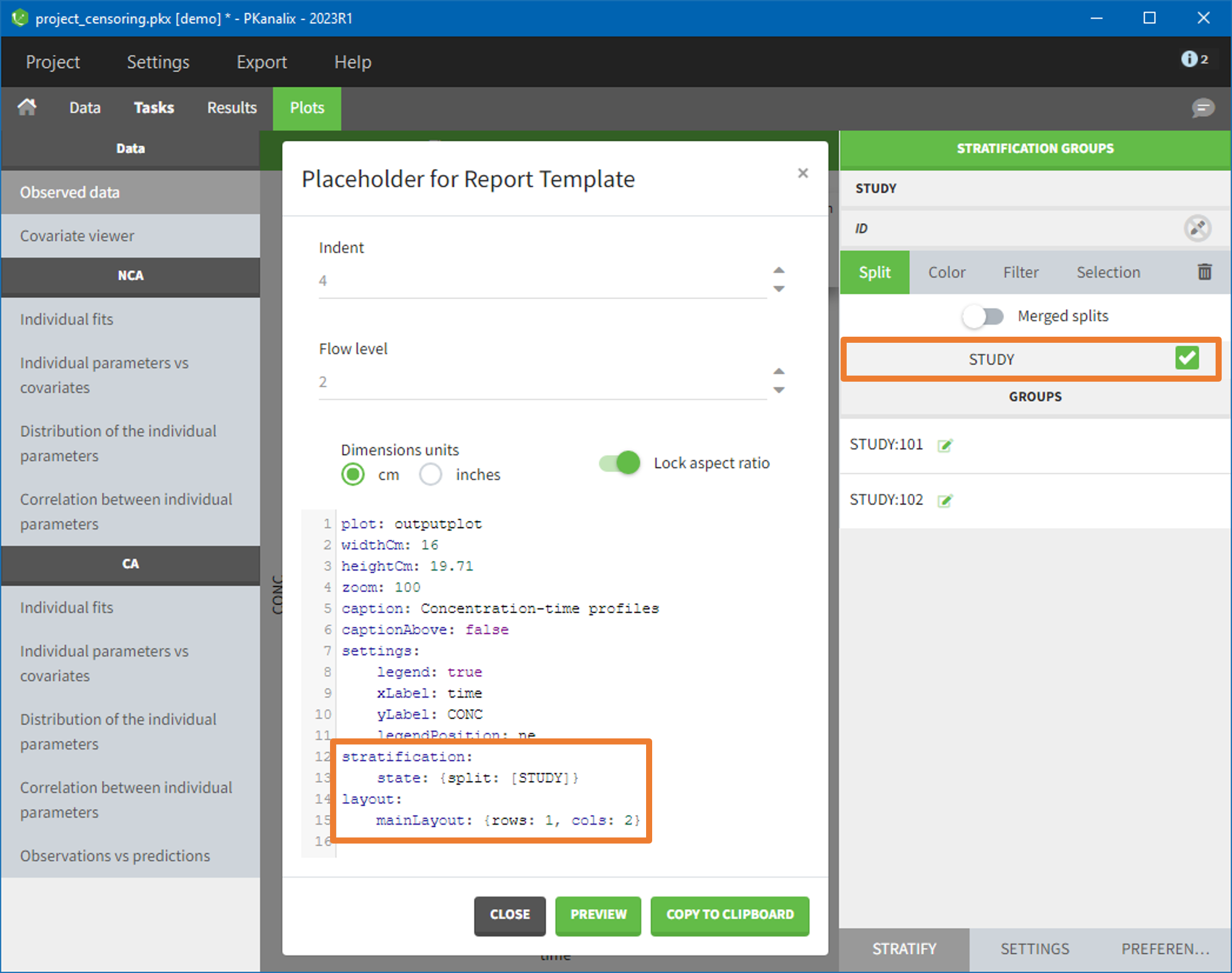

In this dataset, the measurements of two different studies (indicated in the STUDY column, tagged as CATEGORICAL COVARIATE in order to be carried over) are recorded. For study 101, the LOQ is 1.8 ug/mL, while it is 1 ug/mL for study 102. The BLQ data are marked with a “1” in the BLQ column, which is tagged as CENSORING. The LOQ values are indicated for each BLQ in the CONC column of measurements, tagged as OBSERVATION.

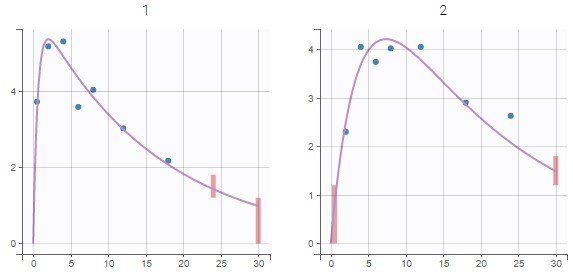

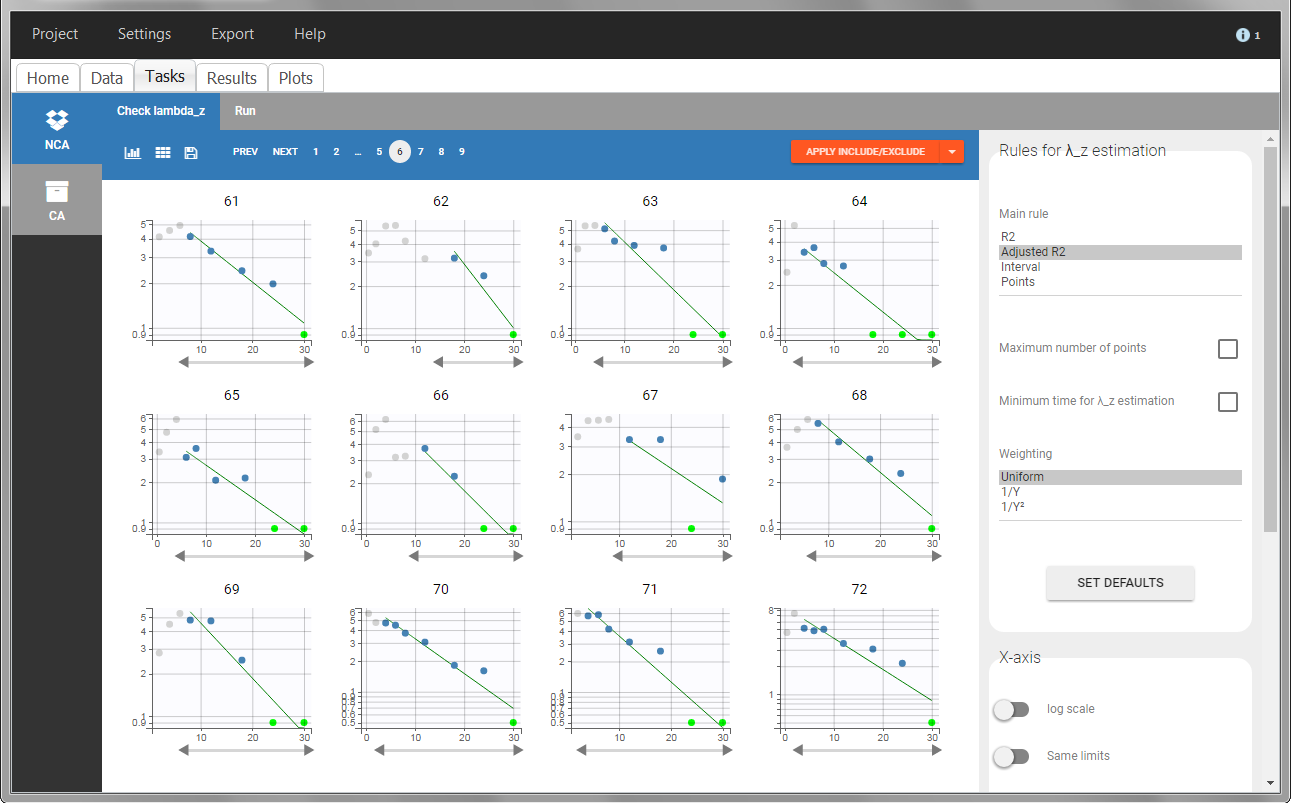

In the “Task>NCA>Run” tab, the user can choose how to handle the BLQ data. For the BLQ data before and after the Tmax, the BLQ data can be considered as missing (as if this data set row did not exist), or replaced by zero (default before Tmax), the LOQ value or the LOQ value divided by 2 (default after Tmax). In the “Check lambda_z” tab, the BLQ data are shown in green and displayed according to the replacement value.


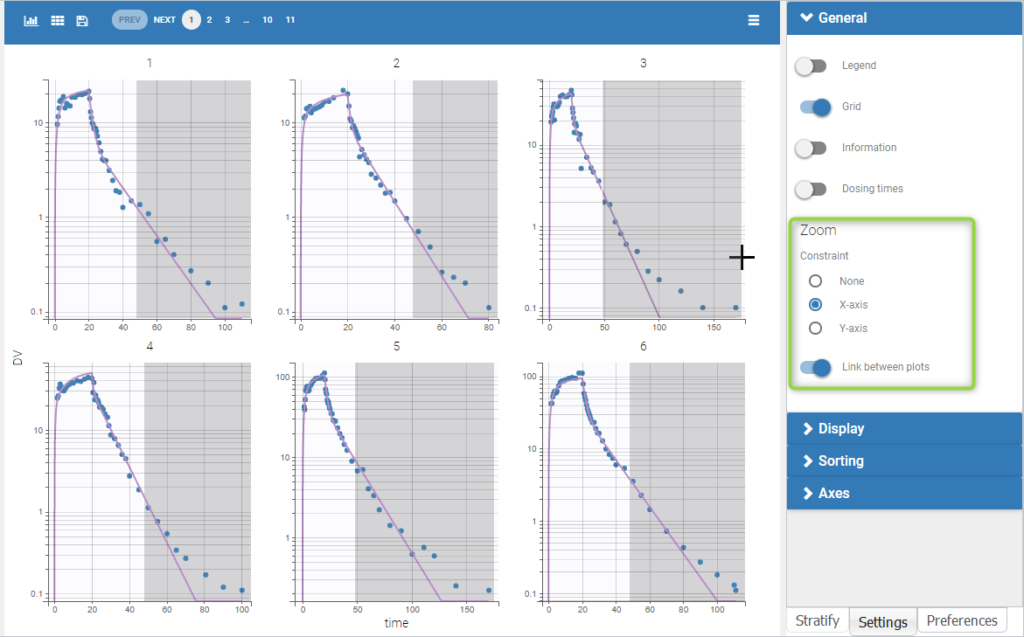

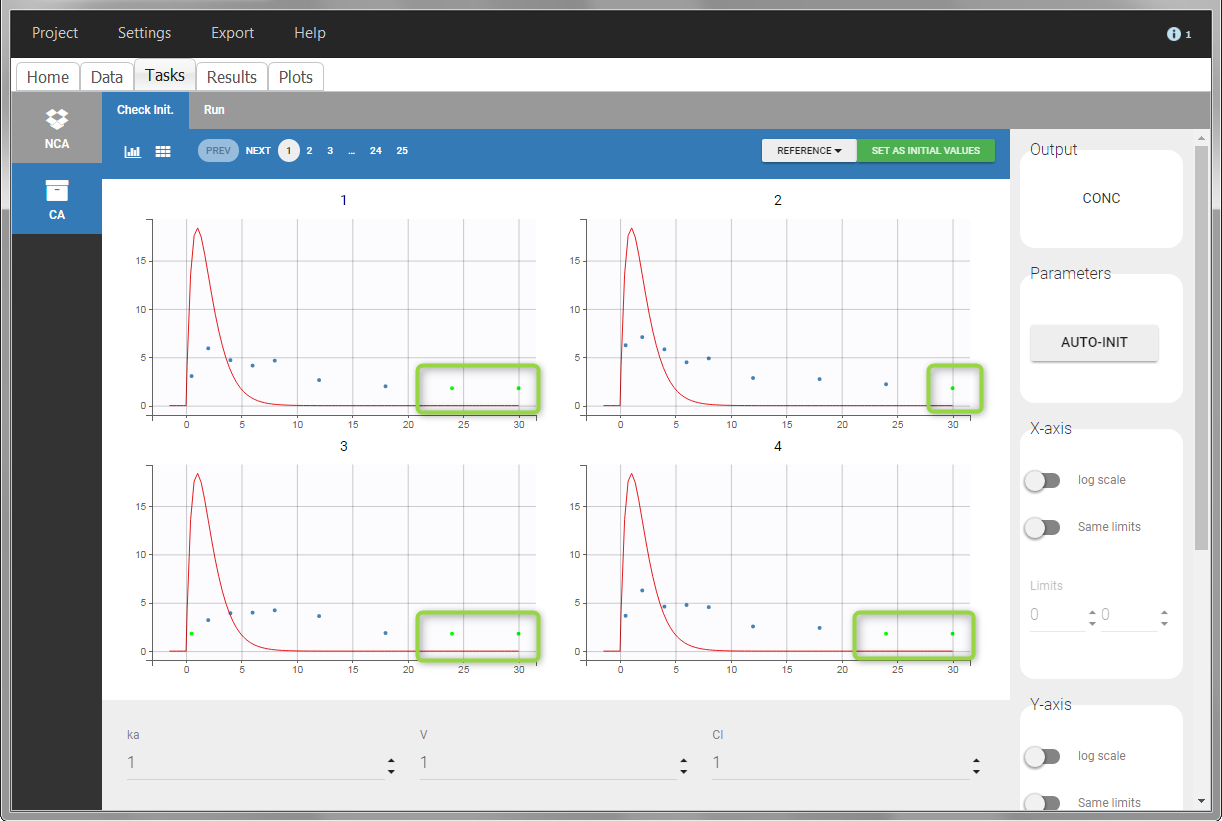

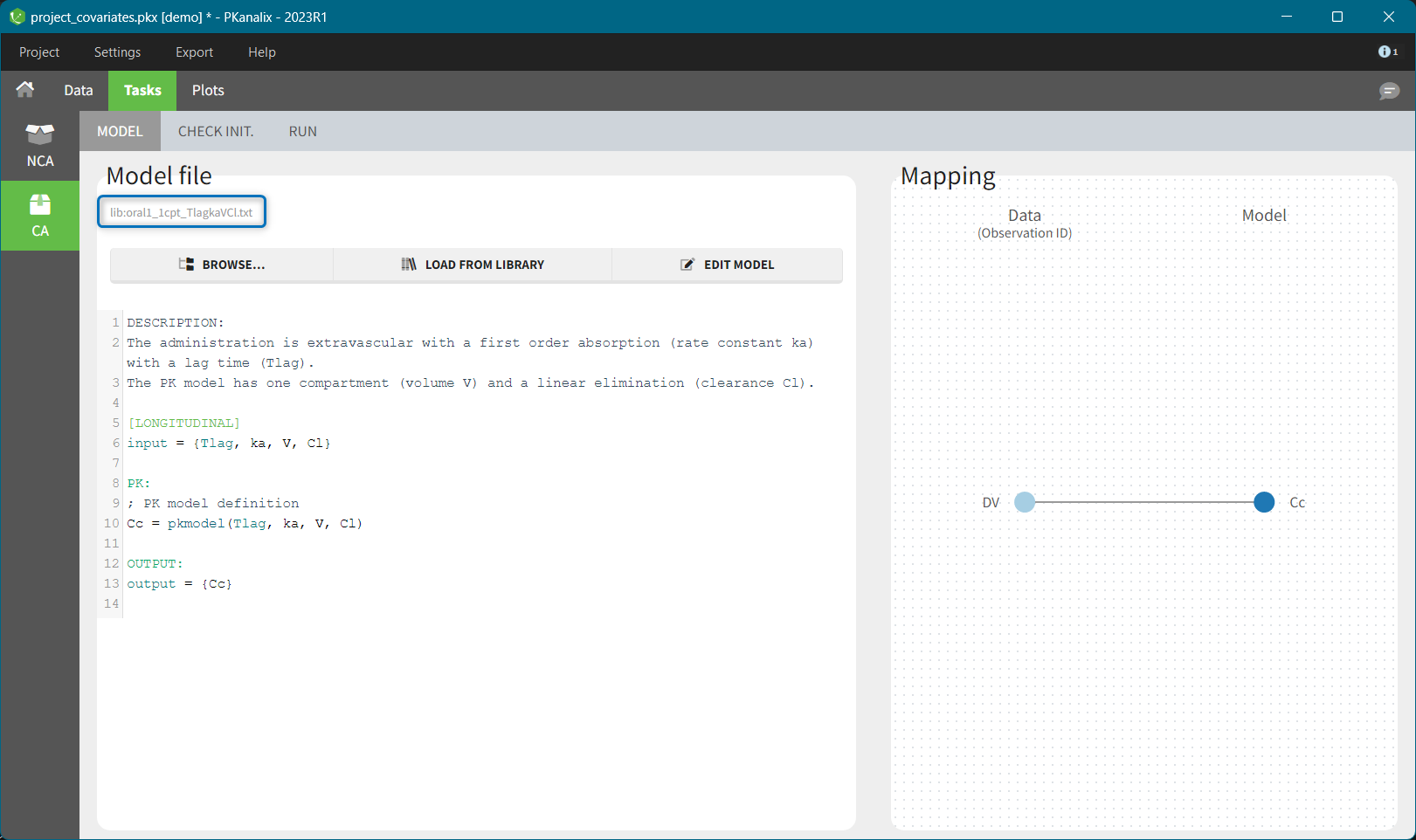

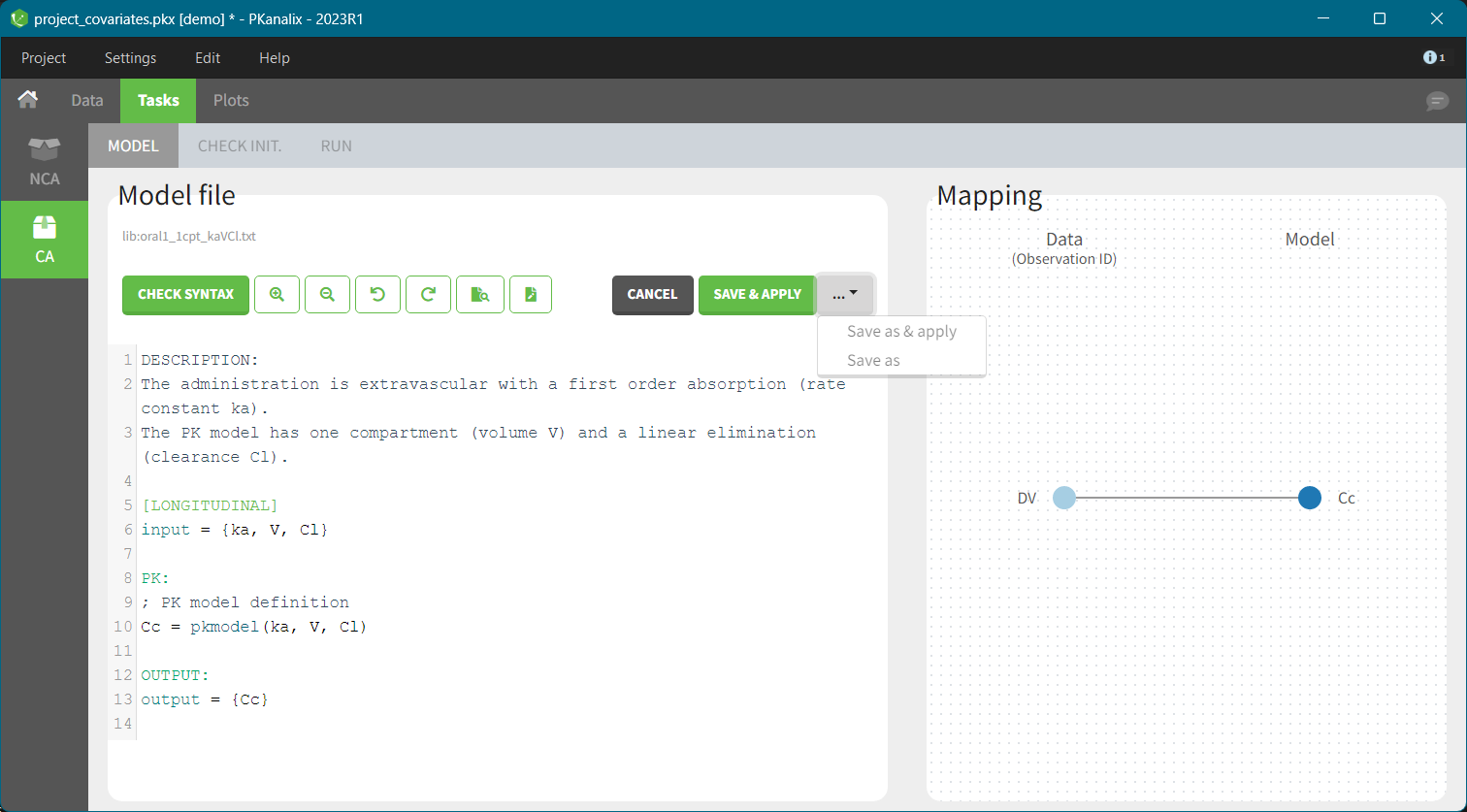

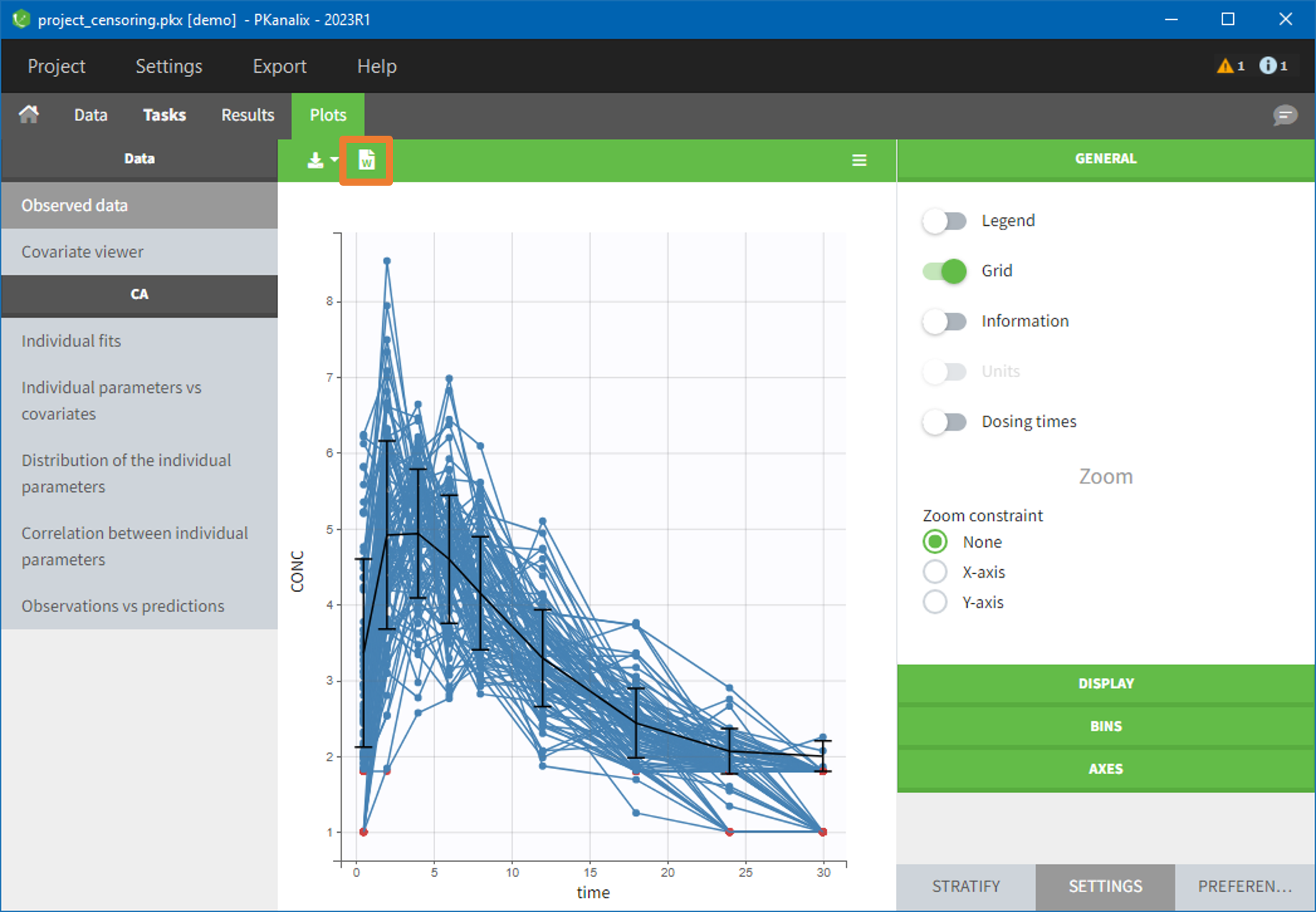

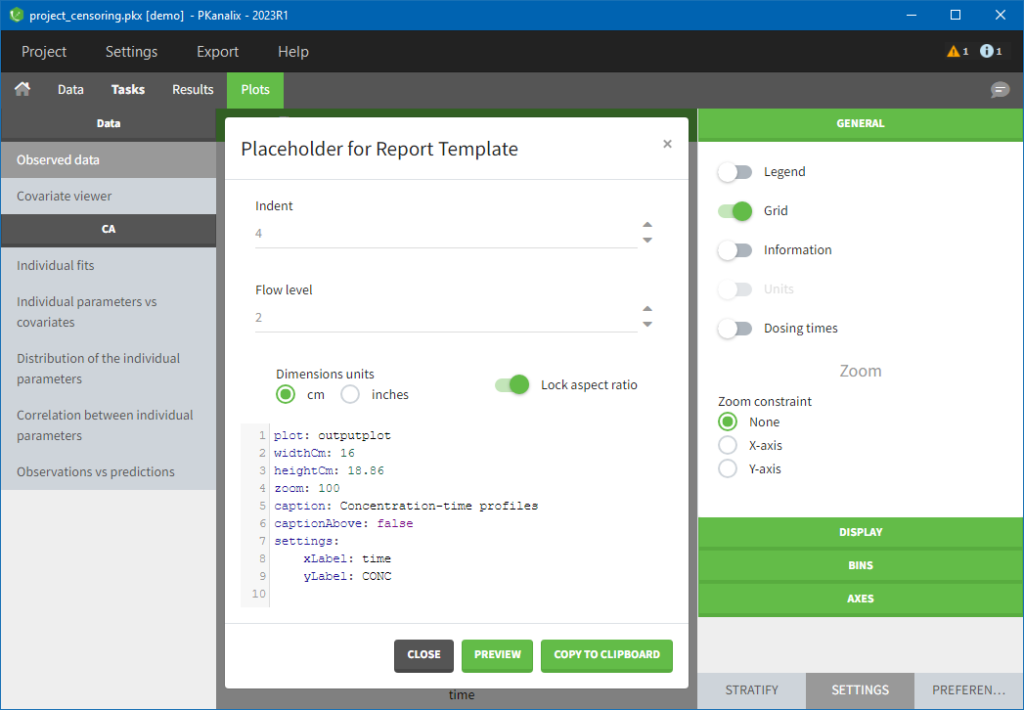

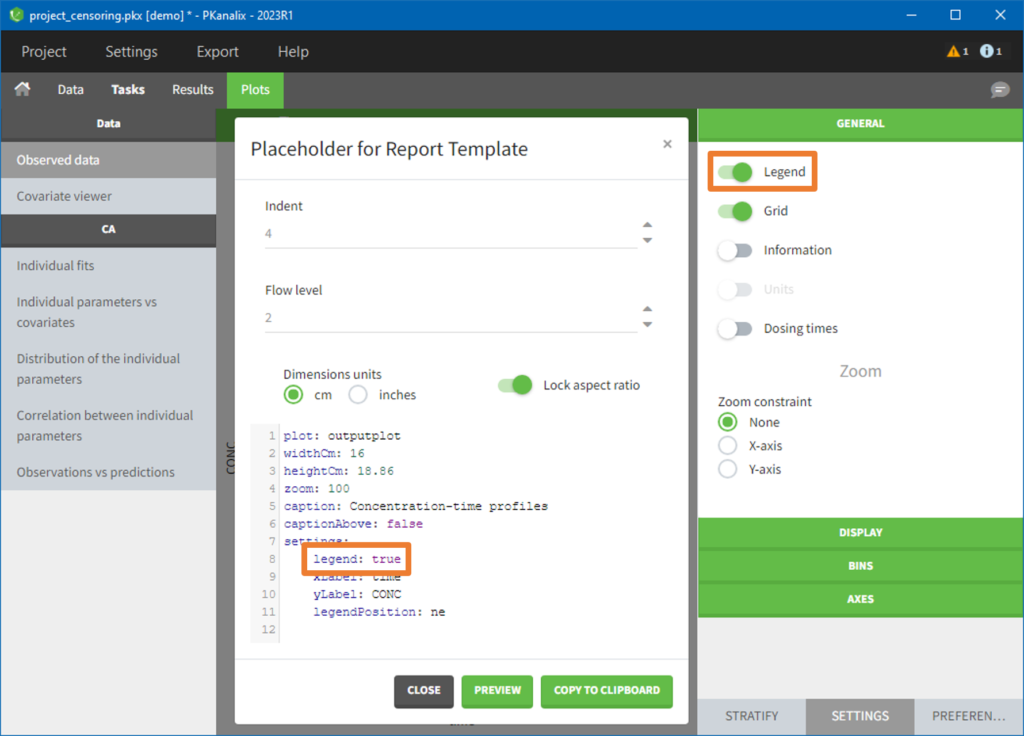

For the CA analysis, the replacement value for all BLQ can be chosen in the settings of the “Run” tab (default is Missing). In the “Check init.” tab, the BLQ are again displayed in green, at the LOQ value (irrespective of the chosen method for the calculations).


Urine data

To work with urine data, it is necessary to record the time and amount administered, the volume of urine collected for each time interval, the start and end time of the intervals and the drug concentration in a urine sample of each interval. The time intervals must be continuous (no gaps allowed).

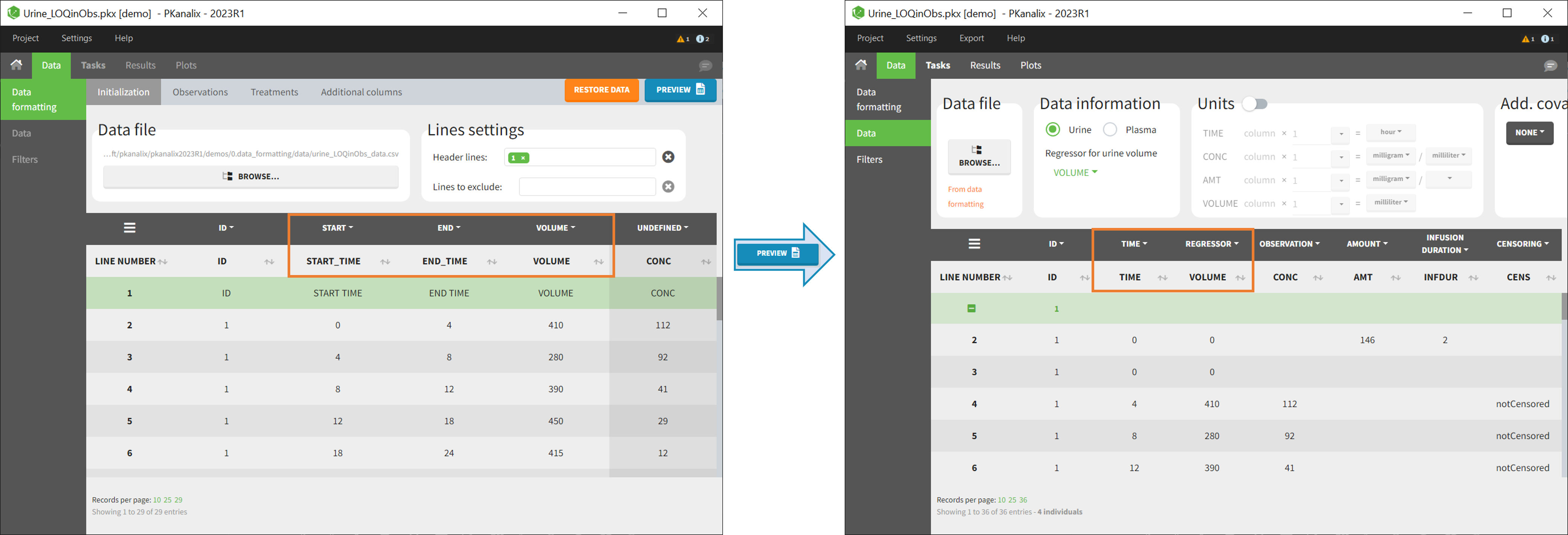

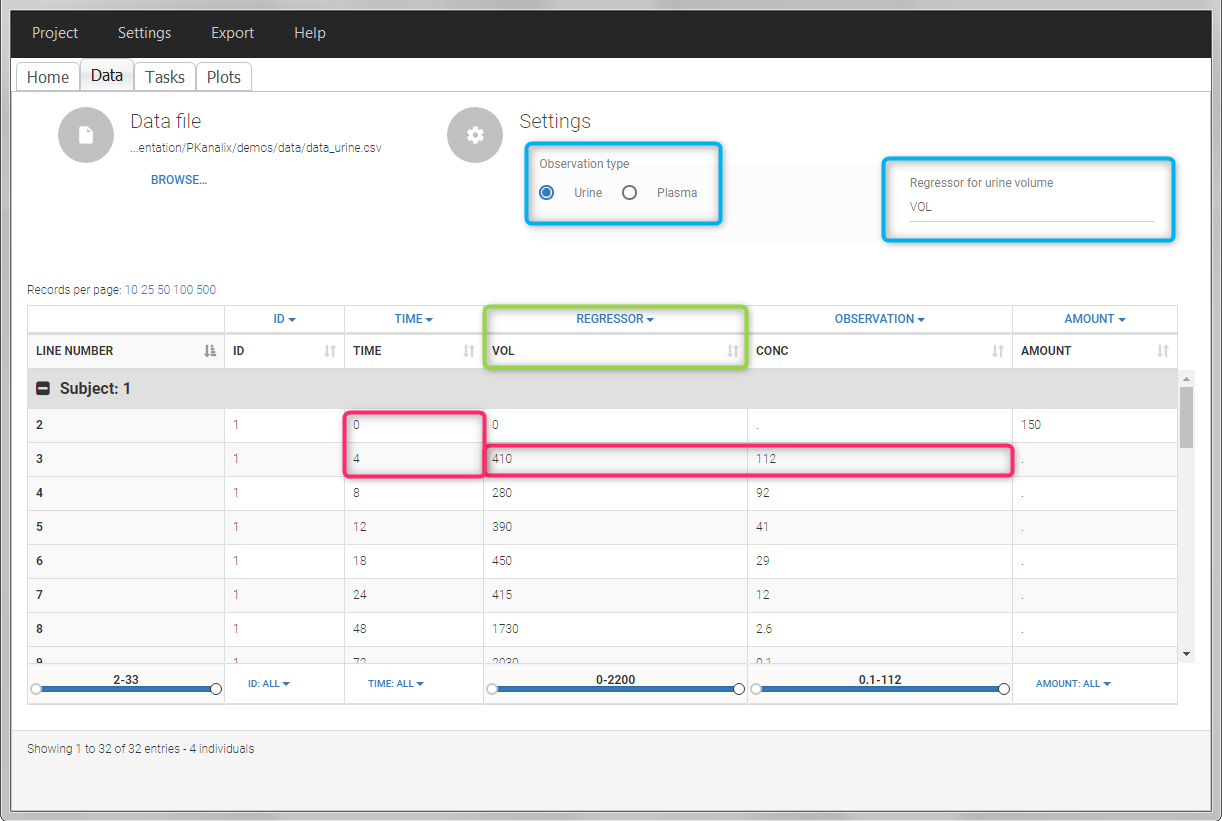

In PKanalix, the start and end times of the intervals are recorded in a single column, tagged as TIME column-type. In this way, the end time of an interval automatically acts as start time for the next interval. The concentrations are recorded in the OBSERVATION column. The volume column must be tagged as REGRESSOR column type. This general column-type of MonolixSuite data sets makes it easy to transition to the other applications of the Suite. As several REGRESSOR columns are allowed, the user can select which REGRESSOR column should be used as volume. The concentration and volume measured for the interval [t1,t2] are noted on the t2 line. The volume value on the dose line is meaningless, but it cannot be a dot. We thus recommend setting it to zero.

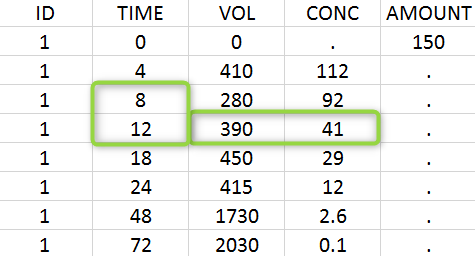

A typical urine data set has the following structure. A dose of 150 ng has been administered at time 0. The first sampling interval spans from the dose at time 0 to 4h post-dose. During this time, 410 mL of urine have been collected. In this sample, the drug concentration is 112 ng/mL. The second interval spans from 4h to 8h, the collected urine volume is 280 mL and its concentration is 92 ng/mL. The third interval is marked on the figure: 390 mL of urine have been collected from 8h to 12h.

The given data is used to calculate the interval midpoints and the excretion rate for each interval. This information is then used to calculate \(\lambda_z\) and the urine-specific parameters. In “Tasks/Check lambda_z”, we display the midpoints and excretion rates. However, in the “Plots>Data viewer”, we display the measured concentrations at the end time of the interval.
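As a worked illustration using the first two intervals of the example above, the sketch below computes the midpoint and the excretion rate of each interval. It assumes the usual definition of the excretion rate (amount excreted, i.e. concentration times volume, divided by the interval duration). The function name and the list layout are hypothetical.

```python
def urine_intervals(times, volumes, concs):
    """Return (midpoint, excretion_rate) for each collection interval.

    times holds the interval boundaries. volumes[i] and concs[i] describe the
    interval ending at times[i], as in the dataset layout (index 0 is the
    dose line and is not used).
    """
    results = []
    for i in range(1, len(times)):
        start, end = times[i - 1], times[i]
        amount = concs[i] * volumes[i]  # ng/mL * mL = ng
        results.append(((start + end) / 2, amount / (end - start)))
    return results


# Values from the text: 0-4 h (410 mL, 112 ng/mL) and 4-8 h (280 mL, 92 ng/mL)
intervals = urine_intervals([0, 4, 8], [0, 410, 280], [None, 112, 92])
```

For the first interval this gives a midpoint of 2 h and an excretion rate of 112 × 410 / 4 = 11480 ng/h.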

Example:

- demo project_urine.pkx: urine PK dataset

In this urine PK data set, we record the consecutive time intervals in the “TIME” column tagged as TIME. The collected volumes and measured concentrations are in the columns “VOL” and “CONC”, respectively tagged as REGRESSOR and OBSERVATION. Note that the volume and concentration are indicated on the line of the interval end time. The volume on the first line (start time of the first interval, as well as dose line) is set to zero as it must be a double. This value will not be used in the calculations. Once the dataset is accepted, the observation type must be set to “urine” and the regressor column corresponding to the volume defined.

In “Tasks>Check lambda_z”, the excretion rates are plotted at the midpoint times for each individual. The choice of the lambda_z works as usual.

Once the NCA task has run, urine-specific PK parameters are displayed in the “Results” tab.

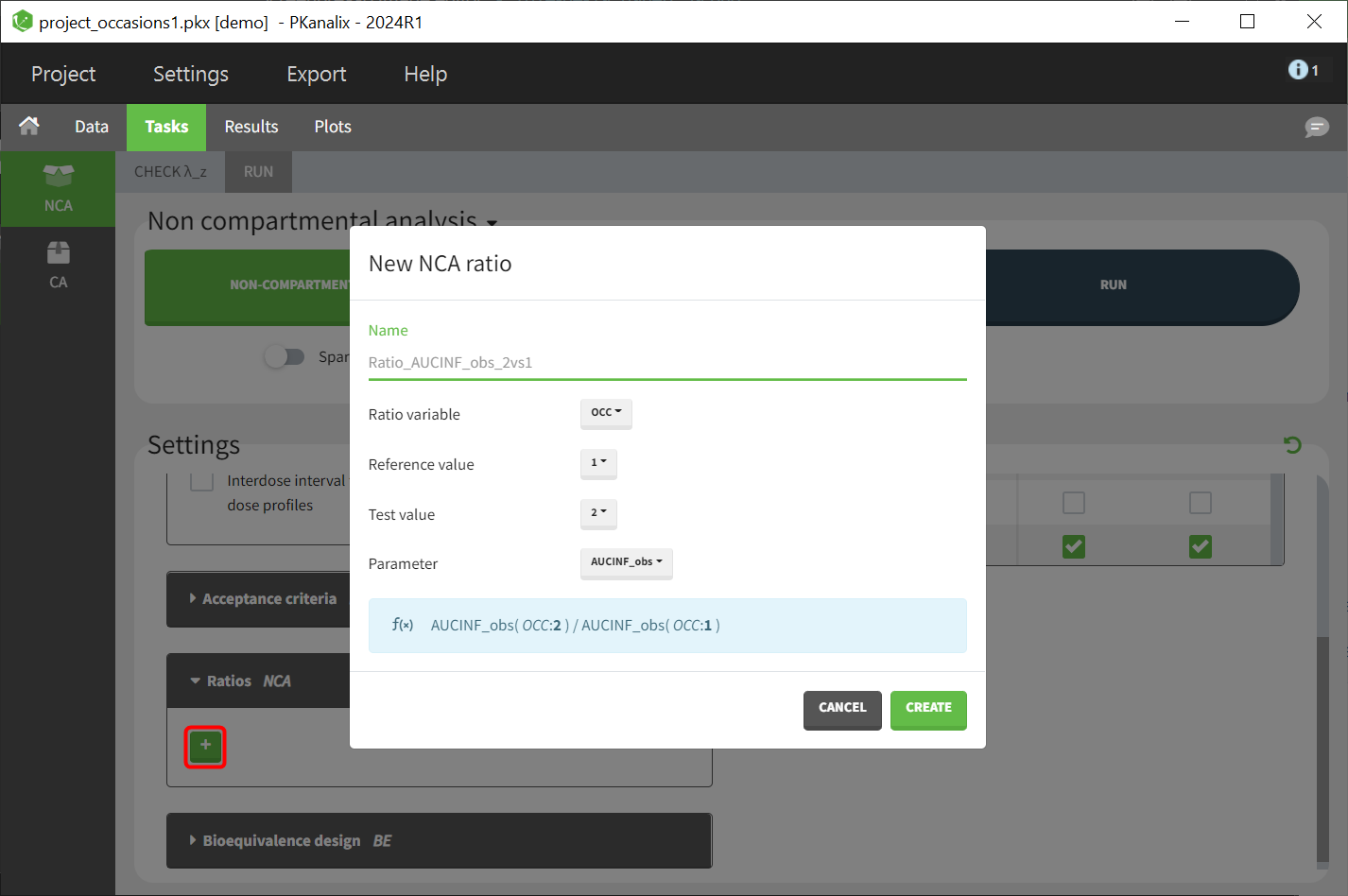

Occasions (“Sort” variables)

The main sort level is the individual, indicated in the ID column. Additional sort levels can be encoded using one or several OCCASION column(s). OCCASION columns contain integer values that distinguish different time periods for a given individual. The time values can restart at zero or continue when switching from one occasion to the next one. The variables differing between periods, such as the treatment for a crossover study, are tagged as CATEGORICAL or CONTINUOUS COVARIATES (see below). The NCA and CA calculations will be performed on each ID-OCCASION combination. Each occasion is considered independent of the other occasions (i.e. a washout is applied between each occasion).

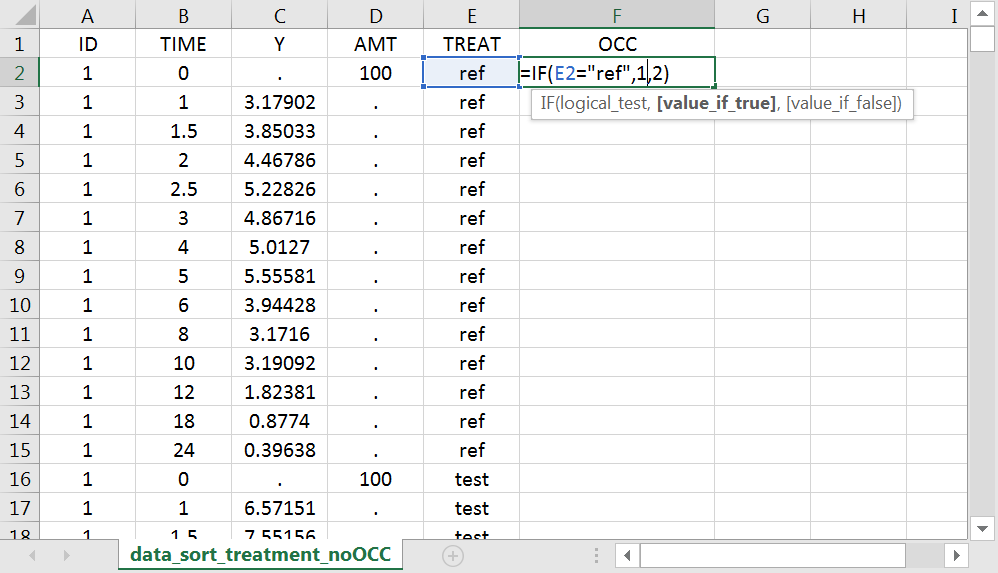

Note: occasion columns encoding the sort variables as integers can easily be added to an existing data set using Excel or R.

With R, the “OCC” column can be added to an existing “data” data frame with a column “TREAT” using data$OCC <- ifelse(data$TREAT=="ref", 1, 2).

With Excel, assuming the sort variable is encoded in the column E with values “ref” and “test”, type =IF(E2="ref",1,2) to generate the first value of the “OCC” column and then propagate to the entire column.
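The same recoding can also be done in Python. The sketch below mirrors the R and Excel expressions given in this note on a list of records. The record layout and the function name are hypothetical.

```python
def add_occasion(rows, source="TREAT", reference="ref"):
    """Return copies of the rows with an integer OCC column (1 for reference, else 2)."""
    return [{**row, "OCC": 1 if row[source] == reference else 2} for row in rows]


# Hypothetical crossover records for one subject
records = [
    {"ID": 1, "TREAT": "ref"},
    {"ID": 1, "TREAT": "test"},
]
with_occ = add_occasion(records)
```

The original records are left untouched, so the recoded copy can be written to a new file.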

Examples:

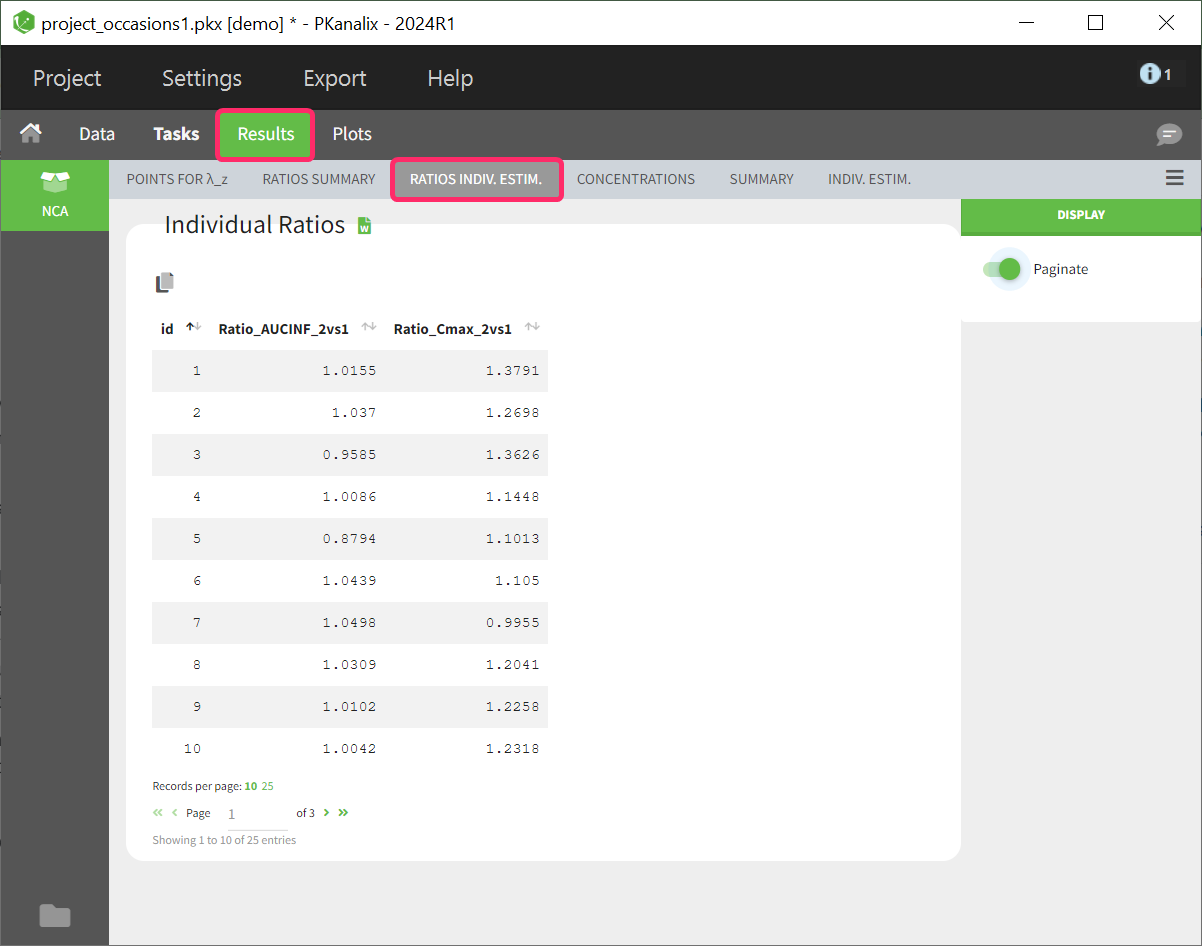

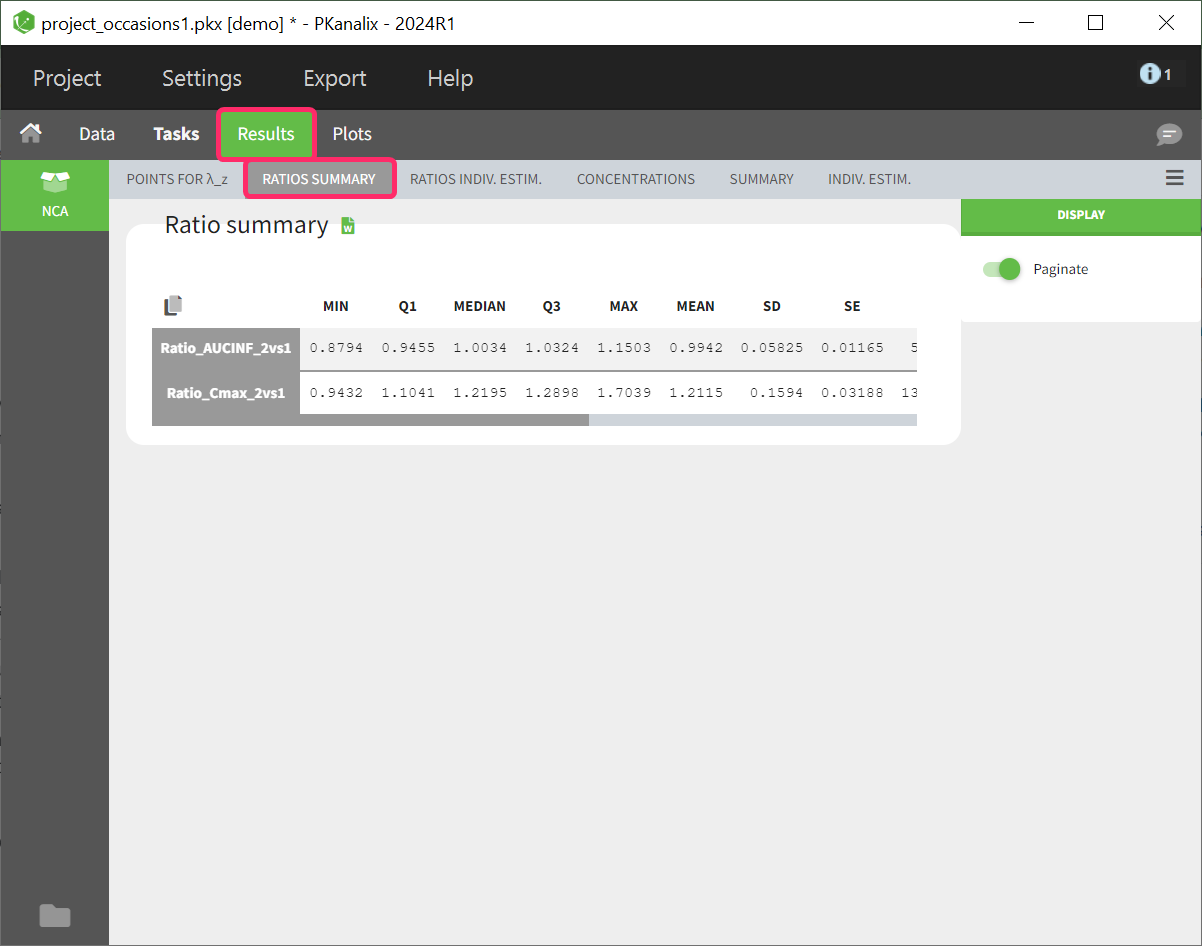

- demo project_occasions1.pkx: crossover study with two treatments

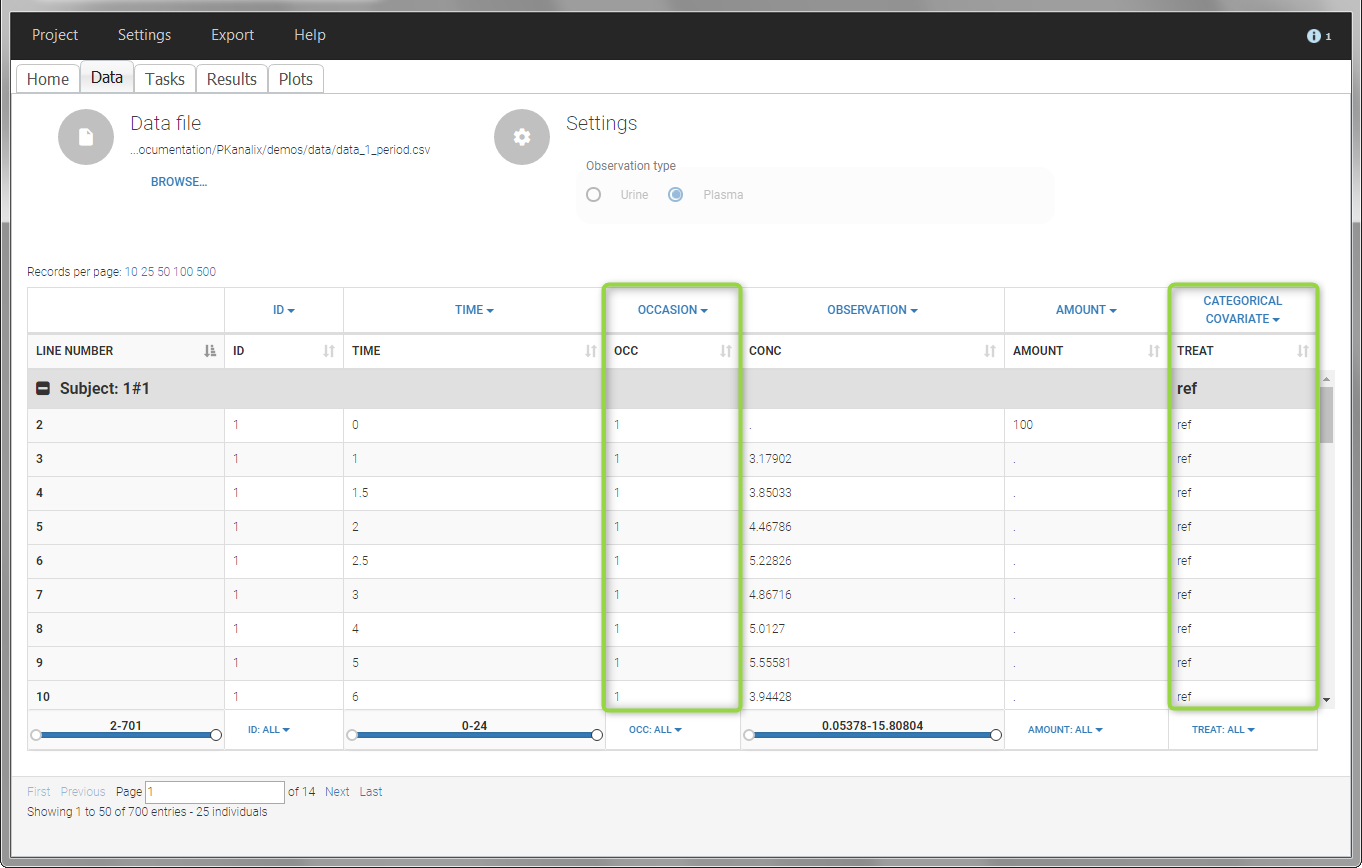

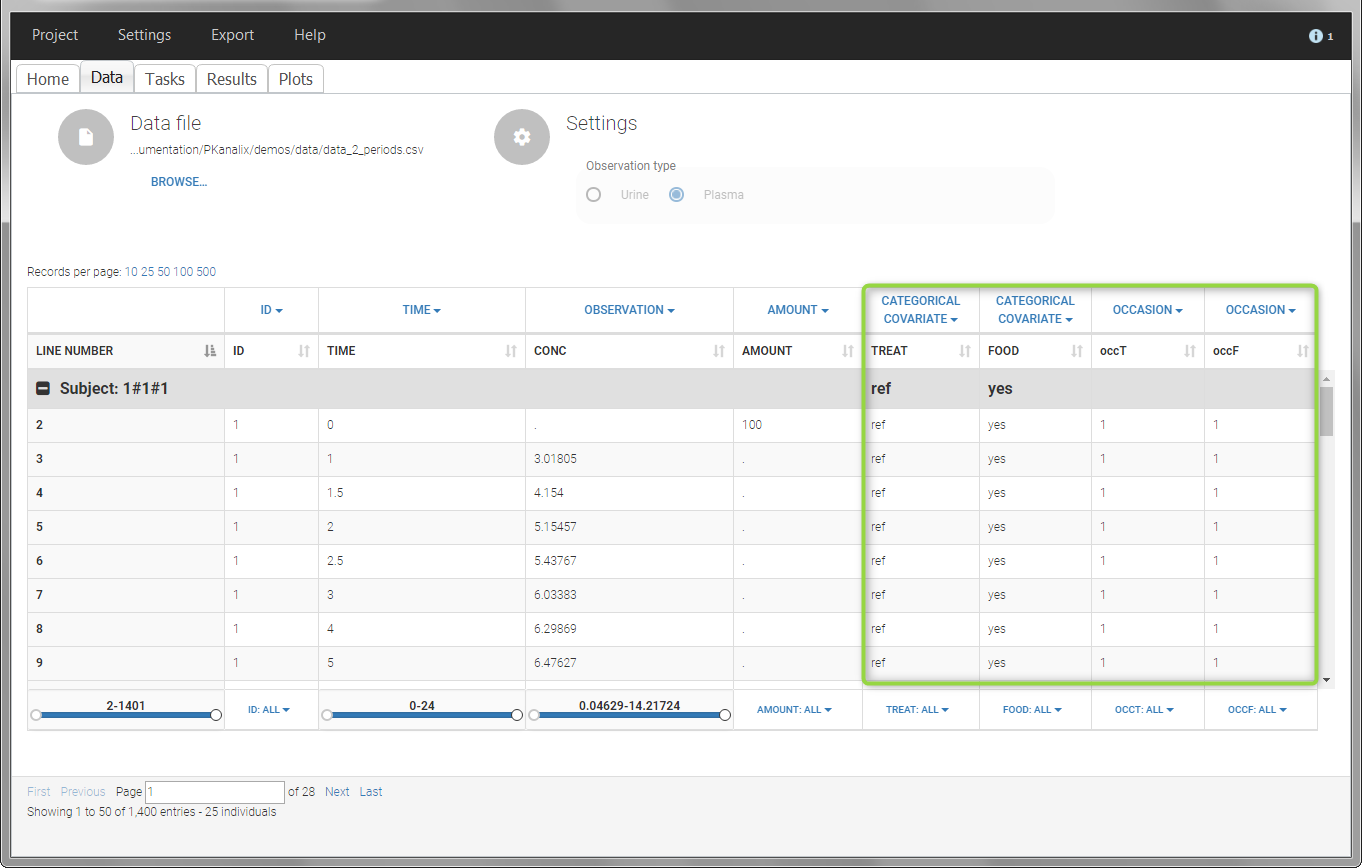

The subject column is tagged as ID, the treatment column as CATEGORICAL COVARIATE and an additional column encoding the two periods with integers “1” and “2” as OCCASION column.

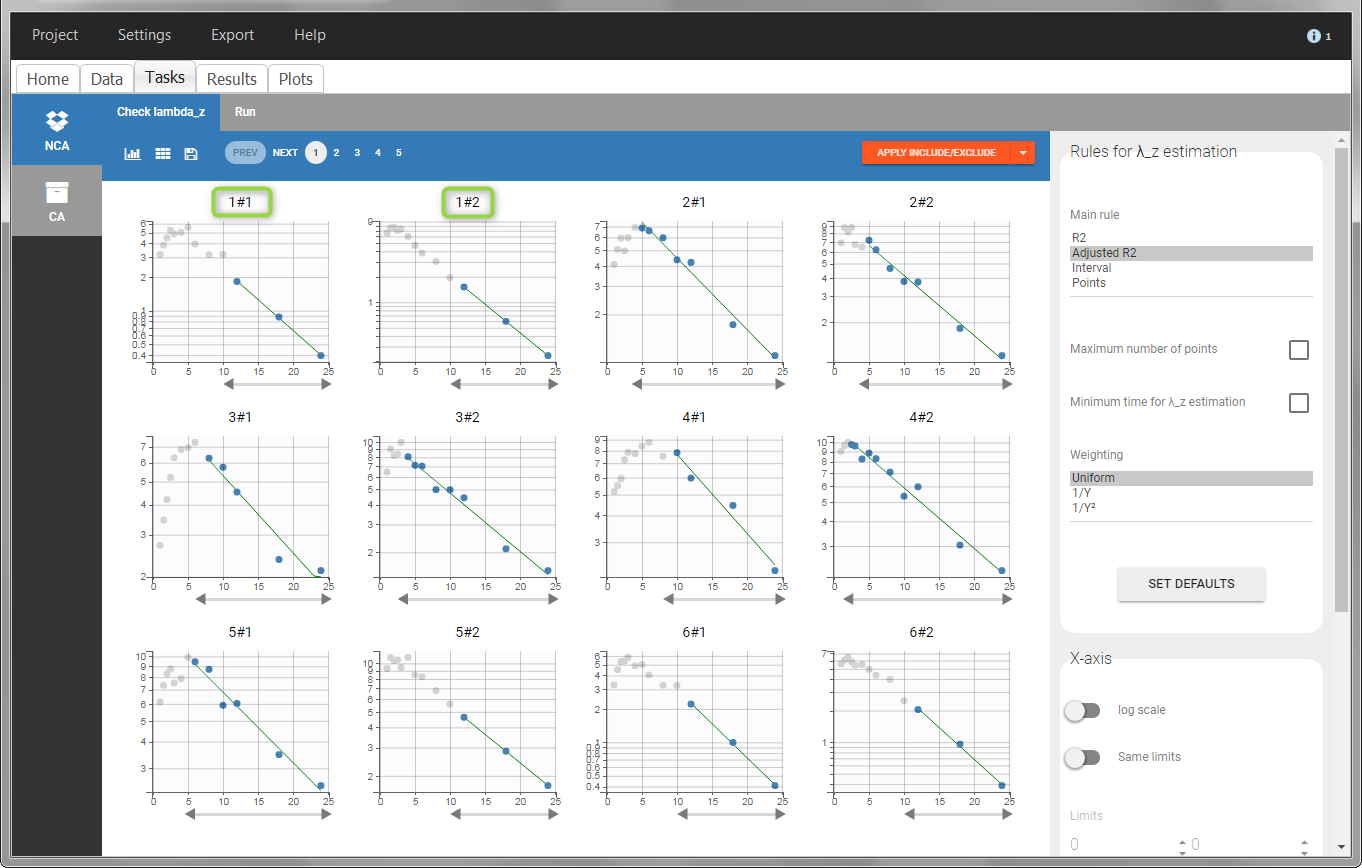

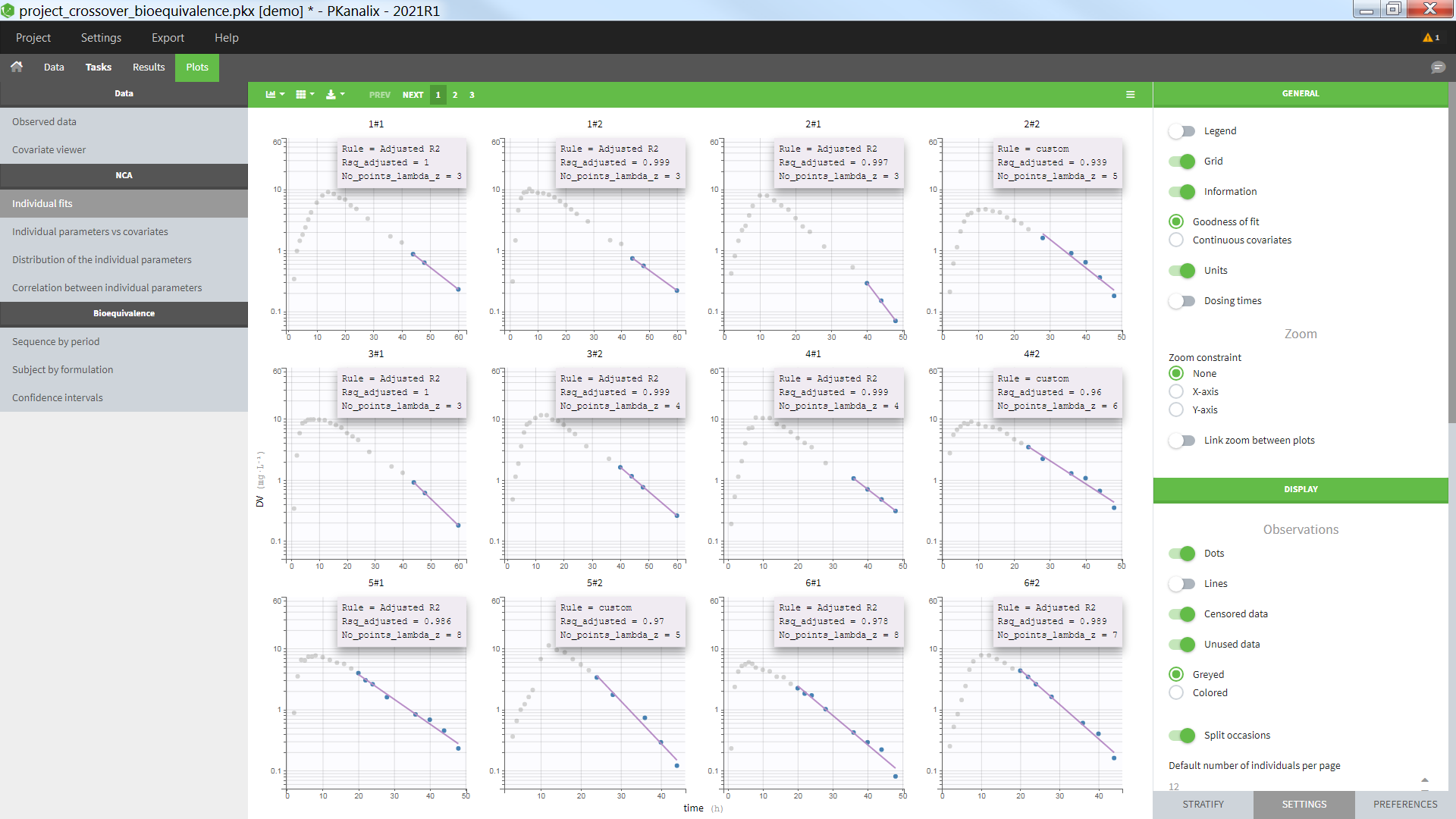

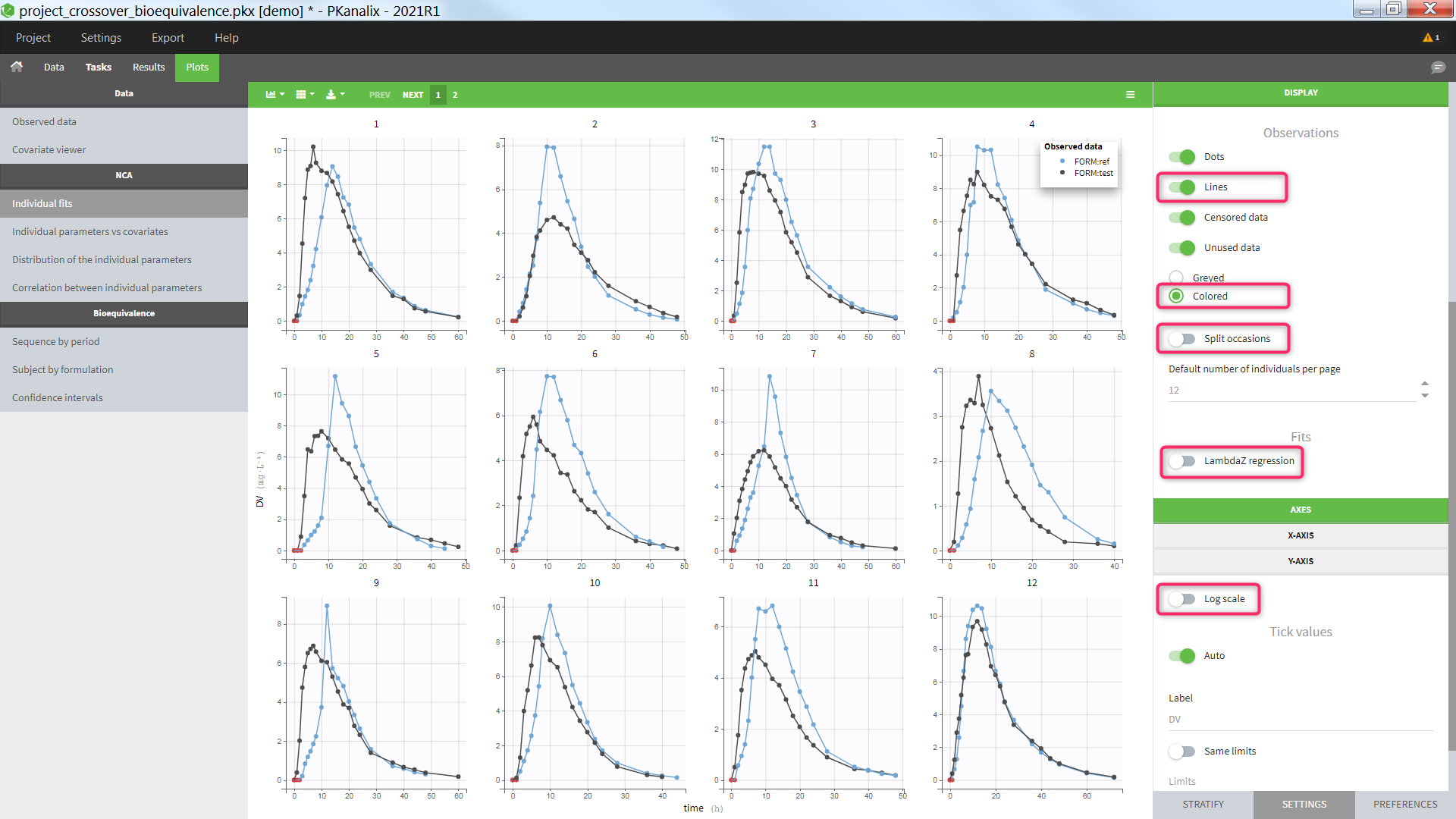

In the “Check lambda_z” (for the NCA) and the “Check init.” (for CA), each occasion of each individual is displayed. The syntax “1#2” indicates individual 1, occasion 2, according to the values defined in the ID and OCCASION columns.
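To illustrate how records are split into ID-OCCASION combinations, the sketch below groups the measurements of a small table and labels each group with the “ID#OCCASION” syntax described here. The column names and values are hypothetical, and the sketch only mimics the labeling, not the calculations.

```python
from collections import defaultdict


def split_by_occasion(rows):
    """Group (time, concentration) pairs under labels of the form 'ID#OCC'."""
    groups = defaultdict(list)
    for row in rows:
        label = f"{row['ID']}#{row['OCC']}"
        groups[label].append((row["TIME"], row["CONC"]))
    return dict(groups)


# Hypothetical records: individual 1 observed on two occasions
records = [
    {"ID": 1, "OCC": 1, "TIME": 1.0, "CONC": 3.2},
    {"ID": 1, "OCC": 1, "TIME": 2.0, "CONC": 2.4},
    {"ID": 1, "OCC": 2, "TIME": 1.0, "CONC": 4.1},
]
profiles = split_by_occasion(records)
```

Each resulting group corresponds to one profile on which the NCA or CA calculations would be run independently.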

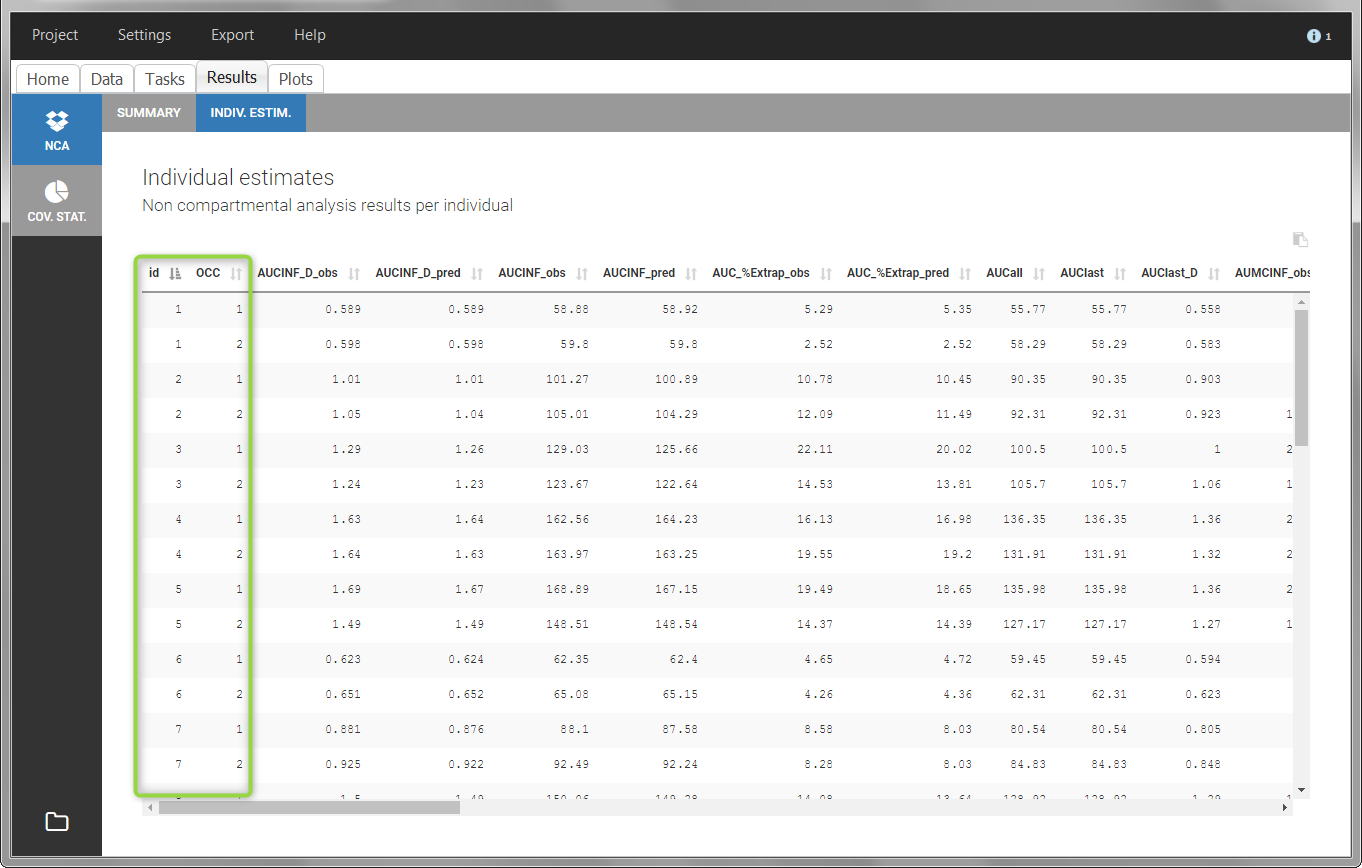

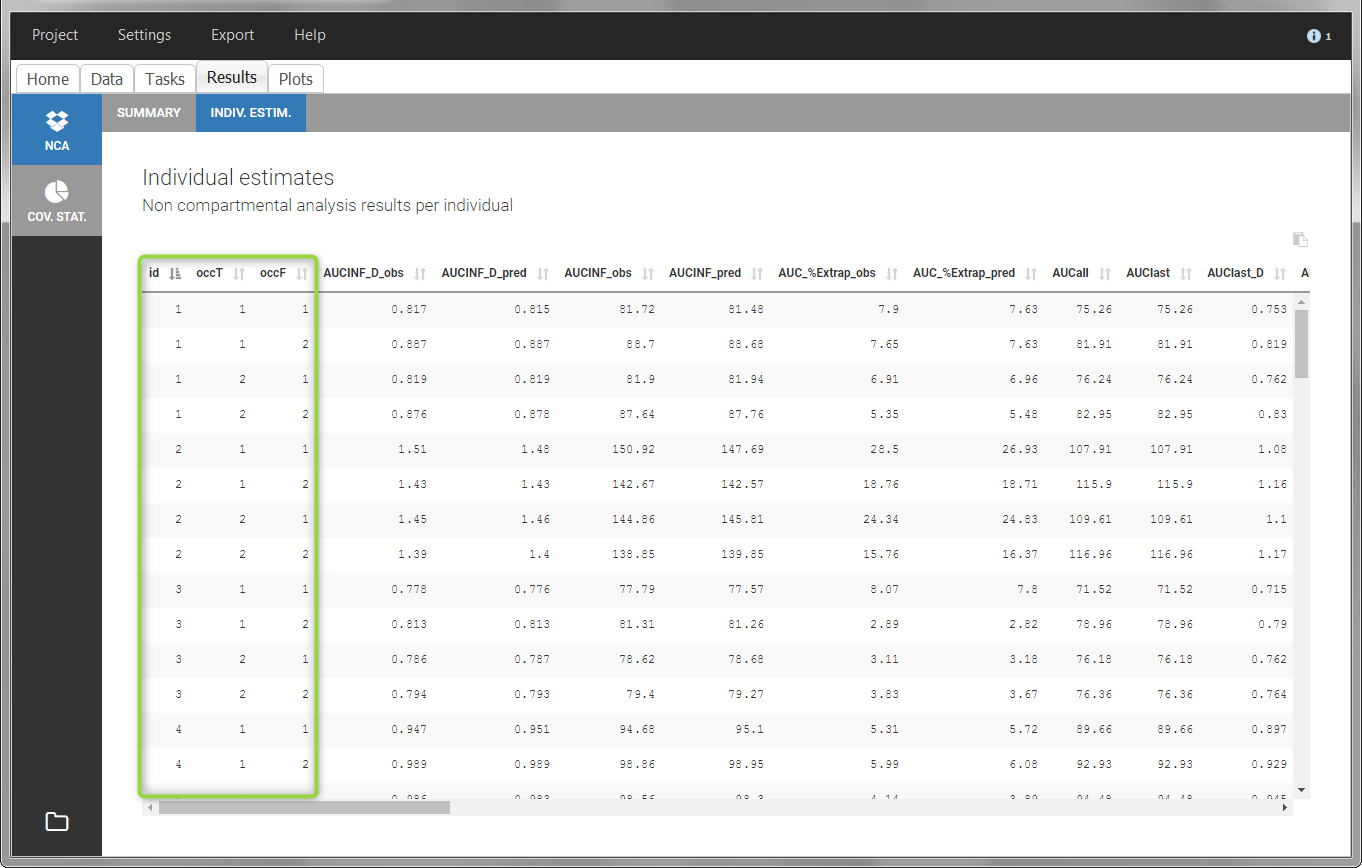

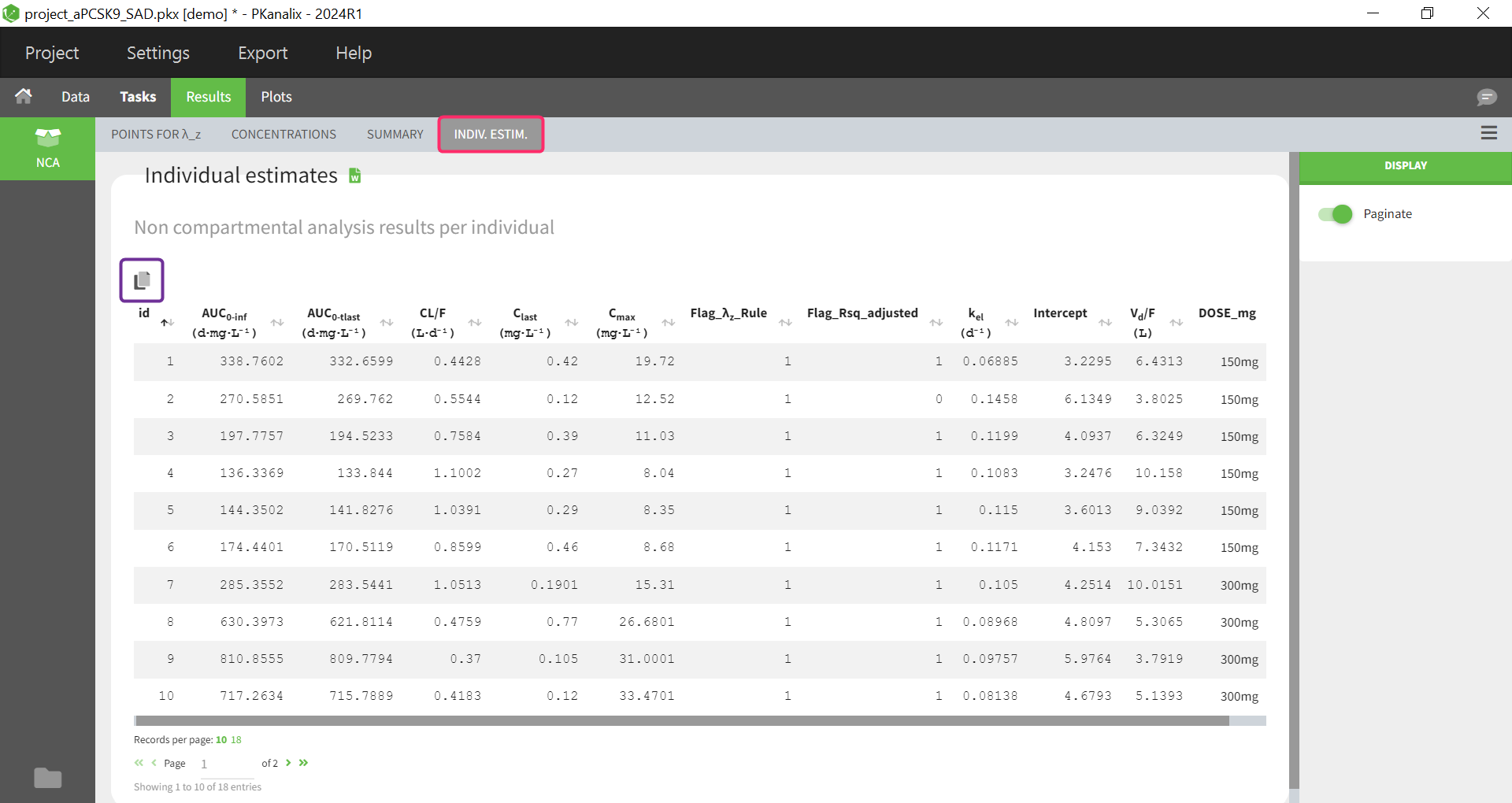

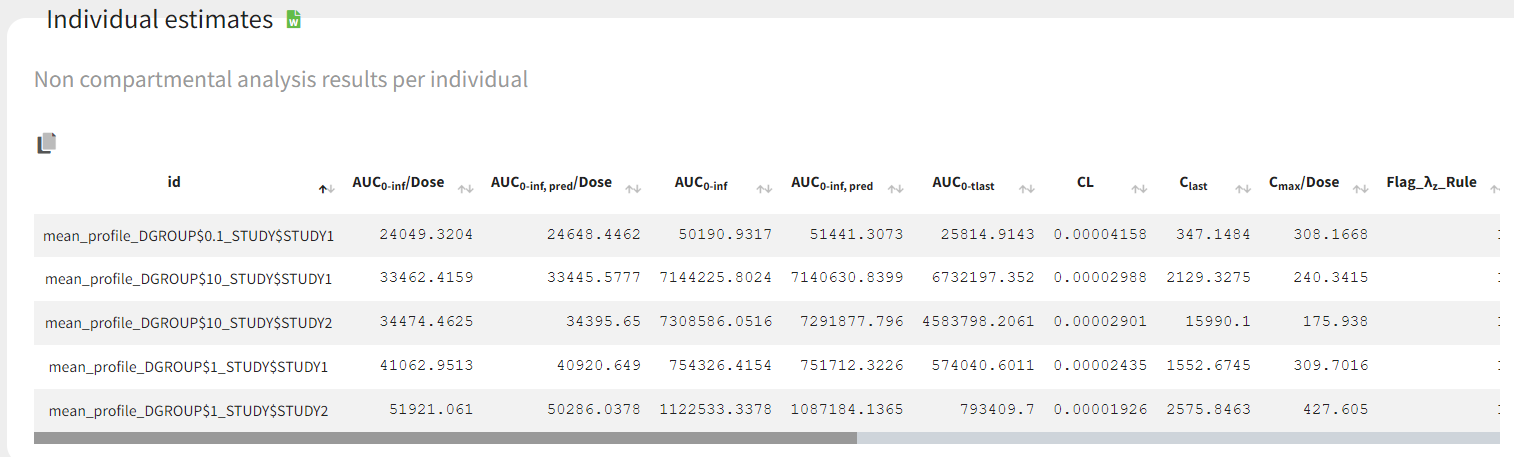

In the “Individual estimates” output tables, the first columns indicate the ID and OCCASION (reusing the data set headers). The covariates are indicated at the end of the table. Note that it is possible to sort the table by any column, including ID, OCCASION and COVARIATES.

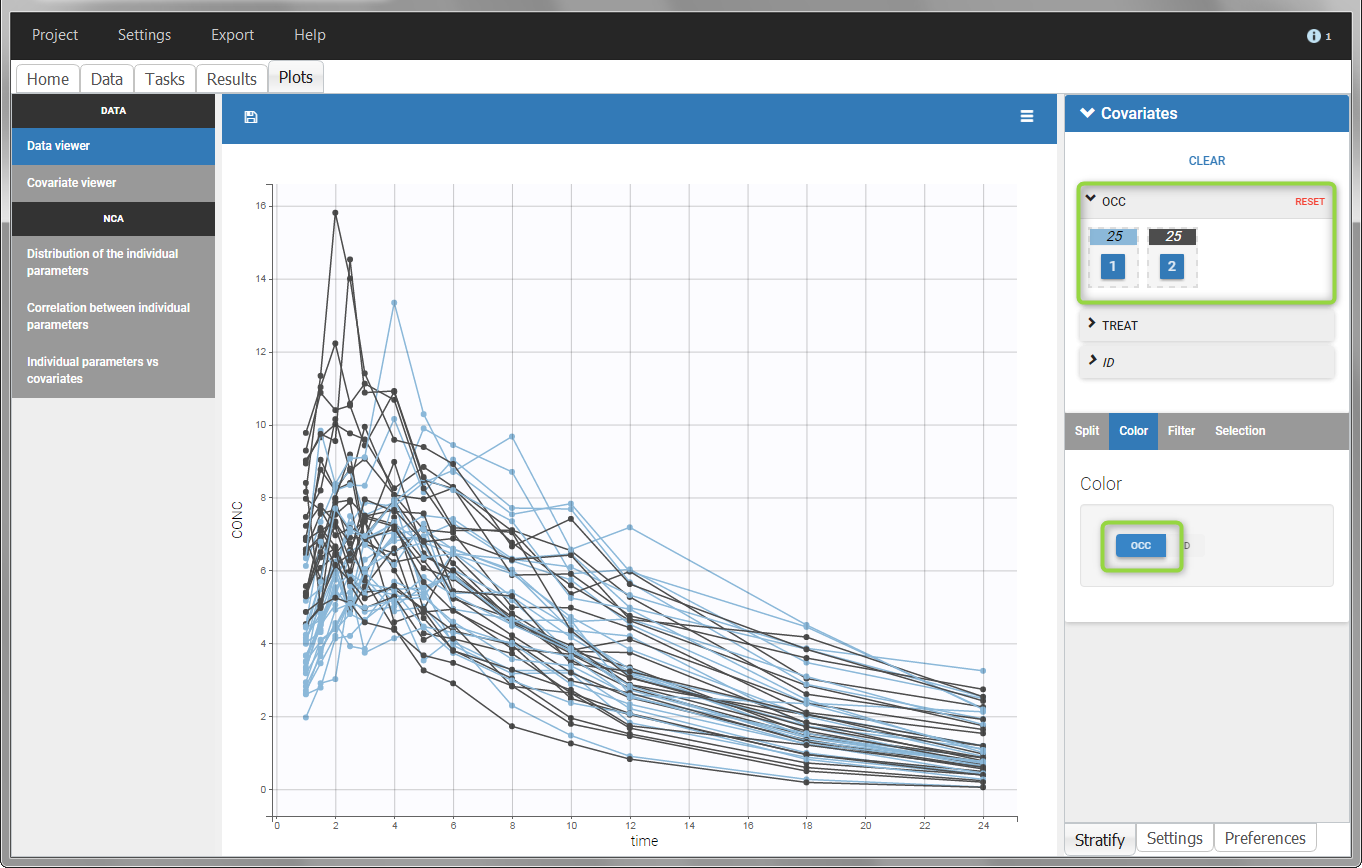

The OCCASION values are available in the plots for stratification, in addition to possible CATEGORICAL or CONTINUOUS COVARIATES (here “TREAT”).

- demo project_occasions2.pkx: study with two treatments and with/without food

In this example, we have three sorting variables: ID, TREAT and FOOD. The TREAT and FOOD columns are duplicated: once with strings to be used as CATEGORICAL COVARIATE (TREAT and FOOD) and once with integers to be used as OCCASION (occT and occF).

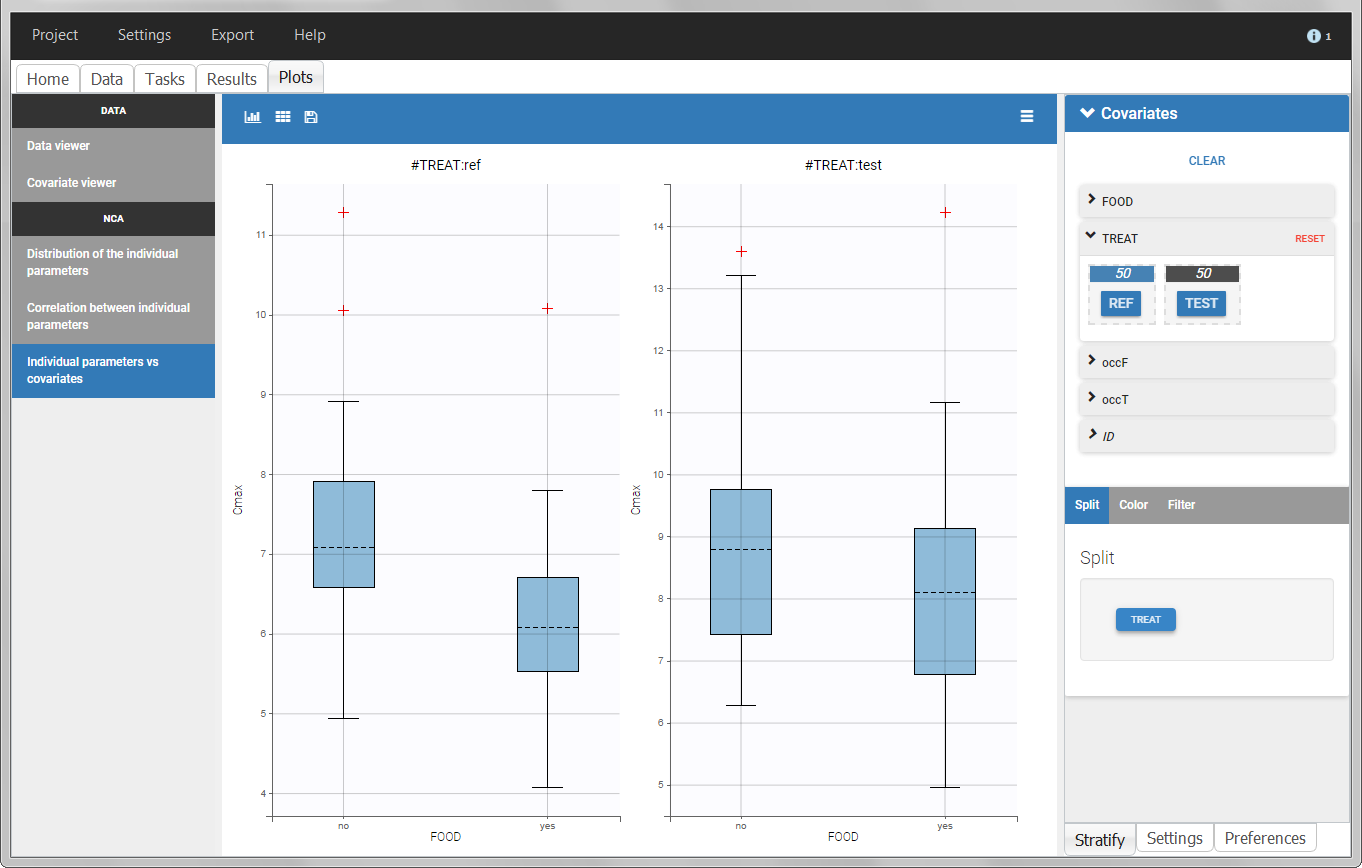

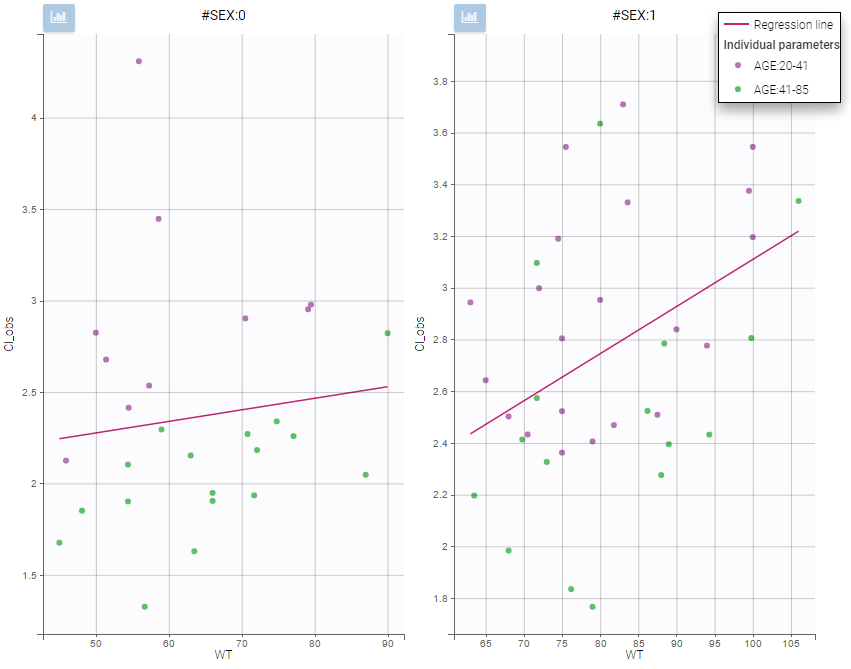

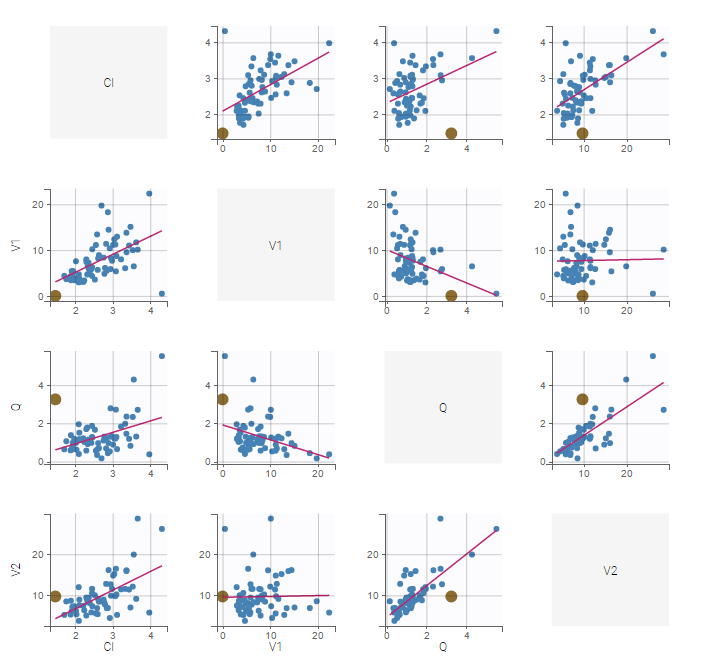

In the individual parameters tables and plots, three levels are visible. In the “Individual parameters vs covariates”, we can plot Cmax versus FOOD, split by TREAT for instance (Cmax versus TREAT split by FOOD is also possible).


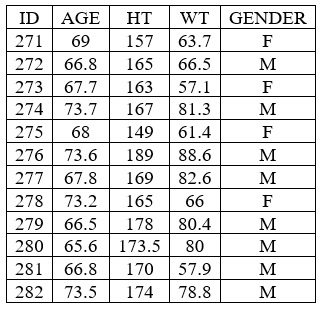

Covariates (“Carry” variables)

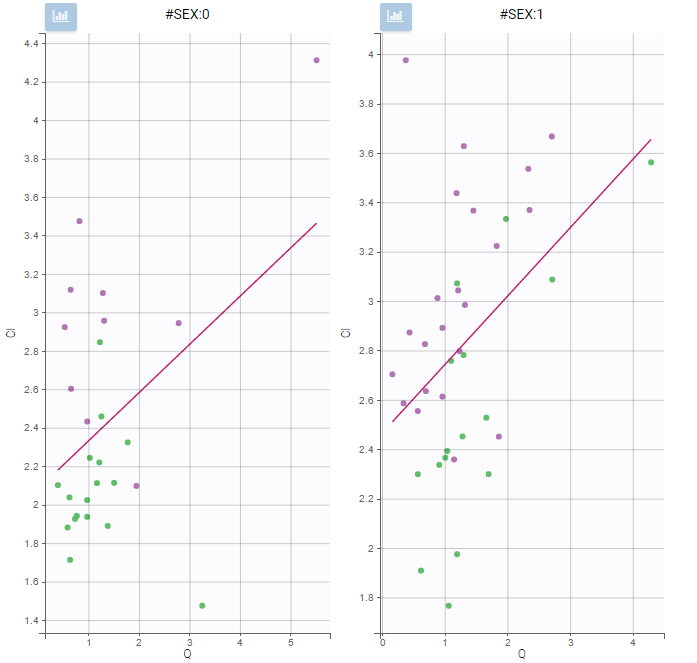

Individual information that needs to be carried over to output tables and plots must be tagged as CATEGORICAL or CONTINUOUS COVARIATES. Categorical covariates define variables with a few categories, such as treatment or sex, and are encoded as strings. Continuous covariates define variables on a continuous scale, such as weight or age, and are encoded as numbers. Covariates will not automatically be used as “Sort” variables. A dedicated OCCASION column is necessary (see above).

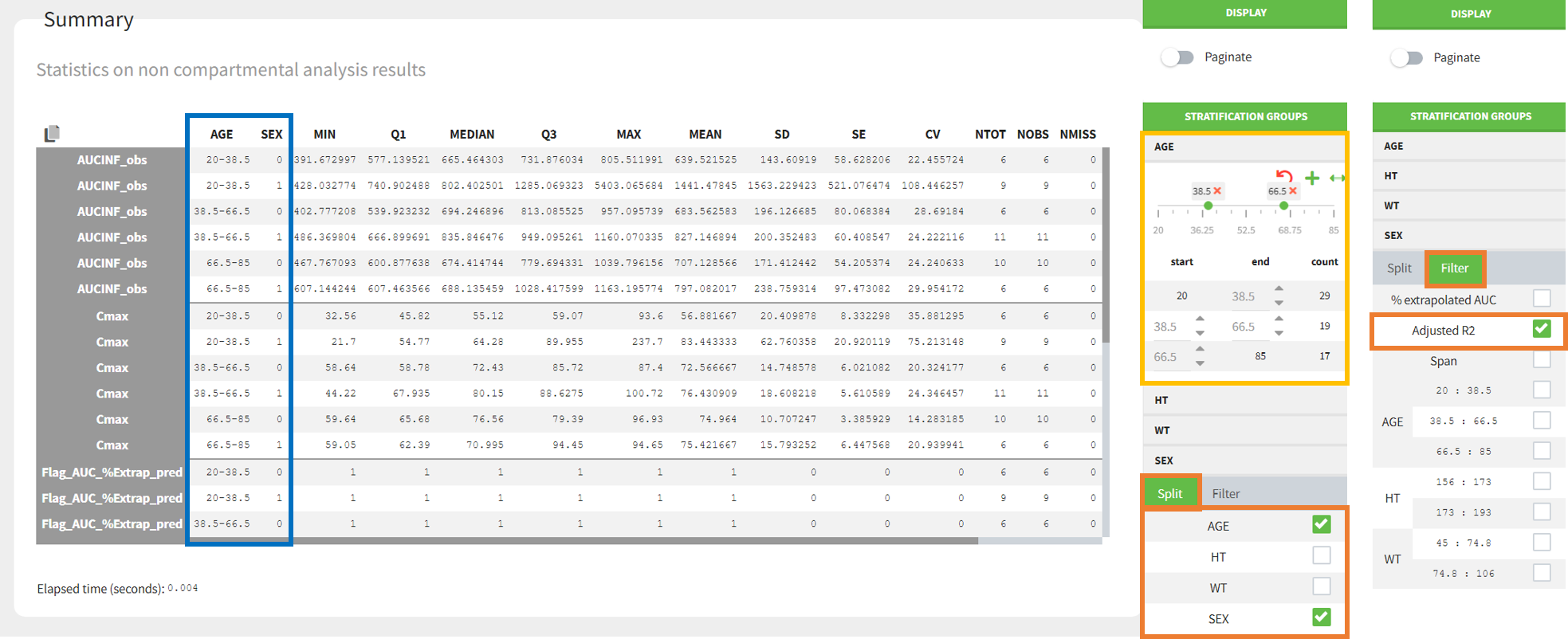

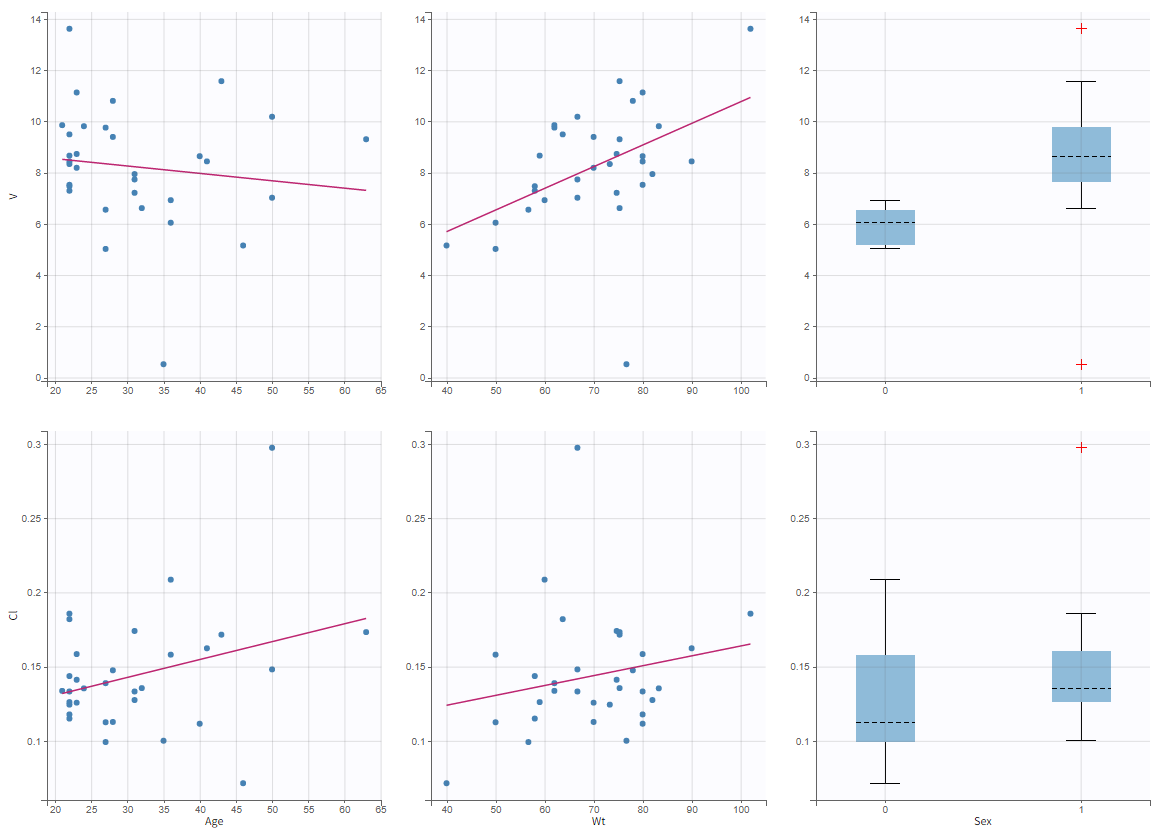

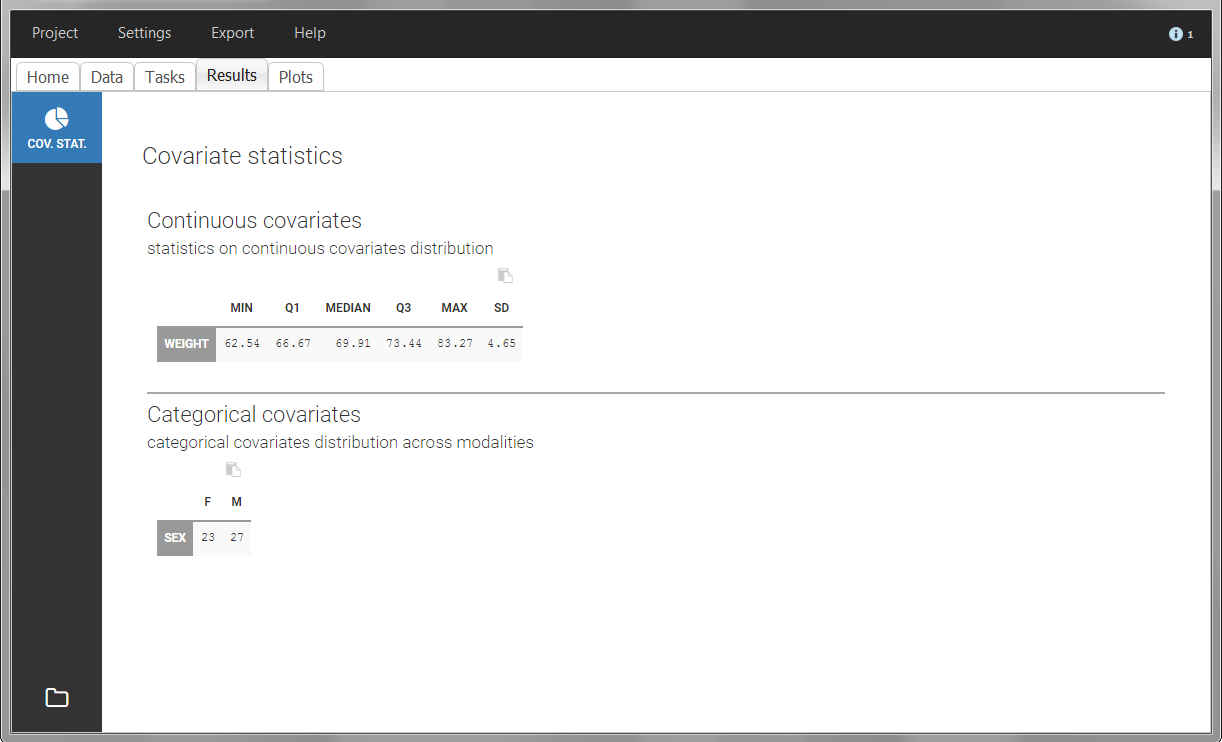

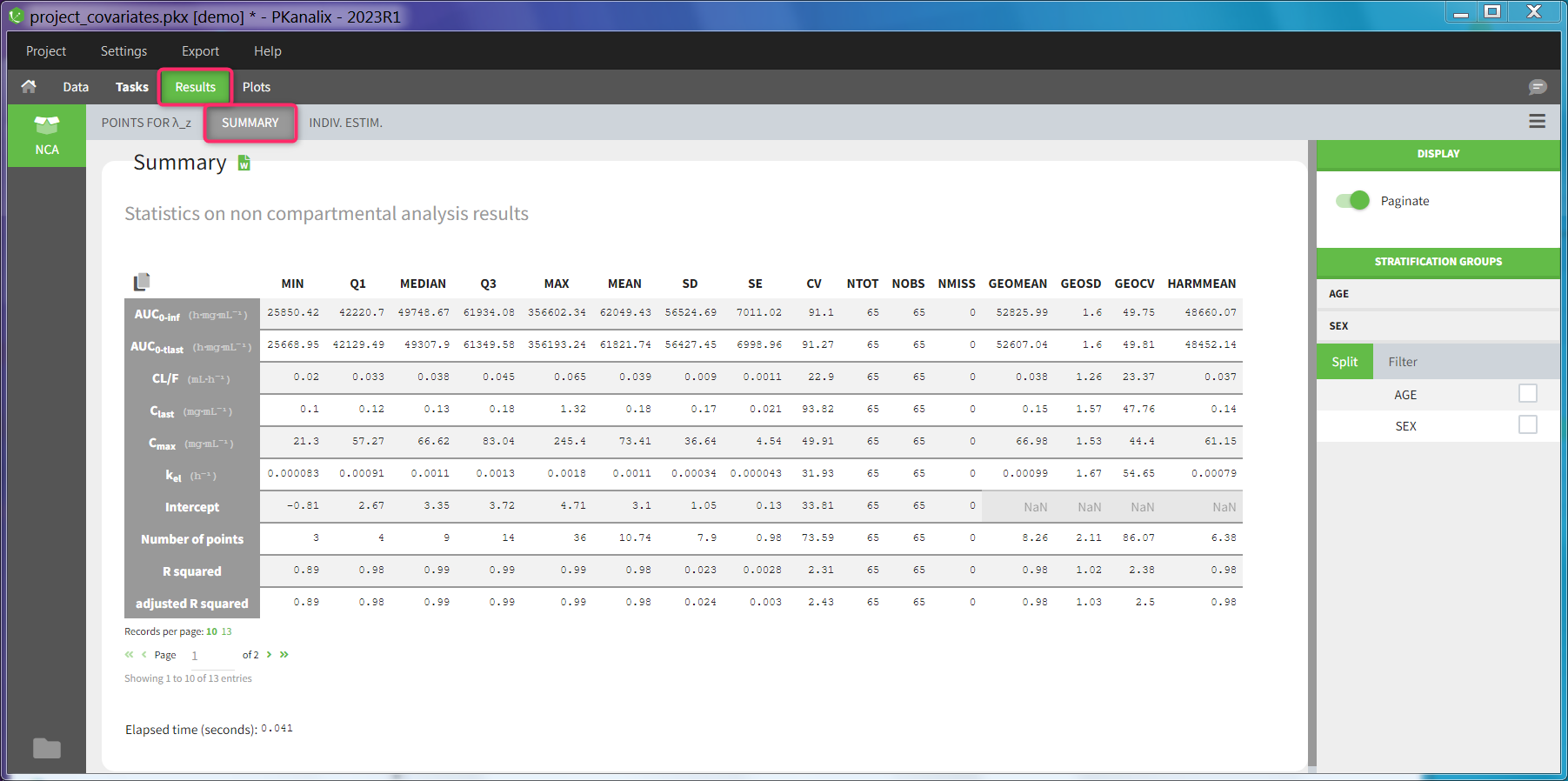

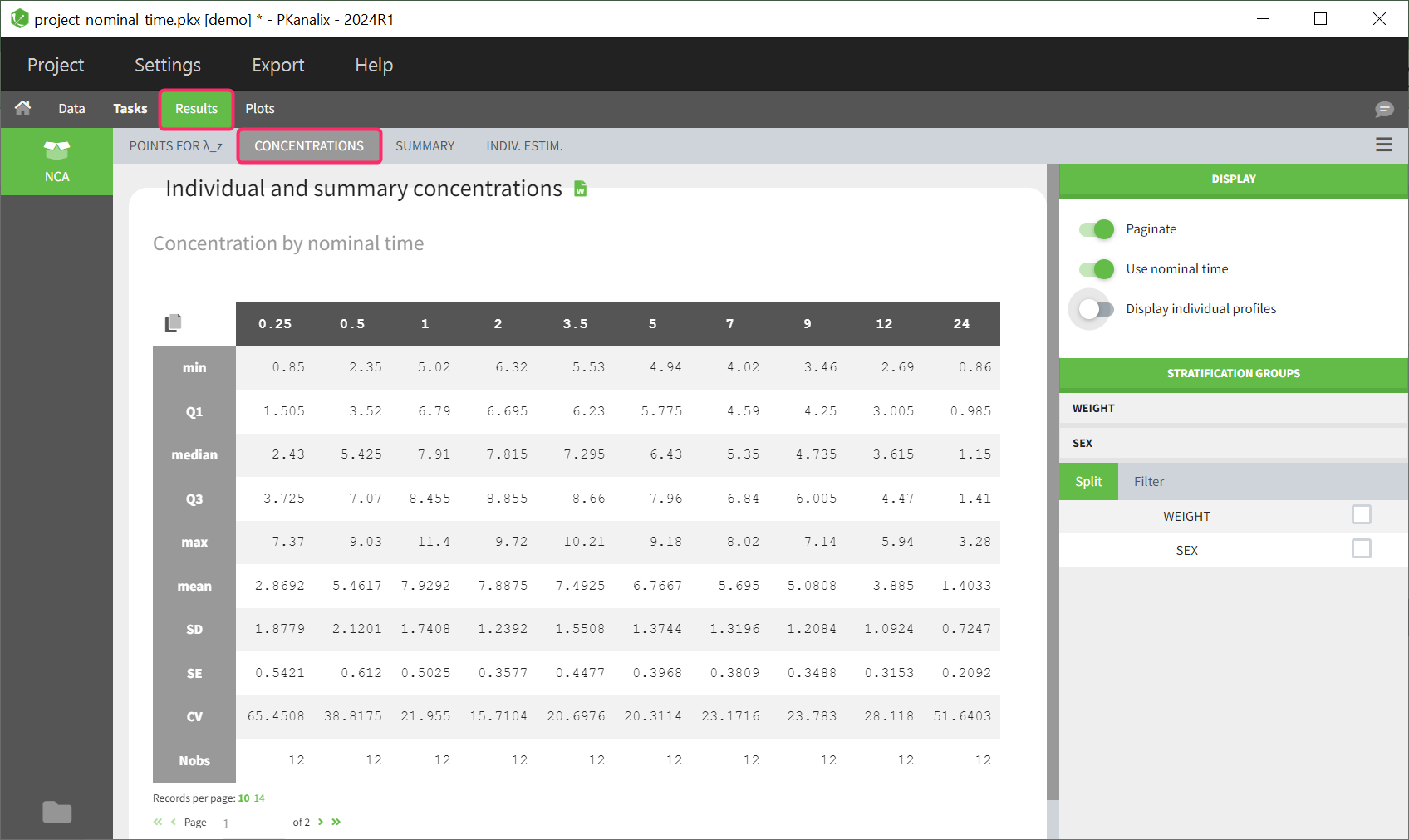

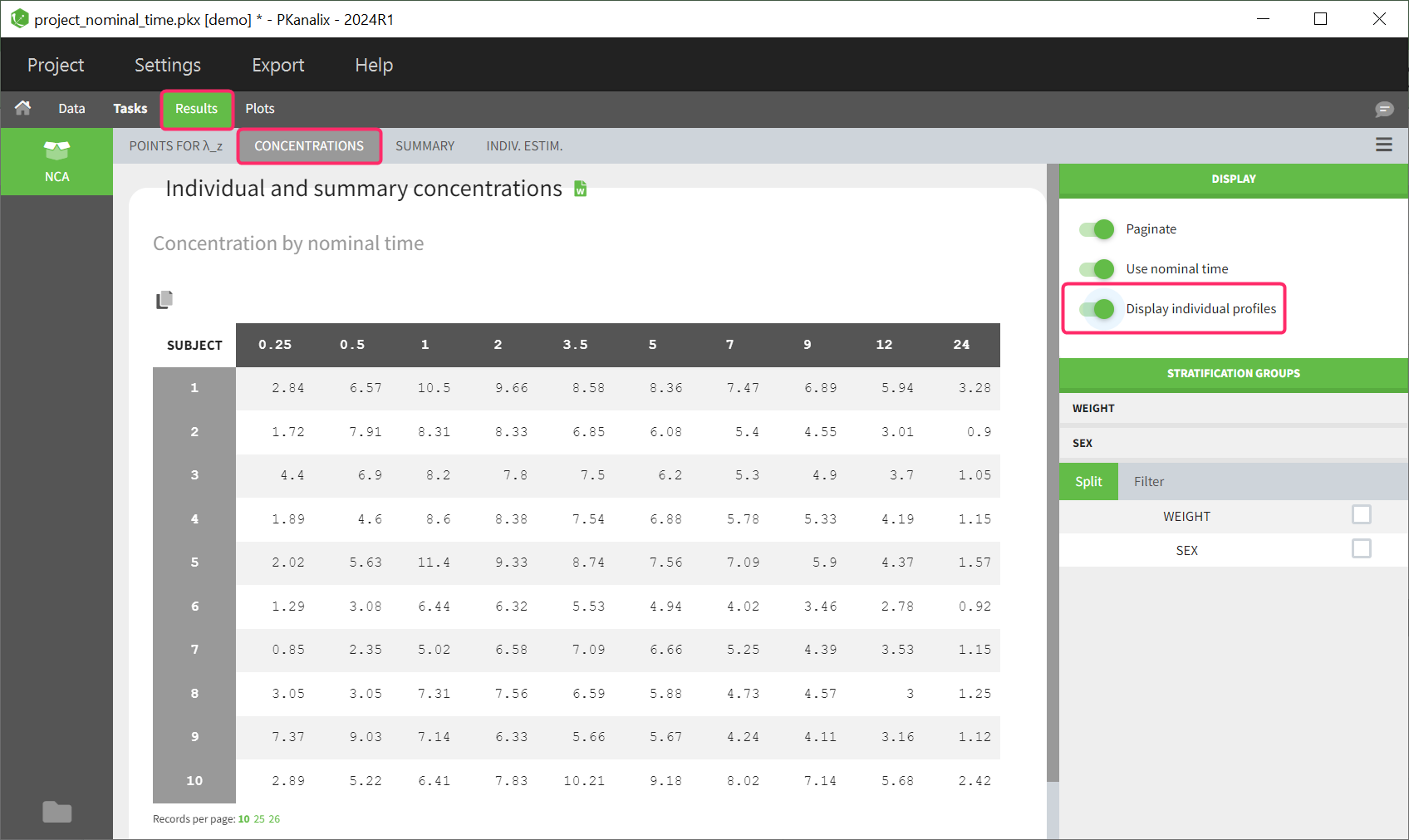

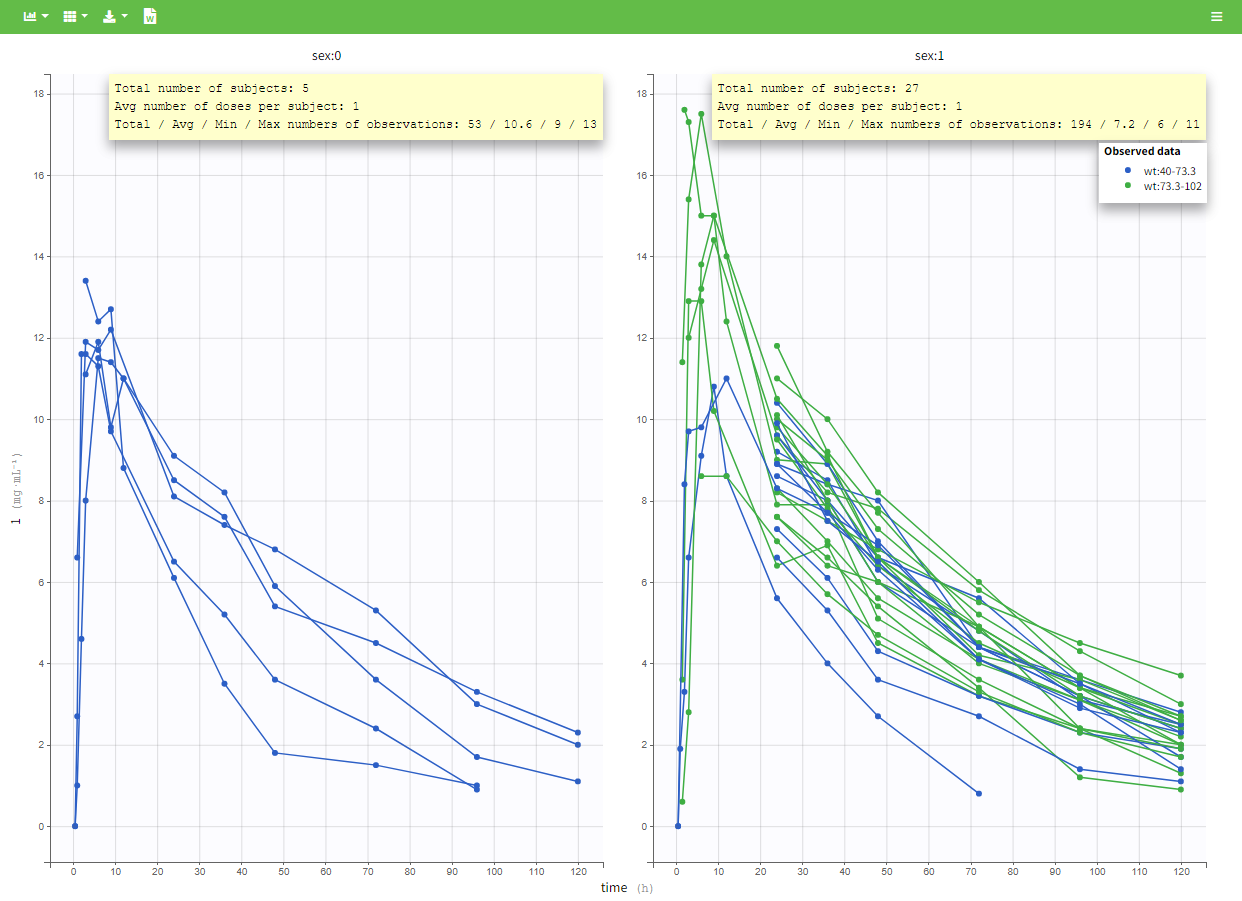

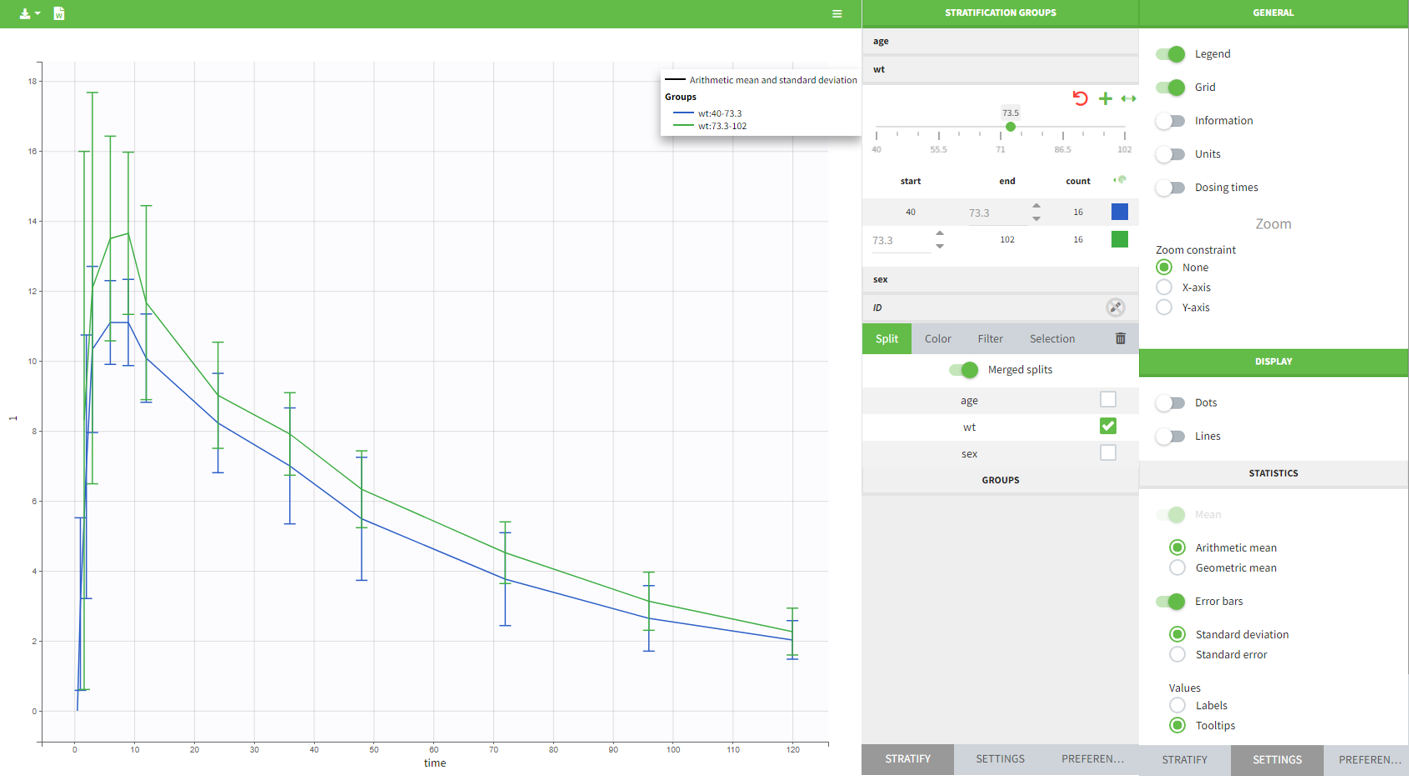

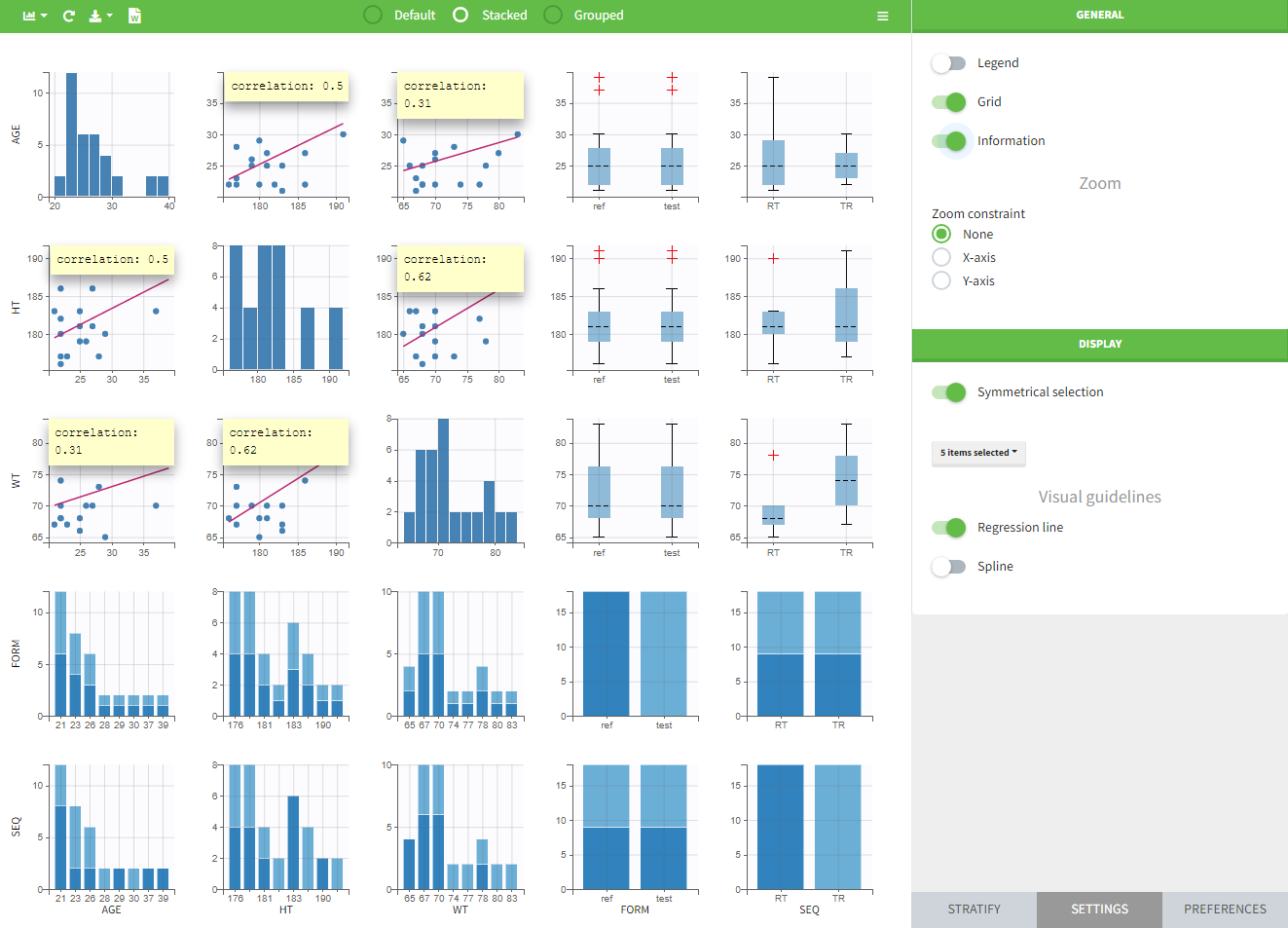

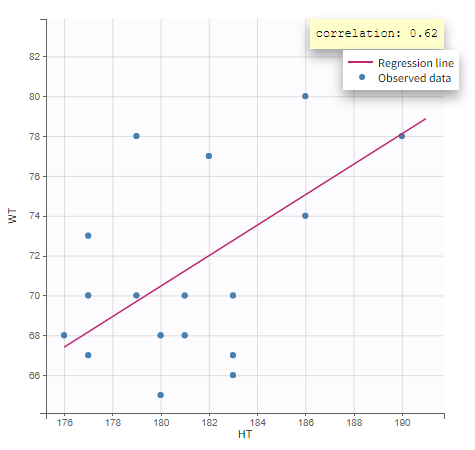

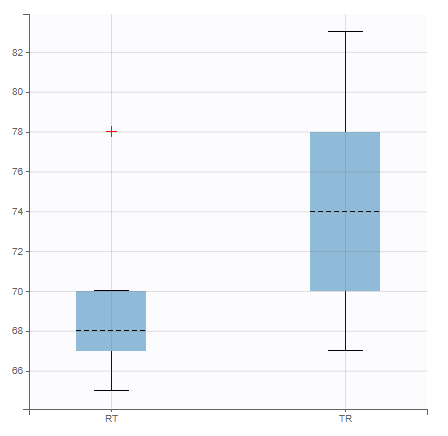

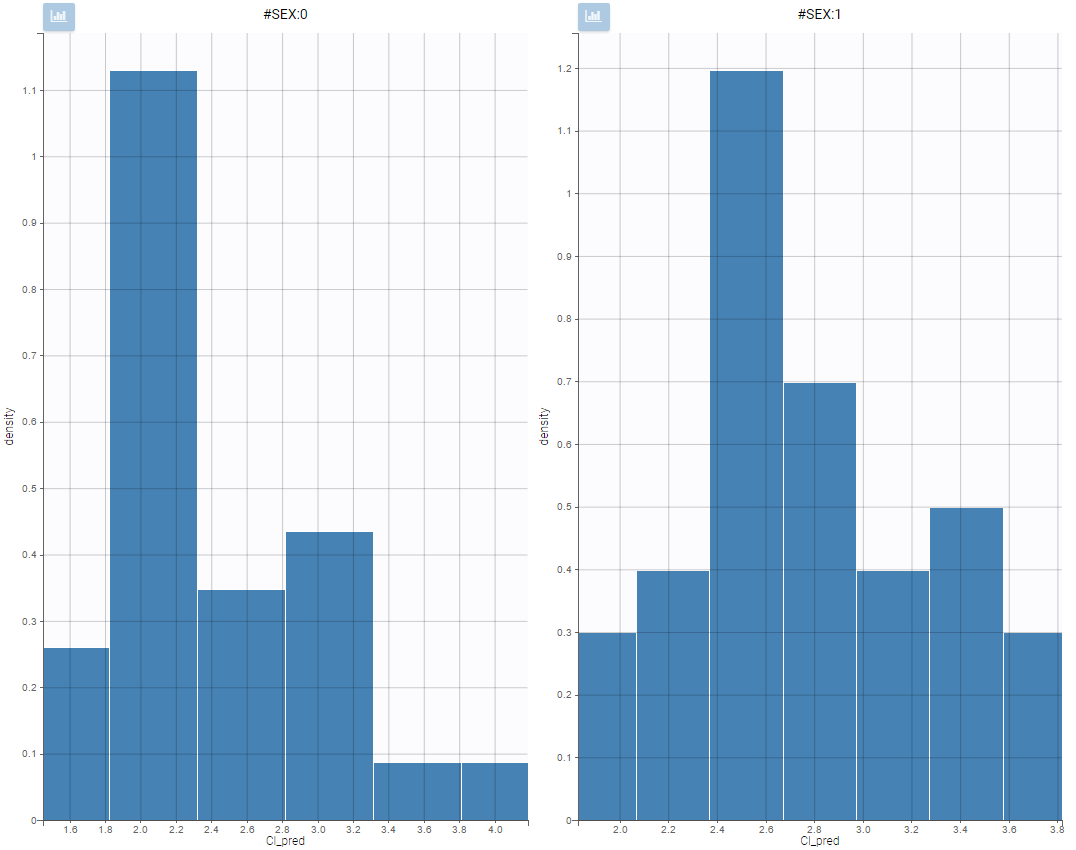

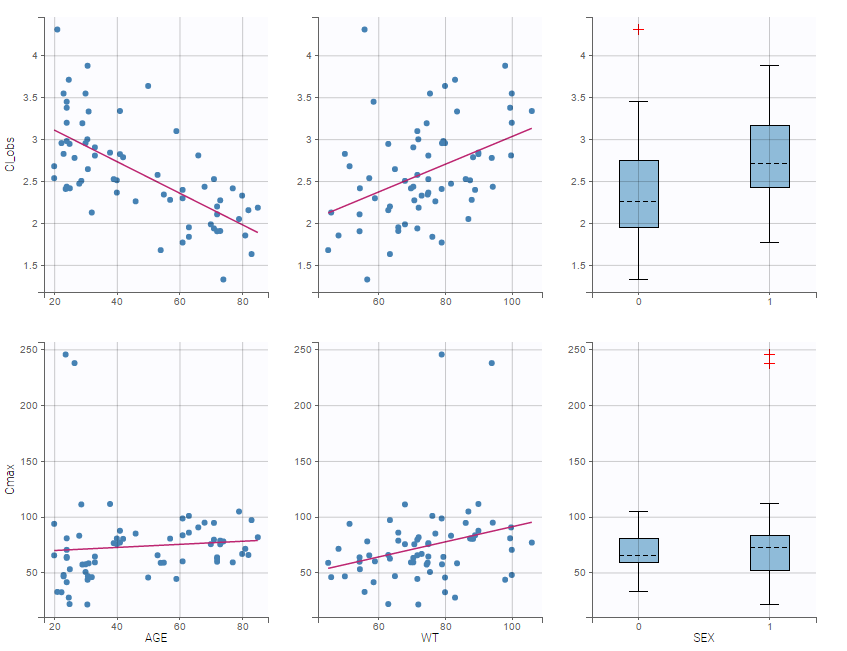

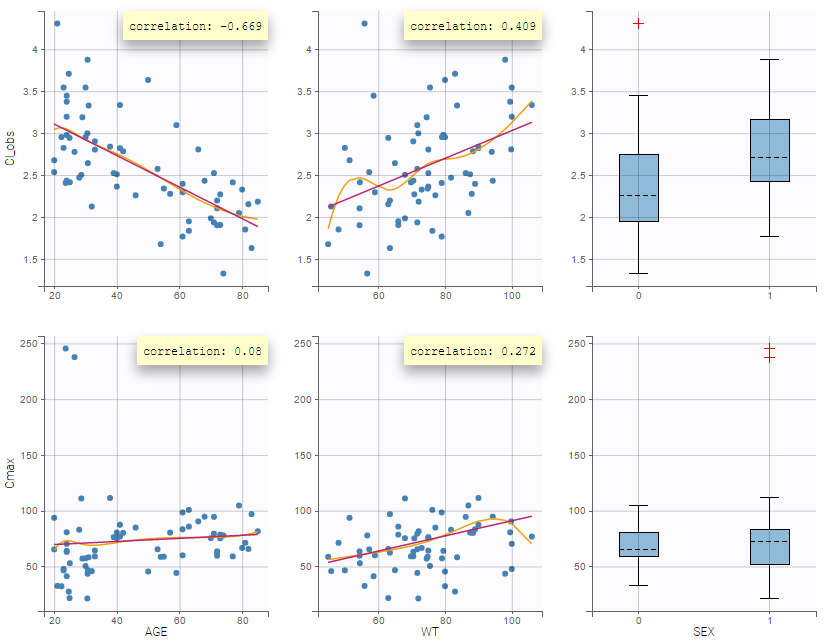

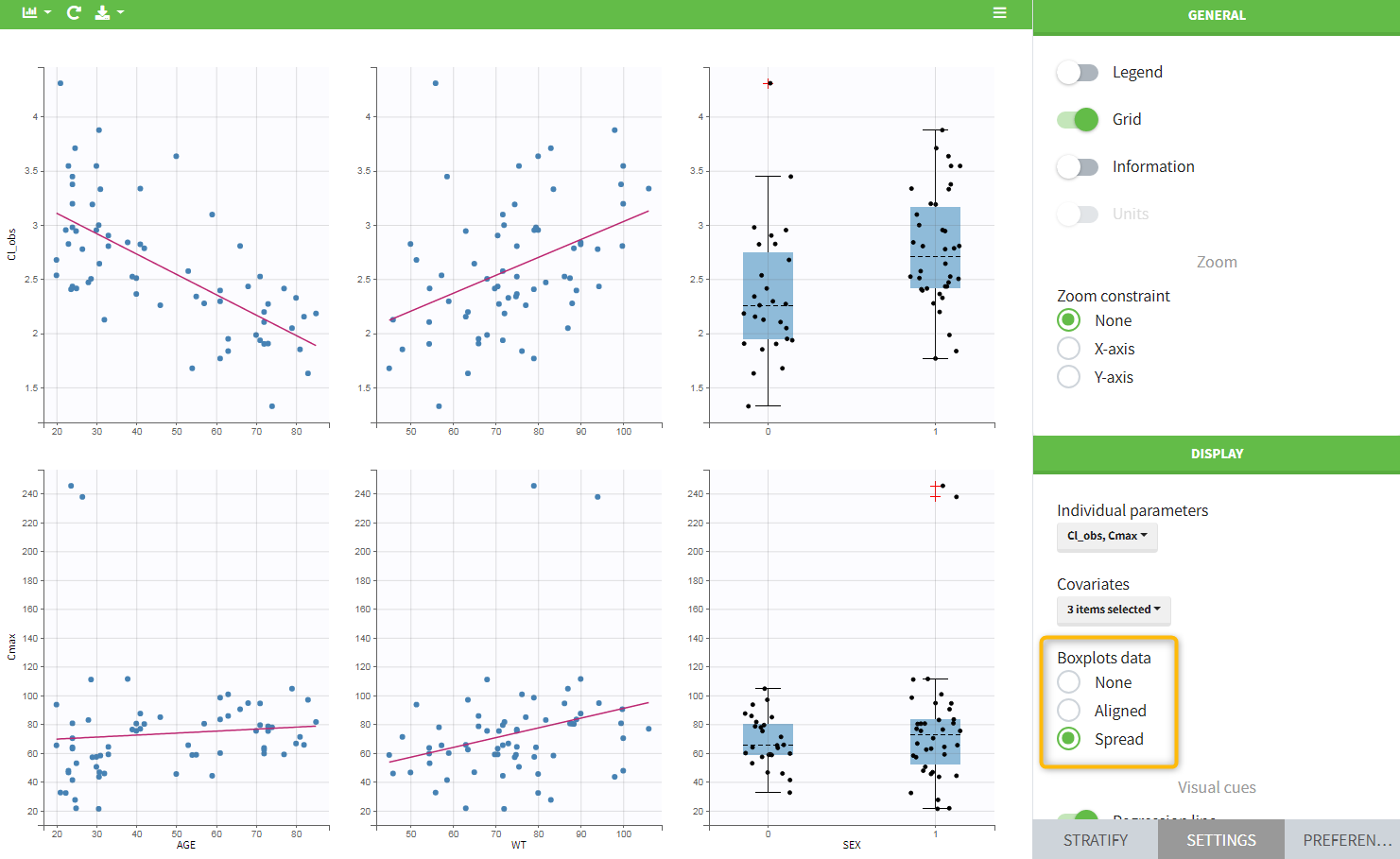

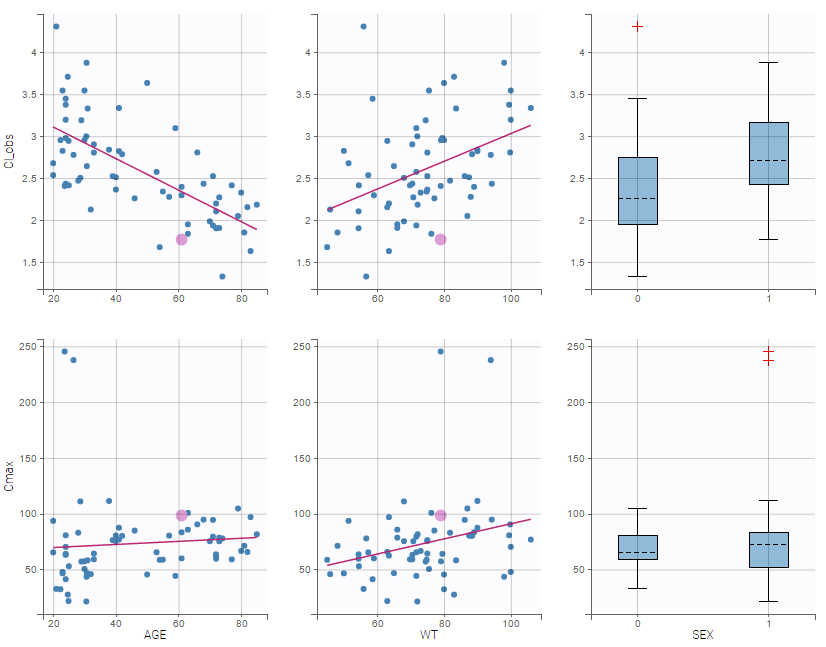

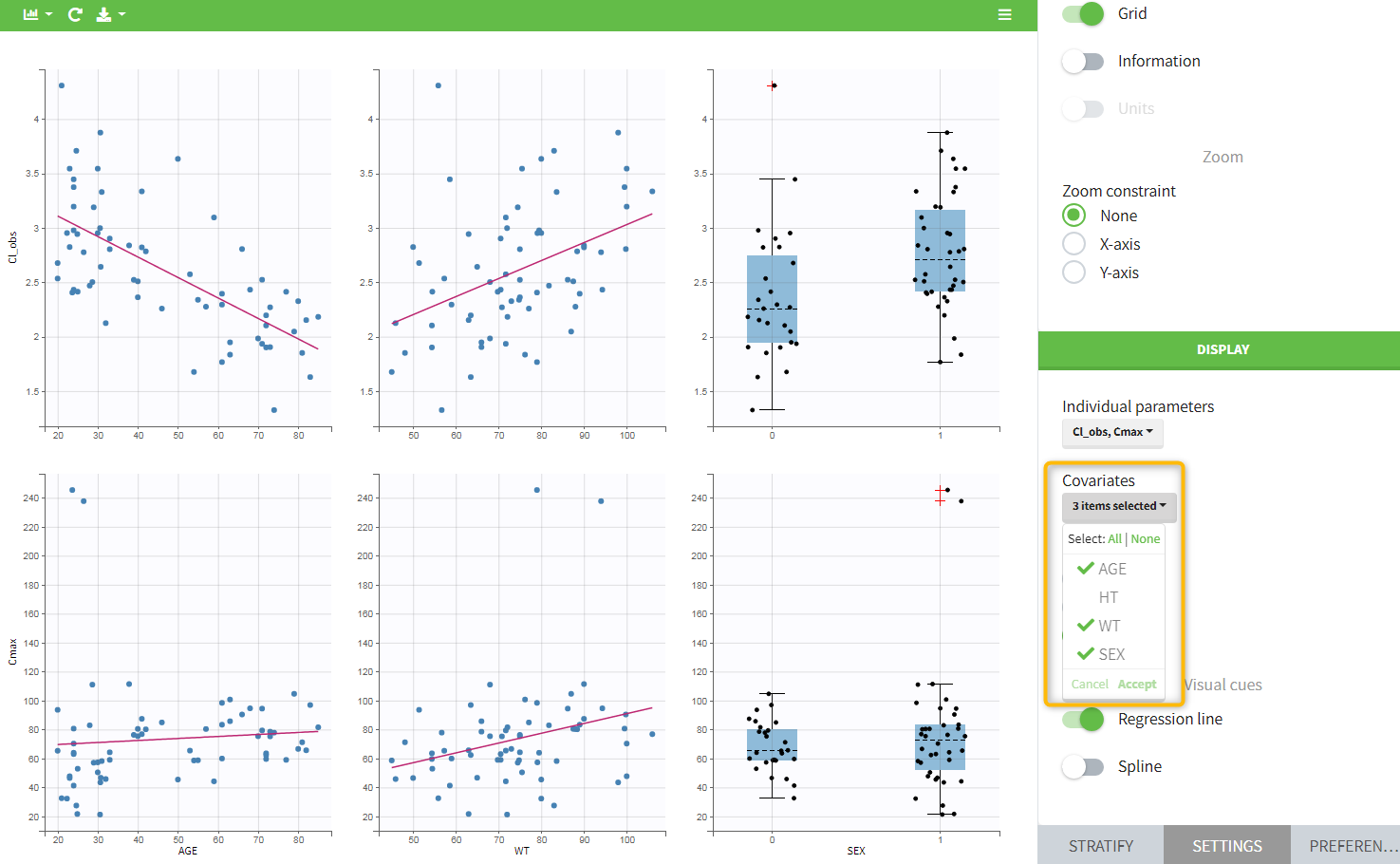

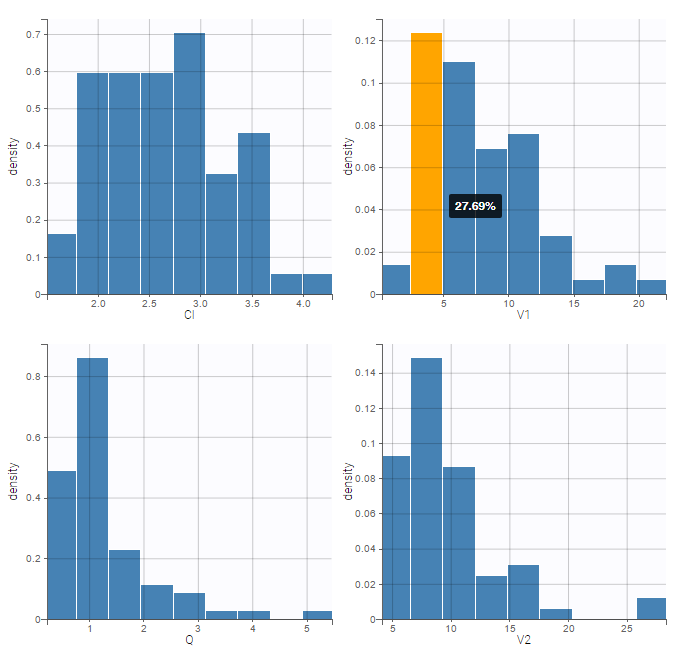

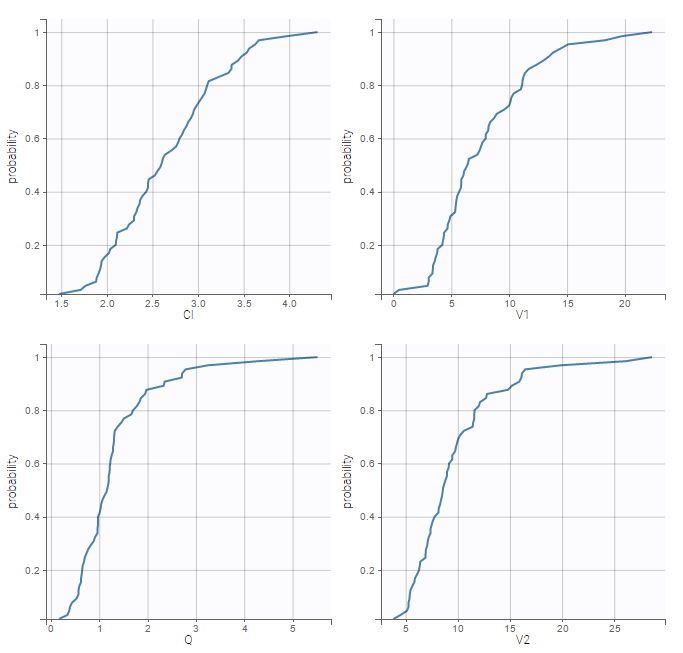

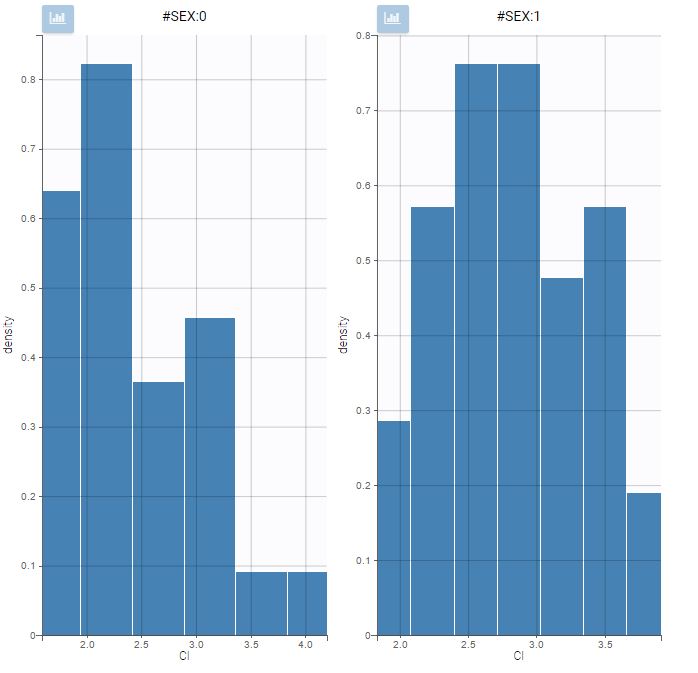

Covariates will automatically appear in the output tables. Plots of estimated NCA and/or CA parameters versus covariate values will also be generated. In addition, covariates can be used to stratify (split, color or filter) any plot. Statistics about the covariates distributions are available in table format in “Results > Cov. stat.” and in graphical format in “Plots > Covariate viewer”.

Note: It is preferable to avoid spaces and special characters (stars, etc) in the strings for the categories of the categorical covariates. Underscores are allowed.

Example:

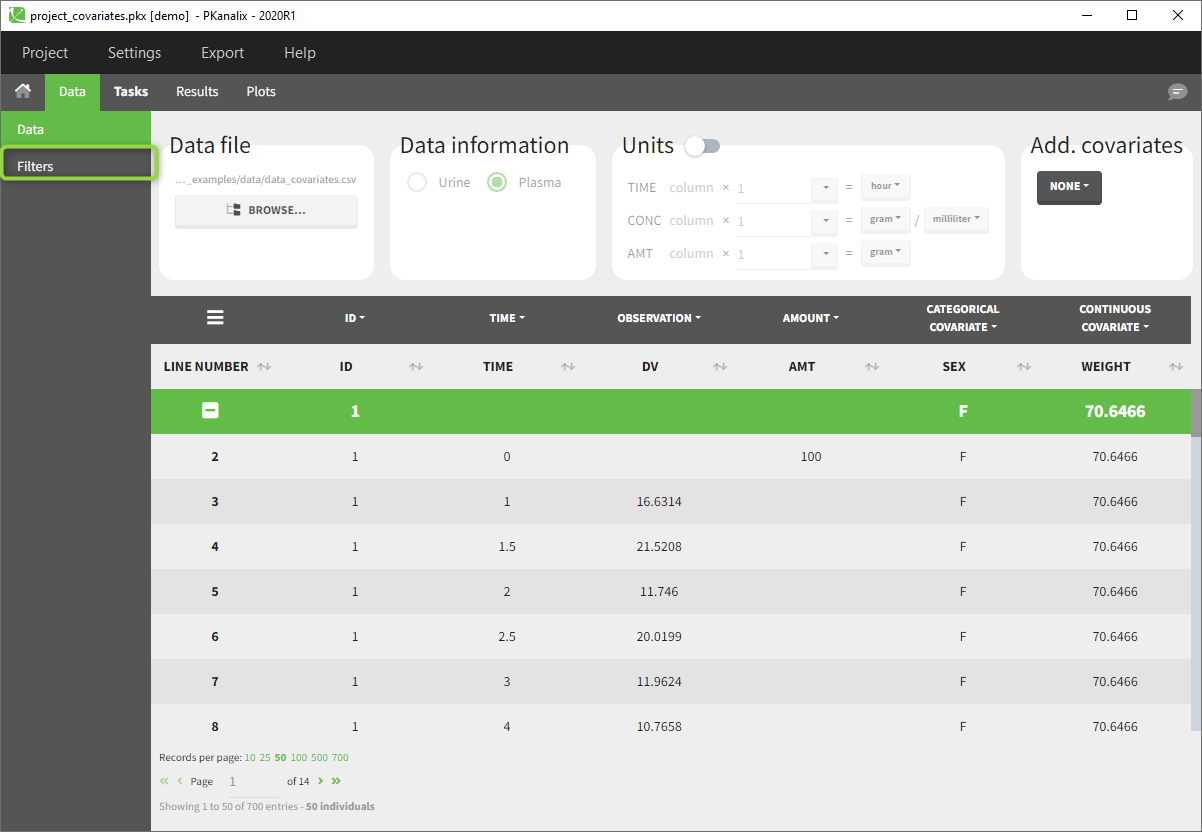

- demo project_covariates.pkx

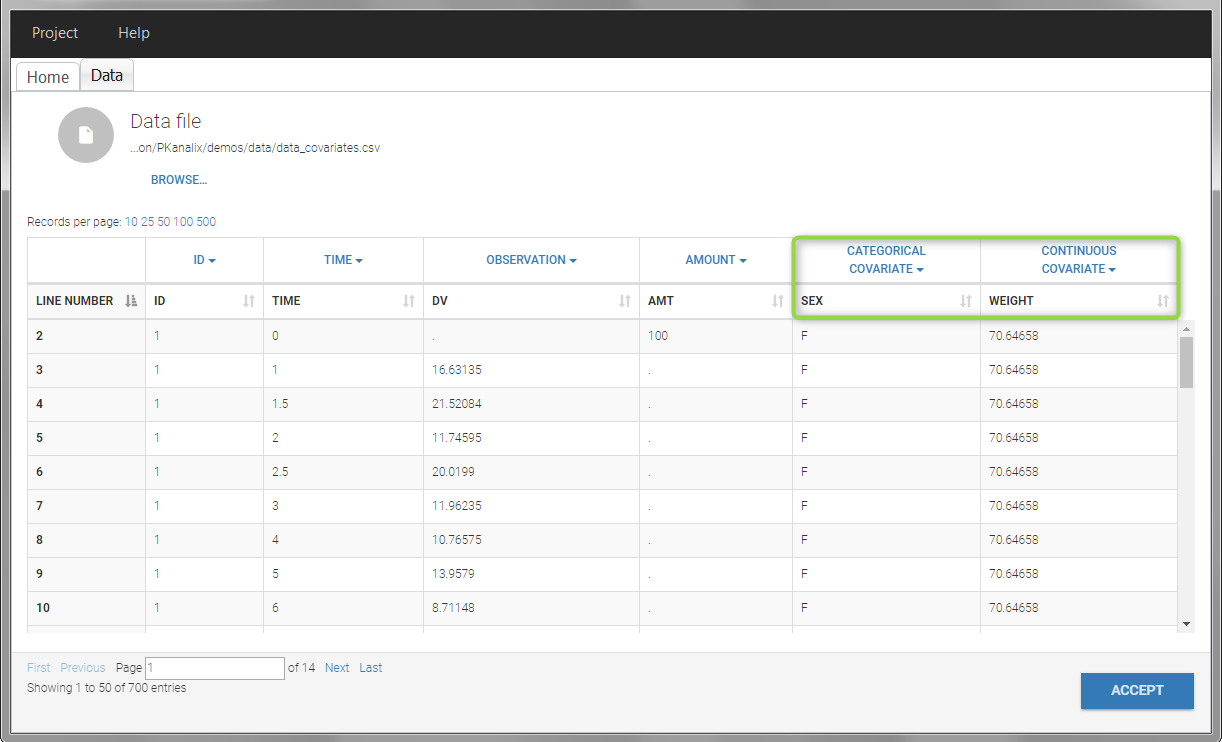

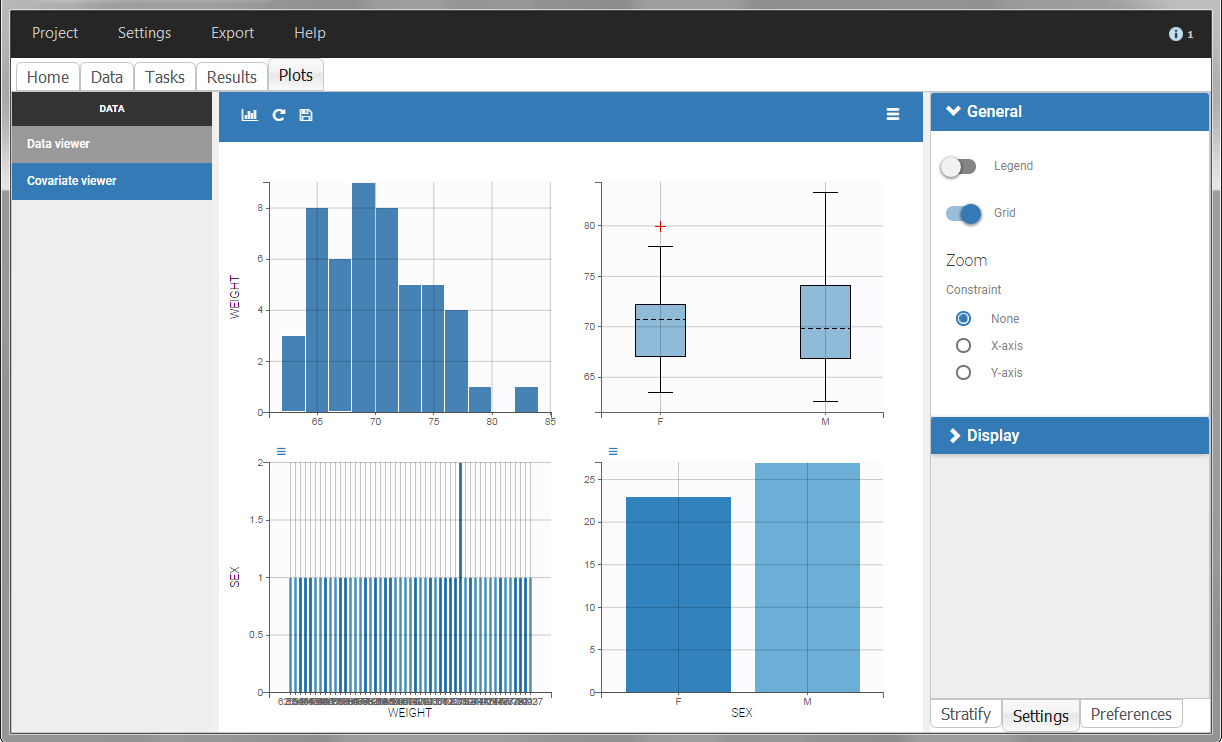

In this data set, “SEX” is tagged as CATEGORICAL COVARIATE and “WEIGHT” as CONTINUOUS COVARIATE.

The “Cov. stat.” table shows a few statistics of the covariate values in the data set. In the plot “Covariate viewer”, we see that the distribution of weight is similar for males and females.


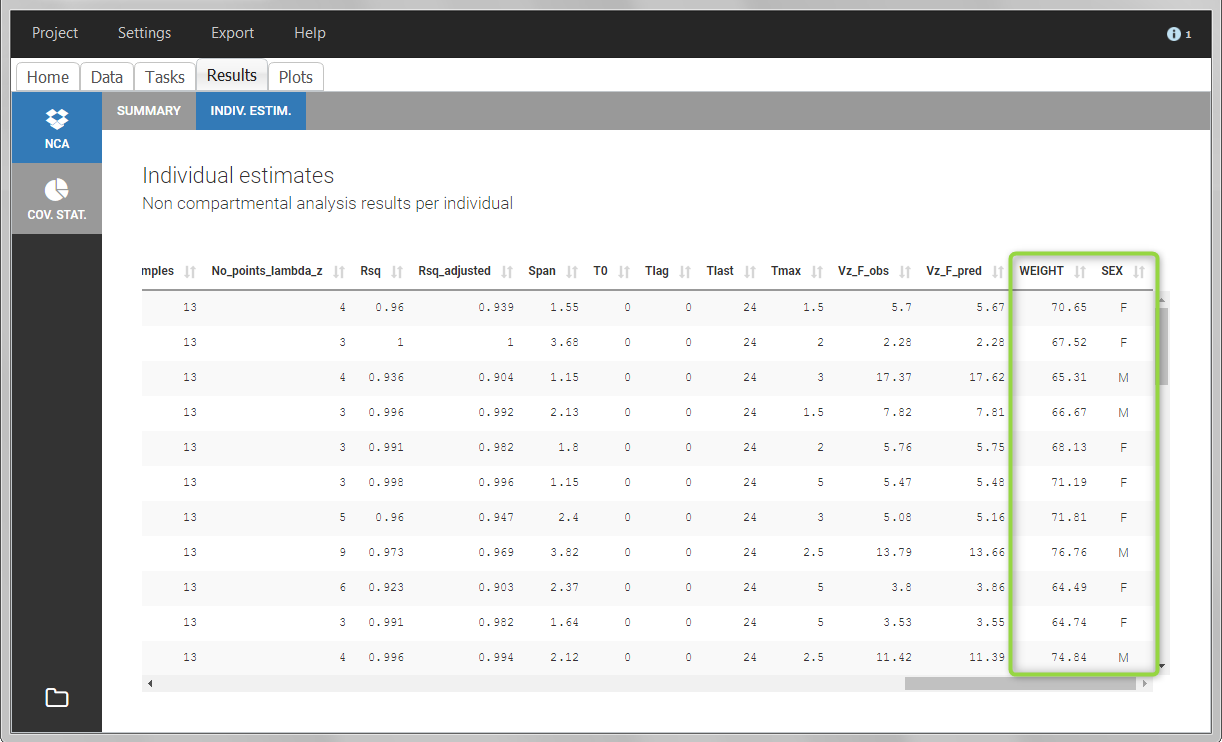

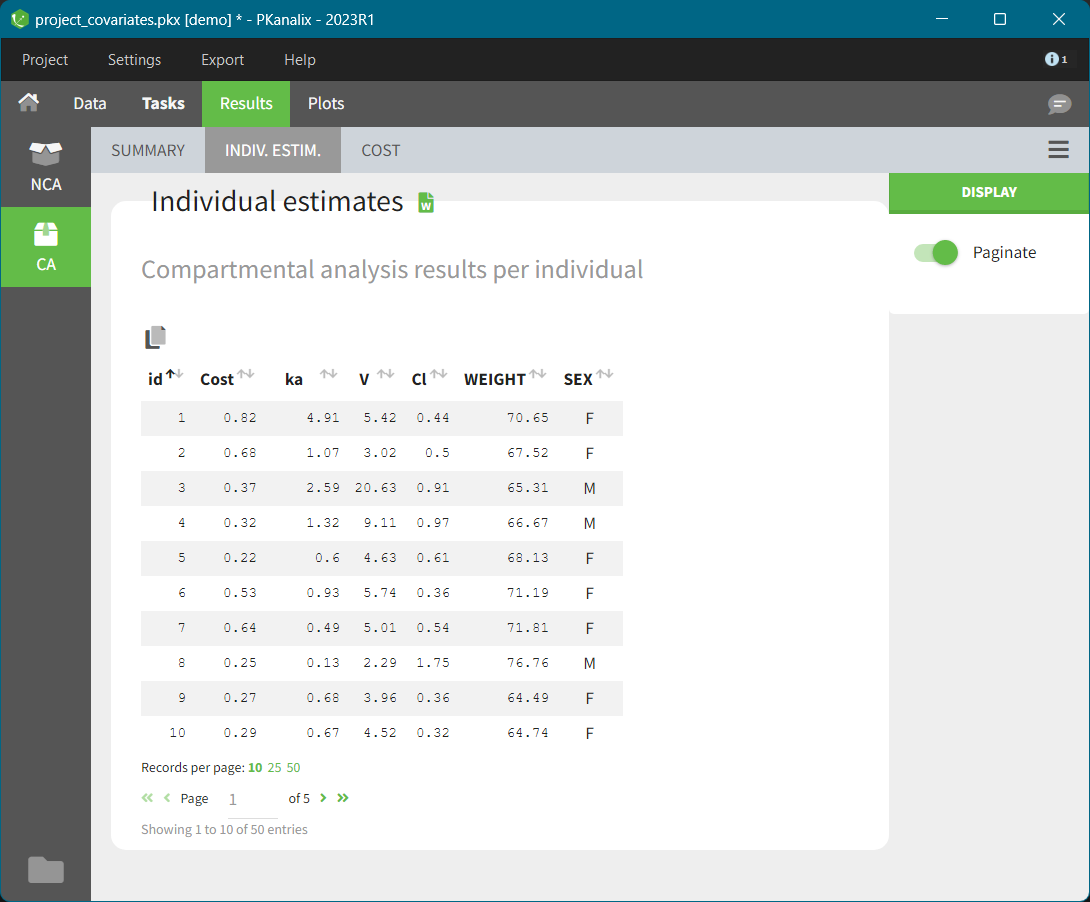

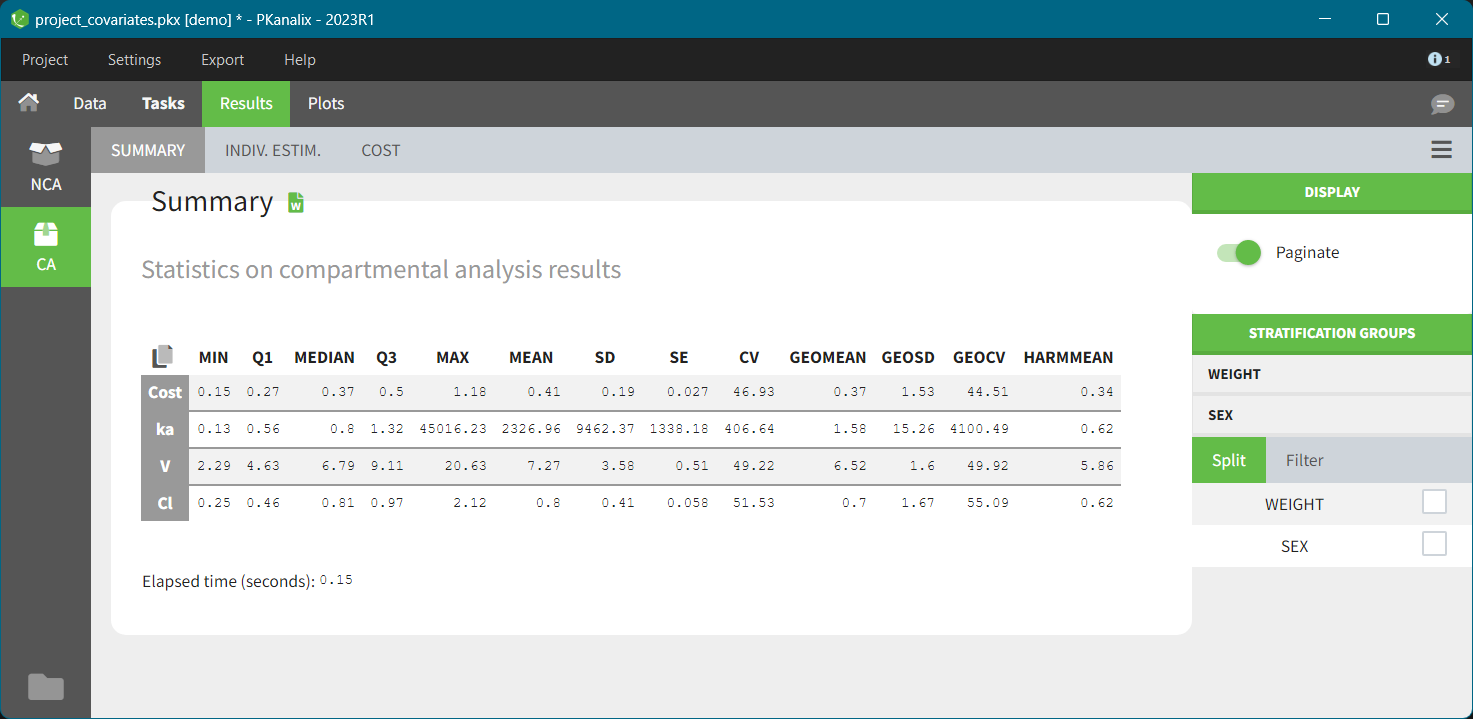

After running the NCA and CA tasks, both covariates appear in the table of estimated individual parameters.

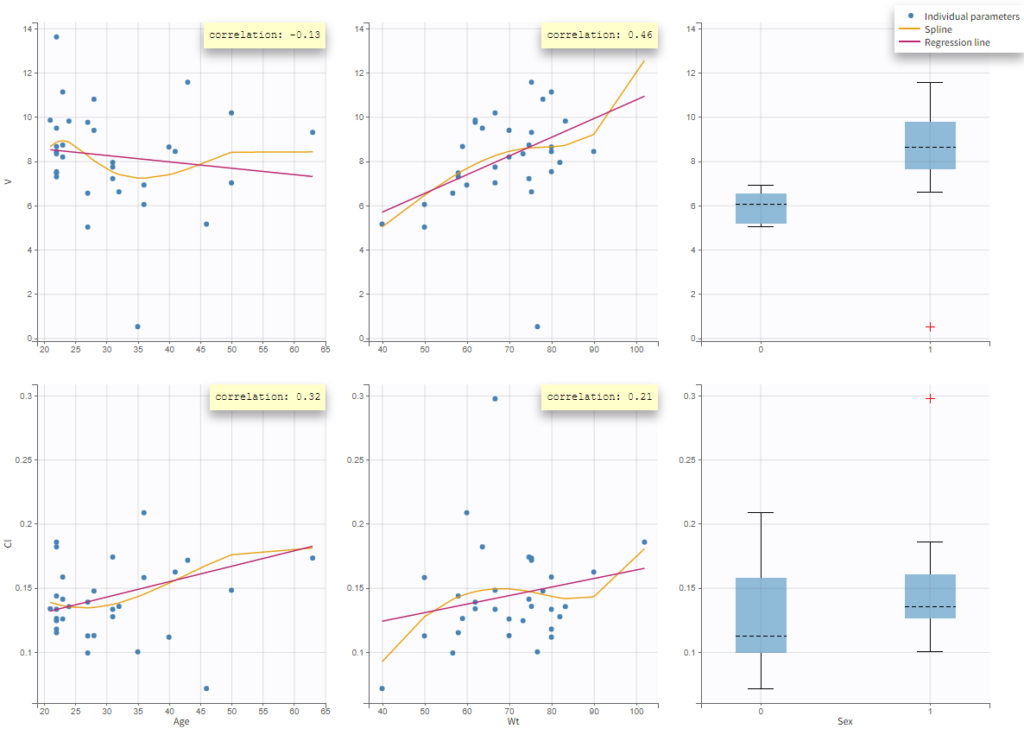

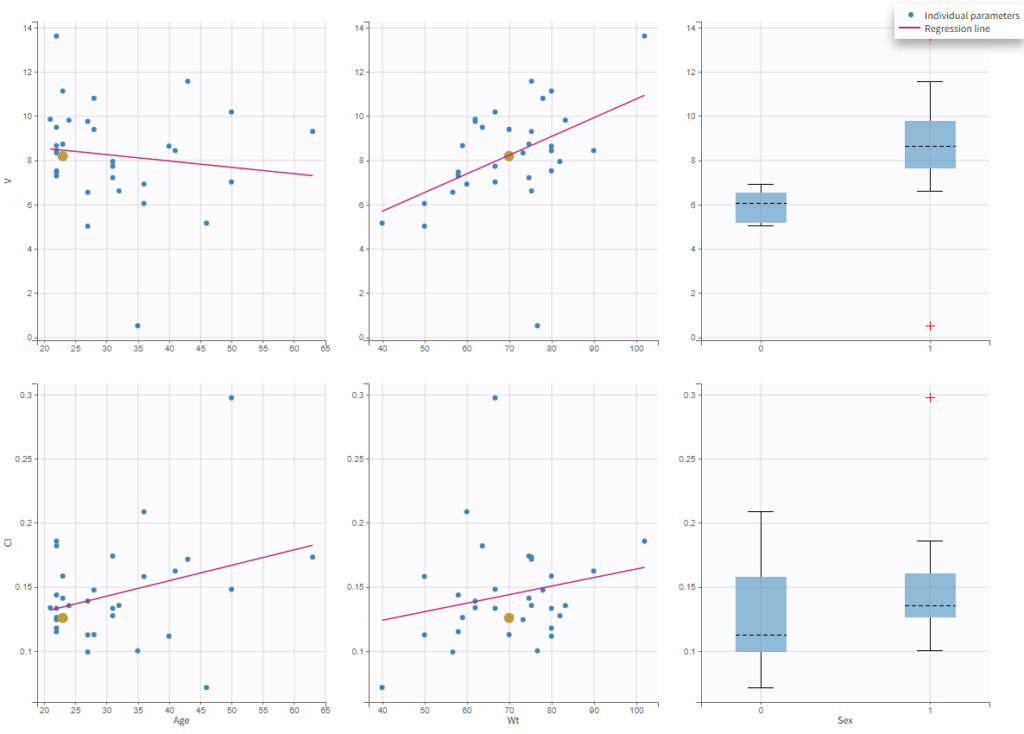

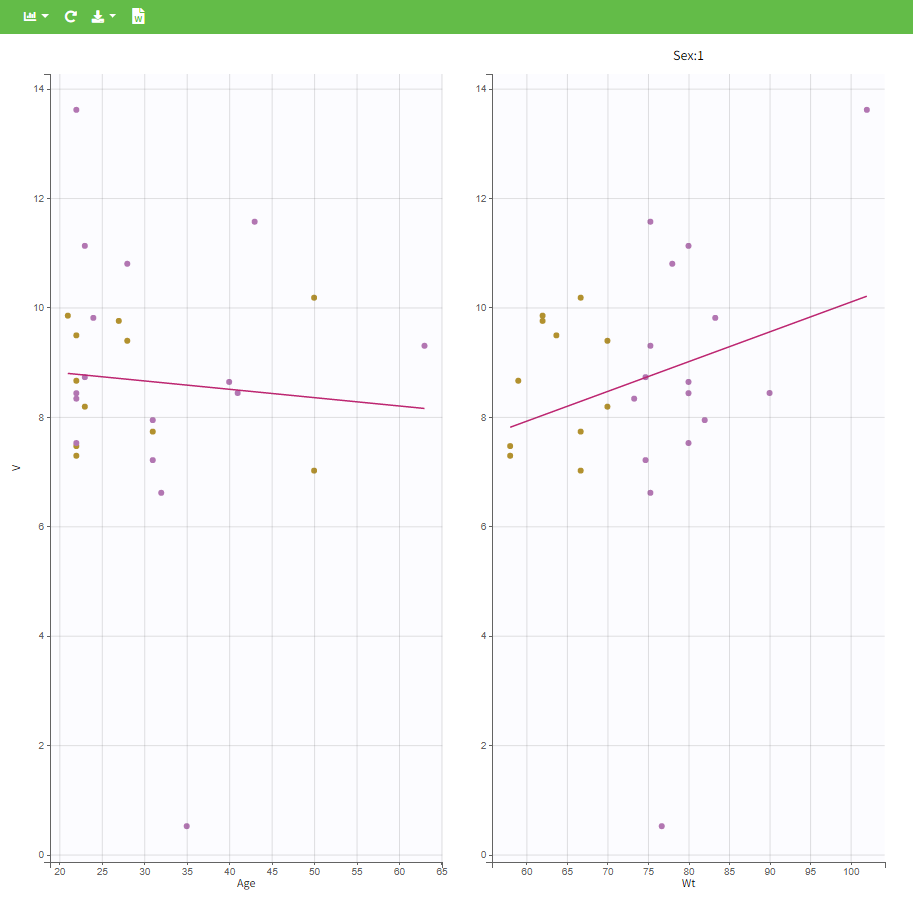

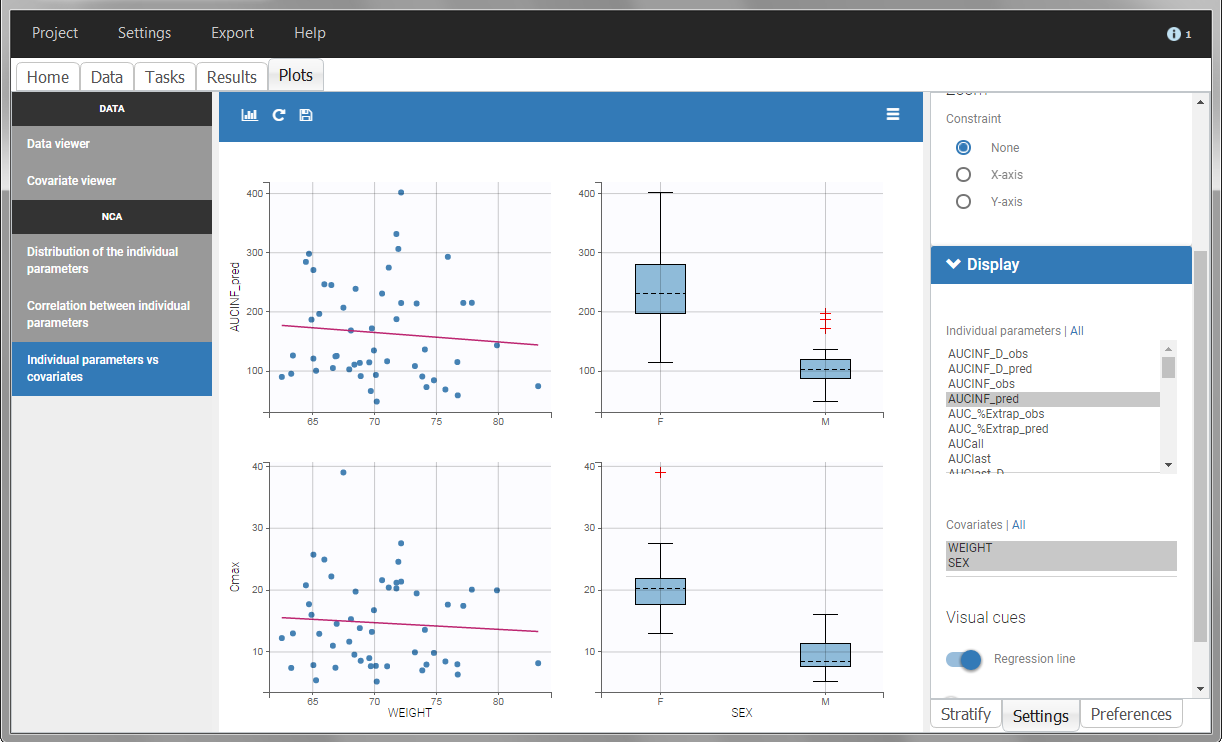

In the plot “parameters versus covariates”, the parameter values (here Cmax and AUCINF_pred) are shown on the y-axis, as scatter plots against the weight value on the x-axis and as boxplots for females and males.

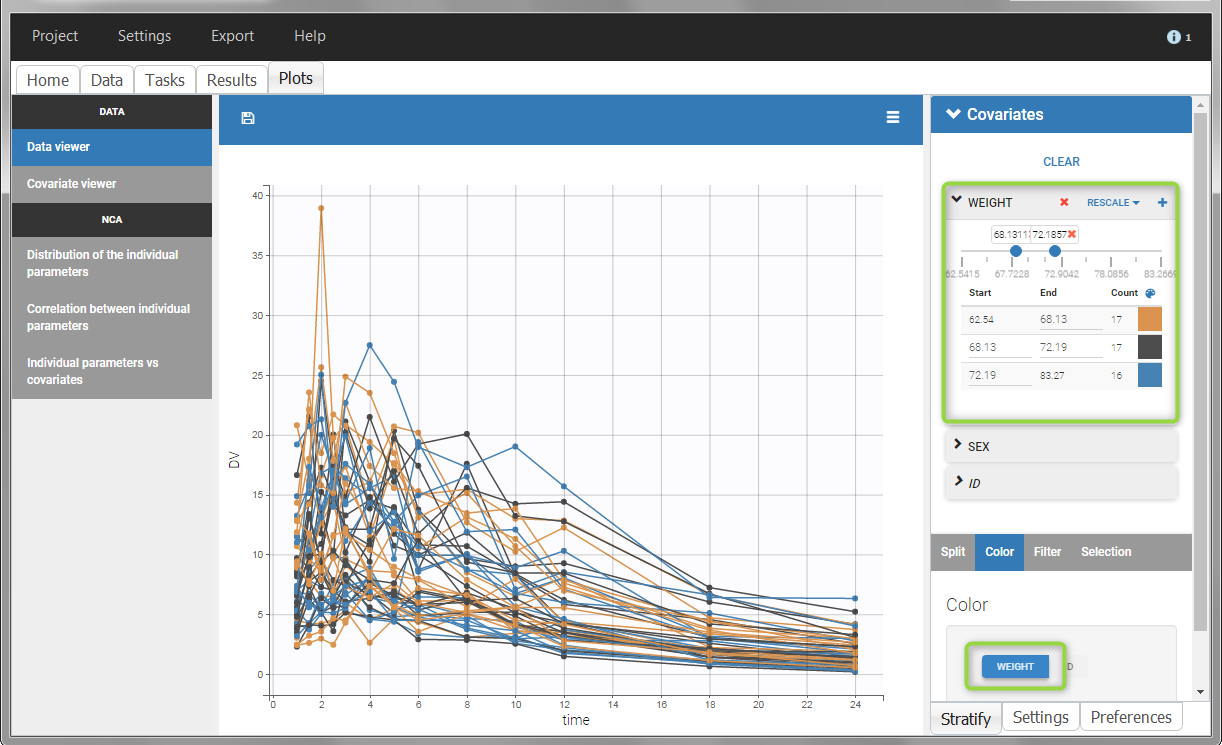

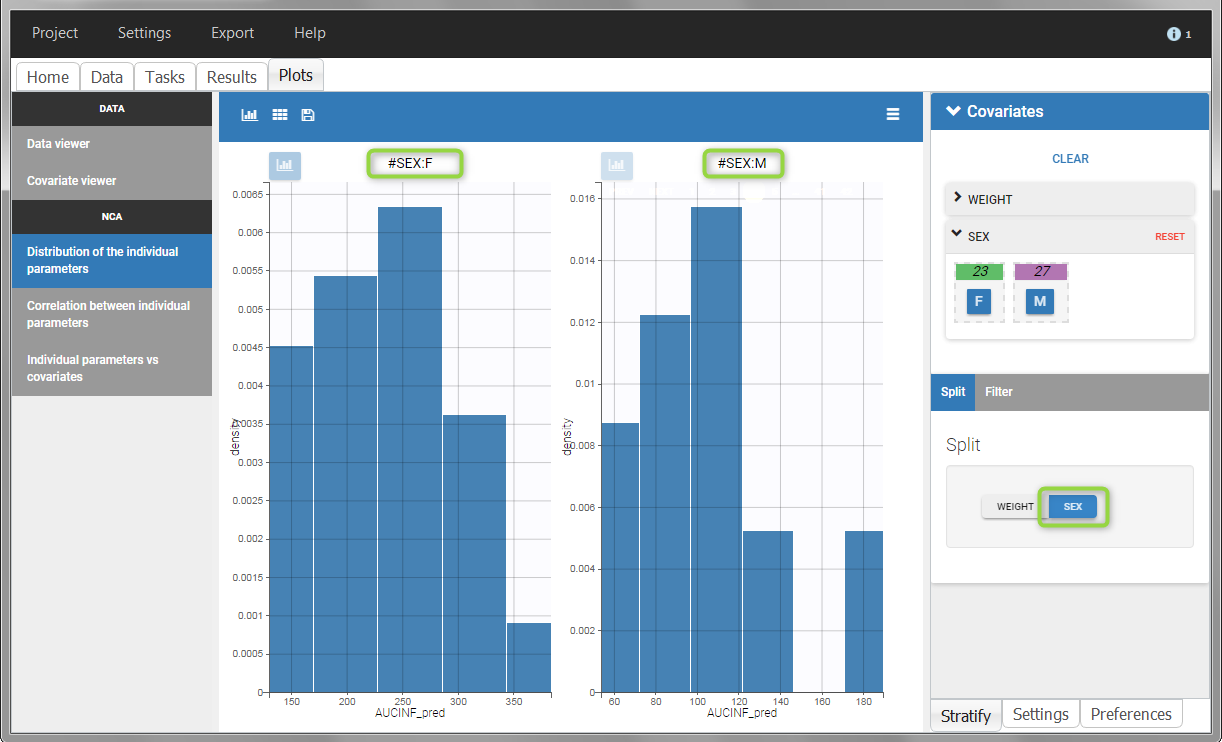

All plots can be stratified using the covariates. For instance, the “Data viewer” can be colored by weight after having created 3 weight groups. Below we also show the plot “Distribution of the parameters” split by sex with selection of the AUCINF_pred parameter.


Description of all possible column types

Column-types used for all types of lines:

- ID (mandatory): identifier of the individual

- OCCASION (formerly OCC): identifier (index) of the occasion

- TIME (mandatory): time of the dose or observation record

- NOMINAL TIME: time at which doses and observations were expected to occur

- DATE/DAT1/DAT2/DAT3: date of the dose or observation record, to be used in combination with the TIME column

- EVENT ID (formerly EVID): identifier to indicate if the line is a dose-line or a response-line

- IGNORED OBSERVATION (formerly MDV): identifier to ignore the OBSERVATION information of that line

- IGNORED LINE (from 2019 version): identifier to ignore all the information of that line

- CONTINUOUS COVARIATE (formerly COV): continuous covariates (which can take values on a continuous scale)

- CATEGORICAL COVARIATE (formerly CAT): categorical covariate (which can only take a finite number of values)

- REGRESSOR (formerly X): defines a regression variable, i.e. a variable that can be used in the structural model (used e.g. for time-varying covariates)

- IGNORE: ignores the information of that column for all lines

Column-types used for response-lines:

- OBSERVATION (mandatory, formerly Y): records the measurement/observation for continuous, count, categorical or time-to-event data

- OBSERVATION ID (formerly YTYPE): identifier for the observation type (to distinguish different types of observations, e.g. PK and PD)

- CENSORING (formerly CENS): marks censored data, below the lower limit or above the upper limit of quantification

- LIMIT: upper or lower boundary for the censoring interval in case of CENSORING column

Column-types used for dose-lines:

- AMOUNT (mandatory, formerly AMT): dose amount (from version 2024, mandatory only if the dataset contains STEADY STATE, ADDITIONAL DOSES, INFUSION RATE or INFUSION DURATION columns)

- ADMINISTRATION ID (formerly ADM): identifier for the type of dose (given via different routes for instance)

- INFUSION RATE (formerly RATE): rate of the dose administration (used in particular for infusions)

- INFUSION DURATION (formerly TINF): duration of the dose administration (used in particular for infusions)

- ADDITIONAL DOSES (formerly ADDL): number of doses to add in addition to the defined dose, at intervals INTERDOSE INTERVAL

- INTERDOSE INTERVAL (formerly II): interdose interval for doses added using ADDITIONAL DOSES or STEADY-STATE column types

- STEADY STATE (formerly SS): marks that steady-state has been achieved, and will add a predefined number of doses before the actual dose, at interval INTERDOSE INTERVAL, in order to achieve steady-state

2.3.Data formatting

The dataset format that is used in PKanalix is the same as for the entire MonolixSuite, to allow smooth transitions between applications. In this format, some rules have to be fulfilled, for example:

- Each line corresponds to one individual and one time point.

- Each line can include a single measurement (also called observation), or a dose amount (or both a measurement and a dose amount).

- Dose amount should be indicated for each individual dose in a column AMOUNT, even if it is identical for all.

- Headers are free but there can be only one header line.

If your dataset is not in this format, in most cases, it is possible to format it in a few steps in the data formatting tab, to incorporate the missing information.

In this case, the original dataset should be loaded in the “Format data” box, or directly in the “Data Formatting” tab, instead of the “Data” tab. In the data formatting module, you will be guided to build a dataset in the MonolixSuite format, starting from the loaded csv file. The resulting formatted dataset is then loaded in the Data tab as if you loaded an already-formatted dataset in “Data” directly. Then as for defining any dataset, you can tag columns, accept the dataset, and once accepted, units can be specified in the Data tab and the Filters tab can be used to select only parts of this dataset for analysis. Note that units and filters are neither information to be included in the data file, nor part of the data formatting process.

From the version 2024R1, it is possible to save and reapply data formatting steps on multiple projects, using data formatting presets.

Jump to:

- Data formatting workflow

- Dataset initialization (mandatory step)

- Selecting header lines or lines to exclude to merge header lines or exclude a line

- Tagging mandatory columns such as ID and TIME

- Initialization example Demo project CreateOcc_AdmIdbyCategory.pkx

- Creating occasions from a SORT column to distinguish different sets of measurements within each subject (eg formulations). Demo project CreateOcc_AdmIdbyCategory.pkx

- Selecting an observation type (required to add a treatment)

- Merging observations from several columns

- As observation ids to map them to several outputs in CA. Demo project merge_obsID_ParentMetabolite.pkx

- As occasions to analyze them simultaneously in NCA. Demo project merge_occ_ParentMetabolite.pkx

- Option “Duplicate information from undefined columns”

- Specifying censoring from censoring tags eg “BLQ” instead of a number in an observation column. Demo project DoseAndLOQ_manual.pkx

- Adding doses in the dataset Demo project DoseAndLOQ_manual.pkx

- Reading censoring limits or dosing information from the dataset “by category” or “from data”. Demo projects DoseAndLOQ_byCategory.pkx and DoseAndLOQ_fromData.pkx

- Creating occasions from dosing intervals to analyze separately the measurements following different doses. Demo project doseIntervals_as_Occ.mlxtran

- Handling urine data to merge start and end times in a single column. Demo project Urine_LOQinObs.pkx

- Adding new columns from an external file, eg new covariates, or individual parameters estimated in a previous analysis. Demo warfarin_PKPDseq_project.mlxtran

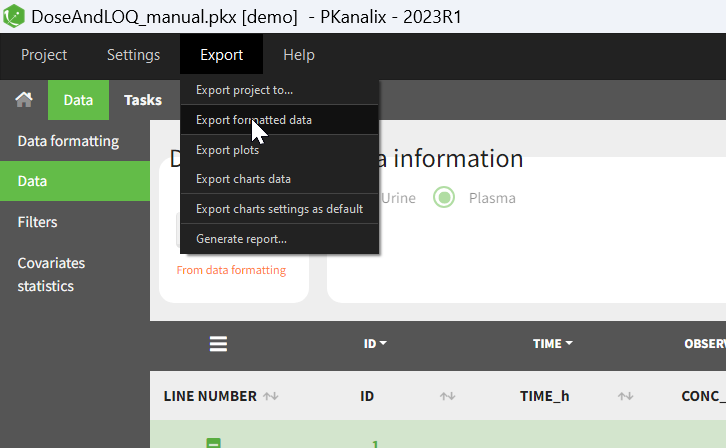

- Exporting the formatted dataset

1. Data formatting workflow

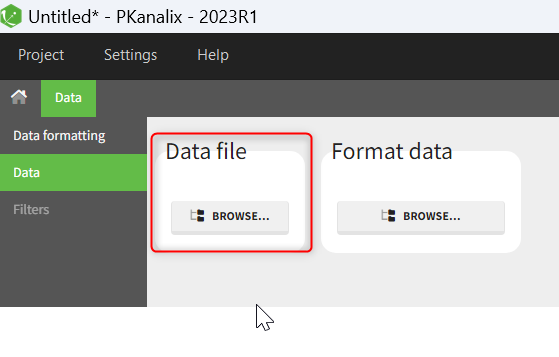

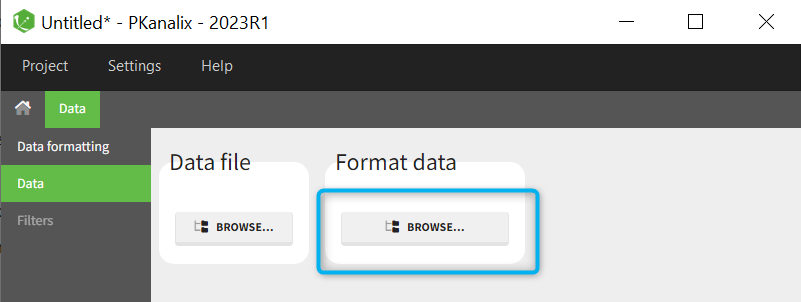

When opening a new project, two Browse buttons appear. The first one, under “Data file”, can be used to load a dataset already in a MonolixSuite-standard format, while the second one, under “Format data”, is used to load a dataset that needs to be formatted in the Data formatting module.

After loading a dataset to format, data formatting operations can be specified in several subtabs: Initialization, Observations, Treatments and Additional columns.

- Initialization is mandatory and must be filled before using the other subtabs.

- Observations is required to enable the Treatments tab.

After Initialization has been validated by clicking on “Next”, a button “Preview” is available from any subtab to view in the Data tab the formatted dataset based on the formatting operations currently specified.

2. Dataset initialization

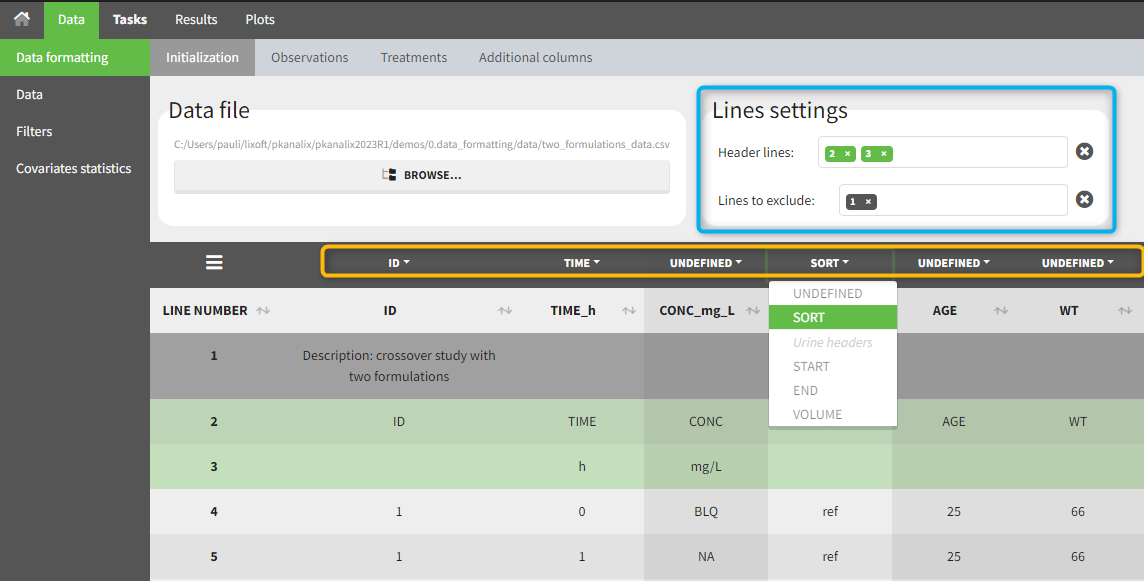

The first tab in Data formatting is named Initialization. This is where the user can select header lines or lines to exclude (in the blue area on the screenshot below) or tag columns (in the yellow area).

Selecting header lines or lines to exclude

These settings should contain line numbers for lines that should be either handled as column headers or that should be excluded.

- Header lines: one or several lines containing column header information. By default, the first line of the dataset is selected as header. If several lines are selected, they are merged by data formatting into a single line, concatenating the cells in each column.

- Lines to exclude (optional): lines that should be excluded from the formatted dataset by data formatting.

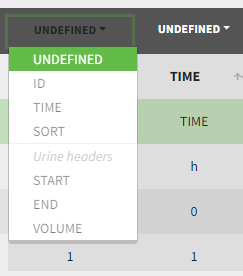

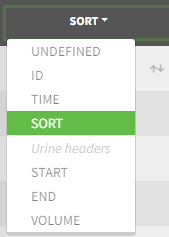

Tagging mandatory columns

Only the columns corresponding to the following tags must be tagged in Initialization, while all the other columns should keep the default UNDEFINED tag:

- ID (mandatory): subject identifiers

- TIME (mandatory): the single time column

- SORT (optional): one or several columns containing SORT variables can be tagged as SORT. Occasions based on these columns will be created in the formatted dataset as described in Section 3.

- START, END and VOLUME (mandatory in case of urine data): these column tags replace the TIME tag in case of urine data, if the urine collection time intervals are encoded in the dataset with two time columns for the start and end times of the intervals. In that case there should also be a column with the urine volume in each interval. See Section 10 for more details.

Initialization example

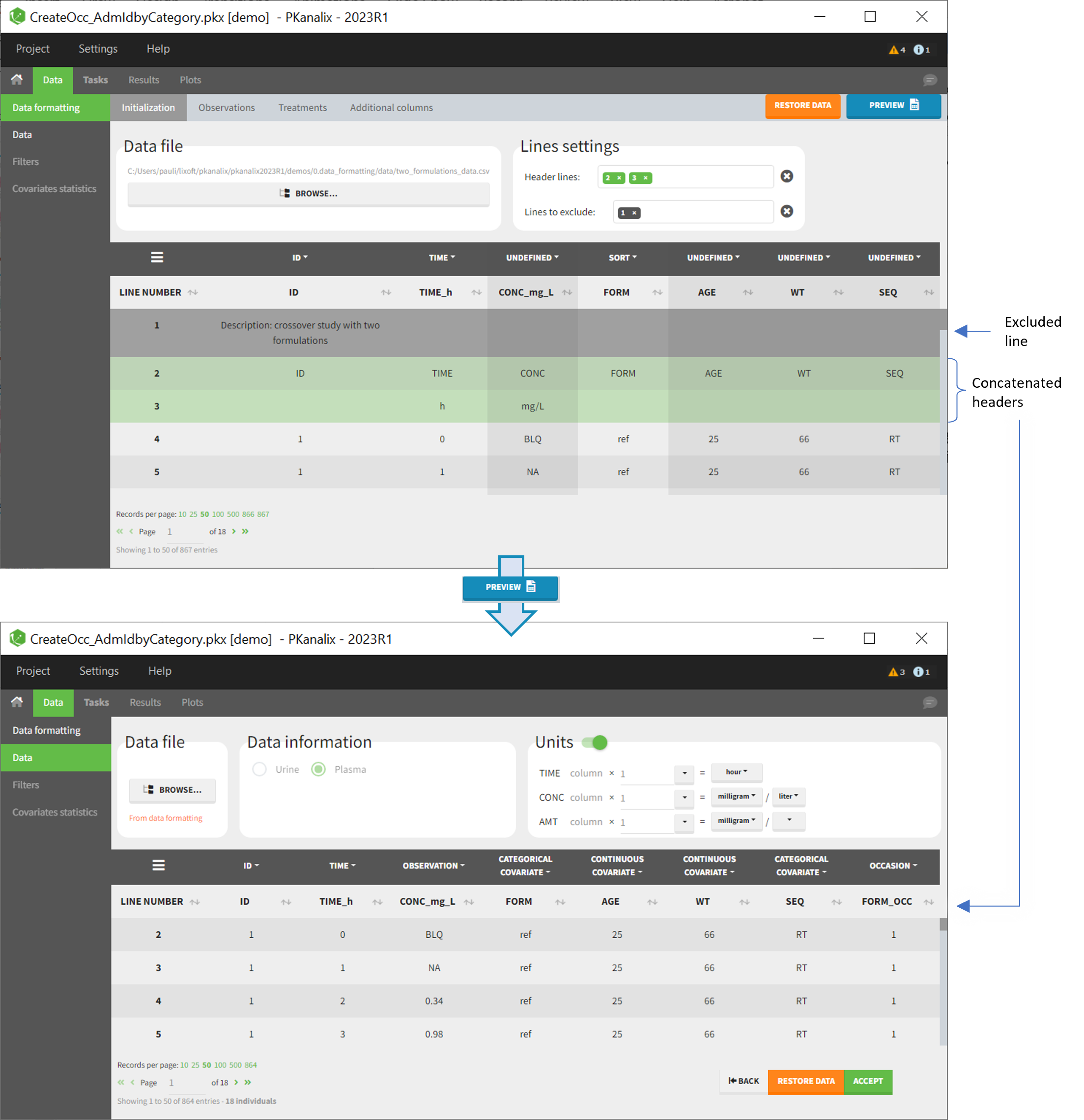

- demo CreateOcc_AdmIdbyCategory.pkx (the screenshot below focuses on the formatting initialization and excludes other elements present in the demo):

In this demo the first line of the dataset is excluded because it contains a description of the study. The second line contains column headers while the third line contains column units. Since the MonolixSuite-standard format allows only a single header line, lines 2 and 3 are merged together in the formatted dataset.
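The header handling described above can be sketched in a few lines of Python. This is only an illustration: the function name, the toy rows and the underscore used to join merged header cells are assumptions, since the text only states that the cells of each column are concatenated.

```python
def format_header(lines, header_lines, exclude_lines=(), sep="_"):
    """Merge several header lines into one and drop excluded lines.

    lines: list of rows, each row being a list of cells.
    header_lines / exclude_lines: 1-based line numbers, as in the interface.
    sep: separator between merged header cells (assumed, not documented).
    """
    header_rows = [lines[n - 1] for n in header_lines]
    # Concatenate the cells of each column, skipping empty cells.
    merged = [sep.join(cell for cell in cells if cell) for cells in zip(*header_rows)]
    skipped = set(header_lines) | set(exclude_lines)
    body = [row for n, row in enumerate(lines, start=1) if n not in skipped]
    return [merged] + body


raw = [
    ["Study description", "", ""],  # line 1: excluded
    ["ID", "TIME", "CONC"],         # line 2: column headers
    ["", "h", "mg/L"],              # line 3: column units
    ["1", "0.5", "2.1"],
]
formatted = format_header(raw, header_lines=[2, 3], exclude_lines=[1])
print(formatted[0])  # ['ID', 'TIME_h', 'CONC_mg/L']
```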

3. Creating occasions from a SORT column

A SORT variable can be used to distinguish different sets of measurements (usually concentrations) within each subject, that should be analyzed separately by PKanalix (for example: different formulations given to each individual at different periods of time, or multiple doses where concentration profiles are available to be analyzed following several doses).

In PKanalix, these different sets of measurements must be distinguished as OCCASIONS (or periods of time), via the OCCASION column-type. However, a column tagged as OCCASION can only contain integers with occasion indexes. Thus, if a column with a SORT variable contains strings, its format must be adapted by Data formatting, in the following way:

- the user must tag the column as SORT in the Initialization subtab of Data formatting,

- the user validates the Initialization with “Next”, then clicks on “Preview” (after optionally defining other data formatting operations),

- the formatted data is shown in Data: the column tagged as SORT is automatically duplicated. The original column is automatically tagged as CATEGORICAL COVARIATE in Data, while the duplicated column, which has the same name appended with “_OCC”, is tagged as OCCASION. This column contains occasion indexes instead of strings.

Example:

- demo CreateOcc_AdmIdbyCategory.pkx (the screenshot below focuses on the formatting of occasions and excludes other elements present in the demo):

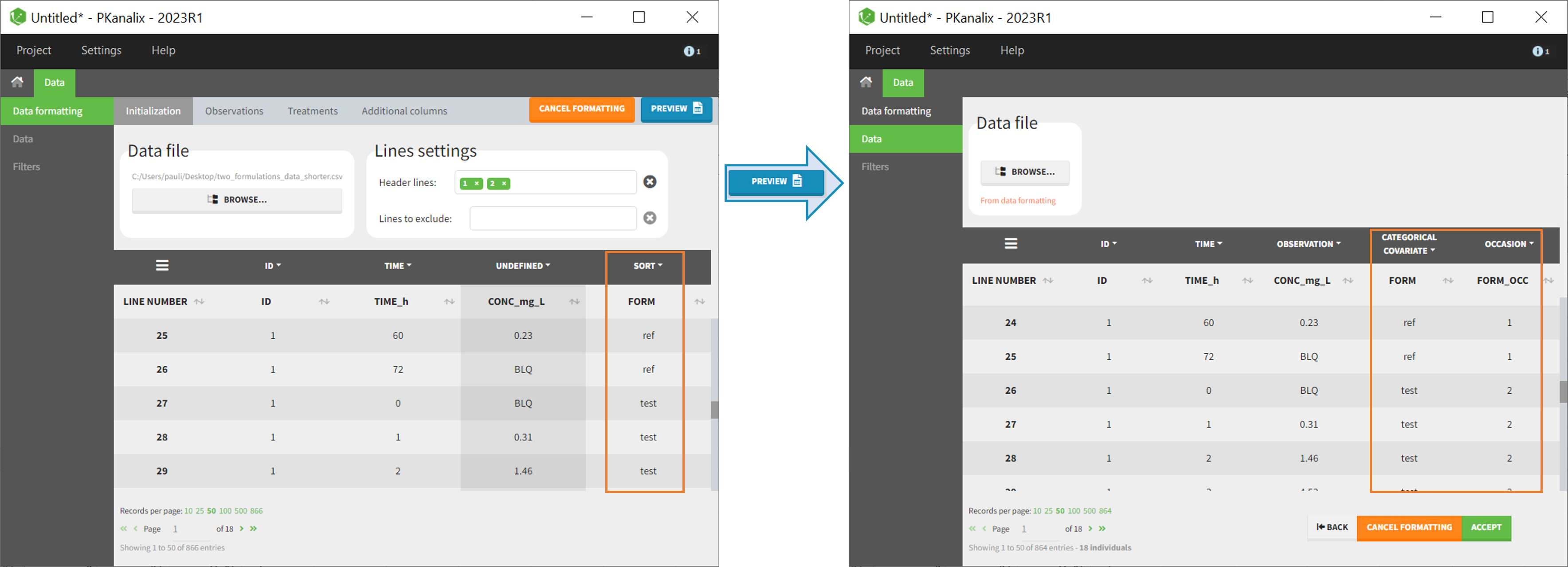

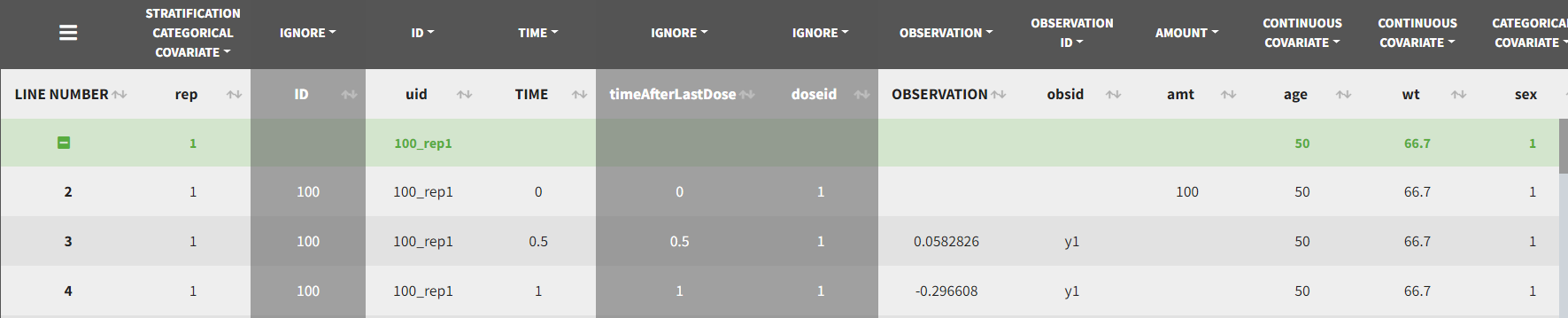

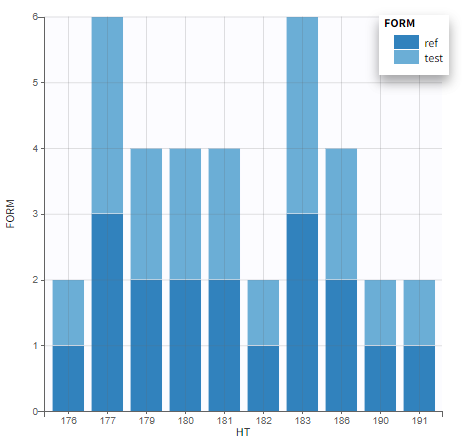

The image below shows lines 25 to 29 from the dataset from the CreateOcc_AdmIdbyCategory.pkx demo, where covariate columns have been removed to simplify the example. This dataset contains two sets of concentration measurements for each individual, corresponding to two different drug formulations administered on different periods. The sets of concentrations are distinguished with the FORM column, which contains “ref” and “test” categories (reference/test formulations). The column is tagged as SORT in Data formatting Initialization. After clicking on “Preview”, we can see in the Data tab that a new column named FORM_OCC has been created with occasion indexes for each individual: for subject 1, FORM_OCC=1 corresponds to the reference formulation because it appears first in the dataset, and FORM_OCC=2 corresponds to the test formulation because it appears second in the dataset.
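The indexing rule described here (occasion indexes follow the order in which categories appear for each subject) can be sketched as follows. The function name and the toy rows are hypothetical, and this is not the PKanalix implementation.

```python
def add_occasion_index(rows, id_col="ID", sort_col="FORM"):
    """Append a <sort_col>_OCC integer column numbered by order of appearance per subject."""
    seen = {}  # subject id -> {category: occasion index}
    out = []
    for row in rows:
        categories = seen.setdefault(row[id_col], {})
        # A new category receives the next index; a known category keeps its index.
        index = categories.setdefault(row[sort_col], len(categories) + 1)
        out.append({**row, f"{sort_col}_OCC": index})
    return out


rows = [
    {"ID": 1, "FORM": "ref"},
    {"ID": 1, "FORM": "ref"},
    {"ID": 1, "FORM": "test"},
    {"ID": 2, "FORM": "test"},
    {"ID": 2, "FORM": "ref"},
]
print([r["FORM_OCC"] for r in add_occasion_index(rows)])  # [1, 1, 2, 1, 2]
```

In this toy data, subject 2 receives the test formulation first, so “test” gets index 1 for that subject. This is why the original string column remains useful as a categorical covariate.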

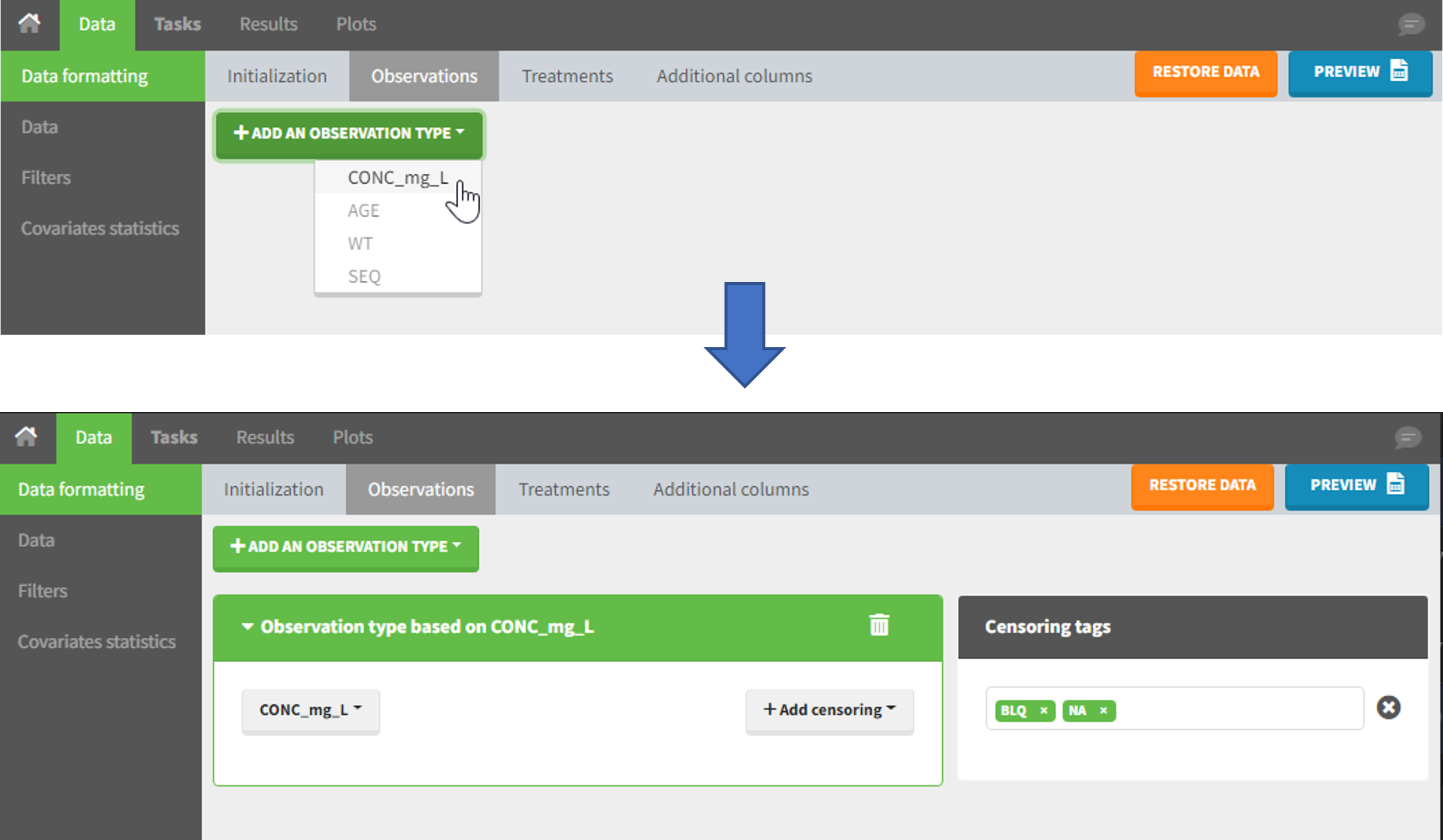

4. Selecting an observation type

The second subtab in Data formatting is used to select one or several observation types. An observation type corresponds to a column of the dataset that contains one type of measurement (usually drug concentrations, but it can also be PD measurements for example). Only columns that have not been tagged as ID, TIME or SORT are available as observation type.

This action is optional and can have several purposes:

- If doses must be added by Data formatting (see Section 7), specifying the column containing observations is mandatory, to avoid duplicating observations on new dose lines.

- If several observation types exist in different columns (for example: concentrations for different analytes, or measurements for PK and PD), they must be specified in Data formatting to be merged into a single observation column (see Section 5).

- In the MonolixSuite-standard format, the column containing observations can only contain numbers, and no string except “.” for a missing observation. Thus if this column contains strings in the original dataset, it must be adapted by Data formatting, with two different cases:

- if the strings are tags for censored observations (usually BLQ: below the limit of quantification), they can be specified in Data formatting to adapt the encoding of the censored observations (see Section 6),

- any other string in the column is automatically replaced by “.” by Data formatting.

5. Merging observations from several columns

The MonolixSuite-standard format allows a single column containing all observations (such as concentrations or PD measurements). Thus if a dataset contains several observation types in different columns (for example: concentrations for different analytes, or measurements for PK and PD), they must be specified in Data formatting to be merged into a single observation column.

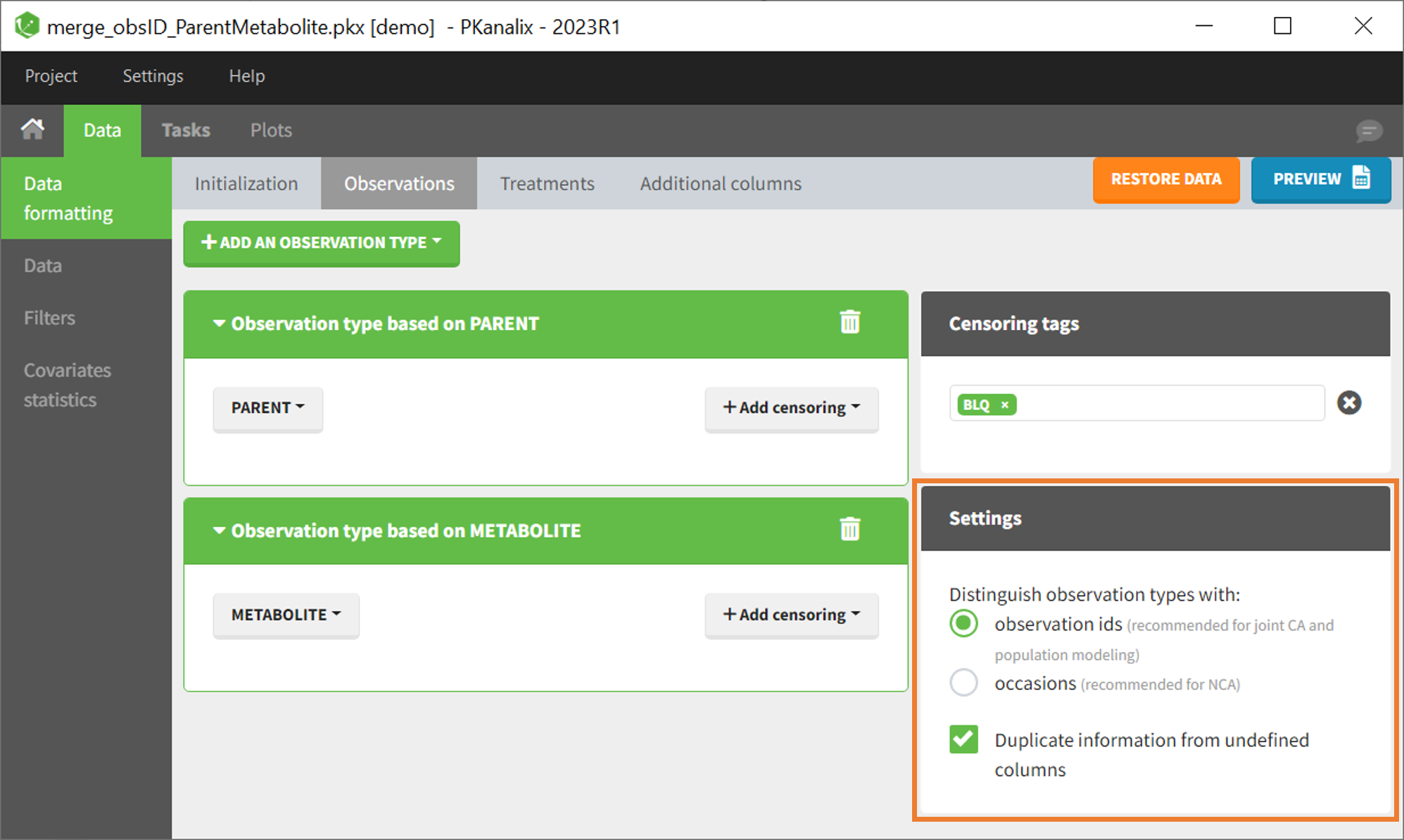

In that case, different settings can be chosen in the area marked in orange in the screenshot below:

- The user must choose between distinguishing observation types with observation ids or occasions.

- The user can unselect the option “Duplicate information from undefined columns”.

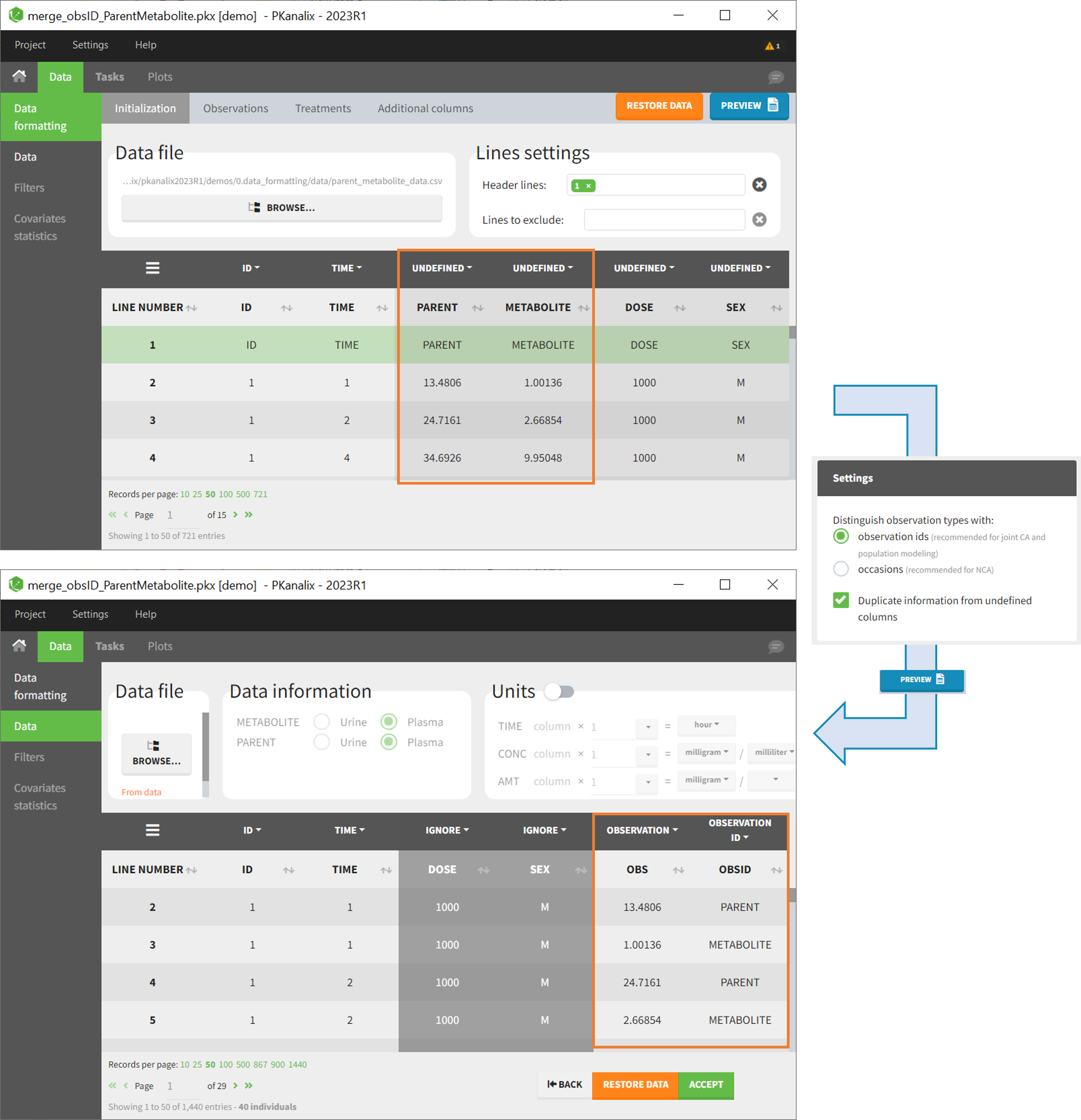

As observation ids

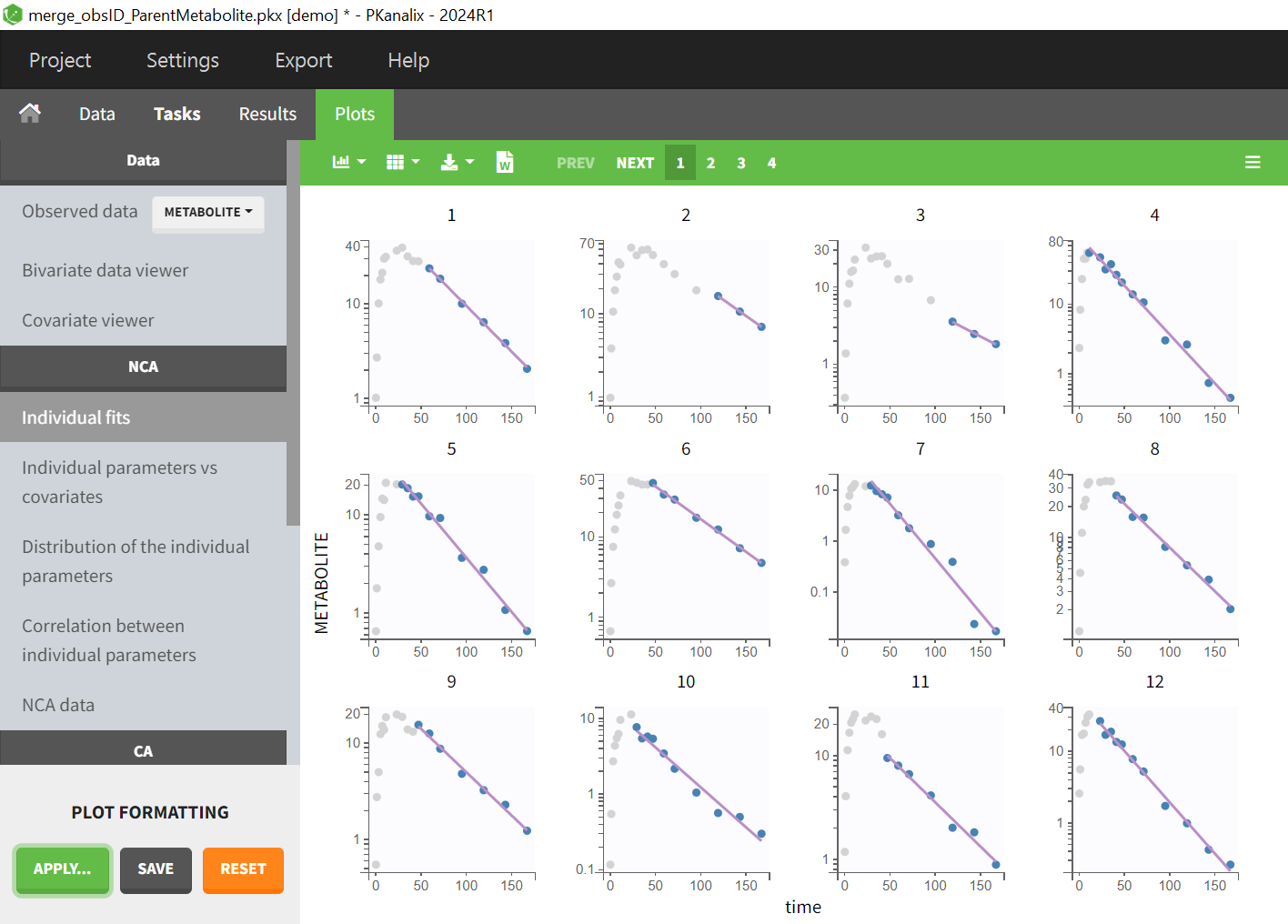

After selecting the “Distinguish observation types with: observation ids” option and clicking “Preview,” the columns for different observation types are combined into a single column called “OBS.” Each row of the dataset is duplicated for each observation type, with one value per observation type. Additionally, an “OBSID” column is created, with the name of the observation type corresponding to the measurement on each row.

This option is recommended for joint modeling of observation types, such as CA in PKanalix or population modeling in Monolix. It is important to note that NCA cannot be performed on two different observation ids simultaneously, so it is necessary to choose one observation id for the analysis.

Example:

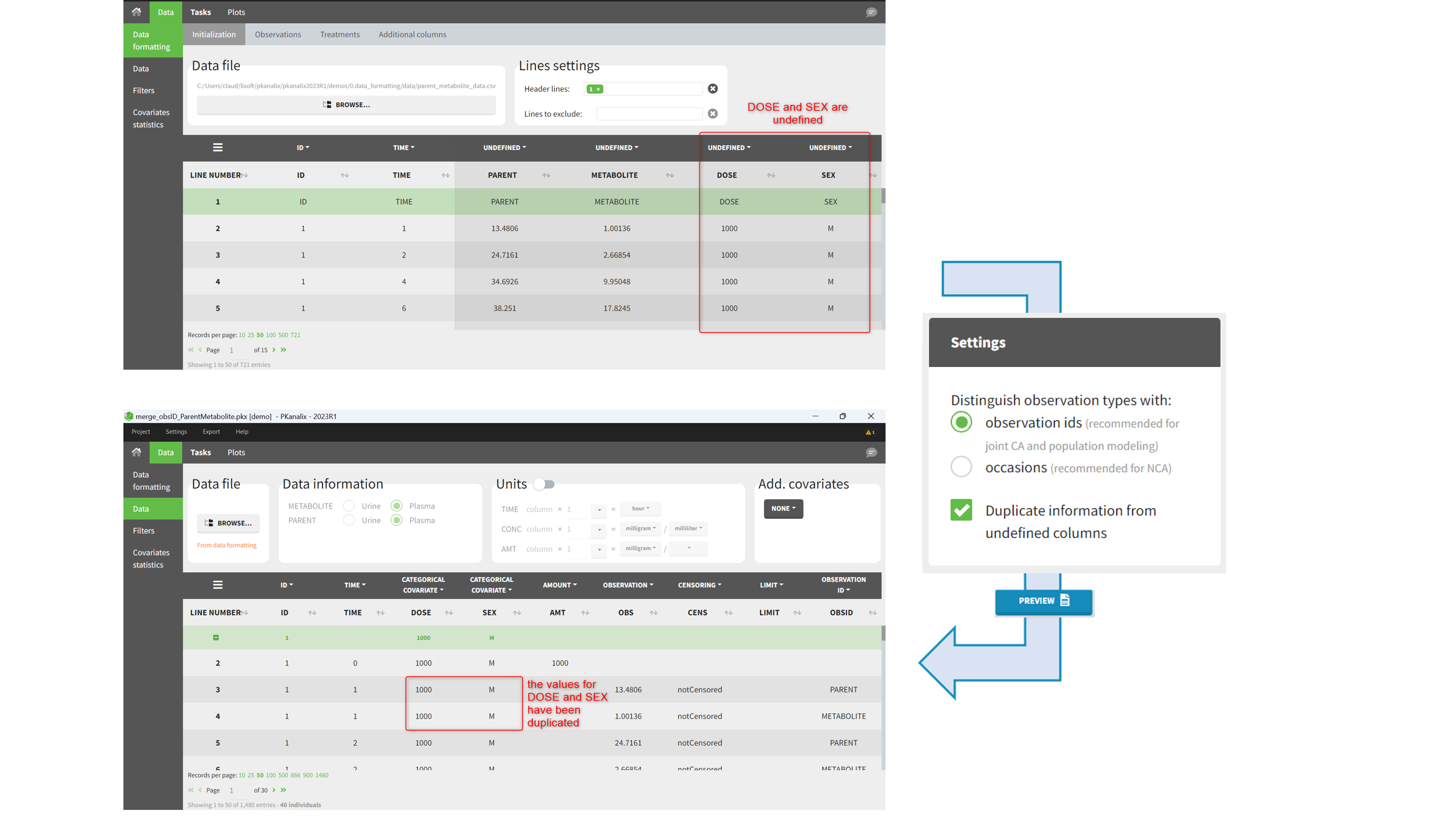

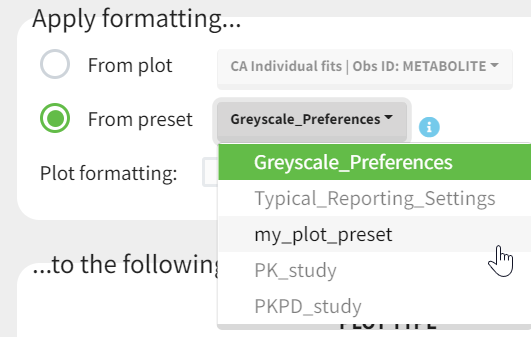

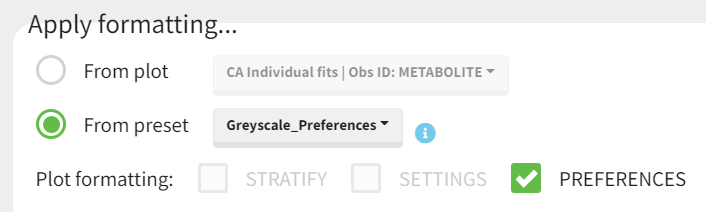

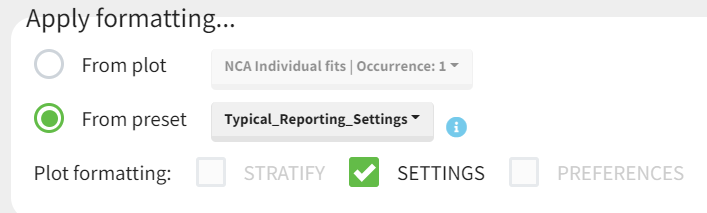

- demo merge_obsID_ParentMetabolite.pkx (the screenshot below focuses on the formatting of observations and excludes other elements present in the demo):

This demo involves two columns that contain drug parent and metabolite concentrations. When merging both observation types with observation ids, a new column called OBSID is generated with categories labeled as “PARENT” and “METABOLITE.”
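A minimal sketch of this wide-to-long merge is given below, with made-up concentration values. The function name is hypothetical; only the OBS and OBSID column names come from the description above.

```python
def merge_as_obs_ids(rows, obs_cols):
    """Stack several observation columns into one OBS column plus an OBSID column."""
    merged = []
    for row in rows:
        # Columns that are not observation types are copied onto every new row.
        shared = {k: v for k, v in row.items() if k not in obs_cols}
        for col in obs_cols:
            merged.append({**shared, "OBS": row[col], "OBSID": col})
    return merged


wide = [{"ID": 1, "TIME": 1.0, "PARENT": 4.2, "METABOLITE": 0.7}]
for line in merge_as_obs_ids(wide, ["PARENT", "METABOLITE"]):
    print(line)
```

Each original row yields one row per observation type, so the number of rows is multiplied by the number of merged columns.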

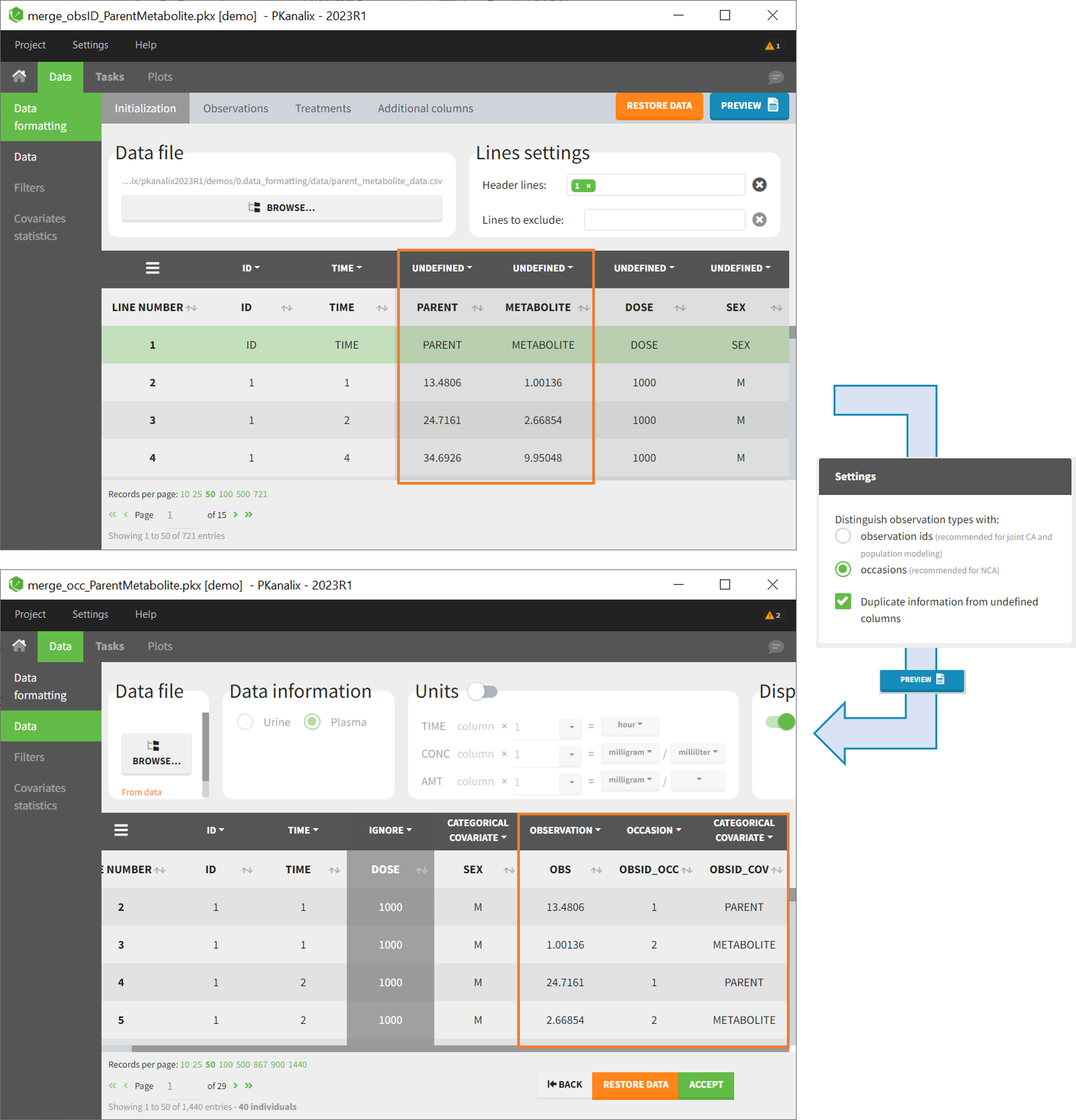

As occasions

After selecting the “Distinguish observation types with: occasions” option and clicking “Preview,” the columns for different observation types are combined into a single column called “OBS.” Each row of the dataset is duplicated for each observation type, with one value per observation type. Additionally, two columns are created: an “OBSID_OCC” column with the index of the observation type corresponding to the measurement on each row, and an “OBSID_COV” with the name of the observation type.

This option is recommended for NCA, which can be run on different occasions for each individual. However, joint modeling of the observation types with CA or population modeling with Monolix cannot be performed with this option.

Example:

- demo merge_occ_ParentMetabolite.pkx:

This demo involves two columns that contain drug parent and metabolite concentrations. When merging both observation types with occasions, two new columns called OBSID_OCC and OBSID_COV are generated, with OBSID_OCC=1 corresponding to OBSID_COV=”PARENT” and OBSID_OCC=2 corresponding to OBSID_COV=”METABOLITE”.
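The occasion-based variant of the merge can be sketched in the same spirit, again with made-up values and a hypothetical function name. It assumes the occasion index follows the order in which the observation types are listed.

```python
def merge_as_occasions(rows, obs_cols):
    """Stack observation columns into OBS, with OBSID_OCC (index) and OBSID_COV (name)."""
    merged = []
    for row in rows:
        shared = {k: v for k, v in row.items() if k not in obs_cols}
        for index, col in enumerate(obs_cols, start=1):
            merged.append(
                {**shared, "OBS": row[col], "OBSID_OCC": index, "OBSID_COV": col}
            )
    return merged


wide = [{"ID": 1, "TIME": 1.0, "PARENT": 4.2, "METABOLITE": 0.7}]
for line in merge_as_occasions(wide, ["PARENT", "METABOLITE"]):
    print(line)
```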

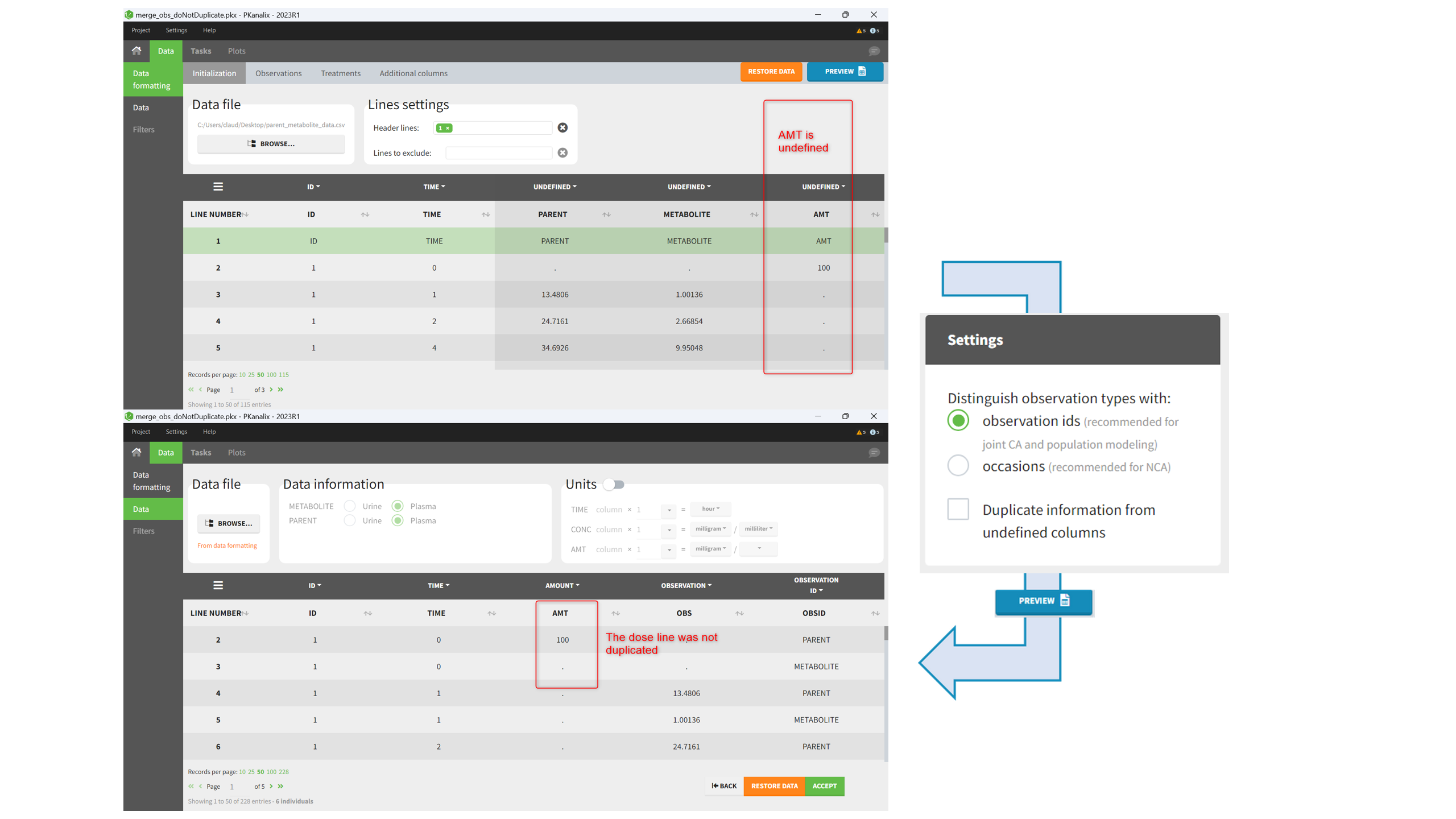

Duplicate information from undefined columns

When merging two observation columns into a single column, all other columns will see their lines duplicated. The data formatting knows how to treat columns which have been tagged in the Initialization tab, but not the other columns (header “UNDEFINED”), which are not used for data formatting. A checkbox lets the user decide whether the information from these columns should be duplicated on the new lines, or whether “.” should be used instead. The default option is to duplicate information, because in general the undefined columns correspond to covariates with one value per individual, so this value is the same for the two lines that correspond to the same id.

It is rare that you need to uncheck this box. An example where you should not duplicate the information is if you already have a column Amount in the MonolixSuite format, so with a dose amount only at the dosing time, and “.” everywhere else. If you do not want to specify amount again in data formatting, and simply want to merge observation columns as observation ids, you should not duplicate the lines of the Amount column which is undefined. Indeed, the dose amounts have been administered only once.

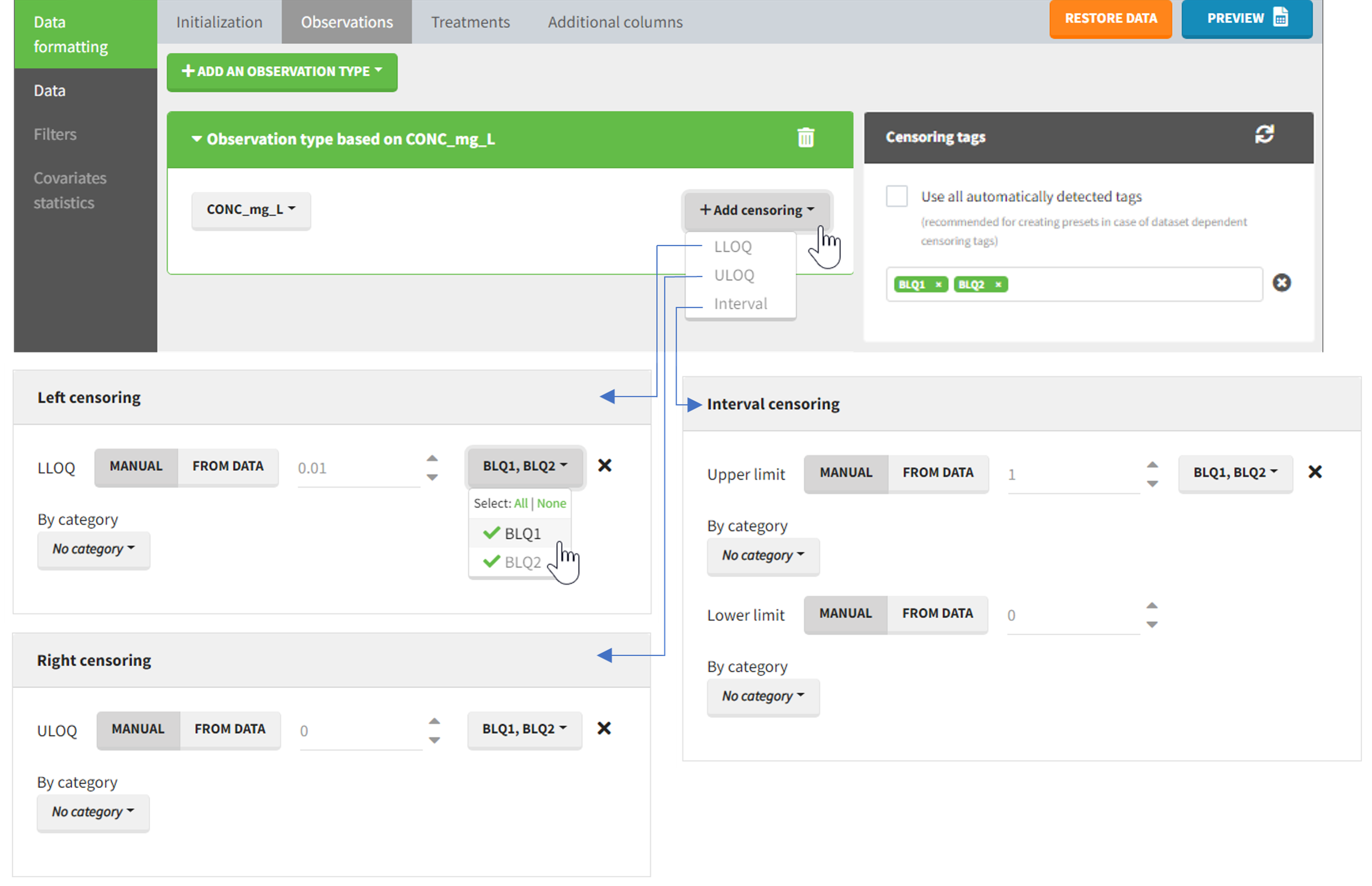

6. Specifying censoring from censoring tags

In the MonolixSuite-standard format, censored observations are encoded with a 1 or -1 flag in a column tagged as CENSORING in the Data tab, while exact observations have a 0 flag in that column. In addition, on rows for censored observations, the LOQ is indicated in the observation column: it is the LLOQ (lower limit of quantification) if CENSORING=1 or the ULOQ (upper limit of quantification) if CENSORING=-1. Finally, to specify a censoring interval, an additional column tagged as LIMIT in the Data tab must exist in the dataset, with the other censoring bound.

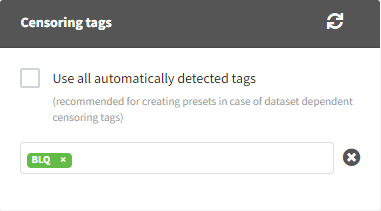

The Data Formatting module can take as input a dataset with censoring tags directly in the observation column, and adapt the dataset format as described above. After selecting one or several observation types in the Observations subtab (see Section 4), all strings found in the corresponding columns are displayed in the “Censoring tags” on the right of the observation types. If at least one string is found, the user can then define a censoring associated with an observation type and with one or several censoring tags, using the button “Add censoring”. Additionally, the option “Use all automatically detected tags” exists and can be used when all censoring tags correspond to the same censoring type (this option is recommended with data formatting presets, if censoring tags vary between the data sets to which the preset will be applied). Three types of censoring can be defined:

- LLOQ: this corresponds to left-censoring, where the censored observation is below a lower limit of quantification (LLOQ) that must be specified by the user. In that case Data Formatting replaces the censoring tags in the observation column by the LLOQ, and creates a new CENS column tagged as CENSORING in the Data tab, with 1 on rows that had censoring tags before formatting, and 0 on other rows.

- ULOQ: this corresponds to right-censoring, where the censored observation is above an upper limit of quantification (ULOQ) that must be specified by the user. Here Data Formatting replaces the censoring tags in the observation column by the ULOQ, and creates a new CENS column tagged as CENSORING in the Data tab, with -1 on rows that had censoring tags before formatting, and 0 on other rows.

- Interval: this is for interval-censoring, where the user must specify the two bounds of a censoring interval to which the censored observation belongs. Data Formatting replaces the censoring tags in the observation column by the upper bound of the interval, and creates two new columns: a CENS column tagged as CENSORING in the Data tab, with 1 on rows that had censoring tags before formatting, and 0 on other rows, and a LIMIT column with the lower bound of the censoring interval on rows that had censoring tags before formatting, and “.” on other rows.

For each type of censoring, available options to define the limits are:

- “Manual“: limits are defined manually, by entering the limit values for all censored observations.

- “By category“: limits are defined manually for different categories read from the dataset.

- “From data“: limits are directly read from the dataset.

The options “by category” and “from data” are described in detail in Section 8.

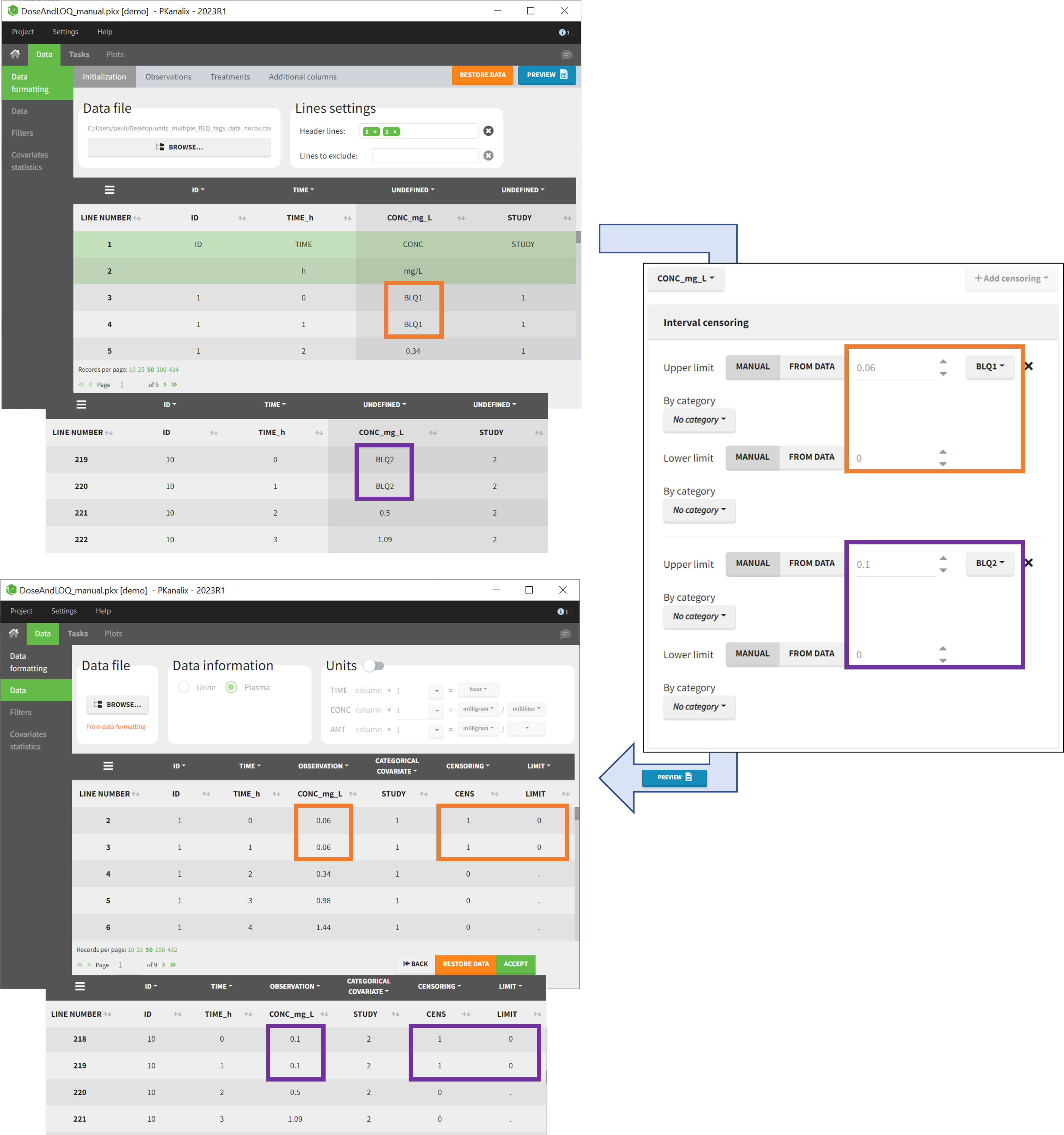

Example:

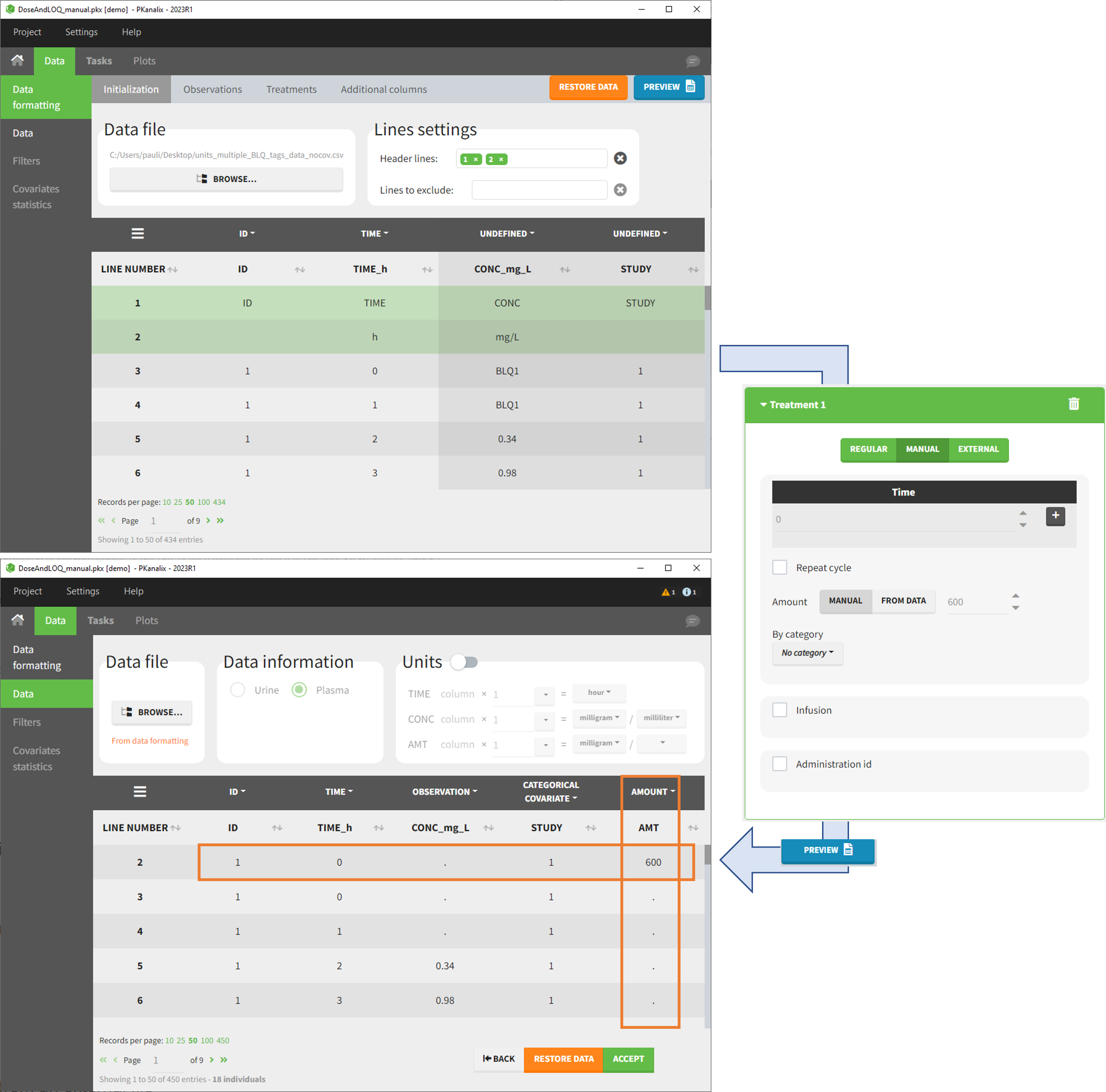

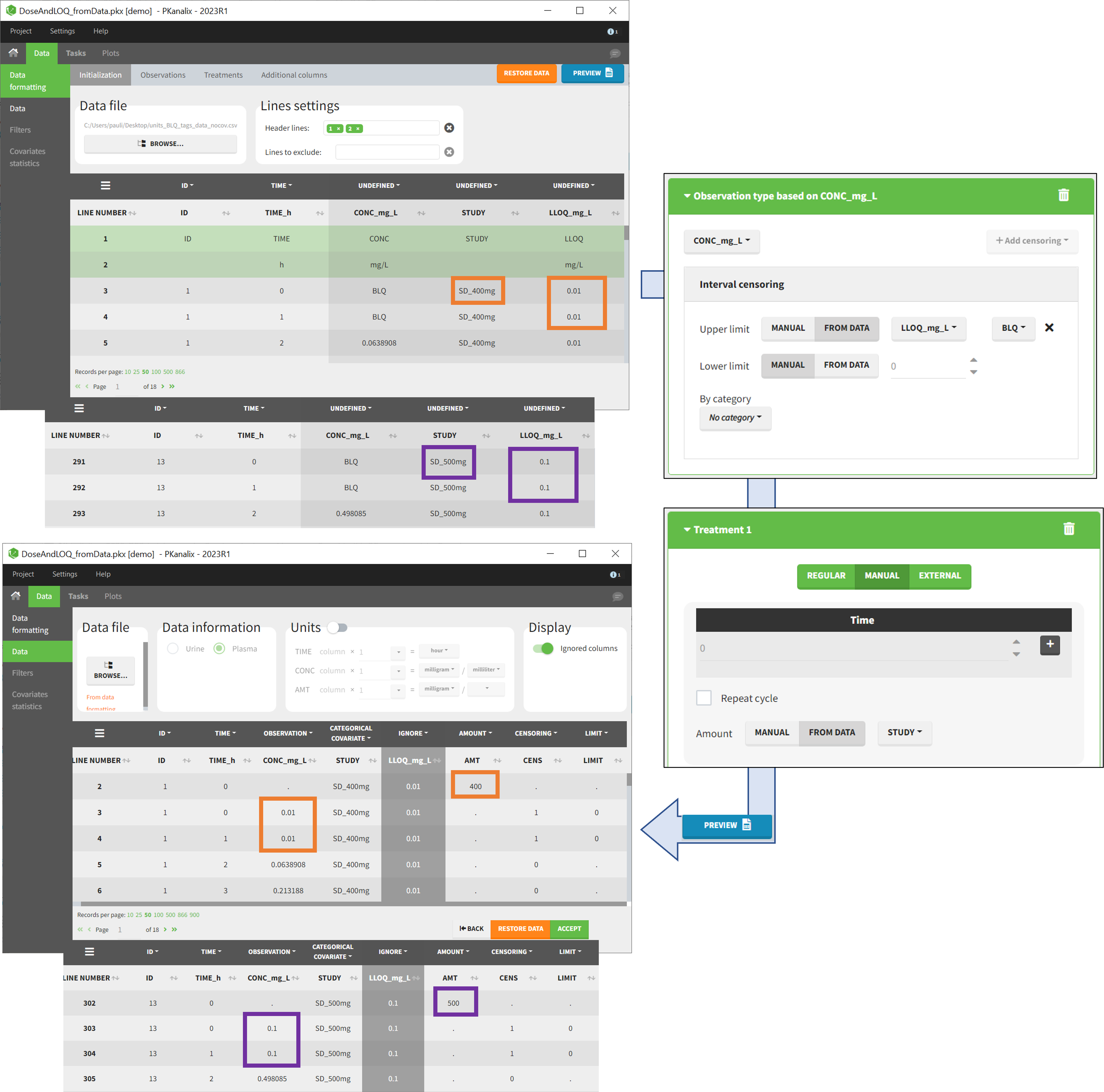

- demo DoseAndLOQ_manual.pkx (the screenshot below focuses on the formatting of censored observations and excludes other elements present in the demo):

In this demo there are two censoring tags in the CONC column: BLQ1 (from Study 1) and BLQ2 (from Study 2), that correspond to different LLOQs. An interval censoring is defined for each censoring tag, with manual limits, where LLOQ=0.06 for BLQ1 and LLOQ=0.1 for BLQ2, and the lower limit of the censoring interval being 0 in both cases.
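The interval-censoring encoding used in this demo can be sketched as below. The function name and the three data rows are invented for illustration; only the tags and limits (BLQ1: 0 to 0.06, BLQ2: 0 to 0.1) come from the demo description.

```python
def encode_interval_censoring(rows, obs_col, limits):
    """Replace censoring tags by the upper bound and add CENS and LIMIT columns.

    limits maps each censoring tag to (lower_bound, upper_bound).
    """
    out = []
    for row in rows:
        value = row[obs_col]
        if value in limits:
            lower, upper = limits[value]
            # Censored row: upper bound in the observation column, lower bound in LIMIT.
            out.append({**row, obs_col: upper, "CENS": 1, "LIMIT": lower})
        else:
            out.append({**row, "CENS": 0, "LIMIT": "."})
    return out


rows = [
    {"ID": 1, "TIME": 0.5, "CONC": "BLQ1"},
    {"ID": 1, "TIME": 1.0, "CONC": 0.82},
    {"ID": 7, "TIME": 0.5, "CONC": "BLQ2"},
]
limits = {"BLQ1": (0, 0.06), "BLQ2": (0, 0.1)}
for line in encode_interval_censoring(rows, "CONC", limits):
    print(line)
```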

7. Adding doses in the dataset

Datasets in MonolixSuite-standard format should contain all information on doses, as dose lines. An AMOUNT column records the amount of the administered doses on dose-lines, with “.” on response-lines. In case of infusion, an INFUSION DURATION or INFUSION RATE column records the infusion duration or rate. If there are several types of administration, an ADMINISTRATION ID column can distinguish the different types of doses with integers.

If doses are missing from a dataset, the Data Formatting module can be used to add dose lines and dose-related columns: after initializing the dataset, the user can specify one or several treatments in the Treatments subtab. The following operations are then performed by Data Formatting:

- a new dose line is inserted in the dataset for each defined dose, with the dataset sorted by subject and times. On such a dose line, the values from the next line are duplicated for all columns, except for the observation column in which “.” is used for the dose line.

- A new column AMT is created with “.” on all lines except on dose lines, on which dose amounts are used. The AMT column is automatically tagged as AMOUNT in the Data tab.

- If administration ids have been defined in the treatment, an ADMID column is created, with “.” on all lines except on dose lines, on which administration ids are used. The ADMID column is automatically tagged as ADMINISTRATION ID in the Data tab.

- If an infusion duration or rate has been defined, a new INFDUR (for infusion duration) or INFRATE (for infusion rate) is created, with “.” on all lines except on dose lines. The INFDUR column is automatically tagged as INFUSION DURATION in the Data tab, and the INFRATE column is automatically tagged as INFUSION RATE.

For each treatment, the dosing schedule can be defined as:

- regular: for regularly spaced dosing times, defined with the start time, inter-dose interval, and number of doses. A “repeat cycle” option repeats the regular dosing schedule to generate a more complex regimen.

- manual: a vector of one or several dosing times, each defined manually. A “repeat cycle” option repeats the manual dosing schedule to generate a more complex regimen.

- external: an external text file with columns id (optional), occasions (optional), time (mandatory), amount (mandatory), admid (administration id, optional), tinf or rate (optional), which is used to define individual doses.
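For the regular schedule, the dosing times follow directly from the three settings. A minimal sketch, with a hypothetical function name and without modeling the “repeat cycle” option:

```python
def regular_dose_times(start, interval, n_doses):
    """Dosing times for a regular schedule: start, start + interval, ..."""
    return [start + k * interval for k in range(n_doses)]


print(regular_dose_times(start=0, interval=12, n_doses=4))  # [0, 12, 24, 36]
```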

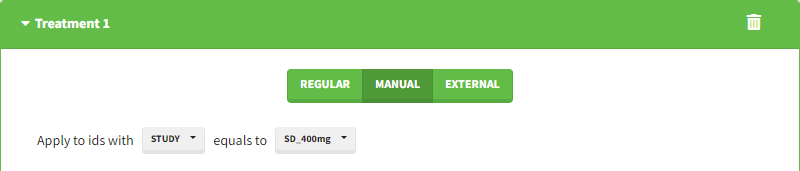

Starting from the 2024R1 version, different dosing schedules can be defined for different individuals, based on information from other columns, which can be useful in cases when different cohorts received different dosing regimens, or when working with data pooled from multiple studies. To define a treatment just for a specific cohort or study, the dropdown on the top of the treatment section can be used:

While dose amounts, administration ids and infusion durations or rates are defined in the external file for external treatments, available options to define them for treatments of type “manual” or “regular” are:

- “Manual“: this applies the same amount (or administration id or infusion duration or rate) to all doses.

- “By category“: dose amounts (or administration id or infusion duration or rate) are defined manually for different categories read from the dataset.

- “From data“: dose amounts (or administration id or infusion duration or rate) are directly read from the dataset.

The options “by category” and “from data” are described in detail in Section 8.

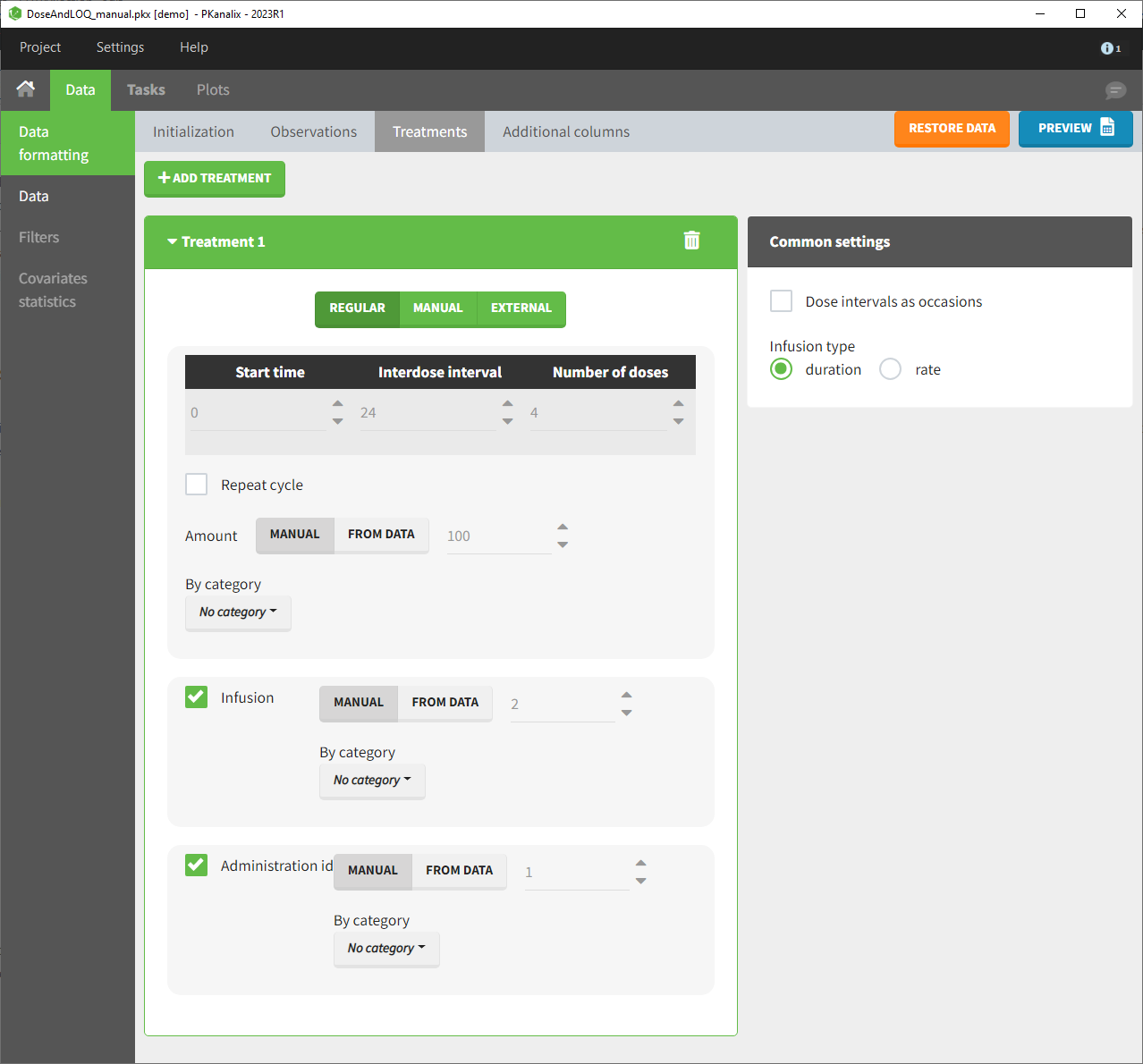

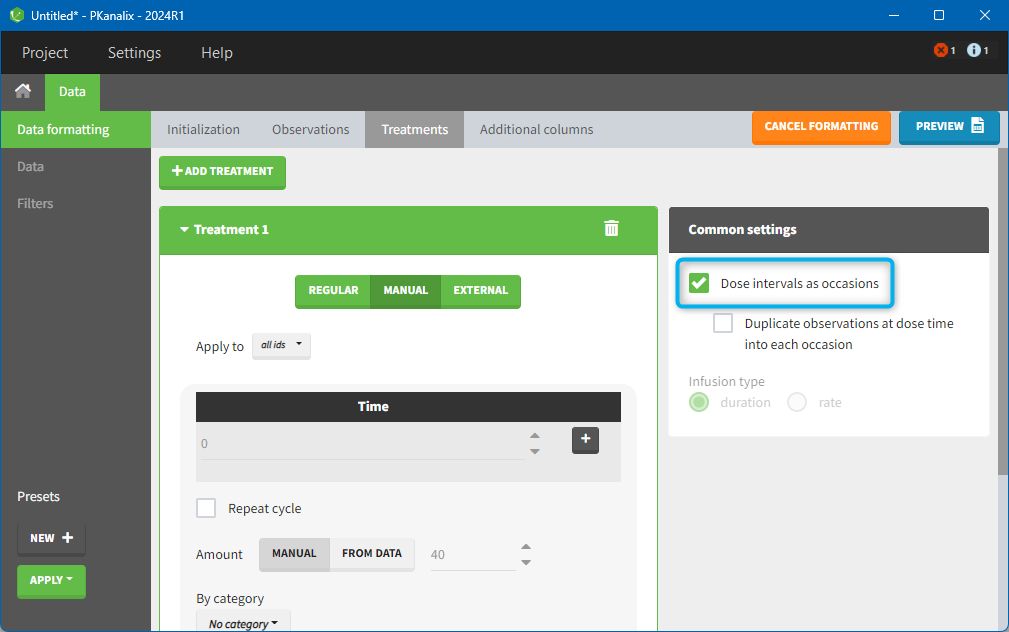

There is a “common settings” panel on the right:

- dose intervals as occasions: this creates a column to distinguish the dose intervals as different occasions (see Section 9).

- infusion type: If several treatments correspond to infusion administration, they need to share the same type of encoding for infusion information: as infusion duration or as infusion rate.

Example:

- demo DoseAndLOQ_manual.pkx (the screenshot below focuses on the formatting of doses and excludes other elements present in the demo):

In this demo, doses are initially not included in the dataset to format. A single dose at time 0 with an amount of 600 is added for each individual by Data Formatting. This creates a new AMT column in the formatted dataset, tagged as AMOUNT.
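The insertion of a dose line can be sketched as follows. This is a simplified illustration with invented concentration values and a hypothetical function name. It assumes the rows are already sorted by ID and time, that the observation column is called CONC, and that every individual has at least one record at or after the dose time.

```python
def add_single_dose(rows, dose_time, amount, obs_col="CONC"):
    """Insert one dose line per individual and add an AMT column.

    The dose line copies the values of the next line, except for the
    observation column, which is set to ".".
    """
    out = []
    dosed = set()
    for row in rows:
        if row["ID"] not in dosed and row["TIME"] >= dose_time:
            out.append({**row, "TIME": dose_time, obs_col: ".", "AMT": amount})
            dosed.add(row["ID"])
        out.append({**row, "AMT": "."})  # response lines carry "." as amount
    return out


rows = [
    {"ID": 1, "TIME": 0.5, "CONC": 1.2},
    {"ID": 1, "TIME": 2, "CONC": 0.8},
    {"ID": 2, "TIME": 0.5, "CONC": 1.5},
]
for line in add_single_dose(rows, dose_time=0, amount=600):
    print(line)
```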

8. Reading censoring limits or dosing information from the dataset

When defining censoring limits for observations (see Section 6) or dose amounts, administration ids, infusion duration or rate for treatments (see Section 7), two options make it possible to define different values for different rows, based on information already present in the dataset: “by category” and “from data”.

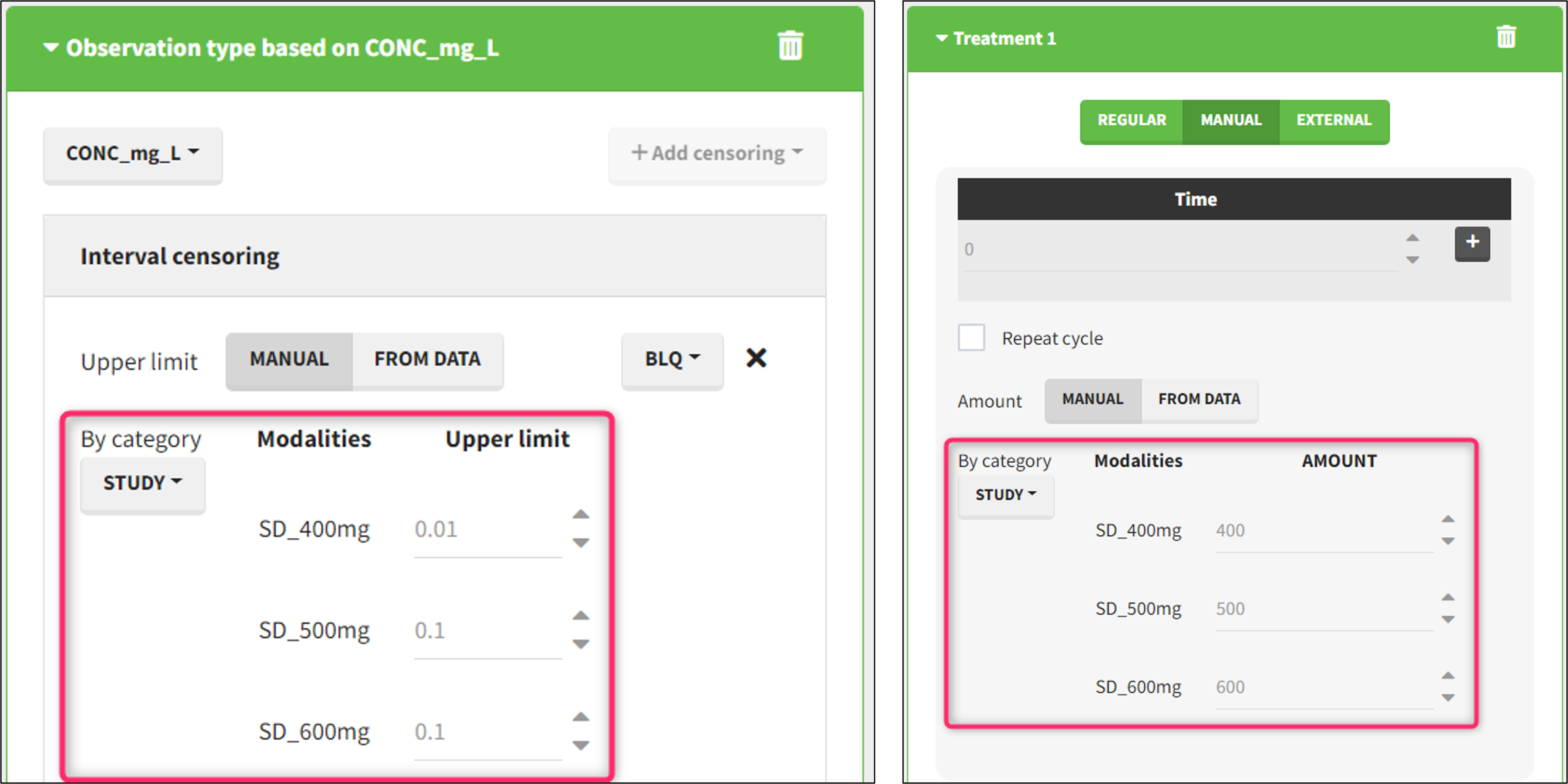

By category

It is possible to define manually different censoring limits, dose amounts, administration ids, infusion durations, or rates for different categories within a dataset’s column. After selecting this column in the “By category” drop-down menu, the different modalities in the column are displayed and a value must be manually assigned to each modality.

- For censoring limits, the censoring limit used to replace each censoring tag depends on the modality on the same row.

- For doses, the value chosen for the newly created column (AMT for amount, ADMID for administration id, INFDUR for infusion duration, INFRATE for infusion rate) on each new dose line depends on the modality on the first row found in the dataset for the same individual and the same time as the dose, or the next time if there is no line in the initial dataset at that time, or the previous time if no time is found after the dose.
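The row-selection rule for doses (same time, else next time, else previous time) can be sketched as below. The function names, the COHORT column and its categories are hypothetical and serve only to illustrate the lookup.

```python
def reference_row(rows, subject_id, dose_time):
    """Row whose modality is used for a new dose line of this individual."""
    subject_rows = [r for r in rows if r["ID"] == subject_id]
    at_or_after = [r for r in subject_rows if r["TIME"] >= dose_time]
    if at_or_after:
        # Same time as the dose if present, otherwise the next time;
        # min() keeps the first row found among equal times.
        return min(at_or_after, key=lambda r: r["TIME"])
    # No record at or after the dose: fall back to the previous time.
    return max(subject_rows, key=lambda r: r["TIME"]) if subject_rows else None


def value_by_category(rows, subject_id, dose_time, column, values):
    """Look up the manually assigned value for the modality of the reference row."""
    return values[reference_row(rows, subject_id, dose_time)[column]]


rows = [
    {"ID": 1, "TIME": 0.5, "COHORT": "low"},
    {"ID": 1, "TIME": 2.0, "COHORT": "low"},
    {"ID": 2, "TIME": 1.0, "COHORT": "high"},
]
amounts = {"low": 100, "high": 200}
print(value_by_category(rows, 1, 0, "COHORT", amounts))  # 100
print(value_by_category(rows, 2, 5, "COHORT", amounts))  # 200
```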

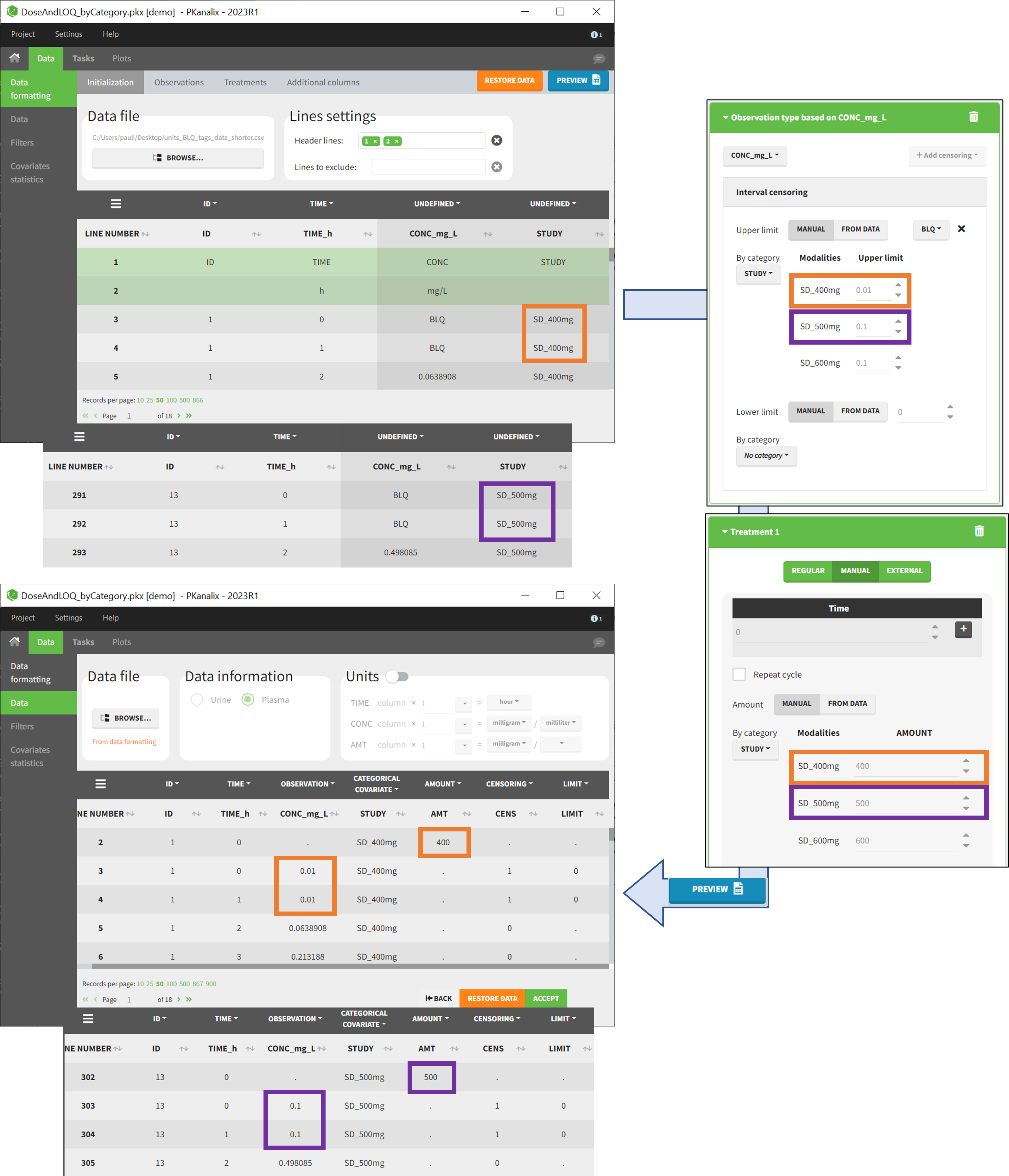

Example:

- demo DoseAndLOQ_byCategory.pkx (the screenshot below focuses on the formatting of doses and excludes other elements present in the demo):

In this demo there are three studies distinguished in the STUDY column with the categories “SD_400mg”, “SD_500mg” and “SD_600mg”. In Data Formatting, a single dose is manually defined at time 0 for all individuals, with different amounts depending on the STUDY category. In addition, a censoring interval is defined for the censoring tags BLQ, with an upper limit of the censoring interval (lower limit of quantification) that also depends on the STUDY category. Three new columns are created by Data Formatting: AMT for dose amounts, CENS for censoring tags (0 or 1), and LIMIT for the lower limit of the censoring intervals. A new dose line is then inserted at time 0 for each individual.

From data

The option “From data” is used to directly read censoring limits, dose amounts, administration ids, infusion durations, or rates from a dataset’s column. The column must contain either numbers or numbers inside strings. In that case, the first number found in the string is extracted (including decimals with .).

- For censoring limits, the censoring limit used to replace each censoring tag is read from the selected column on the same row.

- For doses, the value chosen for the newly created column (AMT for amount, ADMID for administration id, INFDUR for infusion duration, INFRATE for infusion rate) on each new dose line is read from the selected column on the first row found in the dataset for the same individual and the same time as the dose, or the next time if there is no line in the initial dataset at that time, or the previous time if no time is found after the dose.

Example:

- demo DoseAndLOQ_fromData.pkx (the screenshot below focuses on the formatting of doses and censoring and excludes other elements present in the demo):

In this demo there are three studies distinguished in the STUDY column with the categories “SD_400mg”, “SD_500mg” and “SD_600mg”. In Data Formatting, a single dose is manually defined at time 0 for all individuals, with the amount read from the STUDY column. In addition, a censoring interval is defined for the censoring tags BLQ, with an upper limit of the censoring interval (lower limit of quantification) read from the LLOQ_mg_L column. Three new columns are created by Data Formatting: AMT for dose amounts, CENS for censoring tags (0 or 1), and LIMIT for the lower limit of the censoring intervals. A new dose line is then inserted at time 0 for each individual, with amount 400, 500 or 600 for studies SD_400mg, SD_500mg and SD_600mg respectively.
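Extracting the first number from a string, as the “From data” option does with the STUDY categories, can be approximated with a regular expression. The pattern below is an assumption about what counts as a number (unsigned digits with an optional decimal part after a “.”), and the function name is hypothetical.

```python
import re

# Unsigned integer or decimal number, e.g. "400" or "0.06" (assumed pattern).
_NUMBER = re.compile(r"\d+(?:\.\d+)?")


def first_number(cell):
    """Return the first number found in a cell as a float, or None if there is none."""
    match = _NUMBER.search(str(cell))
    return float(match.group()) if match else None


print(first_number("SD_400mg"))   # 400.0
print(first_number("0.06 mg/L"))  # 0.06
print(first_number("n/a"))        # None
```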

9. Creating occasions from dosing intervals

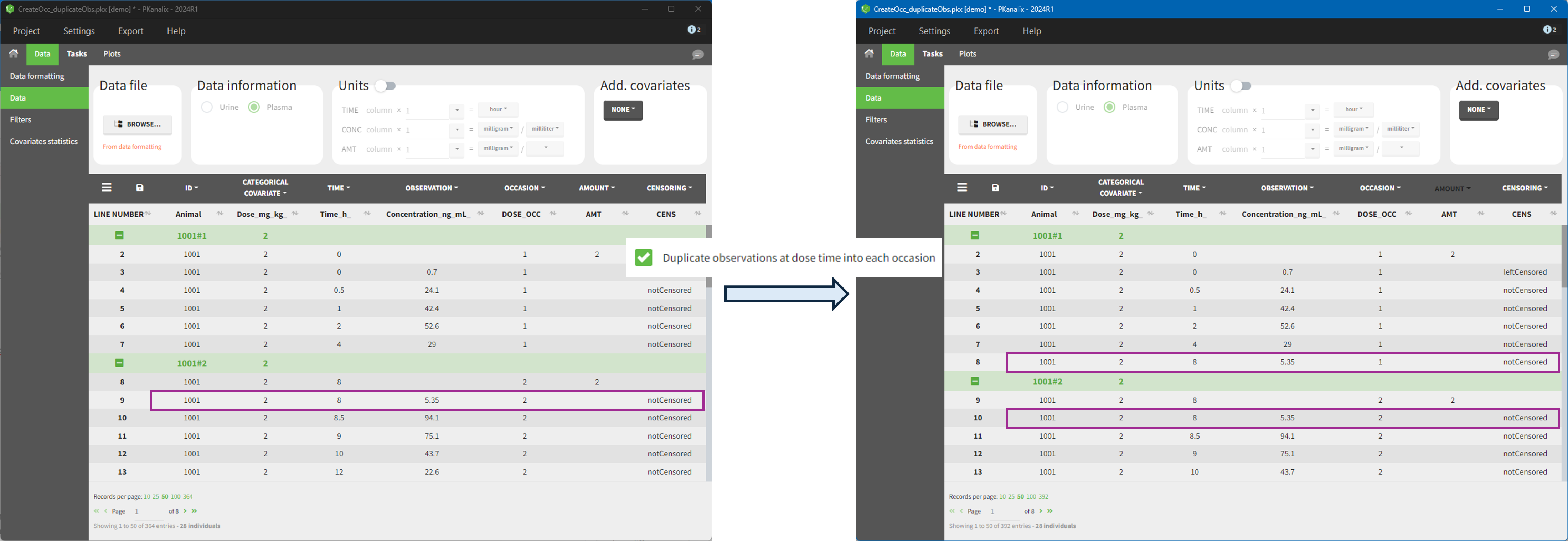

The option “Dose intervals as occasions” in the Treatments subtab of Data Formatting creates an occasion column that distinguishes dose intervals. This is useful if the sets of measurements following different doses should be analyzed independently for the same individual. From the 2024 version on, an additional option “Duplicate observations at dose times into each occasion” is available. It duplicates the observations that fall at exactly the same time as a dose, so that they appear both as the last point of the previous occasion and as the first point of the next occasion.

Example:

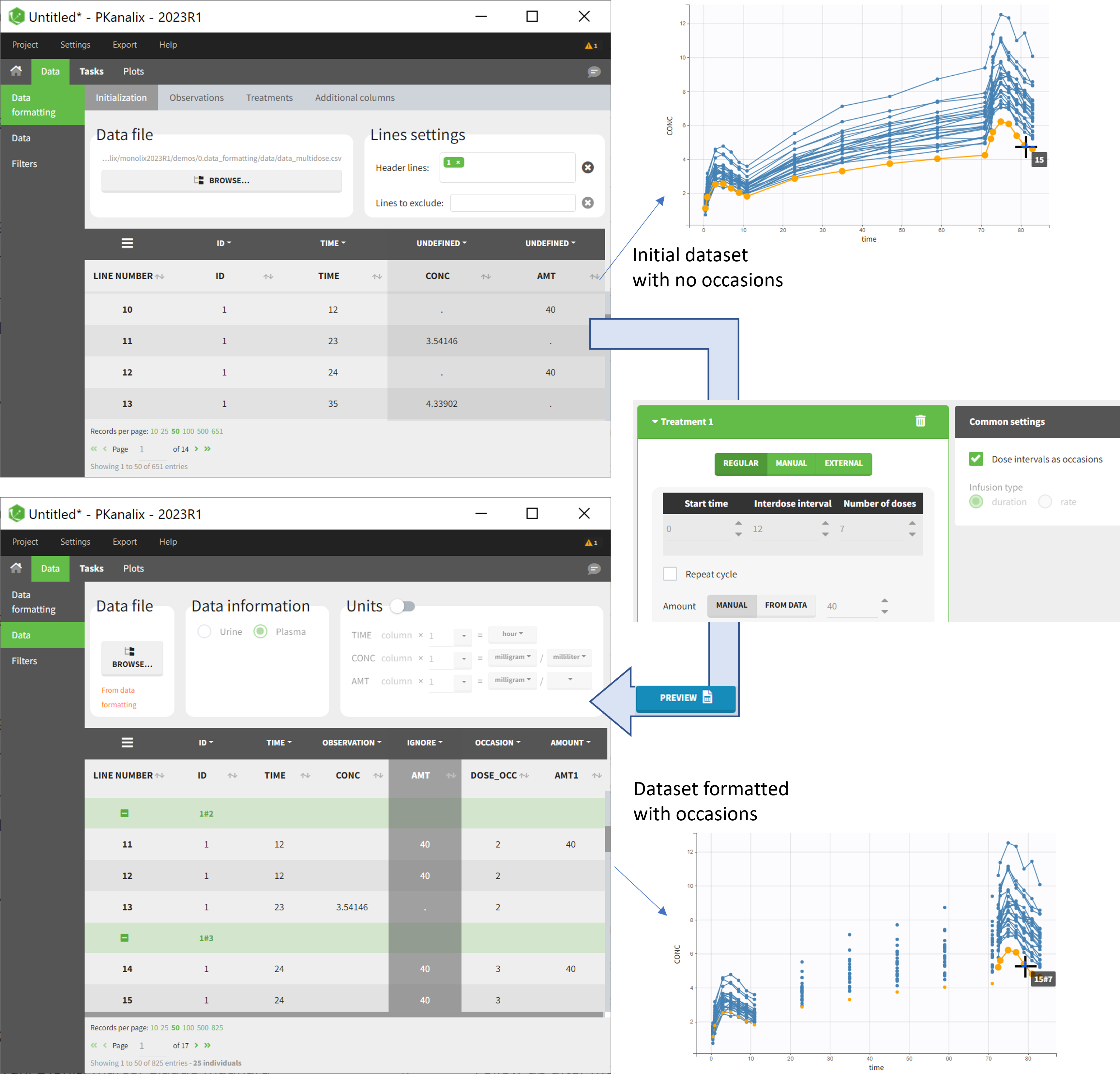

- demo doseIntervals_as_Occ.mlxtran (Monolix demo in the folder 0.data_formatting, here imported into PKanalix):

This demo, imported from a Monolix demo, has an initial dataset in Monolix-standard format, with multiple doses encoded as dose lines with dose amounts in the AMT column. When this dataset is used directly in Monolix or PKanalix, a single analysis is done on each individual concentration profile considering all doses. This means that NCA would be done on the concentrations after the last dose only, and modeling (CA in PKanalix or population modeling in Monolix) would estimate a single set of parameter values for each individual. If instead we want to run separate analyses on the sets of concentrations following each dose, we need to distinguish them as occasions with a new column added by the Data Formatting module. To this end, we define the same treatment as in the initial dataset with Data Formatting (here as regular multiple doses) with the option “Dose intervals as occasions” selected. After clicking Preview, Data Formatting adds two new columns: an AMT1 column with the new doses, to be tagged as AMOUNT instead of the AMT column that will now be ignored, and a DOSE_OCC column to be tagged as OCCASION.
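As a rough illustration of how dose intervals translate into occasions, the following Python sketch assigns an occasion index to each observation time. It is not the PKanalix algorithm: the function name `assign_occasions` is hypothetical, and two behaviours are assumptions of the sketch (observations before the first dose go to occasion 1, and without duplication an observation at a dose time goes to the occasion that starts at that dose).

```python
from bisect import bisect_right


def assign_occasions(obs_times, dose_times, duplicate_at_dose=False):
    """Return (time, occasion) rows, with occasions numbered from 1.

    duplicate_at_dose mimics the option "Duplicate observations at dose
    times into each occasion": an observation at exactly a dose time
    (other than the first dose) is emitted twice, once as the last point
    of the previous occasion and once as the first point of the next one.
    """
    doses = sorted(dose_times)
    rows = []
    for time in obs_times:
        # number of doses given at or before this time (at least occasion 1)
        occasion = max(1, bisect_right(doses, time))
        if duplicate_at_dose and time in doses[1:]:
            rows.append((time, occasion - 1))
        rows.append((time, occasion))
    return rows
```

For doses at times 0 and 24, an observation at time 24 is assigned to occasion 2 only, or to occasions 1 and 2 when `duplicate_at_dose=True`.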

Example:

- demo CreateOcc_duplicateObs.pkx:

10. Handling urine data

In PKanalix-standard format, the start and end times of urine collection intervals must be recorded in a single column, tagged with the TIME column-type, where the end time of an interval automatically acts as the start time of the next interval (see here for more details). If a dataset contains start and end times in two different columns, Data Formatting can merge them into a single column. This is done automatically by tagging these two columns as START and END in the Initialization subtab of Data Formatting (see Section 2). In addition, the column containing the urine collection volume must be tagged as VOLUME.
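The merge of START and END columns into a single time column can be pictured with the Python sketch below. It is an illustration only: the function name `merge_start_end` is hypothetical, and the placement of the volume on the row at the end of its interval (with no volume on a row that only opens an interval) is an assumption of the sketch rather than something stated in this section.

```python
def merge_start_end(intervals):
    """Merge (start, end, volume) collection intervals into single-time rows.

    Returns (time, volume) rows. Assumption of this sketch: the volume is
    carried by the row at the END of its interval, and a row that only
    opens an interval carries no volume (None).
    """
    rows = []
    previous_end = None
    for start, end, volume in sorted(intervals):
        if start != previous_end:
            # no earlier interval ends here, so an opening row is needed
            rows.append((start, None))
        rows.append((end, volume))
        previous_end = end
    return rows
```

Contiguous intervals 0-4, 4-8 and 8-12 therefore give the times 0, 4, 8 and 12, each end time also acting as the start of the following interval.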

Example:

- demo Urine_LOQinObs.pkx:

11. Adding new columns from an external file

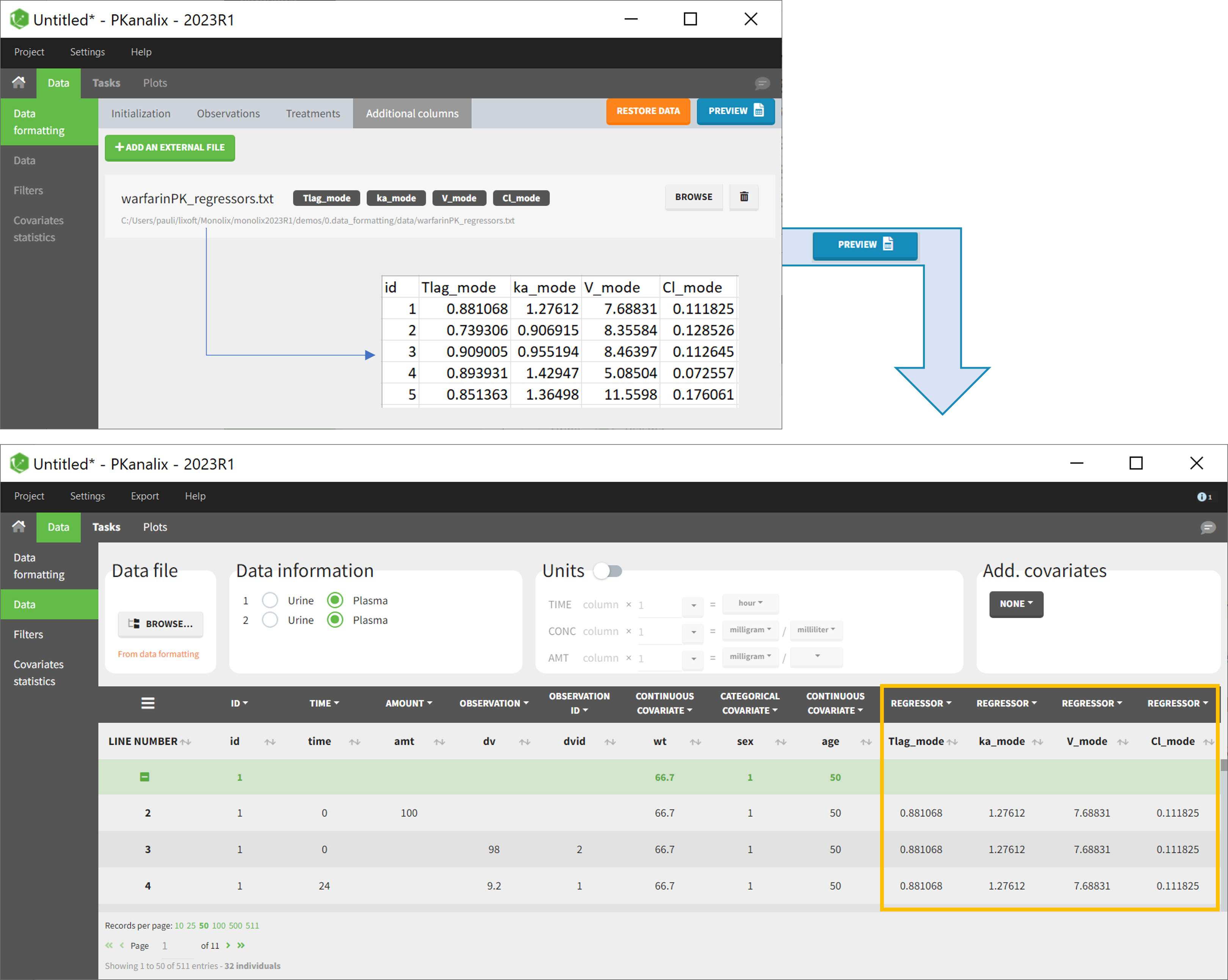

The last subtab is used to insert additional columns in the dataset from a separate file. The external file must contain a table with a column named ID or id with the same subject identifiers as in the dataset to format, and other columns with a header name and individual values (numbers or strings). There can be only one value per individual, which means that the additional columns inserted in the formatted dataset can contain only a constant value within each individual, and not time-varying values.

Examples of additional columns that can be added with this option are:

- individual parameters estimated in a previous analysis, to be read as regressors to avoid estimating them. Time-varying regressors are not handled.

- new covariates.

If occasions are defined in the formatted dataset, it is possible to have an occasion column in the external file and values defined per subject-occasion.
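Conceptually, inserting columns from an external file is a join on the subject identifier (and on the occasion when one is provided). The Python sketch below illustrates this idea on lists of dictionaries. The function name `add_external_columns`, the column names, and the use of `None` for subjects missing from the external table are assumptions for illustration, not PKanalix behaviour.

```python
def add_external_columns(dataset, external, keys=("ID",)):
    """Copy the per-subject columns of `external` onto every row of `dataset`.

    dataset, external: non-empty lists of dicts (one dict per row).
    keys: columns identifying a subject, e.g. ("ID",) or ("ID", "OCC").
    """
    lookup = {tuple(row[k] for k in keys): row for row in external}
    new_columns = [name for name in external[0] if name not in keys]
    merged = []
    for row in dataset:
        match = lookup.get(tuple(row[k] for k in keys), {})
        # the same value is repeated on all rows of a subject (no time-varying values)
        merged.append({**row, **{name: match.get(name) for name in new_columns}})
    return merged
```

Passing `keys=("ID", "OCC")` corresponds to the case where values are defined per subject-occasion.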

Example:

- demo warfarin_PKPDseq_project.mlxtran (Monolix demo in the folder 0.data_formatting, here imported into PKanalix):

This demo imported from a Monolix demo has an initial PKPD dataset in Monolix-standard format. The option “Additional columns” is used to add the PK parameters estimated on the PK part of the data in another Monolix project.

2.3.1.Data formatting presets

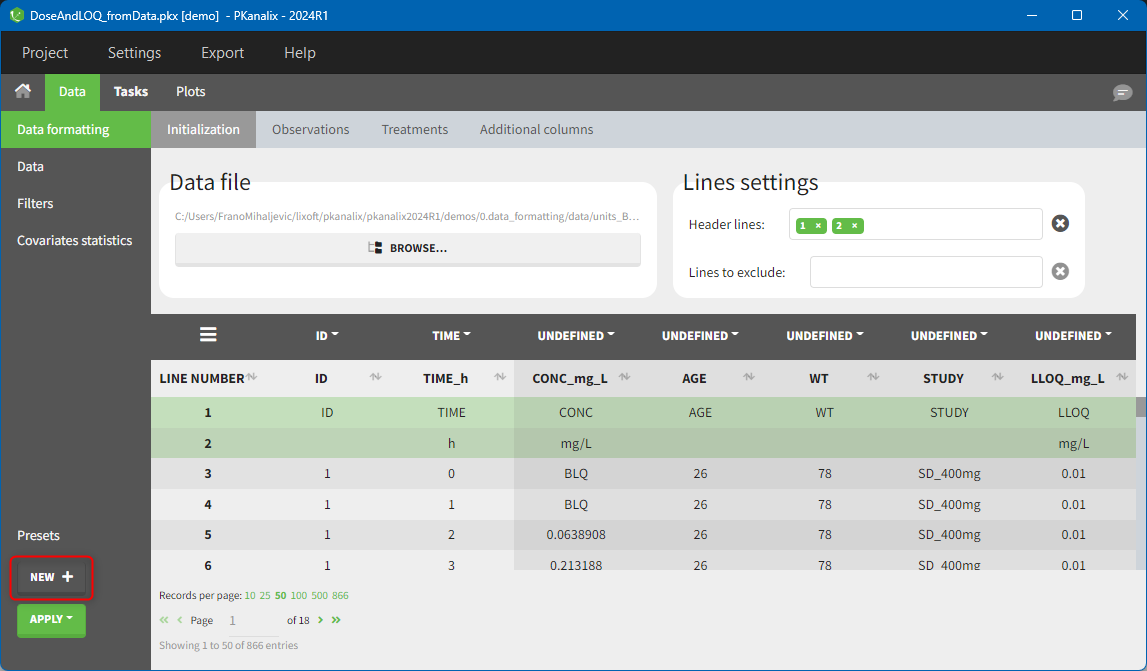

Starting from the 2024R1 version, if users need to perform the same or similar data formatting steps with each new project, a data formatting preset can be created and applied for new projects.

A typical workflow for using data formatting presets is as follows:

- Data formatting steps are performed on a specific project.

- Steps are saved in a data formatting preset.

- Preset is reapplied on multiple new projects (manually or automatically).

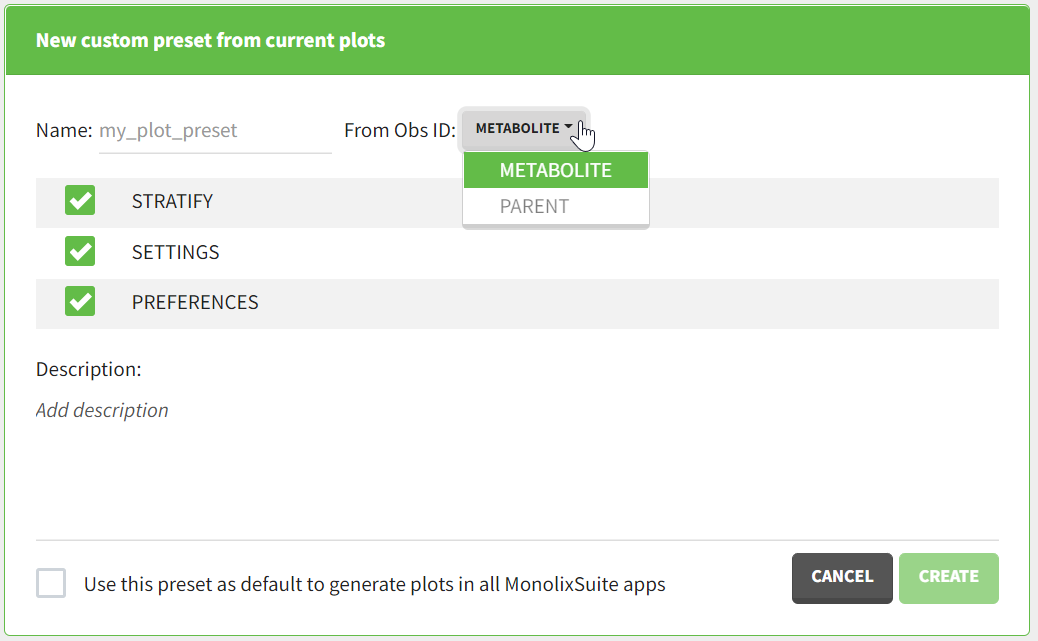

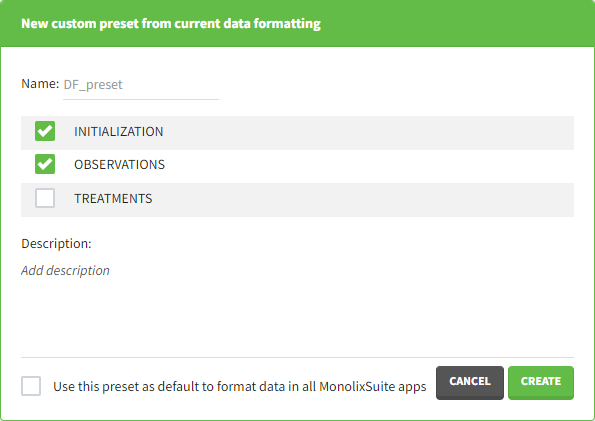

Creating a data formatting preset

After data formatting steps are done on a project, a preset can be created by clicking on the New button in the bottom left corner of the interface, while in the Data formatting tab:

Clicking on this button will open a pop-up window with a form that contains four parts:

- Name: contains a name of the preset, this information will be used to distinguish between presets when applying them.

- Configuration: contains three checkboxes (initialization, observations, treatments). Users can choose which steps of data formatting will be saved in the preset. If option “External” was used in creating treatments, the external file paths will not be saved in the preset.

- Description: a custom description can be added.

- Use this preset as default to format data in all MonolixSuite apps: if ticked, the preset will be automatically applied every time a data set is loaded for data formatting in PKanalix and Monolix.

After saving the preset by clicking on Create, the description will be updated with the summary of all the steps performed in data formatting.

Note that if a user wants to save censoring information in the data formatting preset, and the censoring tags change between projects (e.g., a censoring tag such as <LOQ=0.1> is used with different numbers in different projects), the option “Use all automatically detected tags” should be used in the Observations tab. This way, no specific censoring tags are saved in the preset; instead, they are automatically detected and applied each time the preset is applied.

Applying a preset

A preset can be applied manually using the Apply button in the bottom left corner of the data formatting tab. Clicking on the Apply button will open a dropdown selection of all saved presets and the desired preset can be chosen.

The Apply button is enabled only after the initialization step is performed (ID and TIME columns are selected and the button Next was clicked). This allows PKanalix to fall back to the initialized state if the initialization step from a preset fails (e.g., if the ID and TIME column headers saved in the preset do not exist in the new data set).

After a preset is applied, PKanalix tries to apply all data formatting steps saved in the preset. If certain steps cannot be applied (e.g., some censoring tags or column headers saved in the preset do not exist), an error message appears.

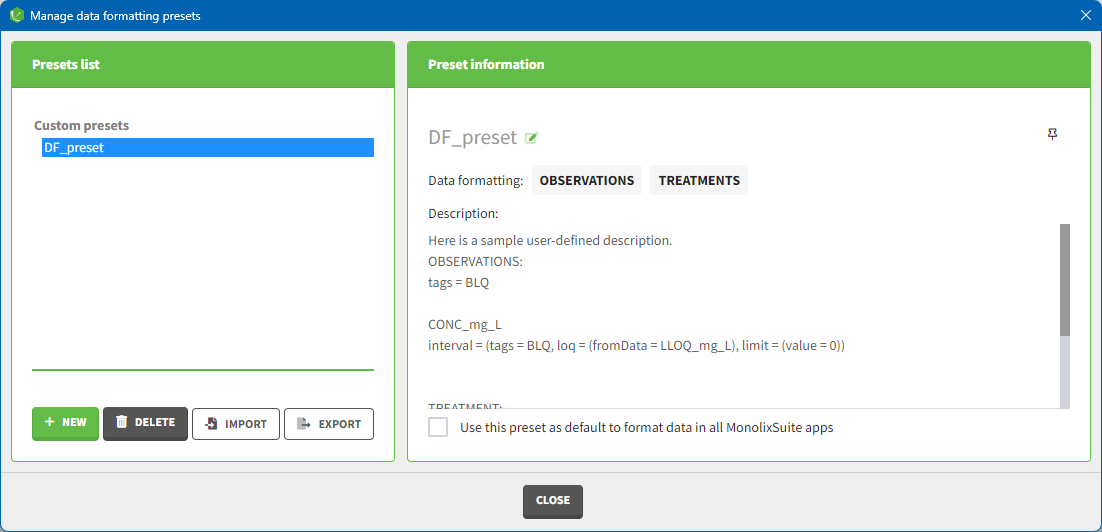

Managing presets

Presets can be created, deleted, edited, imported and exported by clicking on Settings > Manage Presets > Data Formatting.

The pop-up window allows users to:

- Create presets: clicking on the button New has the same behavior as clicking on the button New in the Data formatting tab (described in the section “Creating a data formatting preset”).

- Delete presets: clicking on the button Delete will permanently delete a preset. Clicking on this button will not ask for confirmation.

- Edit presets: by clicking on a preset in the left panel, the preset information will appear in the right panel. The name and description of the preset can then be updated, and the preset can be selected to automatically format data in PKanalix and Monolix.

- Export presets: a selected preset can be exported as a lixpst file which can be shared between users and imported using the Import button.

- Import presets: a user can import a preset from a lixpst file exported from another computer.

Additionally, an option to pin presets (always show them on top) can be used by clicking on the icon to facilitate the usage of presets when a user has a lot of them.

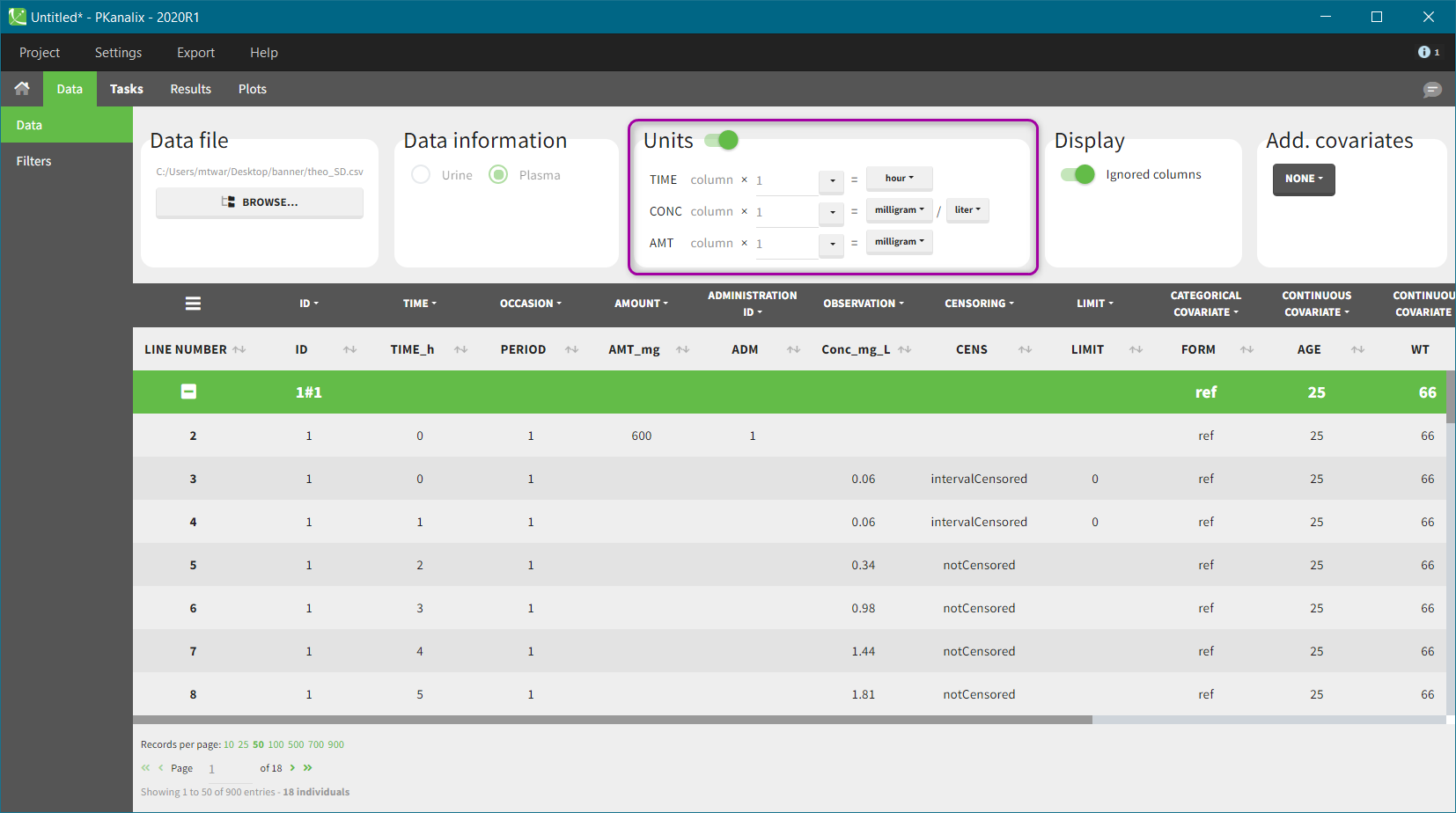

2.4.Units: display and scaling

- PKanalix displays the units of the NCA and CA parameters, calculated automatically from the units of measurement that the user provides for the dataset. Units are shown in the results tables and on plots, and are added to the saved files.

- PKanalix can scale the values of a dataset to represent outputs in preferred units, which facilitates the interpretation of the results. Scaling is done in the PKanalix data manager and does not change the original data file.

- Units definition

- Units of the NCA parameters

- Units of the CA parameters

- Units display

- Units preferences

Units definition

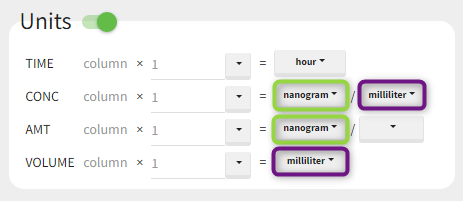

Units of the NCA and CA parameters are considered as combinations of units of: time, amount and volume. For instance, unit of AUC is [concentration unit * time unit] = [amount unit / volume unit * time unit]. These quantities correspond to columns in a typical PK dataset: time of measurements, observed concentration, dose amount (and volume of collected urine when relevant).

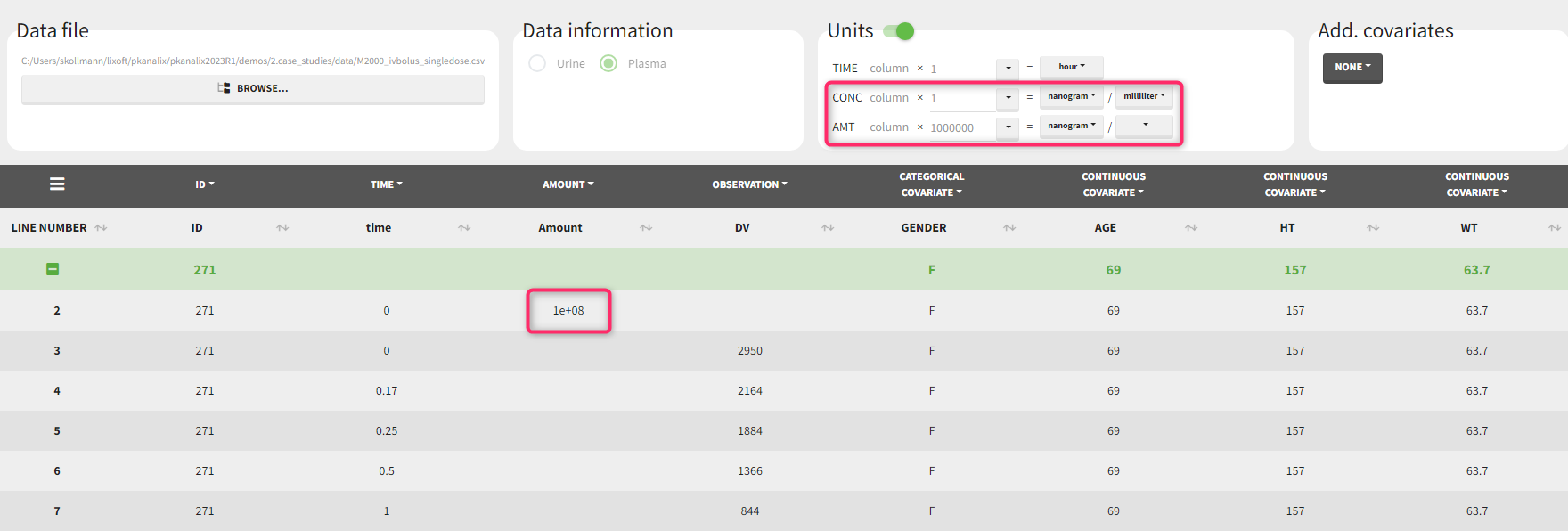

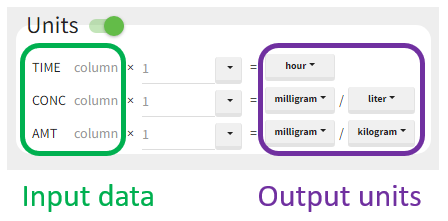

The “Units” block is used to define the preferred units for the output NCA parameters (purple frame below), which are related to the units of the data set columns (green frame below) via scaling factors (in the middle). The output units are displayed in results and plots after running the NCA and CA tasks.

Since PKanalix version 2023R1, the amount can also be specified as mass of the administered dose per body mass or body surface area. This eliminates the need to convert the units of NCA or CA parameters by post-processing.

Concentration is a quantity defined as “amount per volume”. It has two separate units, which are linked (and equal) to those of AMT and VOLUME respectively. Changing the amount unit of CONC will automatically update the amount unit of AMT. This constraint makes it possible to simplify the units of the output parameters, for instance to have Cmax_D as [1/mL] and not [mg/mL/mg].

Units without data conversion: Output units correspond to units of the input data.

In other words, desired units of the NCA and CA parameters correspond to units of measurements in the dataset. In this case, select from the drop-down menus units of the input data and keep the default scaling factor (equal to one). All calculations are performed using values in the original dataset and selected units are displayed in the results tables and on plots.

Units conversion: output units are different from the units of the input data.

NCA and CA parameters can be calculated and displayed in any units, not necessarily the same as used in the dataset. The scaling factors (by default equal to 1) multiply the corresponding columns in a dataset and transform them to a dataset in new units. PKanalix shows data with new, scaled values after having clicked on the “accept” button. This conversion occurs only internally and the original dataset file remains unchanged. So, in this case, select desired output units from the list and, knowing units of the input data, scale the original values to the new units.

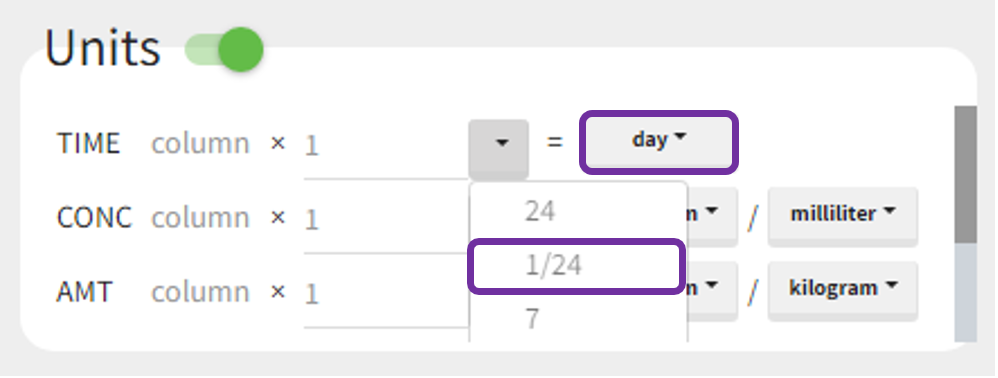

Example 1: input time units in [hours] and desired output units in [days]

For instance, let measurement times in a dataset be in hours. To obtain the outputs in days, set the output time unit as “days” and the scaling factor to 1/24, as shown below. It reads as follows:

(values of time in hours from the dataset) * (1/24) = time in days.

After the scaling is accepted, the dataset shown in the Data tab is converted internally to a new data set, which contains the original values multiplied by the scaling factors. All computations in the NCA and CA tasks are then performed using the new values, so that the results correspond to the selected units.
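The arithmetic of Example 1 can be reproduced with a few lines of Python. This is only a sketch of the scaling rule (column value multiplied by the scaling factor); the time values and the helper name `scale` are made up for illustration.

```python
TIME_FACTOR = 1 / 24  # scaling factor converting hours to days


def scale(values, factor):
    """Multiply every value of a column by its scaling factor."""
    return [value * factor for value in values]


times_h = [0, 6, 12, 24, 48]            # made-up measurement times in hours
times_d = scale(times_h, TIME_FACTOR)   # the same times expressed in days

# A parameter in 1/time scales the other way round: a rate of 0.1 per hour
# corresponds to 0.1 / TIME_FACTOR per day.
rate_per_day = 0.1 / TIME_FACTOR
```

Here 12 hours becomes 0.5 days, and a rate of 0.1 per hour becomes about 2.4 per day.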

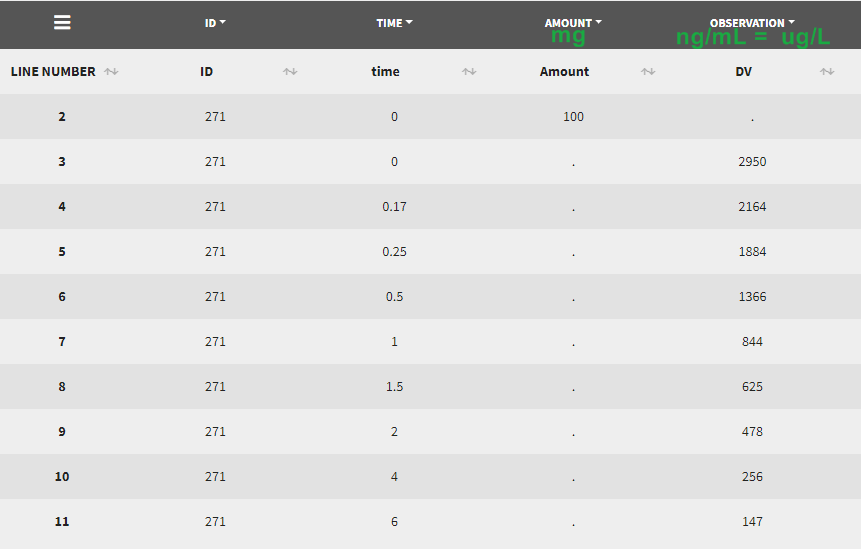

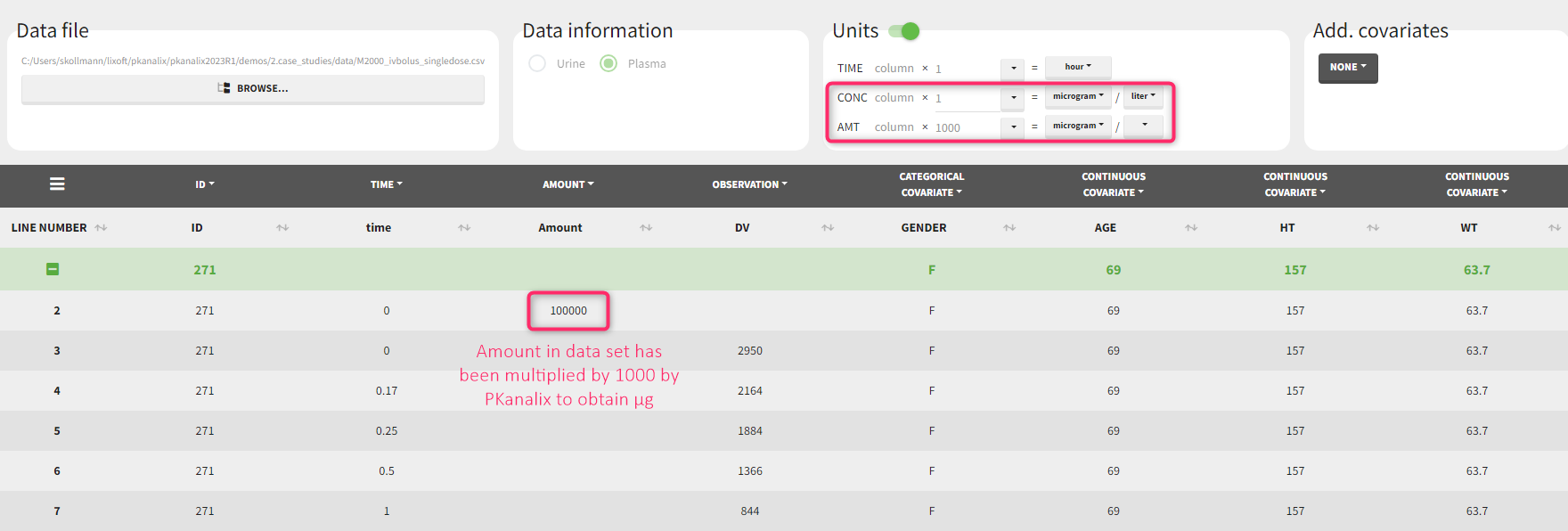

Example 2: input data set concentration units in [ng/mL] and amount in [mg]

Let’s assume that we have a data set where the concentration units are [ng/mL]=[µg/L] and the dose amount units are [mg], as presented above. It is not possible to indicate these units directly, as the amount unit of CONC and AMT must be the same. One option would be to indicate the CONC as [mg/kL] and the AMT as [mg] but having the volume unit as [kL] is not very convenient. We will thus use the scaling factors to harmonize the units of the concentration and the amount.

If we would like to have the output NCA parameters using [µg] and [L], we can define the CONC units as [µg/L] (as the data set input units) and scale the AMT to convert the amount column from [mg] to [µg] with a scaling factor of 1000. After clicking “accept” on the bottom right, the values in the amount column of the (internal) data set have been multiplied by 1000 by PKanalix such that they are now in [µg].

If we would like to have the output NCA parameters using [ng] and [mL], we can define the CONC units as [ng/mL] (as the data set input units) and scale the AMT to convert the amount column from [mg] to [ng] unit with a scaling factor of 1000000.
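The scaling factors of Example 2 follow directly from the metric prefixes, as the short Python sketch below shows. The helper `amount_factor` and the dose values are hypothetical and only serve to check the factors 1000 and 1000000 quoted above.

```python
# Power of ten of each mass unit relative to the gram.
EXPONENT = {"ng": -9, "ug": -6, "mg": -3, "g": 0}


def amount_factor(from_unit, to_unit):
    """Scaling factor that converts an amount from `from_unit` to `to_unit`."""
    return 10 ** (EXPONENT[from_unit] - EXPONENT[to_unit])


amt_mg = [400, 500, 600]                                   # made-up doses in mg
amt_ug = [a * amount_factor("mg", "ug") for a in amt_mg]   # doses in µg
amt_ng = [a * amount_factor("mg", "ng") for a in amt_mg]   # doses in ng
```

With the amount in [µg] and the concentration in [µg/L], a ratio such as Dose/AUC is directly expressed in [L] per time unit, without any [kL].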

Units for the NCA parameters

| Rsq | no unit |

| Rsq_adjusted | no unit |

| Corr_XY | no unit |

| No_points_lambda_z | no unit |

| Lambda_z | time-1 |

| Lambda_z_lower | time |

| Lambda_z_upper | time |

| HL_lambda_z | time |

| Span | no unit |

| Lambda_z_intercept | no unit |

| T0 | time |

| Tlag | time |

| Tmax_Rate | time |

| Max_Rate | amount.time-1 |

| Mid_Pt_last | time |

| Rate_last | amount.time-1 |

| Rate_last_pred | amount.time-1 |

| AURC_last | amount |

| AURC_last_D | grading |

| Vol_UR | volume |

| Amount_recovered | amount |

| Percent_recovered | % [not calculable when grading -> set as NaN] |

| AURC_all | amount |

| AURC_INF_obs | amount |

| AURC_PerCentExtrap_obs | % |

| AURC_INF_pred | amount |

| AURC_PerCentExtrap_pred | % |

| C0 | amount.volume-1 |

| Tmin | time |

| Cmin | amount.volume-1 |

| Tmax | time |

| Cmax | amount.volume-1 |

| Cmax_D | grading.volume-1 |

| Tlast | time |

| Clast | amount.volume-1 |

| AUClast | time.amount.volume-1 |

| AUClast_D | grading.time.volume-1 |

| AUMClast | time2.amount.volume-1 |

| AUCall | time.amount.volume-1 |

| AUCINF_obs | time.amount.volume-1 |

| AUCINF_D_obs | grading.time.volume-1 |

| AUCINF_pred | time.amount.volume-1 |

| AUCINF_D_pred | grading.time.volume-1 |

| AUC_PerCentExtrap_obs | % |

| AUC_PerCentBack_Ext_obs | % |

| AUMCINF_obs | time2.amount.volume-1 |

| AUMC_PerCentExtrap_obs | % |

| Vz_F_obs | volume.grading-1 |

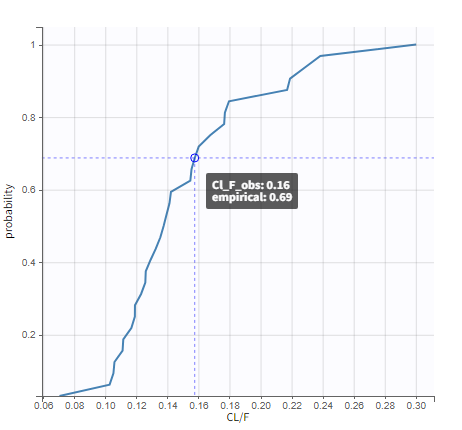

| Cl_F_obs | volume.time-1.grading-1 |

| Cl_obs | volume.time-1.grading-1 |

| Cl_pred | volume.time-1.grading-1 |

| Vss_obs | volume.grading-1 |

| Clast_pred | amount.volume-1 |

| AUC_PerCentExtrap_pred | % |

| AUC_PerCentBack_Ext_pred | % |

| AUMCINF_pred | time2.amount.volume-1 |

| AUMC_PerCentExtrap_pred | % |

| Vz_F_pred | volume.grading-1 |

| Cl_F_pred | volume.time-1.grading-1 |

| Vss_pred | volume.grading-1 |

| Tau | time |

| Ctau | amount.volume-1 |

| Ctrough | amount.volume-1 |

| AUC_TAU | time.amount.volume-1 |

| AUC_TAU_D | grading.time.volume-1 |

| AUC_TAU_PerCentExtrap | % |

| AUMC_TAU | time2.amount.volume-1 |

| Vz | volume.grading-1 |

| Vz_obs | volume.grading-1 |

| Vz_pred | volume.grading-1 |

| Vz_F | volume.grading-1 |

| CLss_F | volume.time-1.grading-1 |

| CLss | volume.time-1.grading-1 |

| CAvg | amount.volume-1 |

| FluctuationPerCent | % |

| FluctuationPerCent_Tau | % |

| Accumulation_index | no unit |

| Swing | no unit |

| Swing_Tau | no unit |

| Dose | amount.grading-1 |

| N_Samples | no unit |

| MRTlast | time |

| MRTINF_obs | time |

| MRTINF_pred | time |

| AUC_lower_upper | time.amount/volume |

| AUC_lower_upper_D | grading.time.volume-1 |

| CAVG_lower_upper | amount/volume |

| AURC_lower_upper | amount |

| AURC_lower_upper_D | grading |

Units for the PK parameters

Units are available only in PKanalix. When exporting a project to Monolix, values of PK parameters are re-converted to the original dataset unit. Below, volumeFactor is defined implicitly as volumeFactor = amtFactor/concFactor, where each “factor” is the scaling factor used in the “Units” block in the Data tab.

| PARAMETER | UNIT | INVERSE of UNITS (re-conversion to dataset units) |
|---|---|---|
| V (V1, V2, V3) | volume.grading⁻¹ | value/volumeFactor |
| k (ka, k12, k21, k31, k13, Ktr) | time⁻¹ | value*timeFactor |
| Cl, Q (Q2, Q3) | volume.time⁻¹.grading⁻¹ | value*timeFactor/volumeFactor |
| Vm | amount.time⁻¹.grading⁻¹ | value*timeFactor/amtFactor |
| Km | concentration | value/concFactor |
| T (Tk0, Tlag, Mtt) | time | value/timeFactor |
| alpha, beta, gamma | time⁻¹ | value*timeFactor |
| A, B, C | grading.volume⁻¹ | value*volumeFactor |
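The re-conversion formulas of the table can be written as a small Python function. This is a sketch that transcribes the table, not the code used by PKanalix: the function name `to_dataset_units` and its argument names are hypothetical, and only the parameter names listed in the table are recognized.

```python
def to_dataset_units(name, value, time_factor=1.0, amt_factor=1.0, conc_factor=1.0):
    """Convert a CA parameter value back to the units of the original dataset."""
    # volumeFactor is defined implicitly from the amount and concentration factors
    volume_factor = amt_factor / conc_factor
    if name in {"V", "V1", "V2", "V3"}:
        return value / volume_factor
    if name in {"k", "ka", "k12", "k21", "k31", "k13", "Ktr", "alpha", "beta", "gamma"}:
        return value * time_factor
    if name in {"Cl", "Q", "Q2", "Q3"}:
        return value * time_factor / volume_factor
    if name == "Vm":
        return value * time_factor / amt_factor
    if name == "Km":
        return value / conc_factor
    if name in {"Tk0", "Tlag", "Mtt"}:
        return value / time_factor
    if name in {"A", "B", "C"}:
        return value * volume_factor
    raise ValueError(f"unknown parameter: {name}")
```

For instance, with an amount scaled from [mg] to [µg] (factor 1000) and an unscaled concentration in [µg/L], a volume of 50 [L] is re-converted to `to_dataset_units("V", 50.0, amt_factor=1000)`, that is 0.05 in the dataset unit [mg/(µg/L)] = [kL].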

Units display

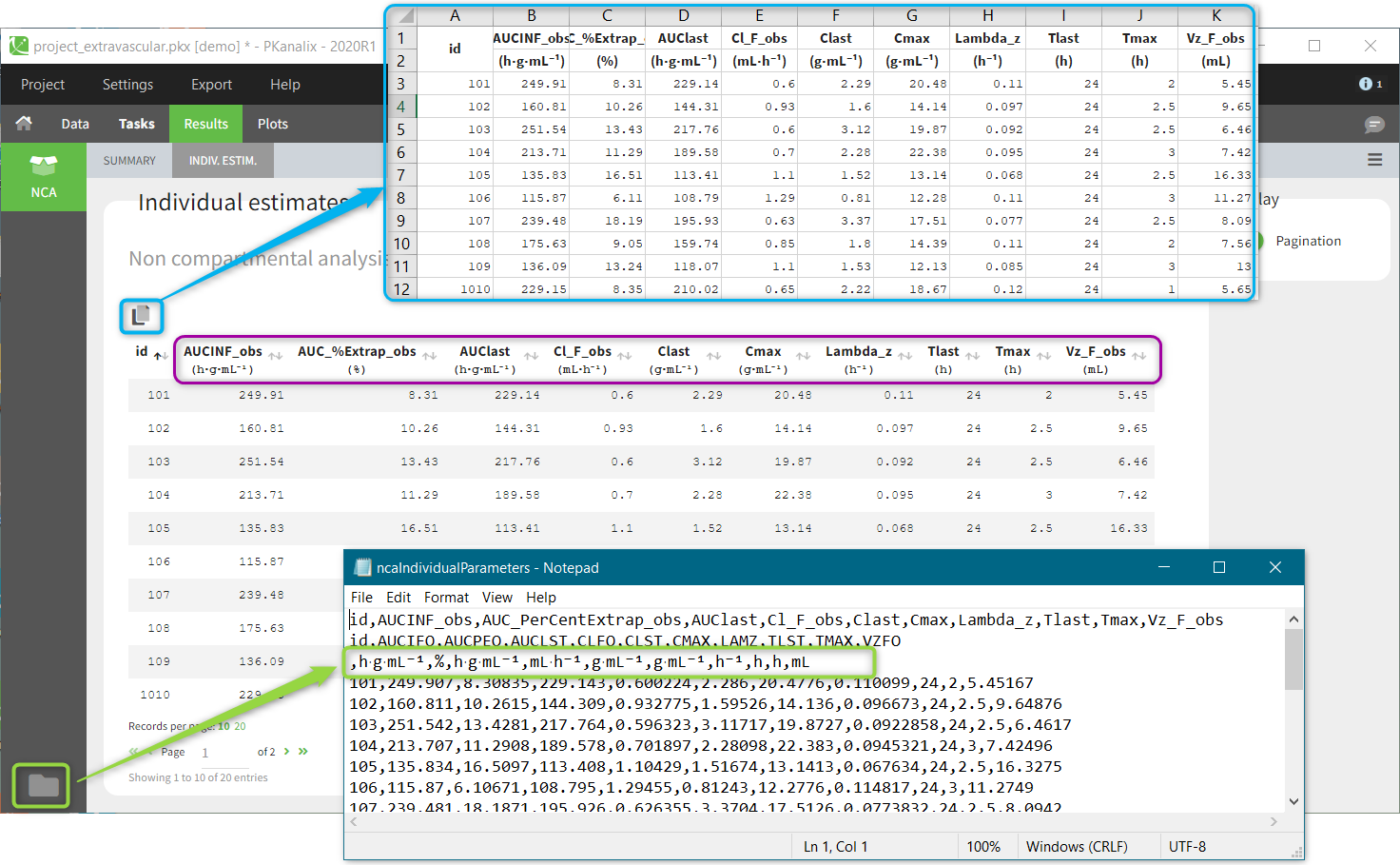

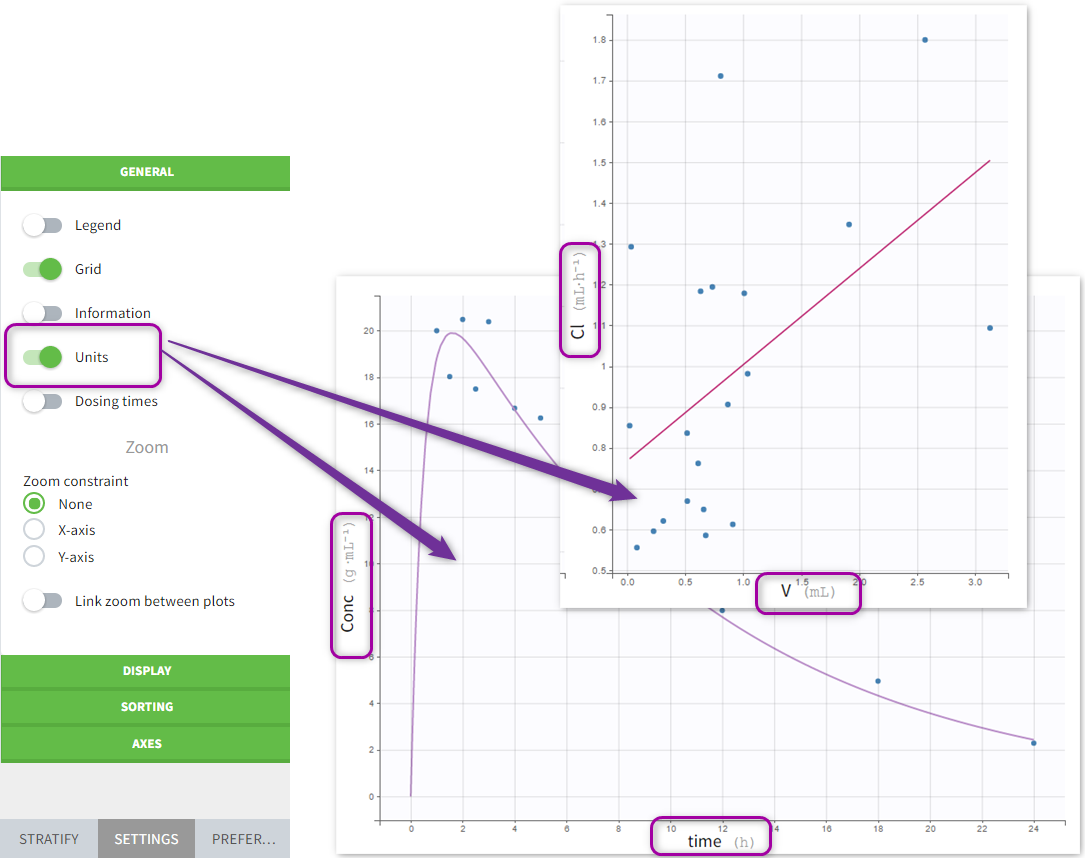

To visualise units, switch on the “units toggle” and accept a dataset. Then, after running the NCA and CA tasks, units are displayed:

- In the results table next to the parameter name (violet frame), in the table copied with the “copy” button (blue frame) and in the saved .txt files in the result folder (green frame).

- On plots if the “units” display is switched on in the General plots settings

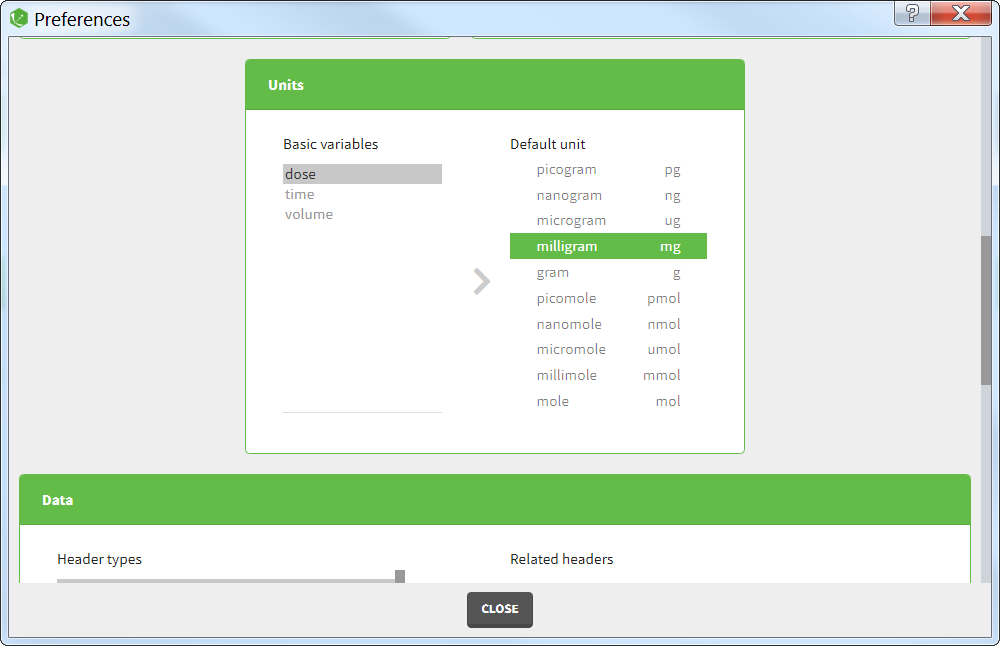

Units preferences

The units selected by default when starting a new PKanalix project can be chosen in the menu Settings > Preferences.

2.5.Filtering a data set

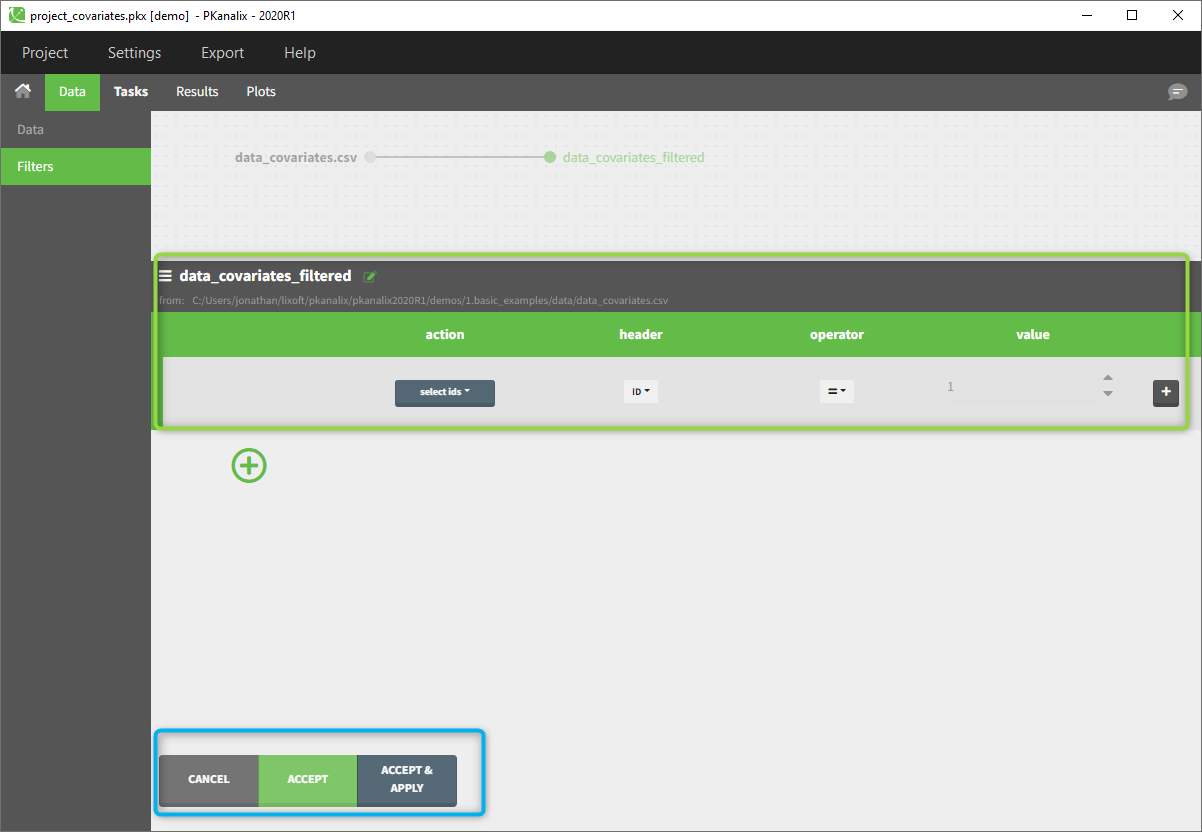

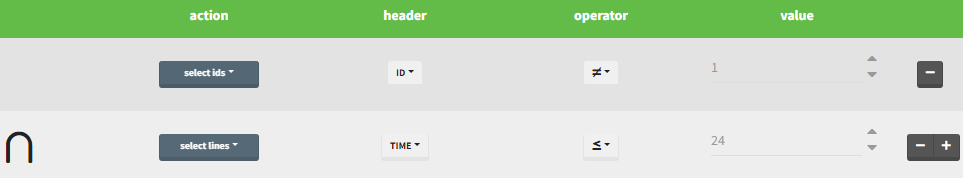

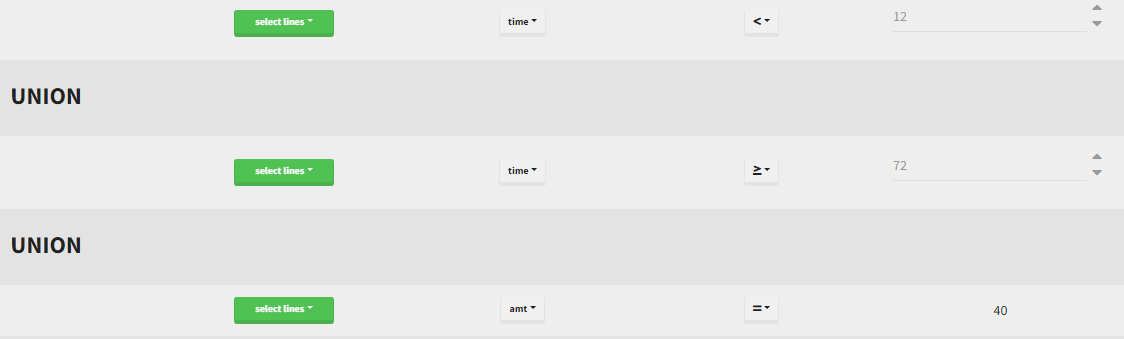

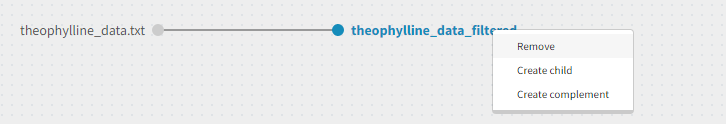

Starting with the 2020 version, a data set can be filtered so that only a subpart of it is taken into account in the analysis. Filters can be defined on specific IDs, times, measurement values, etc. It is also possible to define complementary filters and filters of filters. Filtering is accessible through the filters item on the Data tab.

- Creation of a filter

- Filtering actions

- Filters with several actions

- Other filters: filter of filter and complementary filters

Creation of a filter

To create a filter, click on the data set name. You can then create a “child”, which corresponds to a subpart of the data set on which you define your filtering actions.

The action being defined is shown at the top (in the green rectangle), and you can CANCEL, ACCEPT, or ACCEPT & APPLY it with the buttons at the bottom.

Filtering a data set: actions

In all the filtering actions, you need to define

- An action: it corresponds to one of the following possibilities: select ids, remove ids, select lines, remove lines.

- A header: it corresponds to the column of the data set you wish to have an action on. Notice that it corresponds to a column of the data set that was tagged with a header.